As the value of large pre-trained AI models becomes increasingly apparent, the models themselves keep growing in scale. Industry and academia have reached a consensus: in the AI era, computing power is productivity.

This understanding is correct but incomplete. Digital systems rest on three pillars: storage, computing, and networking, and AI technology is no different. If computing power is discussed in isolation from storage and networking, large models cannot stand on their own. Network infrastructure suited to large models, in particular, has not received the attention it deserves.

For large AI models that routinely call for "training on tens of thousands of cards", "deployment across tens of thousands of miles" and "trillions of parameters", network transport capacity is a link in the intelligent system that cannot be ignored. The challenges it faces are pronounced, and the industry is waiting for an answer that breaks the deadlock.

Wang Lei, President of Huawei Data Communication Product Line

On September 20, during Huawei Connect 2023, a data communication summit themed "Galaxy AI Network, Accelerating Industry Intelligence" was held. Representatives from a range of industries discussed the transformation and development trends of AI network technology. At the summit, Wang Lei, President of Huawei's Data Communication Product Line, officially launched the Galaxy AI Network solution. He said that large models make AI smarter, but training a large model is very costly, and the cost of AI talent must also be considered. Therefore, in the intelligentization stage of industry, AI can truly reach thousands of industries only by concentrating resources to build large computing clusters and offering intelligent computing cloud services to society. Aimed at the intelligent era, the new generation of the Galaxy AI Network solution builds a new network infrastructure with ultra-high throughput, long-term stability and reliability, elasticity and high concurrency, to help AI benefit everyone and accelerate industry intelligence.

This launch is a good opportunity to look at the network challenges that the rise of large models brings to intelligent computing data centers, and at why Huawei presents Galaxy AI Network as the optimal solution to these problems.

In the AI era, each model, each piece of data, and each computing unit can be seen as a point of starlight. Only when they are connected efficiently and stably can a brilliant intelligent world take shape.

The explosion of large models triggered a hidden network torrent

The life cycle of an AI model has two stages: training and inference deployment. With the rise of pre-trained large models, major network challenges have emerged in both stages.

The first is the training stage. As model scale and parameter counts keep growing, large model training has come to require computing clusters of thousands or even tens of thousands of cards. This also means that large model training has to take place in data centers equipped with AI computing power.

At present, intelligent computing data centers are very expensive. According to industry data, building a cluster with 100P of computing power costs 400 million yuan. For one well-known international large model, daily computing expenditure during training reaches US$700,000.

If the data center network does not connect the cluster smoothly, and a large share of computing resources is wasted waiting on network transmission, the losses to the data center and to the AI models are hard to measure. Conversely, if cluster training becomes more efficient at the same scale of computing power, data centers gain a major business opportunity. Network factors such as the load rate directly determine the training efficiency of an AI model. At the same time, as AI computing clusters grow, their complexity grows with them, and so does the probability of failure. Building a cluster network that stays stable and reliable over the long term is an important lever for data centers to improve their return on investment.

Outside the data center, the value of the AI network also shows in the inference deployment of AI models. Inference deployment of large models relies mainly on cloud services, and cloud service providers must serve as many customers as possible with limited computing resources in order to maximize the commercial value of large models. As a result, the more users there are, the more complex the overall cloud network becomes. Providing stable network services over the long term has become a new challenge for cloud service providers.

In addition, in the last mile of AI inference deployment, government and enterprise users need better network quality. In real scenarios, 1% packet loss on a link can cut TCP performance by a factor of 50, which means that a 100 Mbps broadband line delivers less than 2 Mbps in practice. Therefore, only by improving the network capabilities of the application scenario itself can we keep AI computing power flowing smoothly and make AI truly inclusive.

It is clear from this that across the whole process of creating, transmitting, and applying large AI models, every link faces challenges and demands for network upgrades. The transport capacity problem of the large model era needs to be solved urgently.
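The arithmetic behind that figure can be sketched in a few lines. This is only an illustration of the numbers quoted above (a 100 Mbps line and a 50-fold drop); the function name `degraded_throughput_mbps` is hypothetical, and the sketch does not model how TCP actually reacts to loss.

```python
def degraded_throughput_mbps(nominal_mbps: float, slowdown_factor: float) -> float:
    """Effective throughput after performance drops by `slowdown_factor`."""
    if slowdown_factor <= 0:
        raise ValueError("slowdown_factor must be positive")
    return nominal_mbps / slowdown_factor


# Figures quoted in the article: a 100 Mbps line and a 50x drop at 1% packet loss.
effective = degraded_throughput_mbps(100.0, 50.0)
print(f"Upper bound on effective throughput: {effective:.1f} Mbps")
```

A 50-fold drop leaves at most 2 Mbps, which matches the "less than 2 Mbps" stated in the text once any further overhead is taken into account.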

Network breakthrough ideas for the intelligent era: from starlight to galaxy

The rise of large models has created a network problem that spans multiple links and the entire process, so it has to be addressed systematically.

Huawei has proposed a new network infrastructure for intelligent computing cloud services. It needs to support three capabilities: "high-efficiency training", "non-stop computing power" and "inclusive AI services". Together these cover every scenario for large AI models, from training to inference deployment. Rather than meeting a single need or upgrading a single technology, Huawei is pushing forward the iteration of AI networks as a whole, bringing a distinctive approach to the industry.

Specifically, the network infrastructure in the AI era needs to include the following capabilities:

First of all, the network needs to maximize the value of the AI computing cluster in the training scenario. By building a network with ultra-large-scale connection capabilities, we can achieve high efficiency in training large AI models.

Secondly, to keep AI tasks stable and sustainable, the network must be reliable over the long term, so that month-long training runs are not interrupted. It must also demarcate, locate, and recover from faults within seconds, keeping training interruptions to a minimum. This is the "non-stop computing power" capability.

Thirdly, during AI inference deployment, the network must be elastic and highly concurrent, able to orchestrate massive user flows intelligently and provide the best AI deployment experience. At the same time, it must withstand network degradation and keep AI computing power flowing smoothly between regions. This is the "inclusive AI services" capability.

Following this approach, Huawei launched the Galaxy AI Network solution. The solution integrates dispersed AI technologies and, backed by powerful computing capabilities, forms a galaxy-like network.

Galaxy AI Network offers a transport capacity answer for the large model era

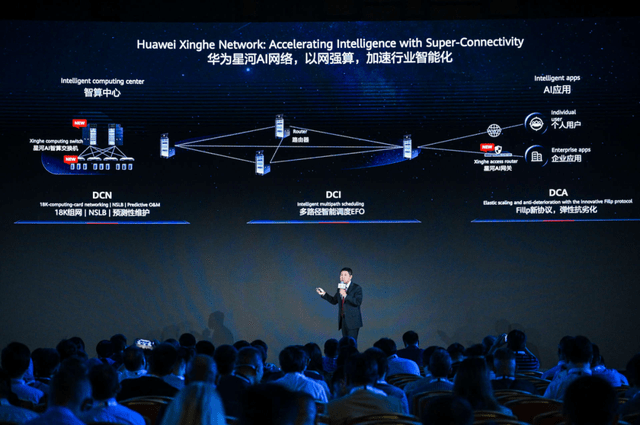

During Huawei Connect 2023, Huawei shared its vision of accelerating the creation of large AI models through large computing power, large storage capacity, and large transport capacity. The new generation of the Galaxy AI Network solution can be seen as Huawei's answer to large transport capacity in the intelligent era.

For intelligent computing data centers, Huawei Galaxy AI Network is the optimal solution for strengthening computing through the network.

Its ultra-high throughput helps AI clusters in intelligent computing centers raise the network load rate and improve training efficiency. Specifically, Galaxy AI Network intelligent computing switches offer the industry's highest-density 400GE and 800GE ports. A two-layer switching network alone is enough to build a non-blocking (convergence-free) cluster network of 18,000 cards, which supports the training of large models with more than a trillion parameters. Fewer network layers mean that the data center saves substantially on optical modules, while network risks become more predictable and large model training becomes more stable.
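To see why port density matters for a two-layer design, the sketch below works out how many endpoints a non-blocking two-tier leaf-spine fabric can attach when every switch has the same number of ports. The leaf-spine model, the function names, and the port counts are assumptions made for illustration; the article does not state the port count of the Galaxy AI Network switches or their actual topology.

```python
def two_tier_capacity(radix: int) -> int:
    """Max endpoints of a non-blocking two-tier leaf-spine fabric.

    Assumes identical switches with `radix` ports each. Every leaf uses half
    of its ports for endpoints and half for uplinks (no oversubscription),
    and every spine port connects to one leaf, so there are at most `radix`
    leaves.
    """
    return radix * (radix // 2)


def min_radix_for(endpoints: int) -> int:
    """Smallest even port count whose two-tier fabric fits `endpoints`."""
    radix = 2
    while two_tier_capacity(radix) < endpoints:
        radix += 2
    return radix


# 18,000 is the cluster size quoted in the article; the radix is derived, not quoted.
print(min_radix_for(18_000))   # smallest even radix that fits 18,000 cards
print(two_tier_capacity(192))  # capacity with a hypothetical 192-port switch
```

Under these assumptions, capacity grows with the square of the port count, which is why denser switches can remove an entire network layer, along with the optical modules that layer would need.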

Galaxy AI Network supports network-level load balancing (NSLB), which raises the load rate from 50% to 98%. This is in effect like overclocking the AI cluster: training efficiency improves by 20%, meeting the expectation of high-efficiency training.

For cloud service providers, Galaxy AI Network can provide a stable and reliable computing power guarantee.
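The gap between a roughly 50% and a near-100% load rate can be illustrated with a toy comparison between per-flow random hashing and a globally coordinated assignment. This is not Huawei's NSLB algorithm, which the article does not describe; the equal-size flows, the function names, and the "mean load divided by busiest-link load" metric are all assumptions chosen to make the effect visible.

```python
import random
from collections import Counter


def link_utilization(assignments: list[int], num_links: int) -> float:
    """Mean link load divided by the busiest link's load (1.0 = perfectly even).

    A collective operation finishes only when its slowest flow does, so the
    busiest link sets the pace and this ratio approximates usable capacity.
    """
    loads = Counter(assignments)
    busiest = max(loads.values())
    mean = len(assignments) / num_links
    return mean / busiest


def hashed_assignment(num_flows: int, num_links: int, seed: int = 0) -> list[int]:
    """Each flow picks a link independently, as per-flow hashing roughly does."""
    rng = random.Random(seed)
    return [rng.randrange(num_links) for _ in range(num_flows)]


def balanced_assignment(num_flows: int, num_links: int) -> list[int]:
    """A global view spreads equal-size flows evenly across all links."""
    return [flow % num_links for flow in range(num_flows)]


flows, links = 64, 16  # hypothetical: 64 equal-size flows over 16 uplinks
print(f"hashed:   {link_utilization(hashed_assignment(flows, links), links):.2f}")
print(f"balanced: {link_utilization(balanced_assignment(flows, links), links):.2f}")
```

With few, large flows, independent hashing tends to pile several flows onto one link while leaving others idle, whereas an assignment computed with a network-wide view keeps every link equally busy.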

In data center interconnect (DCI) scenarios, the solution provides functions such as multi-path intelligent scheduling, automatically identifying peaks in business traffic and proactively adapting to their impact. It can distinguish large flows from small ones among millions of data flows and allocate them sensibly across 100,000 paths, achieving zero network congestion and providing an elastic guarantee for high-concurrency intelligent computing cloud services.
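One common way to think about scheduling large and small flows over many paths is a greedy placement: put the large flows first on whichever path is currently least loaded, then fill in the small ones. The sketch below shows that general idea only. The threshold, the flow sizes, and the function name `schedule_flows` are hypothetical, and the article does not say which algorithm Galaxy AI Network actually uses.

```python
import heapq


def schedule_flows(flow_sizes, num_paths, elephant_threshold):
    """Place large flows first on the least-loaded path, then the small flows.

    Returns (placement, loads): placement is a list of (size, path) pairs and
    loads[i] is the total size assigned to path i.
    """
    elephants = sorted((s for s in flow_sizes if s >= elephant_threshold), reverse=True)
    mice = [s for s in flow_sizes if s < elephant_threshold]

    heap = [(0.0, path) for path in range(num_paths)]  # (current load, path index)
    heapq.heapify(heap)

    placement = []
    loads = [0.0] * num_paths
    for size in elephants + mice:
        load, path = heapq.heappop(heap)  # least-loaded path so far
        placement.append((size, path))
        loads[path] = load + size
        heapq.heappush(heap, (loads[path], path))
    return placement, loads


# Hypothetical flow sizes (arbitrary units), 3 paths, "large" means >= 50.
placement, loads = schedule_flows([100, 90, 5, 5, 3, 2, 80, 1], 3, 50)
print(loads)
```

Handling the large flows first matters because a single misplaced large flow can congest a path, while small flows are easy to slot into whatever headroom remains.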

For government and enterprise users, Galaxy AI Network can cope with network degradation and keep AI computing power inclusive.

In DCA computing scenarios, it supports elastic anti-degradation. It uses Fillp technology to optimize the TCP protocol, which raises the bandwidth load rate from 10% to 60% at a packet loss rate of 1%. This keeps computing power flowing smoothly from metropolitan areas to remote areas and accelerates the inclusive application of AI services.

In this way, the network requirements of every stage of large models, from training to deployment, are addressed. From intelligent computing centers to thousands of industries, each gains a footing for strengthening computing through the network.

The era of intelligence opened up by large models has only just begun, and Galaxy AI Network offers an answer to the question of transport capacity in that era.
