How to Easily Deploy a Local Generative Search Engine Using VerifAI

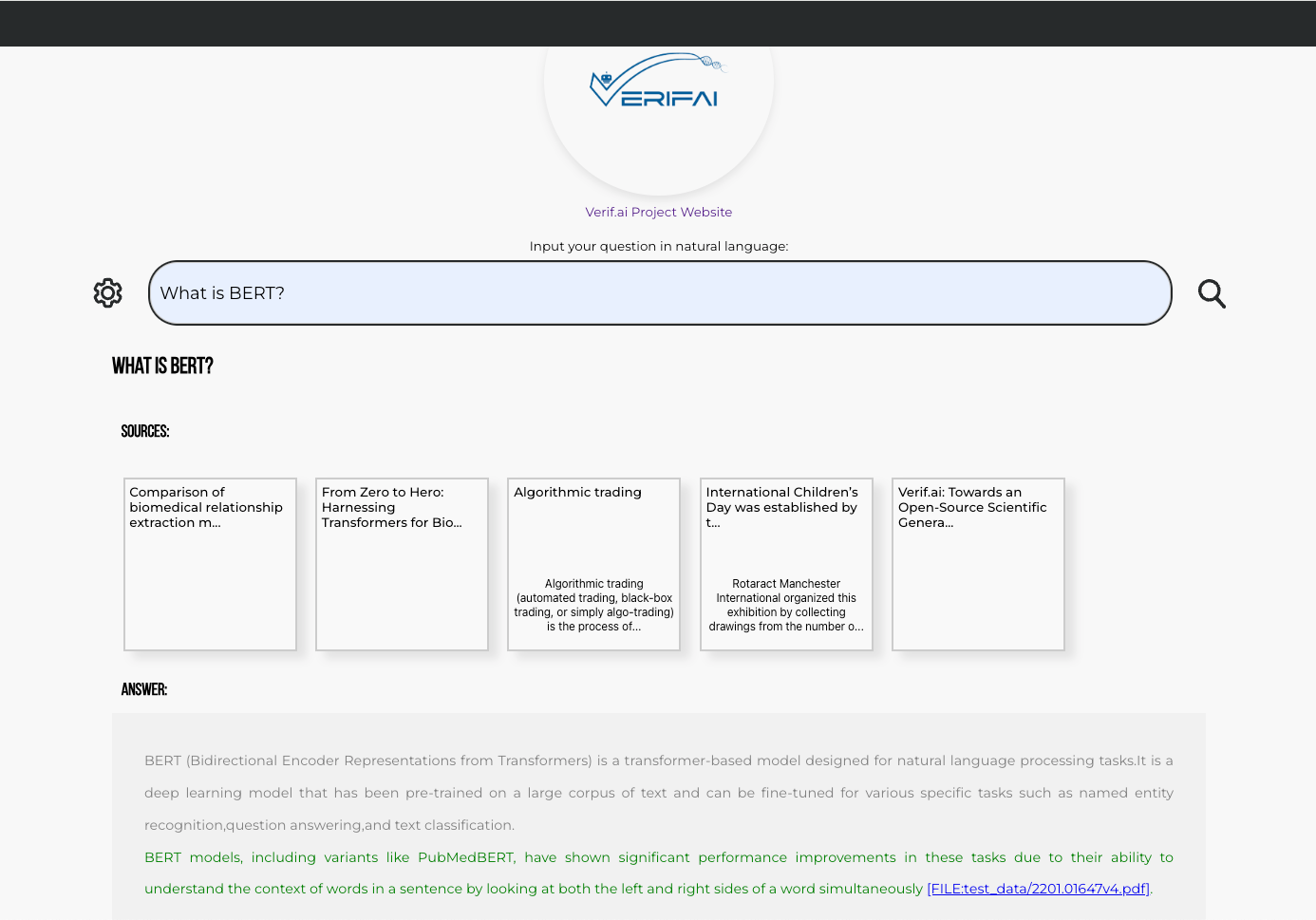

This article describes a significant update to VerifAI, an open-source generative search engine. The project previously focused on biomedical data (VerifAI BioMed, accessible at //m.sbmmt.com/link/ae8e20f2c7accb995afbe0f507856c17). It now also provides a core component, VerifAI Core, which lets users build their own generative search engine over local files. Individuals, organizations, and enterprises can use it to create custom search solutions.

Key Features and Architecture:

VerifAI Core's architecture comprises three main components:

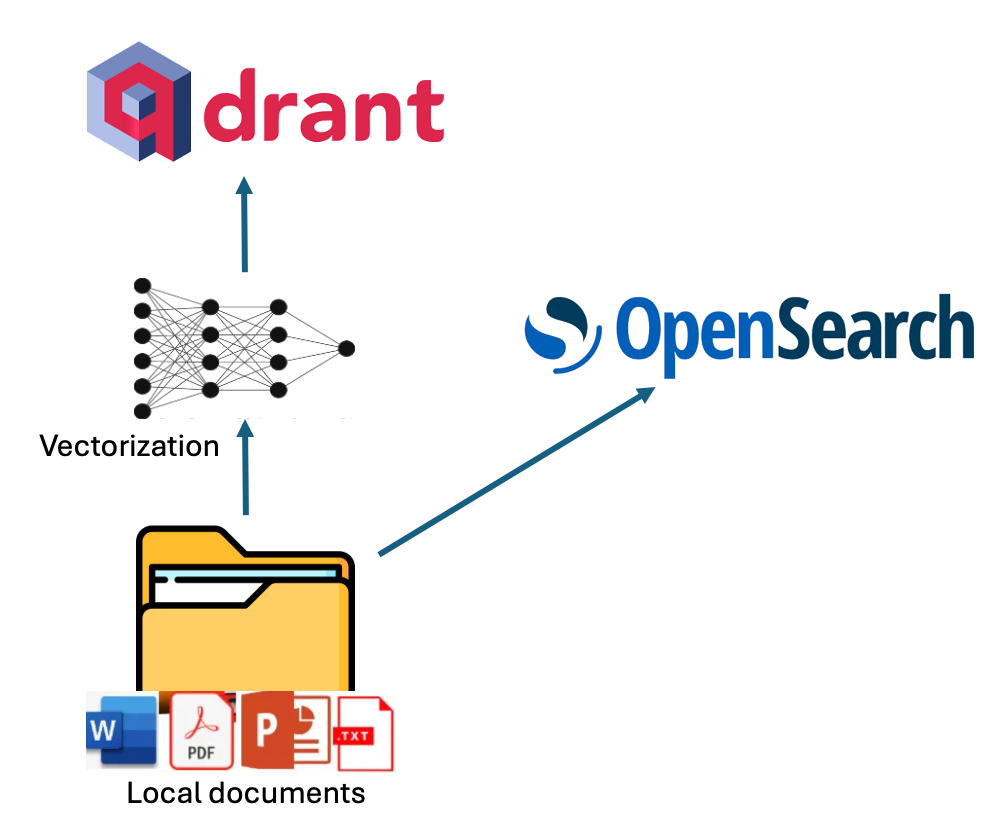

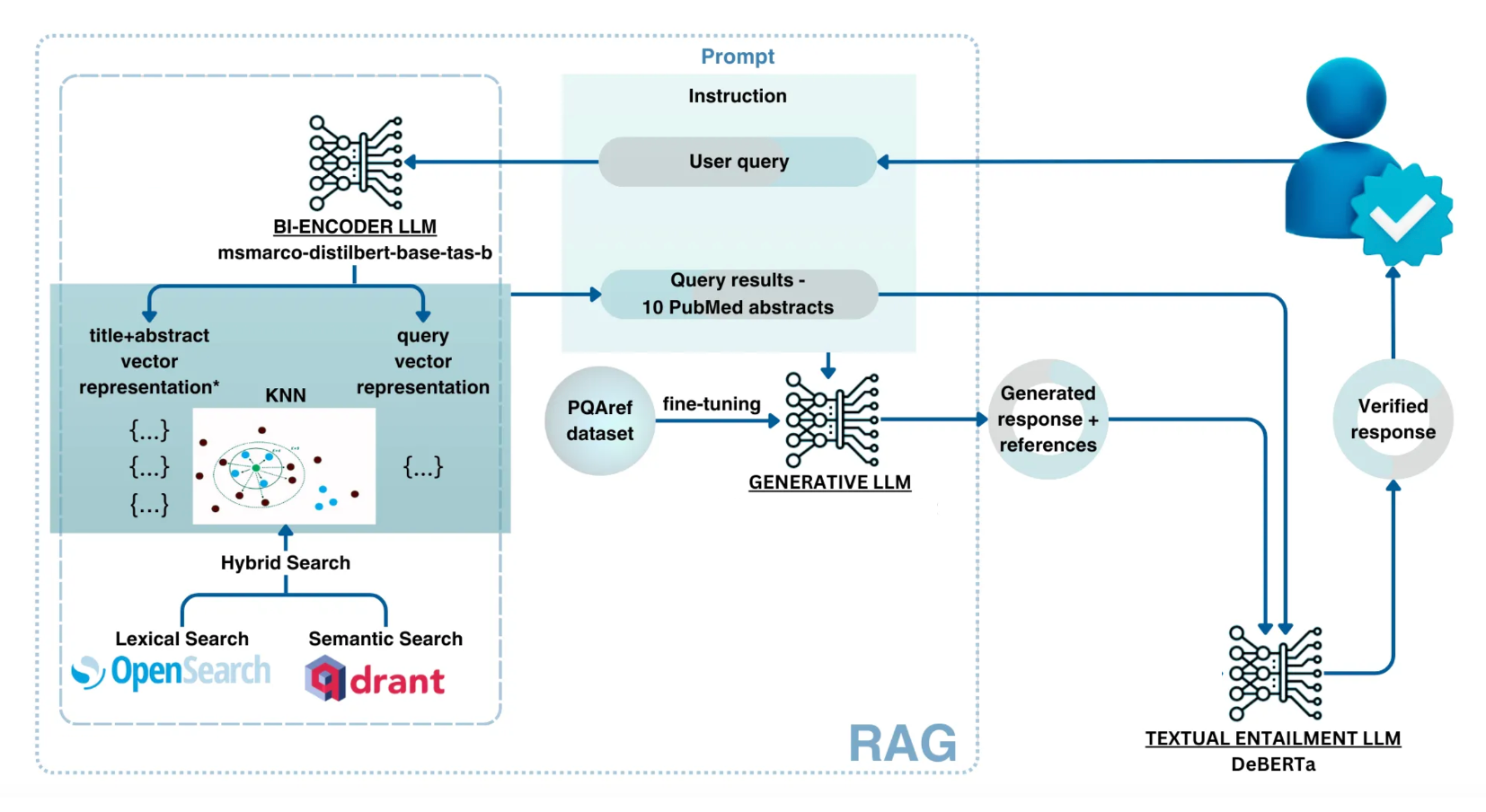

- Indexing: Utilizes OpenSearch for lexical indexing and Qdrant for semantic indexing (using Hugging Face embedding models). This dual approach ensures comprehensive document representation. The indexing script supports various file types (PDF, Word, PowerPoint, Text, Markdown).

-

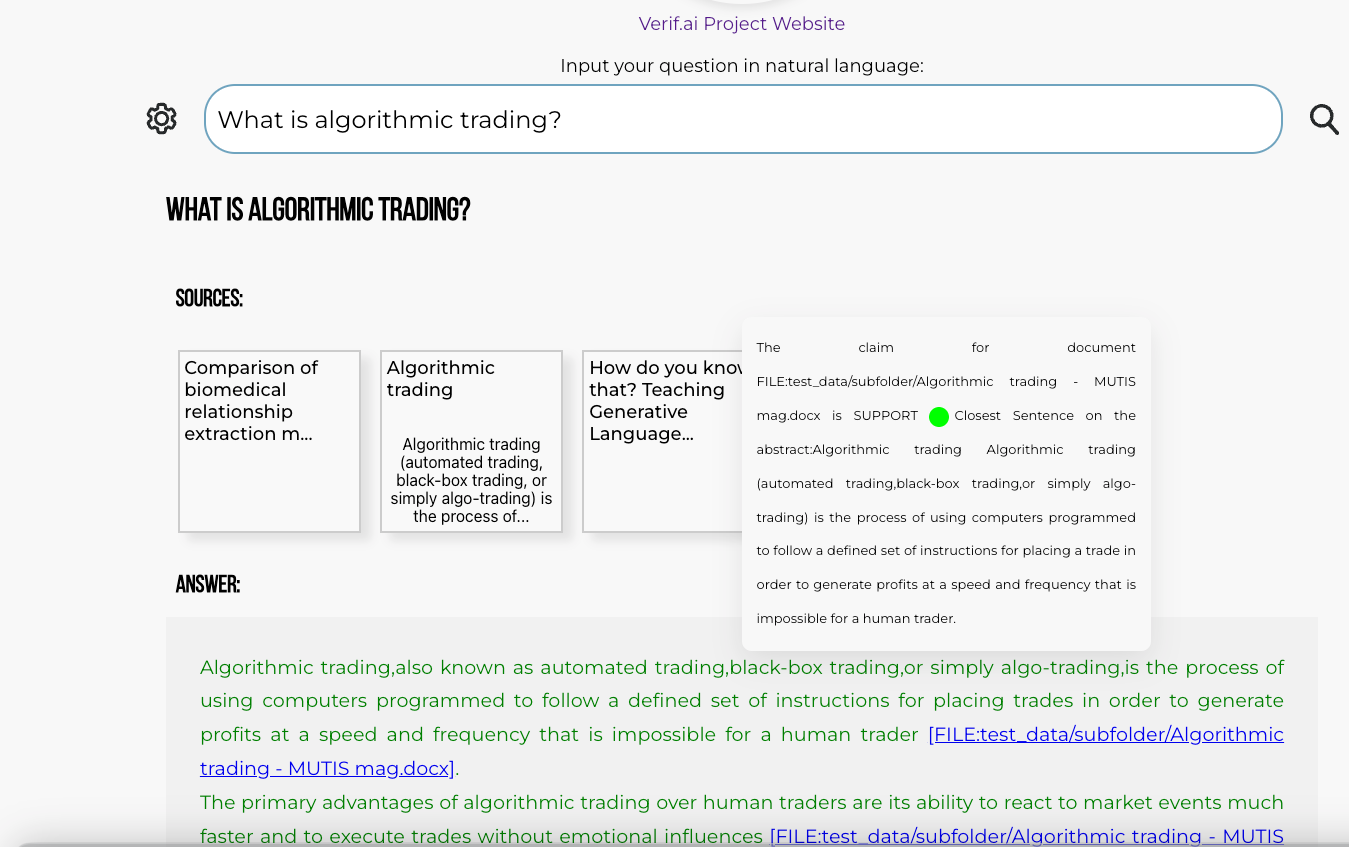

Retrieval-Augmented Generation (RAG): Combines results from OpenSearch's lexical search and Qdrant's semantic search (using dot product similarity). The merged results inform a prompt for the chosen large language model (LLM). The default LLM is a locally deployed, fine-tuned version of Mistral, but users can specify others (OpenAI API, Azure API, etc., via vLLM, OLlama, or Nvidia NIMs).

-

Verification Engine: A crucial component that checks the generated answer against the source documents, minimizing hallucinations.
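The hybrid retrieval step described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not VerifAI's code: a simple count of shared query terms stands in for OpenSearch's lexical (BM25) ranking, hand-written three-dimensional vectors stand in for Hugging Face embeddings, and the min-max-normalized weighted sum used to merge the two lists (with a hypothetical `alpha` weight) is an assumed merging rule. All function and variable names are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical toy corpus standing in for indexed local files.
DOCS: Dict[str, str] = {
    "doc1": "Qdrant stores dense vectors for semantic search",
    "doc2": "OpenSearch provides lexical keyword search",
    "doc3": "Mistral is a large language model",
}

# Hand-written 3-d vectors standing in for Hugging Face embeddings (assumption).
EMBEDDINGS: Dict[str, List[float]] = {
    "doc1": [0.9, 0.1, 0.0],
    "doc2": [0.2, 0.8, 0.1],
    "doc3": [0.0, 0.1, 0.9],
}


def dot(u: List[float], v: List[float]) -> float:
    """Dot-product similarity between two vectors."""
    return sum(a * b for a, b in zip(u, v))


def lexical_scores(query: str) -> Dict[str, float]:
    """Stand-in for OpenSearch: number of distinct query terms in each document."""
    terms = set(query.lower().split())
    return {d: float(len(terms & set(t.lower().split()))) for d, t in DOCS.items()}


def semantic_scores(query_vec: List[float]) -> Dict[str, float]:
    """Stand-in for Qdrant: dot product of the query vector with each document."""
    return {d: dot(query_vec, v) for d, v in EMBEDDINGS.items()}


def normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Min-max scale scores to [0, 1] so the two result lists are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {d: 0.0 for d in scores}
    return {d: (s - lo) / (hi - lo) for d, s in scores.items()}


def hybrid_search(query: str, query_vec: List[float], k: int = 2,
                  alpha: float = 0.5) -> List[Tuple[str, float]]:
    """Merge both result lists with an assumed weighted sum; return the top k."""
    lex = normalize(lexical_scores(query))
    sem = normalize(semantic_scores(query_vec))
    merged = {d: alpha * lex[d] + (1 - alpha) * sem[d] for d in DOCS}
    return sorted(merged.items(), key=lambda item: item[1], reverse=True)[:k]


def build_prompt(question: str, hits: List[Tuple[str, float]]) -> str:
    """Place the retrieved passages, tagged with their IDs, before the question."""
    context = "\n".join(f"[{d}] {DOCS[d]}" for d, _ in hits)
    return ("Answer using only the documents below and cite their IDs.\n"
            f"{context}\n\nQuestion: {question}")


query = "semantic search with vectors"
hits = hybrid_search(query, query_vec=[1.0, 0.0, 0.0])
prompt = build_prompt(query, hits)
print(prompt)
```

With the toy query, `doc1` ranks first because it scores highest on both signals. Normalizing before merging matters because raw term counts and dot products sit on different scales; in this sketch, raising `alpha` favors keyword matches and lowering it favors embedding similarity.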

Setup and Installation:

- Clone the repository: `git clone https://github.com/nikolamilosevic86/verifAI.git`
- Create a Python environment: `python -m venv verifai; source verifai/bin/activate`
- Install dependencies: `pip install -r verifAI/backend/requirements.txt`
- Configure VerifAI: fill in the `.env` file (based on `.env.local.example`) with the database credentials (PostgreSQL), OpenSearch and Qdrant settings, LLM details (path, API key, deployment name), the embedding model, and the index names.
- Install the datastores (requires Docker): `python install_datastores.py`
- Index your files: `python index_files.py <path-to-directory-with-files>` (e.g., `python index_files.py test_data`)
- Run the backend: `python main.py`
- Run the frontend: navigate to `client-gui/verifai-ui`, run `npm install`, then `npm start`.
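Before running `index_files.py`, it can help to check which files in a directory match the types the indexer is said to support. The sketch below assumes that the extensions `.pdf`, `.docx`, `.pptx`, `.txt`, and `.md` correspond to those types and that subdirectories should be scanned; both are assumptions, and the helper names `file_kind` and `preview_indexable` are hypothetical, so the repository's own indexing script remains the authority on what actually gets indexed.

```python
from pathlib import Path
from typing import Dict, List, Optional

# Assumed extension-to-type mapping for the file types the article lists;
# the real index_files.py may accept more or different extensions.
SUPPORTED: Dict[str, str] = {
    ".pdf": "PDF",
    ".docx": "Word",
    ".pptx": "PowerPoint",
    ".txt": "Text",
    ".md": "Markdown",
}


def file_kind(name: str) -> Optional[str]:
    """Return the document type for a file name, or None if unsupported."""
    return SUPPORTED.get(Path(name).suffix.lower())


def preview_indexable(directory: str) -> Dict[str, List[str]]:
    """Group files under `directory`, searched recursively, by supported type."""
    found: Dict[str, List[str]] = {}
    for path in sorted(Path(directory).rglob("*")):
        kind = file_kind(path.name)
        if kind is not None and path.is_file():
            found.setdefault(kind, []).append(str(path))
    return found
```

For example, calling `preview_indexable("test_data")` before `python index_files.py test_data` lists the files this sketch would treat as indexable, grouped by type; the extension check is case-insensitive, so `report.PDF` counts as a PDF.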

Contribution and Future Development:

VerifAI is an open-source project welcoming contributions. The project was initially funded by the Next Generation Internet Search project (European Union) and developed in collaboration with the Institute for Artificial Intelligence Research and Development of Serbia and Bayer A.G. Further development is ongoing, with a focus on expanding its capabilities and usability. Contributions are encouraged via pull requests, bug reports, and feature requests. Visit //m.sbmmt.com/link/d16c19f1f2ab8361fda1f625ce3ff26a for more information.

The above is the detailed content of How to Easily Deploy a Local Generative Search Engine Using VerifAI. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Have you ever tried to build your own Large Language Model (LLM) application? Ever wondered how people are making their own LLM application to increase their productivity? LLM applications have proven to be useful in every aspect

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Remember the flood of open-source Chinese models that disrupted the GenAI industry earlier this year? While DeepSeek took most of the headlines, Kimi K1.5 was one of the prominent names in the list. And the model was quite cool.

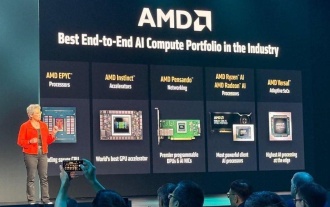

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

For example, if you ask a model a question like: “what does (X) person do at (X) company?” you may see a reasoning chain that looks something like this, assuming the system knows how to retrieve the necessary information:Locating details about the co

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

By mid-2025, the AI “arms race” is heating up, and xAI and Anthropic have both released their flagship models, Grok 4 and Claude 4. These two models are at opposite ends of the design philosophy and deployment platform, yet they