This article explores Agentic RAG, an approach that combines agentic AI's decision-making with retrieval-augmented generation (RAG), so the system decides where to look for information before it generates an answer. Unlike a model limited to its training data, an Agentic RAG system retrieves and reasons over information from multiple sources at query time. This practical guide focuses on building a LangChain-based RAG pipeline.

Agentic RAG Project: A Step-by-Step Guide

The project constructs a RAG pipeline following this architecture:

- User Query: The process begins with a user's question.

- Query Routing: The system determines if it can answer using existing knowledge. If yes, it responds directly; otherwise, it proceeds to data retrieval.

- Data Retrieval: The pipeline accesses two potential sources:

  - Local Documents: A pre-processed PDF (Generative AI Principles) serves as the knowledge base.

  - Internet Search: For broader context, the system uses external sources via web scraping.

- Context Building: Retrieved data is compiled into a coherent context.

- Answer Generation: This context is fed to a Large Language Model (LLM) to generate a concise and accurate answer.
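The control flow above can be sketched in plain Python. This is a minimal illustration rather than the article's own code: it assumes the router chooses between the local documents and web search, and the names `answer_query`, `can_answer_locally`, `retrieve_local`, `retrieve_web`, and `generate_answer` are hypothetical placeholders. They are passed in as callables so the sketch runs without any API keys.

```python
from typing import Callable


def answer_query(
    query: str,
    can_answer_locally: Callable[[str], bool],
    retrieve_local: Callable[[str], str],
    retrieve_web: Callable[[str], str],
    generate_answer: Callable[[str, str], str],
) -> str:
    """Route a query, build context, and generate an answer.

    The four callables are hypothetical stand-ins for the router, the
    vector-store lookup, the web-search agent, and the LLM call.
    """
    # Query routing: pick the data source for this question.
    if can_answer_locally(query):
        context = retrieve_local(query)
    else:
        context = retrieve_web(query)
    # Answer generation: the model receives both the context and the query.
    return generate_answer(context, query)


if __name__ == "__main__":
    # Toy stand-ins so the sketch runs end to end without external services.
    result = answer_query(
        "What is Agentic RAG?",
        can_answer_locally=lambda q: "generative ai" in q.lower(),
        retrieve_local=lambda q: "local PDF context",
        retrieve_web=lambda q: "web search context",
        generate_answer=lambda ctx, q: f"[{ctx}] answer to: {q}",
    )
    print(result)
```

Injecting the steps as callables keeps the routing logic testable on its own; in a real pipeline each callable would wrap an LLM or retrieval call.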

Setting Up the Environment

Prerequisites:

- Groq API key (Groq API Console)

- Gemini API key (Gemini API Console)

- Serper.dev API key (Serper.dev API Key)

Installation:

Install necessary Python packages:

pip install langchain langchain-community langchain-groq langchain-huggingface faiss-cpu crewai crewai-tools pypdf python-dotenv setuptools sentence-transformers

API Key Management: Store the API keys in a .env file rather than in source code, and load them at startup with python-dotenv:

import os

from dotenv import load_dotenv

# ... other imports ...

load_dotenv()

GROQ_API_KEY = os.getenv("GROQ_API_KEY")

SERPER_API_KEY = os.getenv("SERPER_API_KEY")

GEMINI = os.getenv("GEMINI")
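Because `os.getenv` returns `None` for an unset variable, a missing key would otherwise only surface later as an authentication error. One way to fail early is a small check like the sketch below. The helper name `require_env` is hypothetical and not part of the original code; it assumes the variable names used above.

```python
import os


def require_env(*names: str) -> dict[str, str]:
    """Return the named environment variables, raising if any is unset or empty."""
    missing = [name for name in names if not os.getenv(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {name: os.environ[name] for name in names}


# Example call, to be made after load_dotenv():
# keys = require_env("GROQ_API_KEY", "SERPER_API_KEY", "GEMINI")
```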

Code Overview:

The code combines LangChain components with crewai: FAISS as the vector store, PyPDFLoader for PDF loading, RecursiveCharacterTextSplitter for text chunking, HuggingFaceEmbeddings for embedding generation, ChatGroq (LangChain) and LLM (crewai) as the model wrappers, SerperDevTool for web search, and crewai's agent classes for orchestration.
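To make the chunking step concrete, here is a simplified fixed-size splitter with overlap, written in plain Python. It is not RecursiveCharacterTextSplitter, which additionally tries to break on separators such as paragraph and line boundaries; the function name `split_with_overlap` and its default sizes are assumptions chosen for illustration.

```python
def split_with_overlap(text: str, chunk_size: int = 1000, overlap: int = 50) -> list[str]:
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters, so a sentence cut at a
    chunk boundary still appears in full context in one of the chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current chunk reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


if __name__ == "__main__":
    print(split_with_overlap("abcdefghij", chunk_size=4, overlap=1))
    # prints ['abcd', 'defg', 'ghij']
```

Each chunk would then be embedded and stored in the vector database, so the chunk size bounds how much text a single retrieval hit can return.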

Two LLMs are initialized: llm (llama-3.3-70b-specdec) for general tasks and crew_llm (gemini/gemini-1.5-flash) for web scraping. A check_local_knowledge() function routes queries based on local context availability. A web scraping agent, built using crewai, retrieves and summarizes web content. A vector database is created from the PDF using FAISS. Finally, generate_final_answer() combines context and query to produce the final response.
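The routing and answer-generation functions might look roughly like the sketch below. The article does not show their bodies, so the signatures, prompt wording, and the `TextLLM` type are assumptions; the model is represented as a plain callable (prompt in, text out) so the example runs without API access.

```python
from typing import Callable

# Hypothetical model interface: takes a prompt, returns the completion text.
TextLLM = Callable[[str], str]

ROUTER_PROMPT = (
    "Decide whether the question can be answered from the context.\n"
    "Reply with exactly 'Yes' or 'No'.\n\n"
    "Context:\n{context}\n\nQuestion: {query}"
)


def check_local_knowledge(query: str, context: str, llm: TextLLM) -> bool:
    """Return True if the model judges the local context sufficient."""
    reply = llm(ROUTER_PROMPT.format(context=context, query=query))
    return reply.strip().lower().startswith("yes")


def generate_final_answer(context: str, query: str, llm: TextLLM) -> str:
    """Combine the retrieved context and the query into one prompt."""
    prompt = (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )
    return llm(prompt)


if __name__ == "__main__":
    # A fake model that says "No" to routing prompts and echoes otherwise.
    def fake_llm(prompt: str) -> str:
        return "No" if prompt.startswith("Decide") else "stub answer"

    print(check_local_knowledge("What is Agentic RAG?", "PDF summary", fake_llm))
    print(generate_final_answer("web context", "What is Agentic RAG?", fake_llm))
```

Asking the router for a strict Yes/No reply keeps parsing trivial; a production version would likely need to handle replies that do not follow the requested format.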

Example Usage and Output:

The main() function demonstrates querying the system. For example, the query "What is Agentic RAG?" is not covered by the local PDF, so it triggers web scraping and returns an explanation of Agentic RAG, its components, benefits, and limitations. This shows the system retrieving and synthesizing information from more than one source at query time. The detailed output is omitted here for brevity.

The above is the detailed content of ASFAFAsFasFasFasF. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Have you ever tried to build your own Large Language Model (LLM) application? Ever wondered how people are making their own LLM application to increase their productivity? LLM applications have proven to be useful in every aspect

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Remember the flood of open-source Chinese models that disrupted the GenAI industry earlier this year? While DeepSeek took most of the headlines, Kimi K1.5 was one of the prominent names in the list. And the model was quite cool.

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h

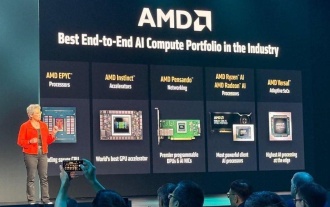

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

By mid-2025, the AI “arms race” is heating up, and xAI and Anthropic have both released their flagship models, Grok 4 and Claude 4. These two models are at opposite ends of the design philosophy and deployment platform, yet they

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

For example, if you ask a model a question like: “what does (X) person do at (X) company?” you may see a reasoning chain that looks something like this, assuming the system knows how to retrieve the necessary information:Locating details about the co