Hello, everyone.

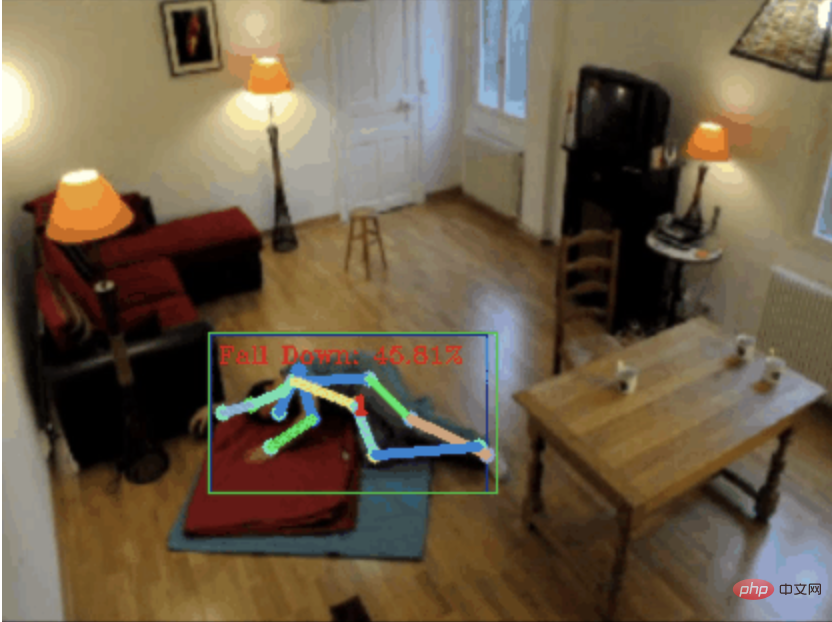

Today I would like to share a fall detection project. To be precise, it is human action recognition based on skeleton points.

It is roughly divided into three steps: human body detection, skeleton point recognition, and action classification.

The project source code has been packaged; see the end of the article for how to obtain it.

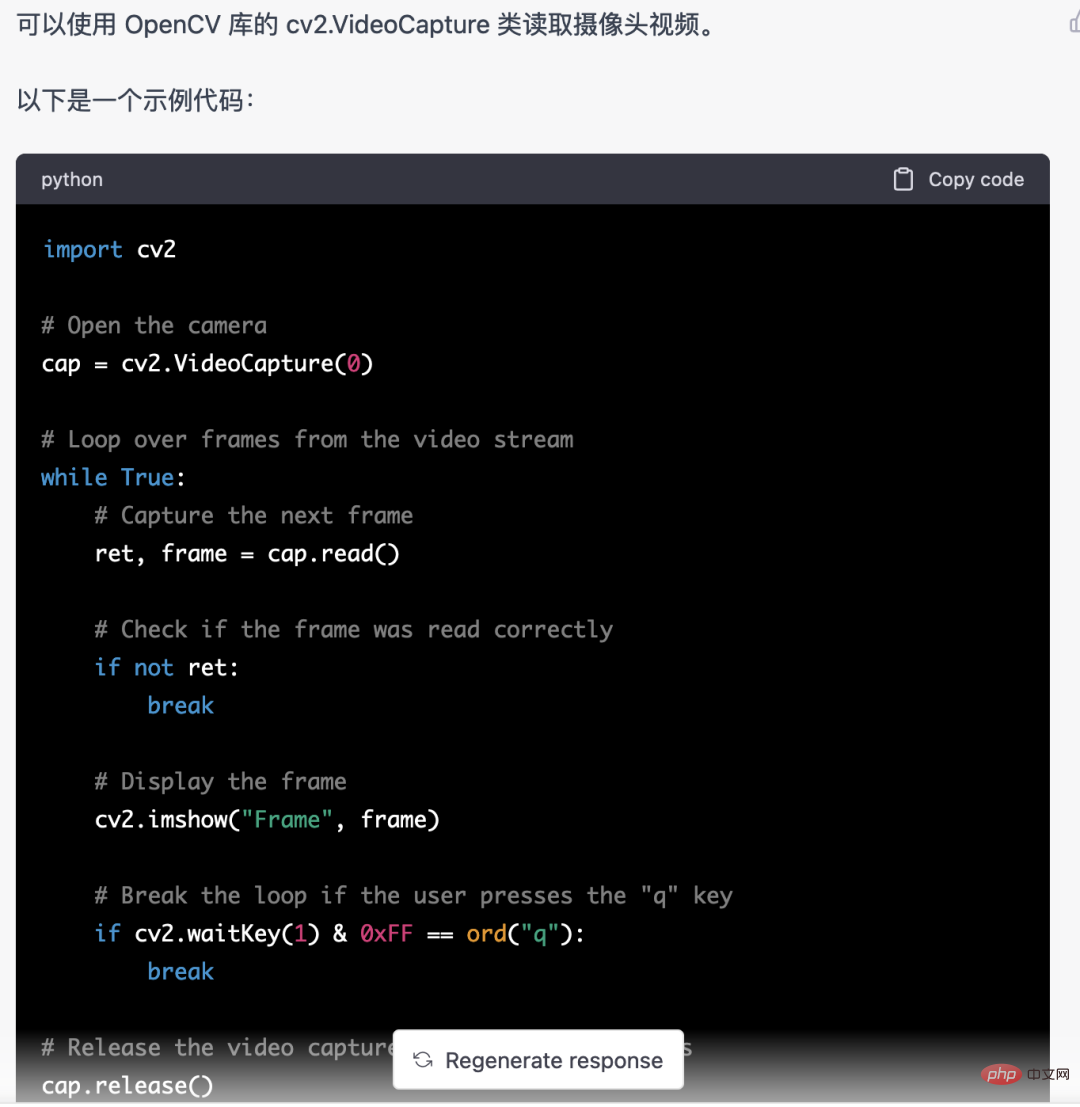

First, we need to obtain the surveillance video stream. This code is fairly boilerplate, so we can simply let ChatGPT write it.

The code ChatGPT produced for this part works and can be used as is.
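The generated snippet is not reproduced here. As a rough sketch of what such frame-reading code might look like, the helper below iterates over any capture object that behaves like `cv2.VideoCapture`. The name `iter_frames` and the `max_frames` parameter are my own illustrative choices, not part of the project.

```python
from typing import Any, Iterator, Optional


def iter_frames(capture: Any, max_frames: Optional[int] = None) -> Iterator[Any]:
    """Yield frames from a capture object until the stream ends.

    `capture` is assumed to behave like cv2.VideoCapture: read() returns
    (ok, frame) and release() frees the underlying device or stream.
    """
    count = 0
    try:
        while max_frames is None or count < max_frames:
            ok, frame = capture.read()
            if not ok:  # end of stream or a dropped connection
                break
            count += 1
            yield frame
    finally:
        capture.release()  # always free the camera or network stream


# Typical use with OpenCV (source 0 is the default webcam; a video file
# path or a stream URL can be passed instead):
#
#     import cv2
#     for frame in iter_frames(cv2.VideoCapture(0)):
#         ...  # run pose estimation on `frame`
```

Keeping the loop in a generator means the pose and action code further down only ever sees one frame at a time, and the stream is released even if processing raises an exception.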

But when it comes to business-specific tasks, such as using MediaPipe to recognize human skeleton points, the code ChatGPT gave was incorrect.

I think ChatGPT works best as a toolbox: code that is independent of business logic can be handed over to ChatGPT.

So I think future requirements for programmers will place more emphasis on the ability to abstract business logic. Without further ado, let's get back to the topic.

Human body detection can use an object detection model such as YOLOv5. We have shared many articles on training YOLOv5 models before.

Here, however, I used MediaPipe instead of YOLOv5, because MediaPipe is faster and runs smoothly on the CPU.

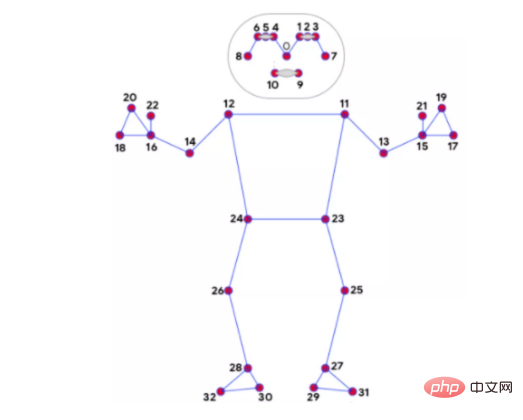

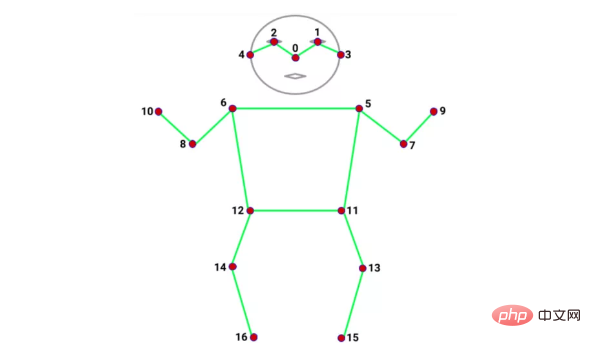

There are many models for recognizing skeleton points, such as alphapose and openpose. The number and position of skeleton points recognized by each model are different. For example, the following two types:

mediapipe 32 bone points

coco 17 bone points

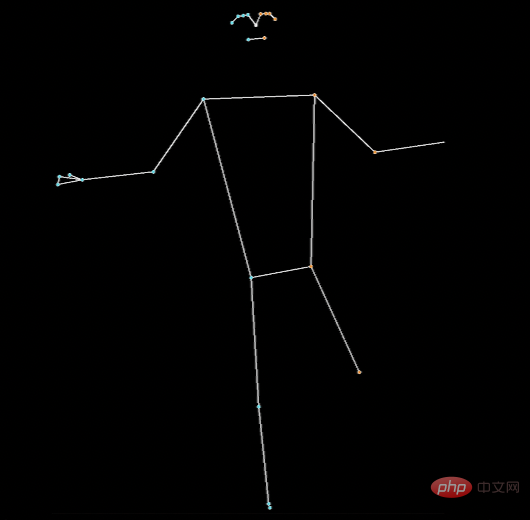

I still use mediapipe for the recognition of bone points. In addition to its fast speed, another advantage is that mediapipe recognizes many bone points, 32 of them, which can meet our needs. Because the classification of human body movements to be used below relies heavily on skeletal points.

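    import cv2
    import mediapipe as mp

    mp_pose = mp.solutions.pose
    mp_drawing = mp.solutions.drawing_utils
    mp_drawing_styles = mp.solutions.drawing_styles

    # The lines below run inside the per-frame loop; `pose` is an mp_pose.Pose() instance.
    # MediaPipe expects RGB input, while OpenCV frames are BGR.
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    # Recognize the human skeleton points
    results = pose.process(image)
    if not results.pose_landmarks:
        continue

    # Convert back to BGR and draw the skeleton on the frame
    image.flags.writeable = True
    image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)
    mp_drawing.draw_landmarks(
        image,
        results.pose_landmarks,
        mp_pose.POSE_CONNECTIONS,
        landmark_drawing_spec=mp_drawing_styles.get_default_pose_landmarks_style()
    )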

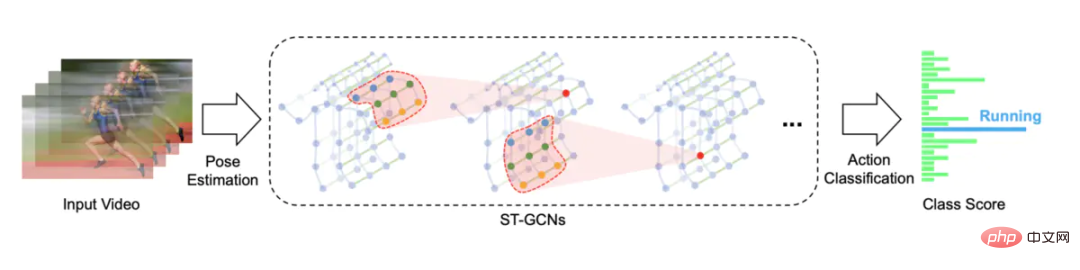

Action recognition uses a spatio-temporal graph convolutional network for skeleton-based action recognition. The open-source implementation is ST-GCN (Spatial Temporal Graph Convolutional Networks):

https://github.com/yysijie/st-gcn

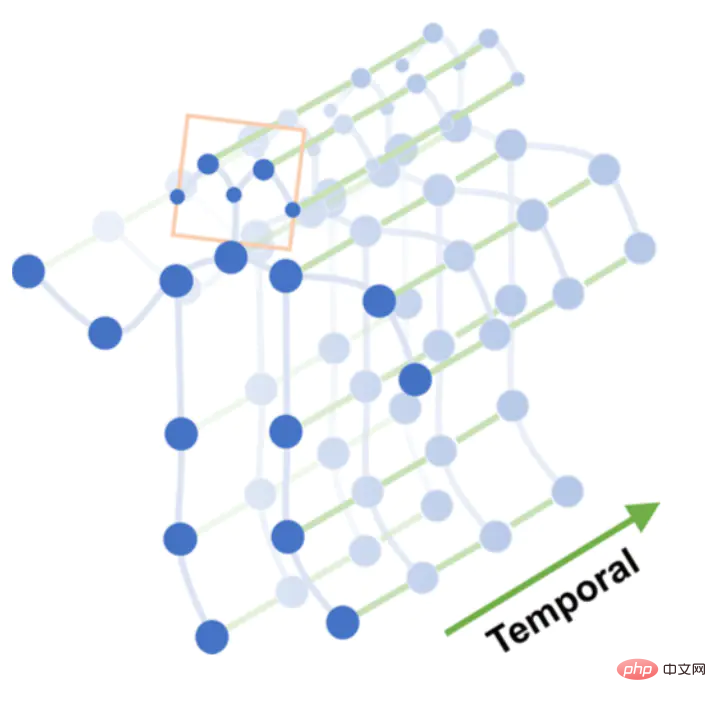

An action, such as falling, consists of N frames. For each frame, the skeleton point coordinates form a spatial graph. Connecting the same skeleton point across consecutive frames forms a temporal graph. Together, the connections between skeleton points and the connections between frames make up a space-time graph.
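To make the construction concrete, here is a minimal sketch that enumerates the two kinds of edges. The three-joint toy skeleton and the function name `build_st_edges` are made up for illustration; a real layout has many more joints.

```python
from typing import List, Tuple

Edge = Tuple[int, int]


def build_st_edges(
    num_joints: int, bones: List[Edge], num_frames: int
) -> Tuple[List[Edge], List[Edge]]:
    """Return (spatial_edges, temporal_edges) over num_frames * num_joints nodes.

    Node (t, v), i.e. joint v in frame t, gets the flat index t * num_joints + v.
    """
    spatial: List[Edge] = []
    temporal: List[Edge] = []
    for t in range(num_frames):
        offset = t * num_joints
        # Spatial edges: bones connecting joints within the same frame.
        for a, b in bones:
            spatial.append((offset + a, offset + b))
        # Temporal edges: the same joint in frame t and frame t + 1.
        if t + 1 < num_frames:
            for v in range(num_joints):
                temporal.append((offset + v, offset + num_joints + v))
    return spatial, temporal


# Toy skeleton (hypothetical): 0 = head, 1 = hip, 2 = foot.
TOY_BONES = [(0, 1), (1, 2)]
spatial_edges, temporal_edges = build_st_edges(3, TOY_BONES, num_frames=4)
print(len(spatial_edges), len(temporal_edges))  # prints: 8 9
```

With 4 frames and 2 bones there are 4 x 2 = 8 spatial edges, and the 3 frame transitions times 3 joints give 9 temporal edges.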

Space-time graph

Multiple layers of graph convolution are applied to the space-time graph to produce higher-level feature maps. These features are then fed into a softmax classifier for action classification.
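The following is a heavily simplified sketch of that pipeline in NumPy: a single spatial graph convolution with a symmetrically normalized adjacency matrix, global average pooling, and a softmax over class scores. It is not the ST-GCN implementation; the real network stacks several layers and adds temporal convolutions. The chain-shaped graph, the random weights, and all sizes (30 frames, 14 joints, 7 classes) are placeholder assumptions.

```python
import numpy as np


def normalize_adjacency(adj: np.ndarray) -> np.ndarray:
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2}."""
    a_hat = adj + np.eye(adj.shape[0])  # add self-loops
    deg_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * deg_inv_sqrt[:, None] * deg_inv_sqrt[None, :]


def graph_conv(x: np.ndarray, a_norm: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One spatial graph convolution followed by ReLU.

    x: (T, V, C_in) features, a_norm: (V, V), weight: (C_in, C_out).
    """
    # Each joint aggregates the features of its neighbours in the same frame.
    aggregated = np.einsum("uv,tvc->tuc", a_norm, x)
    return np.maximum(aggregated @ weight, 0.0)


def softmax(logits: np.ndarray) -> np.ndarray:
    shifted = logits - logits.max()  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


rng = np.random.default_rng(0)
T, V, C_IN, C_HID, NUM_CLASSES = 30, 14, 3, 8, 7  # illustrative sizes only

adj = np.zeros((V, V))
for a in range(V - 1):  # placeholder chain graph, not a real skeleton
    adj[a, a + 1] = adj[a + 1, a] = 1.0

x = rng.normal(size=(T, V, C_IN))  # stand-in for (x, y, score) per joint
h = graph_conv(x, normalize_adjacency(adj), rng.normal(size=(C_IN, C_HID)))
pooled = h.mean(axis=(0, 1))  # average over frames and joints
probs = softmax(pooled @ rng.normal(size=(C_HID, NUM_CLASSES)))
print(probs.shape)
```

Because the weights are random, the resulting probabilities are meaningless; the point is only to show how the tensor shapes flow from skeleton sequence to class scores.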

Graph Convolution

Originally I planned to train the ST-GCN model myself, but there were too many pitfalls, so I ended up directly using a model trained by someone else.

Pitfall 1. ST-GCN supports the skeleton points recognized by OpenPose, and there is a ready-made dataset, Kinetics-skeleton, that can be used directly. The pitfall is that installing OpenPose is too cumbersome and takes many steps. After struggling with it, I gave up.

Pitfall 2. ST-GCN also supports the NTU RGB+D dataset, which has 60 action categories such as standing up, walking, and falling. Each human body in this dataset has 25 skeleton points, but only coordinate data is provided and the original videos are basically unavailable, so I could not tell which body positions these 25 points correspond to or which model could be used to detect them. After struggling with it, I gave up.

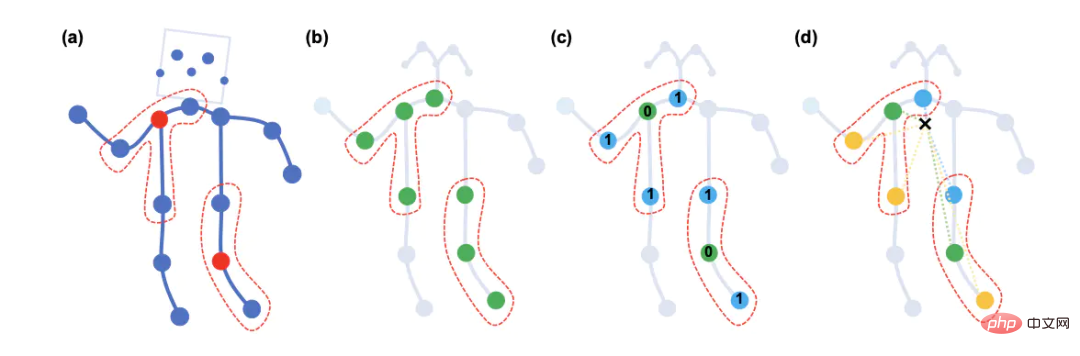

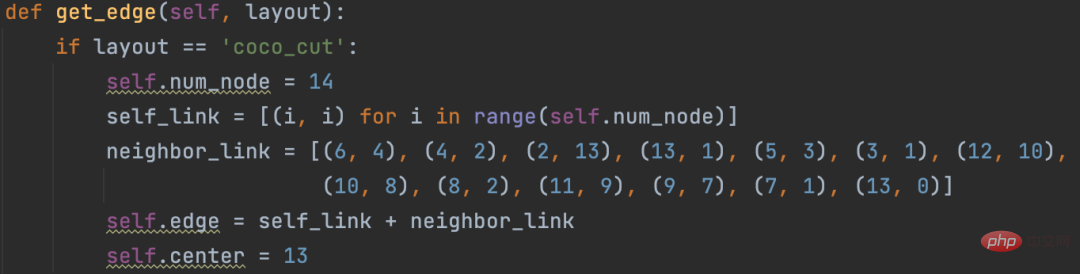

These two big pitfalls made it impractical to train the ST-GCN model directly. I then found an open-source project that uses AlphaPose to recognize 14 skeleton points and modifies the ST-GCN source code to support custom skeleton points.

https://github.com/GajuuzZ/Human-Falling-Detect-Tracks

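I checked, and MediaPipe's landmarks cover these 14 skeleton points, so the skeleton points recognized by MediaPipe can be fed into that project's model for action classification.

MediaPipe: 33 skeleton points

The 14 selected key skeleton points

Code for extracting the 14 skeleton points: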

    import numpy as np

    # Note: 13 MediaPipe landmarks are listed here; the 14th point mentioned
    # in the text is not part of this list.
    KEY_JOINTS = [
        mp_pose.PoseLandmark.NOSE,
        mp_pose.PoseLandmark.LEFT_SHOULDER,
        mp_pose.PoseLandmark.RIGHT_SHOULDER,
        mp_pose.PoseLandmark.LEFT_ELBOW,
        mp_pose.PoseLandmark.RIGHT_ELBOW,
        mp_pose.PoseLandmark.LEFT_WRIST,
        mp_pose.PoseLandmark.RIGHT_WRIST,
        mp_pose.PoseLandmark.LEFT_HIP,
        mp_pose.PoseLandmark.RIGHT_HIP,
        mp_pose.PoseLandmark.LEFT_KNEE,
        mp_pose.PoseLandmark.RIGHT_KNEE,
        mp_pose.PoseLandmark.LEFT_ANKLE,
        mp_pose.PoseLandmark.RIGHT_ANKLE
    ]

    image_h, image_w = image.shape[:2]  # frame size in pixels
    landmarks = results.pose_landmarks.landmark
    # Convert normalized coordinates to pixels and keep the visibility score
    joints = np.array([
        [landmarks[joint].x * image_w, landmarks[joint].y * image_h, landmarks[joint].visibility]
        for joint in KEY_JOINTS
    ])
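Since an action spans N frames, the per-frame joints have to be collected into a fixed-length clip before they can be classified. Below is a minimal sketch of such a buffer. The class name `PoseWindow` is hypothetical, and the default window length of 30 frames is an assumption; check what the pretrained model actually expects.

```python
from collections import deque
from typing import Deque, List, Optional, Sequence

Frame = Sequence[Sequence[float]]  # one frame: [x, y, score] for each joint


class PoseWindow:
    """Keep the most recent `size` frames of joints for the classifier."""

    def __init__(self, size: int = 30) -> None:  # 30 is an assumed clip length
        self.size = size
        self._frames: Deque[Frame] = deque(maxlen=size)

    def push(self, joints: Frame) -> Optional[List[Frame]]:
        """Add one frame; return the whole clip once enough frames exist."""
        self._frames.append(joints)  # the oldest frame drops out automatically
        if len(self._frames) < self.size:
            return None
        return list(self._frames)

    def reset(self) -> None:
        """Drop buffered frames, e.g. when the person leaves the scene."""
        self._frames.clear()


# Usage: push the joints of every frame and classify whenever a clip is ready.
window = PoseWindow(size=3)
for i in range(5):
    clip = window.push([[float(i), 0.0, 1.0]])
    if clip is not None:
        print("clip ready, first x =", clip[0][0][0])
```

Using a sliding window rather than disjoint chunks means a prediction can be made on every new frame once the buffer is full.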

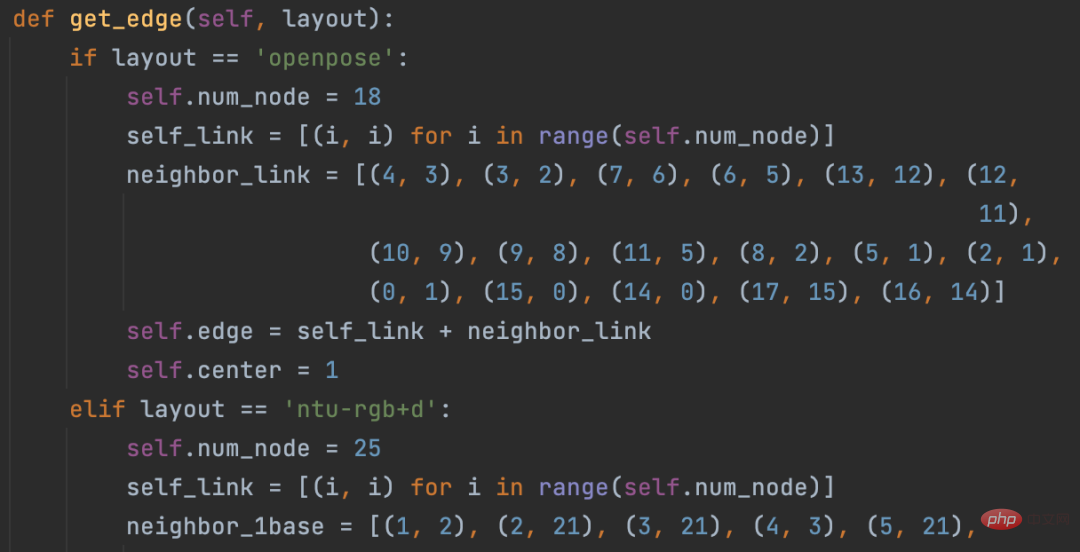

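The spatial graph built by the original ST-GCN only supports the 18 OpenPose skeleton points and the 25 skeleton points of the NTU RGB+D dataset.

This part of the source code has to be modified to support the custom 14 skeleton points.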
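A custom layout essentially boils down to a node count and a list of bones. The sketch below shows one way a 14-point layout could be described and turned into an adjacency matrix. The joint order, the derived "neck" point, and the bone list are my own illustrative assumptions and are not copied from the Human-Falling-Detect-Tracks source.

```python
from typing import List, Tuple

JOINT_NAMES = [
    "nose", "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip", "left_knee",
    "right_knee", "left_ankle", "right_ankle",
    "neck",  # assumption: a derived point, e.g. the midpoint of the shoulders
]

# Hypothetical bone list: pairs of indices into JOINT_NAMES.
NEIGHBOR_LINKS: List[Tuple[int, int]] = [
    (0, 13),            # nose - neck
    (13, 1), (13, 2),   # neck - shoulders
    (1, 3), (3, 5),     # left arm
    (2, 4), (4, 6),     # right arm
    (1, 7), (2, 8),     # shoulders - hips
    (7, 9), (9, 11),    # left leg
    (8, 10), (10, 12),  # right leg
]


def build_adjacency(num_nodes: int, links: List[Tuple[int, int]]) -> List[List[int]]:
    """Return a symmetric 0/1 adjacency matrix that includes self-loops."""
    adj = [[1 if i == j else 0 for j in range(num_nodes)] for i in range(num_nodes)]
    for a, b in links:
        adj[a][b] = adj[b][a] = 1
    return adj


ADJ = build_adjacency(len(JOINT_NAMES), NEIGHBOR_LINKS)
print(len(ADJ), sum(map(sum, ADJ)))  # prints: 14 40
```

The 40 non-zero entries are the 14 self-loops plus the 13 bones counted in both directions. Whatever layout is used, the joint order must match the order in which the skeleton points are fed to the model.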

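For the model, I directly used the pretrained one from the Human-Falling-Detect-Tracks project. In practice its recognition results were poor, and since I did not see how the model was trained, I am not sure where the problem lies.

If you are up for it, you can try training the model yourself. In addition, Baidu's Paddle has also developed a fall detection model based on ST-GCN, which only recognizes one behavior: falling.

Of course, you can also try a Transformer-based approach, which does not need skeleton point features: the N frames are fed directly into the model for classification.

For the principles of ST-GCN, see this article, which summarizes them very well: https://www.jianshu.com/p/be85114006e3

If you need the source code, just reply in the comments.

If you found this article useful, please tap "在看" (Wow) to show some encouragement. I will keep sharing great Python + AI projects.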
