Over the past two years, text-to-image diffusion models have become very popular. DALL·E 2 and Imagen are both built on this approach.

This article is reprinted with permission from the AI media outlet Qubit (public account ID: QbitAI). Please contact the original source for reprint permission.

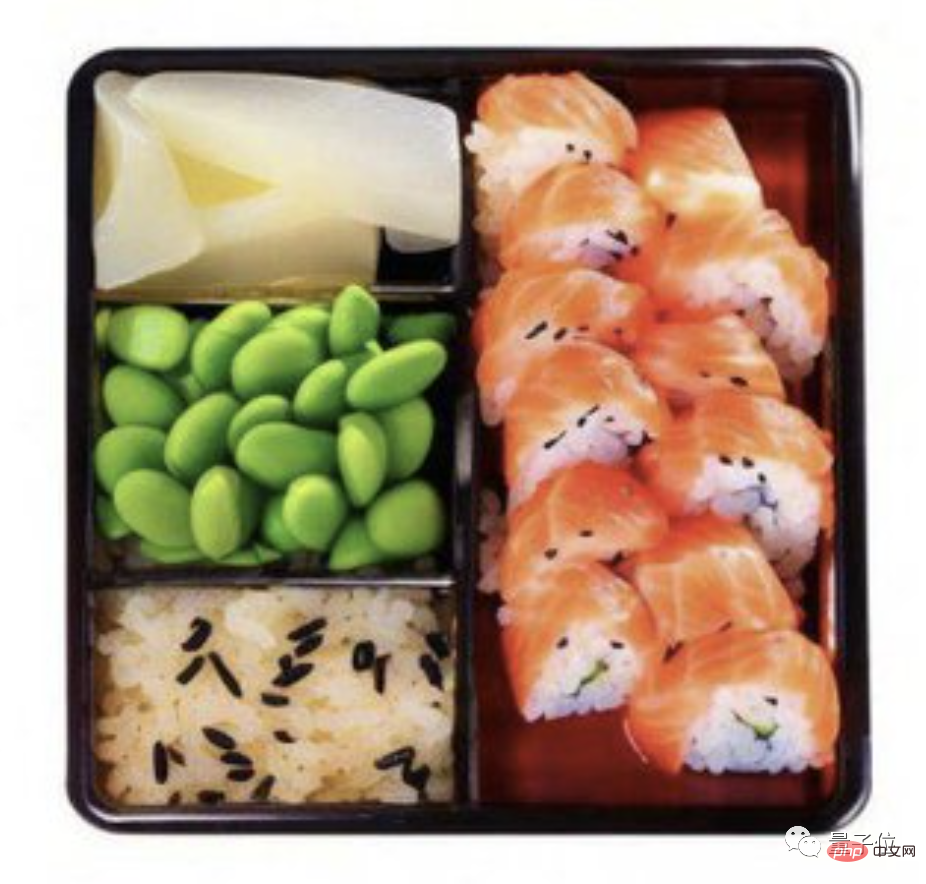

This is a seemingly ordinary Japanese bento.

But believe it or not, every compartment of food was edited in, and the source images look like this:

△ Simply cutting out the images and pasting them in looks fake at first glance

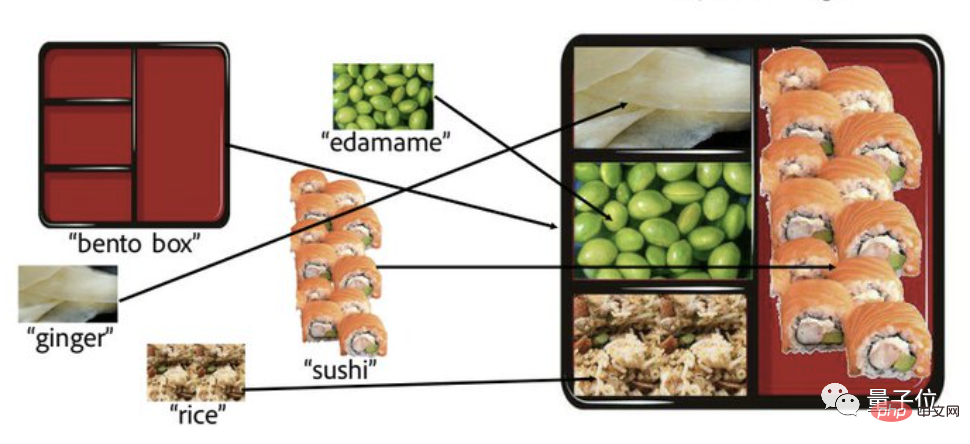

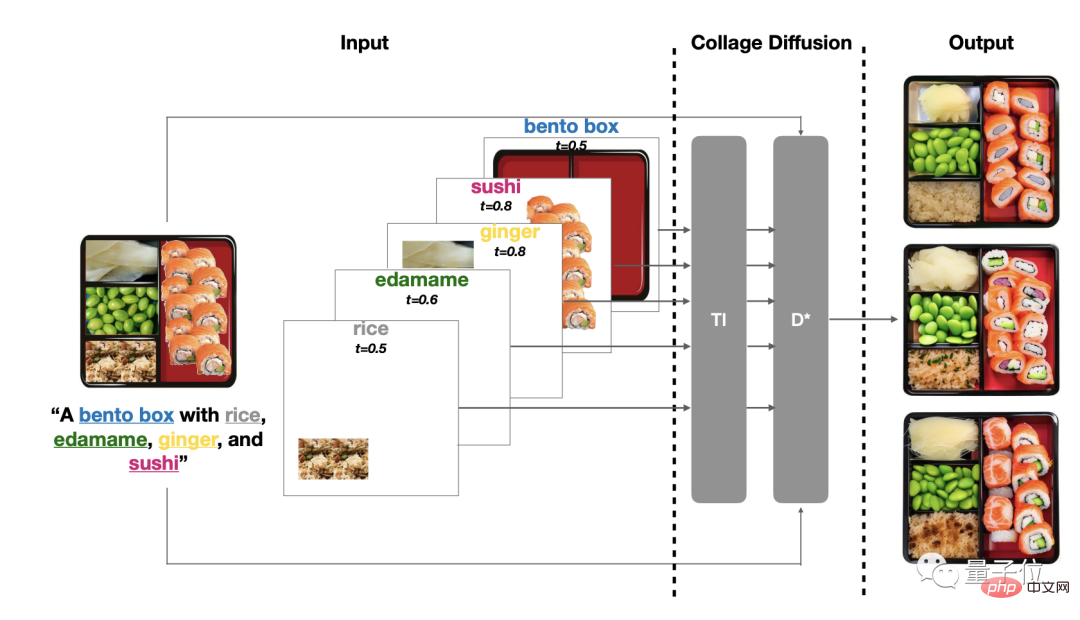

The operator behind it is not a Photoshop expert but an AI with a very straightforward name: Collage Diffusion.

Just give it a few small images, and the AI will work out what each one contains and place the elements into one large picture very naturally, with nothing that looks fake at first glance.

The effect amazed many netizens.

Some Photoshop enthusiasts put it bluntly:

This is simply a godsend... I hope to see it soon in Automatic1111 (the web UI commonly used by Stable Diffusion users, which is also available as a Photoshop plug-in).

In fact, this AI generated several versions of the "Japanese bento", and all of them look natural.

Why are there multiple versions? Because users can customize the result: they can fine-tune individual details without letting the overall picture drift too far.

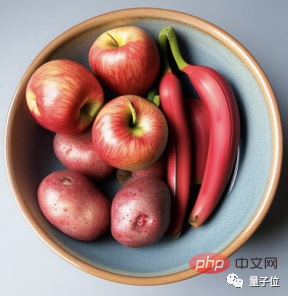

Besides the "Japanese bento", it has produced many other impressive results.

For example, this is the material given to the AI, where the traces of editing are obvious:

And this is the picture the AI put together. I, for one, cannot spot any traces of editing:

Over the past two years, text-to-image diffusion models have become very popular; DALL·E 2 and Imagen are both built on them. The advantage of these models is that the generated images are diverse and of high quality.

However, text can at best act as a fuzzy specification of the target image, so users usually have to spend a lot of time tuning prompts and must also pair them with additional control components to get good results.

Take the Japanese bento shown above as an example:

If the user enters only "a bento box containing rice, edamame, ginger and sushi", the prompt says neither where each food should go nor what each food should look like. Spelling all of that out would take the user a short essay...

In view of this, the Stanford team decided to start from another angle.

They went back to a traditional idea, generating the final image through collage, and developed a new diffusion model around it.

Interestingly, the model itself can be seen as "pieced together" from classic techniques.

The first is layering: using a layer-based image-editing UI, the source images are decomposed into RGBA layers (R, G, and B stand for red, green, and blue; A stands for transparency), the layers are arranged on a canvas, and each layer is paired with a text prompt.

With layers, individual elements of the image can be modified separately.
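The layering step can be sketched in a few lines of Python. This is only an illustration, not the authors' code: the `Layer` class, its field names, and the `composite` function are hypothetical, and images are represented as tiny nested lists of RGBA tuples with float values in [0, 1]. The sketch pastes layers bottom to top with the standard alpha "over" operator, which yields the kind of plain cut-and-paste collage shown earlier.

```python
from dataclasses import dataclass
from typing import List, Tuple

# One RGBA pixel: red, green, blue, alpha, each a float in [0, 1].
Pixel = Tuple[float, float, float, float]


@dataclass
class Layer:
    """Hypothetical layer record: an RGBA cut-out, its position, and its prompt."""
    pixels: List[List[Pixel]]  # rows of RGBA pixels
    x: int                     # left offset on the canvas
    y: int                     # top offset on the canvas
    prompt: str                # text describing what this layer shows


def composite(layers: List[Layer], width: int, height: int) -> List[List[Pixel]]:
    """Alpha-composite layers bottom to top using the "over" operator."""
    canvas: List[List[Pixel]] = [
        [(0.0, 0.0, 0.0, 0.0) for _ in range(width)] for _ in range(height)
    ]
    for layer in layers:
        for row_idx, row in enumerate(layer.pixels):
            for col_idx, (r, g, b, a) in enumerate(row):
                cx, cy = layer.x + col_idx, layer.y + row_idx
                if not (0 <= cx < width and 0 <= cy < height):
                    continue  # this pixel falls outside the canvas
                br, bg, bb, ba = canvas[cy][cx]
                out_a = a + ba * (1.0 - a)
                if out_a == 0.0:
                    continue  # both pixels fully transparent, nothing to draw
                out_r, out_g, out_b = (
                    (src * a + dst * ba * (1.0 - a)) / out_a
                    for src, dst in ((r, br), (g, bg), (b, bb))
                )
                canvas[cy][cx] = (out_r, out_g, out_b, out_a)
    return canvas


# Usage: an opaque 2x2 white "rice" layer with a 1x1 red "sushi" layer on top.
rice = Layer([[(1.0, 1.0, 1.0, 1.0)] * 2 for _ in range(2)], 0, 0, "white rice")
sushi = Layer([[(1.0, 0.0, 0.0, 1.0)]], 1, 1, "a piece of sushi")
canvas = composite([rice, sushi], width=3, height=3)
```

Keeping the prompt on the layer rather than in one global string is the point of the structure: each cut-out carries its own description along with its position.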

Layering is by now a mature technique in computer graphics, but layer information has previously been used mainly to produce a single output image.

In this new "collage diffusion model", layer information instead becomes the input for subsequent operations.
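The article does not spell out how the model consumes the layers, so the following is a sketch under stated assumptions rather than the paper's implementation. It assumes each layer has already been placed on the canvas as a canvas-sized alpha mask, and it derives, for each layer, the region that stays visible after occlusion by the layers above it, paired with that layer's prompt. The names `visible_masks` and `build_conditioning` are hypothetical.

```python
from typing import Dict, List, Tuple

Mask = List[List[float]]       # canvas-sized alpha values in [0, 1]
LayerInput = Tuple[Mask, str]  # hypothetical record: (alpha mask, text prompt)


def visible_masks(layers: List[LayerInput]) -> List[Mask]:
    """Return each layer's alpha (bottom to top) after occlusion by layers above."""
    height, width = len(layers[0][0]), len(layers[0][0][0])
    # Fraction of each pixel not yet covered by the layers processed so far.
    uncovered = [[1.0] * width for _ in range(height)]
    masks: List[Mask] = []
    for alpha, _prompt in reversed(layers):  # walk from the top layer down
        masks.append(
            [[alpha[y][x] * uncovered[y][x] for x in range(width)]
             for y in range(height)]
        )
        uncovered = [
            [uncovered[y][x] * (1.0 - alpha[y][x]) for x in range(width)]
            for y in range(height)
        ]
    masks.reverse()  # restore bottom-to-top order
    return masks


def build_conditioning(layers: List[LayerInput]) -> List[Dict[str, object]]:
    """Pair every layer's prompt with the region where that layer is visible."""
    return [
        {"prompt": prompt, "mask": mask}
        for (_alpha, prompt), mask in zip(layers, visible_masks(layers))
    ]


# Usage: rice fills a 2x2 canvas; sushi covers only the bottom-right pixel.
rice = [[1.0, 1.0], [1.0, 1.0]]
sushi = [[0.0, 0.0], [0.0, 1.0]]
conditioning = build_conditioning([(rice, "white rice"), (sushi, "a piece of sushi")])
```

In this toy case the rice mask loses the pixel hidden under the sushi, so each prompt is tied only to the pixels where its layer can actually be seen.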

In addition to layering, the model is paired with existing diffusion-based image harmonization techniques to improve the visual quality of the result.

In short, the algorithm constrains changes to certain attributes of each object (such as its visual features) while allowing other attributes (orientation, lighting, perspective, occlusion) to change.

This balances fidelity to the source against naturalness, producing images that are similar in spirit to the inputs and in which nothing looks out of place.

The operation process is also very easy. In interactive editing mode, users can create a collage in a few minutes.

Users can customize the spatial arrangement of the scene (that is, place images cut out from elsewhere at suitable positions), and they can also adjust the individual components used to generate the image. The same source images can therefore yield different results.

△ The rightmost column shows this AI's output

In non-interactive mode (that is, the user does no arranging and simply hands the AI a pile of small images), the AI automatically composes a natural-looking large picture from the images it receives.

Finally, a word about the research team behind it: a group of faculty and students from the Computer Science Department at Stanford University.

The paper's first author, Vishnu Sarukkai, is currently a graduate student in Stanford's Department of Computer Science, enrolled in a combined master's and Ph.D. program.

His main research interests are computer graphics, computer vision, and machine learning.

Linden Li, a co-author of the paper, is also a graduate student in Stanford's Department of Computer Science.

During his studies he interned at NVIDIA for four months, working with NVIDIA's deep learning research team and taking part in training a vision transformer model with an additional 100M parameters.

Paper address: https://arxiv.org/abs/2303.00262
