OpenAI co-founder Greg Brockman and chief scientist Ilya Sutskever assess GPT-4's performance and address safety concerns and the open-source controversy.

There is no doubt that GPT-4's release set off a storm across both industry and academia.

Its powerful reasoning and multimodal capabilities have sparked a great deal of heated discussion.

However, GPT-4 is not an open model.

Although OpenAI shared a large number of GPT-4 benchmarks and test results, it provided essentially no information about the training data, the cost, or the methods used to create the model.

Of course, OpenAI was never going to reveal such a closely guarded "secret recipe."

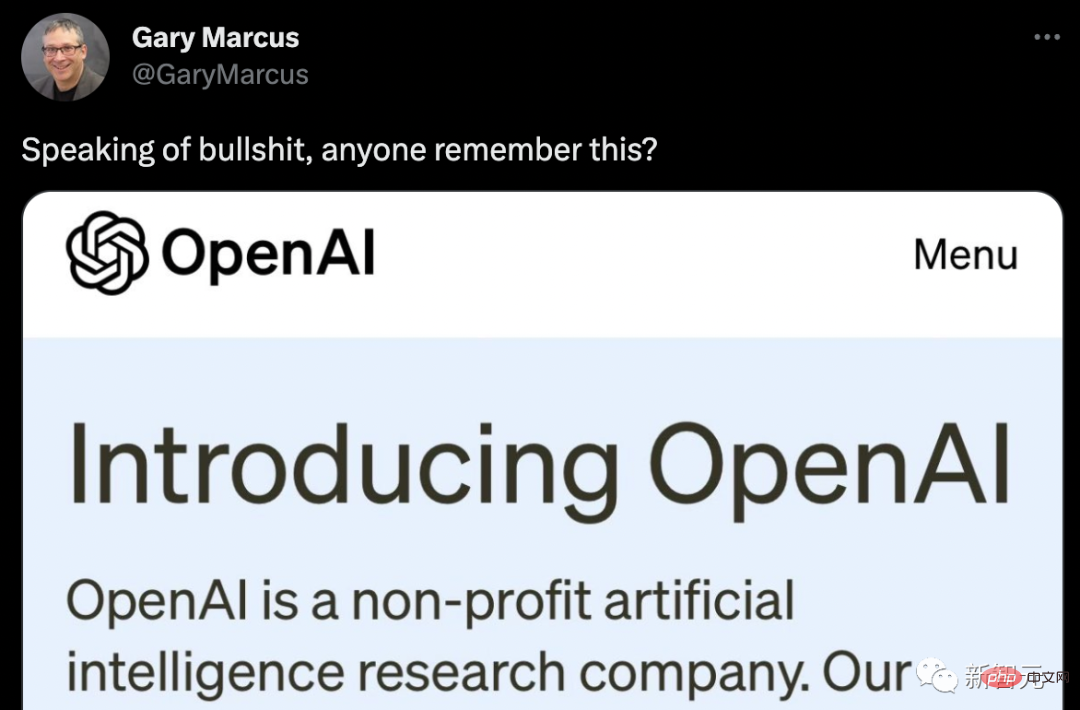

Marcus went straight back to OpenAI's founding mission and posted a round of mockery.

Netizens then came up with an improved version.

When Greg Brockman, president and co-founder of OpenAI, compared GPT-4 with GPT-3, he summed it up in one word: different.

"It's just different. The model still has a lot of problems and bugs... but you can really see it improving at skills like calculus or law. In some areas, it has gone from performing very poorly to holding its own against humans."

GPT-4's test results are very strong: on the AP Calculus BC exam, GPT-4 scored a 4 (out of 5), while GPT-3 scored a 1. On a simulated bar exam, GPT-4 passed with a score around the top 10% of test takers, while GPT-3.5's score hovered around the bottom 10%.

As for context, meaning how much text the model can keep in mind before generating its output, GPT-4 can remember about 50 pages of content, eight times as much as GPT-3.

As for prompts, GPT-3 and GPT-3.5 accept only text prompts, such as "Write an article about giraffes," while the multimodal GPT-4 accepts both images and text: show it a picture of giraffes and ask "How many giraffes are there?" GPT-4 can answer questions like these correctly, and it is also remarkably good at reading memes!

As soon as the ridiculously powerful GPT-4 was released, it drew the interest of a large number of researchers and experts. Disappointingly, however, the GPT-4 that OpenAI released is not an "open" AI model.

Although OpenAI has shared a large number of benchmarks, test results, and interesting demos of GPT-4, it has provided essentially no information about the data used to train the system, its energy costs, or the specific hardware and methods used to create it.

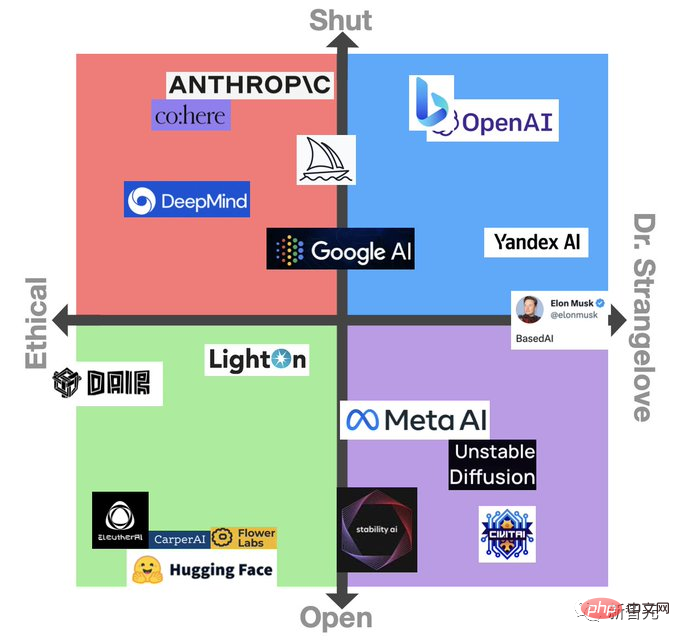

When Meta's LLaMA model leaked earlier, it set off a wave of discussion about open source. This time, however, the initial reaction to the closed GPT-4 was mostly negative.

There is a general feeling in the AI community that this not only undermines OpenAI's founding spirit as a research institution, but also makes it harder for others to develop safeguards against the threats such systems pose.

Ben Schmidt, vice president of information design at Nomic AI, said that without being able to see what data GPT-4 was trained on, it is difficult for anyone to know where the system is safe to use or to propose fixes.

"For people to know where this model won't work, they need a better understanding of what GPT-4 does and the assumptions baked into it. I wouldn't trust a self-driving car trained in a climate where it never snows to drive in the snow, because loopholes and problems are likely to emerge only in real-world use."

In response, Ilya Sutskever, chief scientist and co-founder of OpenAI, explained that OpenAI is withholding further details about GPT-4 both because of competition and because of safety concerns.

"It's competitive out there, and GPT-4 was not easy to develop. It took almost all of OpenAI's staff working together for a long time to produce this thing. From a competitive perspective, there are many, many companies that want to do the same thing, and you can see this as the field maturing."

As is well known, OpenAI was founded as a nonprofit in 2015. Its founders include Sutskever, current CEO Sam Altman, president Greg Brockman, and Elon Musk, who has since left OpenAI.

Sutskever and others stated that the organization's goal was to create value for everyone, not just shareholders, and that it would "freely collaborate" with others in the field.

To attract billions of dollars in investment (mainly from Microsoft), however, OpenAI has taken on a commercial character.

Asked why OpenAI had changed its approach to sharing its research, Sutskever replied bluntly:

"We were wrong. At some point, AI/AGI will become extremely powerful, and at that point open source will not make sense. I expect that within a few years, it will be completely obvious to everyone that open-sourcing AI is unwise. These models are very powerful; if someone wanted to, it would be quite easy to do massive damage with them. So as the models become more and more capable, it makes sense not to want to reveal them."

William Falcon, CEO of Lightning AI and creator of the open source tool PyTorch Lightning, explained from a business perspective: "As a company, you have every right to do this."

Brockman also believes that GPT-4 should be rolled out gradually, because OpenAI is still weighing its risks and benefits.

"We need to work through some policy issues, such as facial recognition and how images of people should be treated. We need to figure out where the danger zone is and where the red lines are, and then gradually clarify these points."

Then there is the familiar concern: the risk that GPT-4 will be used for malicious purposes.

Israeli cybersecurity startup Adversa AI published a blog post demonstrating how to bypass OpenAI's content filters, getting GPT-4 to generate phishing emails, sexual descriptions of gay people, and other highly objectionable text.

As a result, many people hope GPT-4 will bring significant improvements in content moderation.

In response, Brockman emphasized that OpenAI spent a lot of time trying to understand GPT-4's capabilities, and that the model underwent six months of safety training. In internal testing, GPT-4 was 82% less likely than GPT-3.5 to respond to requests for content disallowed by OpenAI's usage policies, and 40% more likely to produce "factual" responses.

Still, Brockman does not deny that GPT-4 falls short in this regard. But he highlighted a new mitigation-oriented tool for the model, an API-level capability called "system messages."

System messages are essentially instructions that set the tone and establish boundaries for GPT-4's interactions. Used as guardrails in this way, they can keep GPT-4 from drifting off course.

For example, a system message persona might read: "You are a tutor who always responds in the Socratic style. You never give students the answer, but always try to ask just the right question to help them learn to think for themselves."
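To make the idea concrete, here is a minimal Python sketch of where a system message sits in a chat request, assuming the role/content `messages` format of OpenAI's Chat Completions API. The helper `build_chat_request` and the constant `SOCRATIC_TUTOR` are hypothetical names chosen for illustration, and the code only builds and prints the JSON request body; it does not call the API.

```python
import json

# Hypothetical persona text, taken from the tutor example above.
SOCRATIC_TUTOR = (
    "You are a tutor who always responds in the Socratic style. "
    "You never give students the answer, but always try to ask just "
    "the right question to help them learn to think for themselves."
)


def build_chat_request(system_prompt: str, user_prompt: str, model: str = "gpt-4") -> dict:
    """Hypothetical helper: build a request body whose first message is the system message.

    Assumes the role/content "messages" list used by OpenAI's Chat Completions API.
    Nothing is sent over the network.
    """
    return {
        "model": model,
        "messages": [
            # The system message sets the tone and boundaries for the whole conversation.
            {"role": "system", "content": system_prompt},
            # User turns follow; the model is steered (not forced) to stay within those rules.
            {"role": "user", "content": user_prompt},
        ],
    }


if __name__ == "__main__":
    body = build_chat_request(SOCRATIC_TUTOR, "What is the derivative of x**2?")
    print(json.dumps(body, indent=2))
```

Because the system message is simply the first entry in the list, swapping in a different persona changes the guardrails without touching the user's own turns.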

In fact, Sutskever partly agrees with the critics: "If more people were willing to study these models, we would learn more about them, and that would be good."

That is why OpenAI gives certain academic and research institutions access to its systems.

Brockman also mentioned Evals, OpenAI's newly open-sourced software framework for evaluating the performance of its AI models.

Evals takes a crowdsourced approach to model testing, letting users develop and run benchmarks for evaluating models such as GPT-4 and inspect how those models perform. It is also a sign of OpenAI's commitment to "robust" models.

"With Evals, we can see the use cases that users care about and test them systematically. Part of the reason we open-sourced it is that we are moving away from releasing a new model every three months toward improving the model continuously. When we make a new model version, open source at least lets us know what has changed."
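As a rough illustration of what "running a benchmark" means here, the Python sketch below scores a model by exact match over a few samples. It is a toy, not the Evals API: the sample shape (an `input` message list plus an `ideal` answer) is loosely modeled on the JSONL format used by Evals' basic match templates, while `run_match_eval`, `fake_model`, and the samples themselves are hypothetical, with the stand-in model replacing a real API call.

```python
import json
from typing import Callable

# Hypothetical samples, loosely modeled on Evals-style JSONL lines:
# an "input" chat transcript plus the "ideal" answer.
SAMPLES_JSONL = """\
{"input": [{"role": "system", "content": "Answer with a number only."}, {"role": "user", "content": "What is 2 + 2?"}], "ideal": "4"}
{"input": [{"role": "system", "content": "Answer with a number only."}, {"role": "user", "content": "What is 3 * 5?"}], "ideal": "15"}
"""


def run_match_eval(samples_jsonl: str, complete: Callable[[list], str]) -> float:
    """Hypothetical scorer: exact-match each completion against its sample's ideal answer.

    Returns accuracy as a fraction between 0.0 and 1.0.
    """
    samples = [json.loads(line) for line in samples_jsonl.splitlines() if line.strip()]
    correct = sum(complete(s["input"]).strip() == s["ideal"] for s in samples)
    return correct / len(samples)


def fake_model(messages: list) -> str:
    """Stand-in for a real model call: always answers "4"."""
    return "4"


if __name__ == "__main__":
    print(run_match_eval(SAMPLES_JSONL, fake_model))  # 0.5
```

Rerunning the same samples against each new model version is what makes changes visible, which is the point Brockman makes about moving to continuous improvement.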

In fact, the debate over sharing research has long been heated. On the one hand, tech giants like Google and Microsoft are rushing to add AI capabilities to their products, often setting aside earlier ethical concerns (Microsoft recently laid off a team dedicated to ensuring that its AI products were ethical); on the other hand, rapid advances in the technology have raised concerns about AI itself.

Balancing these competing pressures poses serious governance challenges and means third-party regulators may need to get involved, said Jess Whittlestone, head of AI policy at a UK think tank.

"OpenAI's intention in not sharing more details about GPT-4 is good, but it may also lead to the centralization of power in the artificial intelligence world. These decisions should not be made by individual companies."

Whittlestone said: "Ideally, we need to codify the practices here and then have independent third parties review the risks associated with certain models."

The above is the detailed content of GPT-4 is so powerful that OpenAI refuses to open! Chief Scientist: Open source is not smart, we were wrong before. For more information, please follow other related articles on the PHP Chinese website!