As a SQL Boy, there won’t be anyone who doesn’t know the basics, right? There are not many questions in the interview. Friends who have good basic knowledge can skip this part. Of course, you may write some SQL statements on the spot. SQ statements can be practiced through websites such as Niuke, LeetCode, and LintCode.

MySQL connections are mainly divided into inner connections and outer connections. Commonly used outer connections include left joins and right joins.

The role of the three major paradigms is to control the redundancy of the database and save space. In fact, the designs of general Internet companies are anti-paradigm , by redundant some data, avoid cross-table and cross-database, use space for time, and improve performance.

In daily design, for strings with a relatively fixed length, char can be used, and for strings with uncertain lengths, varchar is more appropriate.

The in statement in MySQL performs a hash connection between the external table and the internal table, while the exists statement performs a loop on the external table, and queries the internal table each time the loop loops. We may think that exists is more efficient than the in statement. This statement is actually inaccurate. We need to distinguish between scenarios:

Currency is commonly represented by Decimal and Numric types in MySQL databases. These two types are implemented as the same type by MySQL. They are used to store currency-related data.

For example, salary DECIMAL(9,2), 9(precision) represents the total number of decimal places that will be used to store the value, and 2(scale) represents the number of digits after the decimal point that will be used to store the value. The range of values stored in the salary column is from -9999999.99 to 9999999.99.

DECIMAL and NUMERIC values are stored as strings rather than as binary floating point numbers in order to preserve the decimal precision of those values.

The reason why float or double is not used: Because float and double are stored in binary, there is a certain error.

MySQL can directly use strings to store emoji.

But it should be noted that utf8 encoding is not possible. The utf8 in MySQL is a castrated version of utf8. It only uses up to 3 bytes to store characters, so it cannot store expressions. so what should I do now?

Requires utf8mb4 encoding.

|

delete |

truncate |

drop |

| Type |

belongs to DML |

belongs to DDL |

belongs DDL |

##Rollback | Can be rolled back | Cannot be rolled back | Cannot be rolled back |

Delete content | The table structure is still there, delete all or part of the data rows in the table | The table structure is still there, delete all the data in the table | From the database When deleting a table, all data rows, indexes and permissions will also be deleted |

Deletion speed | The deletion speed is slow and you need to delete rows one by one | Delete Fast speed | The fastest deletion speed |

Therefore, when a table is no longer needed, use drop; when you want to delete some data rows, use delete; when retaining the table and deleting all data, use truncate.

11.What is the difference between UNION and UNION ALL?

- If you use UNION ALL, duplicate record rows will not be merged

- The efficiency of UNION is higher than that of UNION ALL

12.count(1), count What is the difference between (*) and count (column name)?

Execution effect:

- count(*) includes all columns, equivalent to the number of rows, in statistics When calculating results, column values that are NULL

- will not be ignored. count(1) includes ignoring all columns, using 1 to represent code lines. When counting results, column values that are NULL

# will not be ignored. ##count (column name) only includes the column with the column name. When counting the results, the count of a column value that is empty (the empty here is not just an empty string or 0, but represents null), that is, a certain field When the value is NULL, no statistics are collected. -

Execution speed:

The column name is the primary key, count (column name) will be faster than count(1)- If the column name is not the primary key, count(1) will be faster than count(column name)

- If the table has multiple columns and no primary key, the execution efficiency of count(1) is better than count(*)

-

If there is a primary key, the execution efficiency of select count (primary key) is optimal- If the table has only one field, select count(*) is optimal.

-

13. The execution order of a SQL query statement?

- #FROM

: Execute the flute on the left table and the right table in the FROM clause Cartesianproduct (Cartesianproduct), generates virtual table VT1

- ON

: Apply ON filtering to virtual table VT1, only those rows that meet the Insert

- JOIN

into virtual table VT2: If OUTER JOIN (such as LEFT OUTER JOIN, RIGHT OUTER JOIN) is specified, then the unmatched rows in the table are retained as External rows are added to virtual table VT2, resulting in virtual table VT3. If the FROM clause contains more than two tables, repeat steps 1) to 3) for the result table VT3 generated by the previous connection and the next table until all tables are processed

- WHERE

: Apply WHERE filter conditions to virtual table VT3. Only records that match are inserted into virtual table VT4

## GROUP BY- : Group the records in VT4 according to the columns in the GROUP BY clause, resulting in VT5

CUBE|ROLLUP- : Group the table VT5 performs CUBE or ROLLUP operations to generate table VT6

HAVING- : Apply the HAVING filter to virtual table VT6, and only records that meet are inserted Virtual table VT7.

SELECT- : Perform the SELECT operation for the second time, select the specified column, and insert it into virtual table VT8

DISTINCT- : Remove duplicate data and generate virtual table VT9

ORDER BY- : Process the records in virtual table VT9 according to Sorting operation generates virtual table VT10. 11)

LIMIT- : Take out the records of the specified row, generate virtual table VT11, and return it to the query user

Database Architecture

14. Talk about the basic architecture of MySQL?

The MySQL logical architecture diagram is mainly divided into three layers:

- Client: The top-level service is not unique to MySQL. Most network-based client/server tools or services have a similar architecture. Such as connection processing, authorization authentication, security, etc.

- Server layer: Most of MySQL's core service functions are in this layer, including query parsing, analysis, optimization, caching, and all built-in functions (such as date, time, mathematics, and encryption functions), all Cross-storage engine functions are implemented in this layer: stored procedures, triggers, views, etc.

- Storage engine layer: The third layer contains the storage engine. The storage engine is responsible for the storage and retrieval of data in MySQL. The Server layer communicates with the storage engine through APIs. These interfaces shield the differences between different storage engines, making these differences transparent to the upper-layer query process.

15. How is a SQL query statement executed in MySQL?

- First check whether the statement

has permission. If there is no permission, an error message will be returned directly. If there is permission, the cache will be queried first (before MySQL8.0 version).

- If there is no cache, the analyzer performs

grammar analysis, extracts key elements such as select in the sql statement, and then determines whether the sql statement has syntax errors, such as whether the keywords are correct, etc.

- After syntax analysis, the MySQL server will optimize the query statement and determine the execution plan.

- After completing the query optimization, call the database engine interface

according to the generated execution plan and return the execution results.

Storage Engine

16.What are the common storage engines for MySQL?

The main storage engines and functions are as follows:

| Function |

MylSAM |

MEMORY |

InnoDB |

| Storage Limit |

256TB |

RAM |

64TB |

| Support transactions |

No |

No |

Yes |

| Support full-text indexing |

Yes |

No |

Yes |

| Support tree index |

Yes |

Yes |

Yes |

| Support hash index |

No |

Yes |

Yes |

| Support data caching |

No |

N/A |

Yes |

| Support foreign keys |

No |

No |

Yes |

Before MySQL 5.5, the default storage engine was MylSAM, and after 5.5 it became InnoDB.

The hash index supported by InnoDB is adaptive. InnoDB will automatically generate a hash index for the table based on the usage of the table. Human intervention is not allowed to generate a hash index in a table.

InnoDB supports full-text indexing starting from MySQL 5.6.

17. How should you choose a storage engine?

You can roughly choose this:

- In most cases, using the default InnoDB is enough. If you want to provide transaction security (ACID compatibility) capabilities for commit, rollback, and recovery, and require concurrency control, InnoDB is the first choice.

- If the data table is mainly used to insert and query records, the MyISAM engine provides higher processing efficiency.

- If the data is only temporarily stored, the amount of data is not large, and high data security is not required, you can choose to save the data in the MEMORY engine in the memory. This engine is used as a temporary table in MySQL to store queries. intermediate results.

Which engine to use can be flexibly chosen according to needs. Because the storage engine is table-based, multiple tables in a database can use different engines to meet various performance and actual needs. Using the appropriate storage engine will improve the performance of the entire database.

18.What are the main differences between InnoDB and MylSAM?

PS: MySQL8.0 is slowly becoming popular. If it is not an interview, you don’t need to know much about MylSAM.

1. Storage structure : Each MyISAM is stored in three files on the disk; all InnoDB tables are stored in the same data file (It may also be multiple files, or independent table space files). The size of the InnoDB table is only limited by the size of the operating system file, which is generally 2GB.

2. Transaction support: MyISAM does not provide transaction support; InnoDB provides transaction support, with transaction (commit), rollback (rollback) and crash recovery capabilities (crash recovery capabilities) Transaction security features.

3 Minimum lock granularity: MyISAM only supports table-level locks. The entire table will be locked during updates, causing other queries and updates to be blocked. InnoDB supports row-level locks.

4. Index type: MyISAM's index is a clustered index, and the data structure is a B-tree; InnoDB's index is a non-clustered index, and the data structure is a B-tree.

5. The primary key is required: MyISAM allows tables without any indexes and primary keys to exist; InnoDB will automatically generate a 6-word number if no primary key or non-empty unique index is set. The primary key of the section (not visible to the user), the data is part of the main index, and the additional index saves the value of the main index.

6. The specific number of rows in the table: MyISAM saves the total number of rows in the table. If you select count() from table;, the value will be taken out directly; InnoDB does not save the table. The total number of rows, if you use select count() from table; it will traverse the entire table; but after adding the wehre condition, MyISAM and InnoDB handle it in the same way.

7. Foreign key support: MyISAM does not support foreign keys; InnoDB supports foreign keys.

Log19.What are the MySQL log files? Introduce the functions respectively?

There are many MySQL log files, including:

- Error log (error log): Error log file is very important to MySQL The startup, operation, and shutdown processes are recorded, which can help locate MySQL problems.

- Slow query log (slow query log): The slow query log is used to record query statements whose execution time exceeds the length defined by the long_query_time variable. Through the slow query log, you can find out which query statements have low execution efficiency for optimization.

- General query log (general log): The general query log records all information requested for the MySQL database, regardless of whether the request is executed correctly.

- Binary log (bin log): Regarding the binary log, it records all DDL and DML statements executed by the database (except data query statements select, show, etc.), recorded in the form of events and Saved in binary file.

There are also two InnoDB storage engine-specific log files:

-

Redo log (redo log): Redo logs are crucial because they record transaction logs for the InnoDB storage engine.

-

Rollback log(undo log): The rollback log is also a log provided by the InnoDB engine. As the name suggests, the role of the rollback log is to roll back the data. When a transaction modifies the database, the InnoDB engine will not only record the redo log, but also generate the corresponding undo log; if the transaction execution fails or rollback is called, causing the transaction to be rolled back, the information in the undo log can be used to restore the data. Scroll to the way it looked before the modification.

20.What is the difference between binlog and redo log?

- bin log will record all log records related to the database, including logs of storage engines such as InnoDB and MyISAM, while redo log only records logs of the InnoDB storage engine.

- The recorded content is different. The bin log records the specific operation content of a transaction, that is, the log is a logical log. The redo log records the physical changes to each page (Page).

- The writing time is different. The bin log is only submitted before the transaction is submitted, that is, it is only written to the disk once. While the transaction is in progress, redo ertry is constantly being written to the redo log.

- The writing methods are also different. Redo log is cyclic writing and erasing, while bin log is appending writing and will not overwrite already written files.

21. Do you understand how to execute an update statement?

The execution of the update statement is completed by the cooperation of the server layer and the engine layer. In addition to writing the data to the table, the corresponding logs must also be recorded.

The executor first looks for the engine to get the line ID=2. ID is the primary key, and the storage engine retrieves the data and finds this row. If the data page where the row with ID=2 is located is already in the memory, it will be returned directly to the executor; otherwise, it needs to be read into the memory from the disk first and then returned.

The executor gets the row data given by the engine, adds 1 to this value, for example, it used to be N, but now it is N 1, gets a new row of data, and then calls the engine interface to write Enter this row of new data.

The engine updates this new row of data into the memory and records the update operation into the redo log. At this time, the redo log is in the prepare state. Then inform the executor that the execution is completed and the transaction can be submitted at any time.

The executor generates the binlog of this operation and writes the binlog to disk.

The executor calls the engine's commit transaction interface, and the engine changes the redo log just written to the commit state, and the update is completed.

As can be seen from the above figure, when MySQL executes the update statement, it parses and executes the statement in the service layer, extracts and stores data in the engine layer; at the same time, in the service layer The layer writes the binlog and writes the redo log in InnoDB.

Not only that, there are two stages of submission when writing redo log. One is the writing of prepare state before binlog writing, and the other is after binlog writing Writing of commit status.

22. Then why is there a two-stage submission?

Why two-stage submission? Can't you just submit it directly?

We can assume that instead of using a two-stage commit method, we use a "single-stage" commit, that is, either write the redo log first and then write the binlog; or write the binlog first and then write the redo log. Submitting in these two ways will cause the state of the original database to be inconsistent with the state of the restored database.

Write redo log first, then write binlog:

After writing redo log, the data now has crash-safe capability , so the system crashes and the data will be restored to the state before the transaction started. However, if the system crashes when the redo log is completed and before the binlog is written, the system crashes. At this time, binlog does not save the above update statement, resulting in the above update statement being missing when binlog is used to back up or restore the database. As a result, the data in the row id=2 is not updated.

Write to binlog first, then redo log:

After writing binlog, all statements are saved. Therefore, the data in the row id=2 in the database copied or restored through binlog will be updated to a=1. However, if the system crashes before the redo log is written, the transaction recorded in the redo log will be invalid, resulting in the data in the id=2 row in the actual database not being updated.

Simply put, both redo log and binlog can be used to represent the commit status of a transaction, and two-phase commit is to keep the two states logically consistent.

23.redo log怎么刷入磁盘的知道吗?

redo log的写入不是直接落到磁盘,而是在内存中设置了一片称之为redo log buffer的连续内存空间,也就是redo 日志缓冲区。

什么时候会刷入磁盘?

在如下的一些情况中,log buffer的数据会刷入磁盘:

log buffer 的大小是有限的,如果不停的往这个有限大小的 log buffer 里塞入日志,很快它就会被填满。如果当前写入 log buffer 的redo 日志量已经占满了 log buffer 总容量的大约一半左右,就需要把这些日志刷新到磁盘上。

在事务提交时,为了保证持久性,会把log buffer中的日志全部刷到磁盘。注意,这时候,除了本事务的,可能还会刷入其它事务的日志。

有一个后台线程,大约每秒都会刷新一次log buffer中的redo log到磁盘。

重做日志缓存、重做日志文件都是以块(block) 的方式进行保存的,称之为重做日志块(redo log block) ,块的大小是固定的512字节。我们的redo log它是固定大小的,可以看作是一个逻辑上的 log group,由一定数量的log block 组成。

它的写入方式是从头到尾开始写,写到末尾又回到开头循环写。

其中有两个标记位置:

write pos是当前记录的位置,一边写一边后移,写到第3号文件末尾后就回到0号文件开头。checkpoint是当前要擦除的位置,也是往后推移并且循环的,擦除记录前要把记录更新到磁盘。

当write_pos追上checkpoint时,表示redo log日志已经写满。这时候就不能接着往里写数据了,需要执行checkpoint规则腾出可写空间。

所谓的checkpoint规则,就是checkpoint触发后,将buffer中日志页都刷到磁盘。

SQL 优化

24.慢SQL如何定位呢?

慢SQL的监控主要通过两个途径:

-

慢查询日志:开启MySQL的慢查询日志,再通过一些工具比如mysqldumpslow去分析对应的慢查询日志,当然现在一般的云厂商都提供了可视化的平台。

-

服务监控:可以在业务的基建中加入对慢SQL的监控,常见的方案有字节码插桩、连接池扩展、ORM框架过程,对服务运行中的慢SQL进行监控和告警。

25.有哪些方式优化慢SQL?

慢SQL的优化,主要从两个方面考虑,SQL语句本身的优化,以及数据库设计的优化。

避免不必要的列

这个是老生常谈,但还是经常会出的情况,SQL查询的时候,应该只查询需要的列,而不要包含额外的列,像slect * 这种写法应该尽量避免。

分页优化

在数据量比较大,分页比较深的情况下,需要考虑分页的优化。

例如:

select * from table where type = 2 and level = 9 order by id asc limit 190289,10;

Copy after login

优化方案:

select * from table where id >

(select * from table where type = 2 and level = 9 order by id asc limit 190

Copy after login

索引优化

合理地设计和使用索引,是优化慢SQL的利器。

利用覆盖索引

InnoDB使用非主键索引查询数据时会回表,但是如果索引的叶节点中已经包含要查询的字段,那它没有必要再回表查询了,这就叫覆盖索引

例如对于如下查询:

select name from test where city='上海'

Copy after login

我们将被查询的字段建立到联合索引中,这样查询结果就可以直接从索引中获取

alter table test add index idx_city_name (city, name);

Copy after login

低版本避免使用or查询

在 MySQL 5.0 之前的版本要尽量避免使用 or 查询,可以使用 union 或者子查询来替代,因为早期的 MySQL 版本使用 or 查询可能会导致索引失效,高版本引入了索引合并,解决了这个问题。

避免使用 != 或者 操作符

SQL中,不等于操作符会导致查询引擎放弃查询索引,引起全表扫描,即使比较的字段上有索引

解决方法:通过把不等于操作符改成or,可以使用索引,避免全表扫描

例如,把column’aaa’,改成column>’aaa’ or column,就可以使用索引了

适当使用前缀索引

适当地使用前缀所云,可以降低索引的空间占用,提高索引的查询效率。

比如,邮箱的后缀都是固定的“@xxx.com”,那么类似这种后面几位为固定值的字段就非常适合定义为前缀索引

alter table test add index index2(email(6));

Copy after login

PS:需要注意的是,前缀索引也存在缺点,MySQL无法利用前缀索引做order by和group by 操作,也无法作为覆盖索引

避免列上函数运算

要避免在列字段上进行算术运算或其他表达式运算,否则可能会导致存储引擎无法正确使用索引,从而影响了查询的效率

select * from test where id + 1 = 50;

select * from test where month(updateTime) = 7;

Copy after login

正确使用联合索引

使用联合索引的时候,注意最左匹配原则。

JOIN优化

优化子查询

尽量使用 Join 语句来替代子查询,因为子查询是嵌套查询,而嵌套查询会新创建一张临时表,而临时表的创建与销毁会占用一定的系统资源以及花费一定的时间,同时对于返回结果集比较大的子查询,其对查询性能的影响更大

小表驱动大表

关联查询的时候要拿小表去驱动大表,因为关联的时候,MySQL内部会遍历驱动表,再去连接被驱动表。

比如left join,左表就是驱动表,A表小于B表,建立连接的次数就少,查询速度就被加快了。

select name from A left join B ;

Copy after login

适当增加冗余字段

增加冗余字段可以减少大量的连表查询,因为多张表的连表查询性能很低,所有可以适当的增加冗余字段,以减少多张表的关联查询,这是以空间换时间的优化策略

避免使用JOIN关联太多的表

《阿里巴巴Java开发手册》规定不要join超过三张表,第一join太多降低查询的速度,第二join的buffer会占用更多的内存。

如果不可避免要join多张表,可以考虑使用数据异构的方式异构到ES中查询。

排序优化

利用索引扫描做排序

MySQL有两种方式生成有序结果:其一是对结果集进行排序的操作,其二是按照索引顺序扫描得出的结果自然是有序的

但是如果索引不能覆盖查询所需列,就不得不每扫描一条记录回表查询一次,这个读操作是随机IO,通常会比顺序全表扫描还慢

因此,在设计索引时,尽可能使用同一个索引既满足排序又用于查找行

例如:

--建立索引(date,staff_id,customer_id)

select staff_id, customer_id from test where date = '2010-01-01' order by staff_id,customer_id;

Copy after login

只有当索引的列顺序和ORDER BY子句的顺序完全一致,并且所有列的排序方向都一样时,才能够使用索引来对结果做排序

UNION优化

条件下推

MySQL处理union的策略是先创建临时表,然后将各个查询结果填充到临时表中最后再来做查询,很多优化策略在union查询中都会失效,因为它无法利用索引

最好手工将where、limit等子句下推到union的各个子查询中,以便优化器可以充分利用这些条件进行优化

此外,除非确实需要服务器去重,一定要使用union all,如果不加all关键字,MySQL会给临时表加上distinct选项,这会导致对整个临时表做唯一性检查,代价很高。

26.怎么看执行计划(explain),如何理解其中各个字段的含义?

explain是sql优化的利器,除了优化慢sql,平时的sql编写,也应该先explain,查看一下执行计划,看看是否还有优化的空间。

直接在 select 语句之前增加explain关键字,就会返回执行计划的信息。

id Column: MySQL will assign a unique id value to each select statement

select_type Column, query type, classified according to association, union, subquery, etc. Common query types include SIMPLE and PRIMARY.

#table Column: Indicates which table a row of explain is accessing.

-

#type Column: One of the most important columns. Represents the type of association or access type that MySQL determines how to find rows in the table.

Performance from best to worst is: system > const > eq_ref > ref > fulltext > ref_or_null > index_merge > unique_subquery > index_subquery > range > index > ALL

-

system

system: When the table has only one row of records (system table), the amount of data is very small, and disk IO is often not required. , very fast

-

const

const: indicates that the primary key primary key or unique# is hit during query ## The unique index, or connected part, is a constant (const) value. This type of scanning is extremely efficient, returns a small amount of data, and is very fast.

- eq_ref

eq_ref: Hit the primary key primary key or unique key index during query, type is eq_ref.

- ref_or_null

ref_or_null: This connection type is similar to ref, the difference is that MySQL will additionally search for items containing ## Rows with #NULL values.

index_merge-

index_merge

: The index merge optimization method is used, and the query uses more than two indexes.

unique_subquery-

unique_subquery

: Replace the following IN subquery, and the subquery returns a unique set.

index_subquery-

index_subquery

: Different from unique_subquery, it is used for non-unique indexes and can return duplicate values.

range-

range

: Select rows using index, retrieving only rows within the given range. To put it simply, it is to retrieve data within a given range for an indexed field. Use bettween...and, , <code>>, in the <code>where statement , in and other conditional queries type are all range.

index-

index

: Index and ALL actually read the entire table, the difference The reason is that index is read by traversing the index tree, while ALL is read from the hard disk.

ALL-

Needless to say, full table scan.

- possible_keys

Column: Shows which indexes the query may use to find, which is more important when using indexes to optimize SQL.

- key

Column: This column shows which index mysql actually uses to optimize access to the table, which is commonly used to determine whether the index is invalid.

- key_len

Column: shows what MySQL uses

- ref

Column: ref column shows It is the value that matches the index column as equal value. Common ones are: const (constant), func, NULL, and field name.

- rows

Column: This is also an important field. Based on statistical information, the MySQL query optimizer estimates the data rows that SQL needs to scan to find the result set. Number, this value very intuitively shows the efficiency of SQL. In principle, the fewer rows, the better.

- Extra

Column: Displays additional information that does not fit in other columns. Although it is called extra, there is also some important information:

Using index: Indicates that MySQL will use a covering index to avoid returning the table

- Using where: Indicates that filtering will be performed after the storage engine is retrieved

- Using temporary: Indicates A temporary table is used when sorting query results.

- Index

The index can be said to be the top priority in the MySQL interview, and it must be completely won.

27. Can you briefly talk about the classification of indexes?

Classify indexes from three different dimensions:

For example, from the perspective of basic usage:

For example, from the perspective of basic usage:

Primary key Index: InnoDB primary key is the default index. Data columns are not allowed to be repeated or NULL. A table can only have one primary key.

- Unique index: Duplication of data columns is not allowed, NULL values are allowed, and a table allows multiple columns to create unique indexes.

- Ordinary index: Basic index type, no uniqueness restrictions, NULL values allowed.

- Combined index: Multiple column values form an index for combined search, which is more efficient than index merging

- 28. Why does using an index speed up queries?

The traditional query method traverses the table in order. No matter how many pieces of data are queried, MySQL needs to traverse the table data from beginning to end.

After we add the index, MySQL generally generates an index file through the BTREE algorithm. When querying the database, find the index file to traverse, search in the relatively small index data, and then map it to the corresponding data, which can greatly improve Search efficiency.

It’s the same as when we look for the corresponding content through the book’s table of contents.

#29. What are the points to note when creating an index?

Although the index is a powerful tool for sql performance optimization, index maintenance also requires costs, so when creating an index, you must also pay attention to:

-

The index should be built in the query application Frequent fields

Create indexes on (on) fields used for where judgment, order sorting and join.

-

The number of indexes should be appropriate

Indexes need to occupy space; they also need to be maintained during updates.

-

Do not create indexes for fields with low differentiation, such as gender.

For fields with too low dispersion, the number of scanned rows will be limited.

-

Frequently updated values should not be used as primary keys or indexes

It costs money to maintain index files; it will also lead to page splits and increased IO times.

-

The combined index puts values with high hashing (high distinction) in front

In order to satisfy the leftmost prefix matching principle

-

Create a composite index instead of modifying a single column index.

Combined index replaces multiple single-column indexes (for single-column indexes, MySQL can basically only use one index, so it is more suitable to use combined indexes when multiple condition queries are often used)

For fields that are too long, use prefix index. When the field value is relatively long, indexing will consume a lot of space and the search will be very slow. We can create an index by intercepting the previous part of the field, which is called a prefix index.

-

It is not recommended to use unordered values (such as ID cards, UUID) as indexes

When the primary key is uncertain, it will cause leaf nodes to split frequently and disks will appear. Storage fragmentation

#30. Under what circumstances will the index fail?

- The query condition contains or, which may cause the index to fail

- If the field type is a string, the where must be enclosed in quotation marks, otherwise the index will fail due to implicit type conversion

- like wildcards may cause index failure.

- Joint index, the condition column when querying is not the first column in the joint index, and the index becomes invalid.

- When using mysql's built-in function on the index column, the index becomes invalid.

- When performing operations on index columns (such as , -, *, /), the index becomes invalid.

- When using (!= or , not in) on an index field, it may cause index failure.

- Using is null or is not null on index fields may cause index failure.

- The encoding formats of fields associated with left join queries or right join queries are different, which may lead to index failure.

- The MySQL optimizer estimates that using a full table scan is faster than using an index, so the index is not used.

31. What scenarios are indexes not suitable for?

- Tables with a relatively small amount of data are not suitable for indexing

- Fields that are updated frequently are not suitable for indexing

- Fields with low discreteness are not suitable for indexing ( Such as gender)

32. Is it better to build more indexes?

of course not.

- The index will occupy disk space

-

Although the index will improve the query efficiency, it will reduce the efficiency of updating the table. For example, every time a table is added, deleted, or modified, MySQL must not only save the data, but also save or update the corresponding index file.

33.Do you know what data structure MySQL index uses?

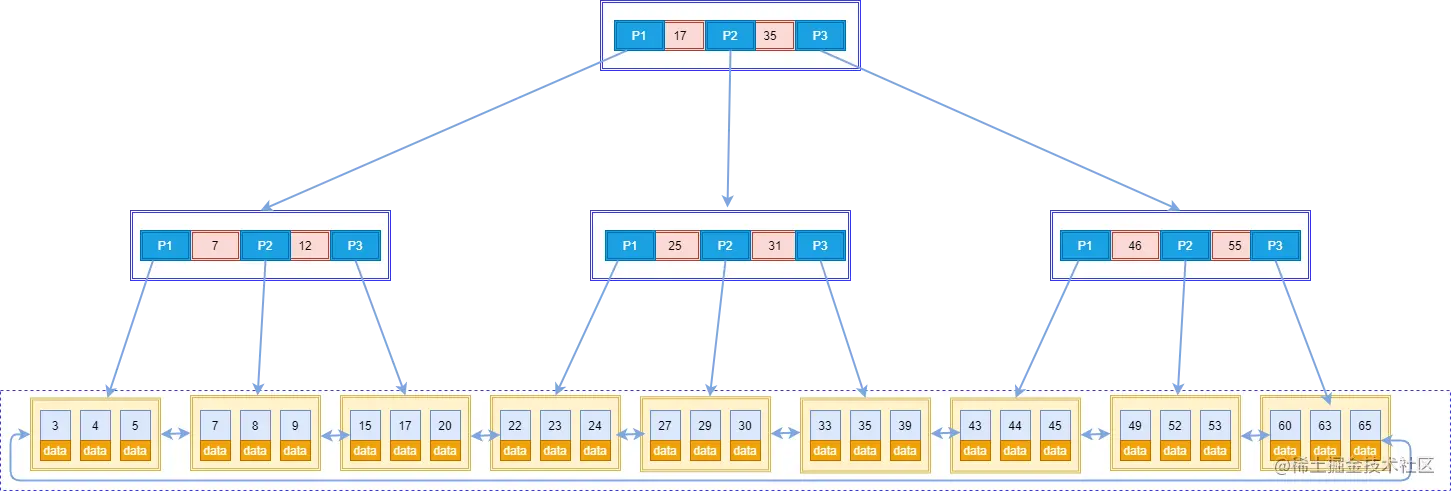

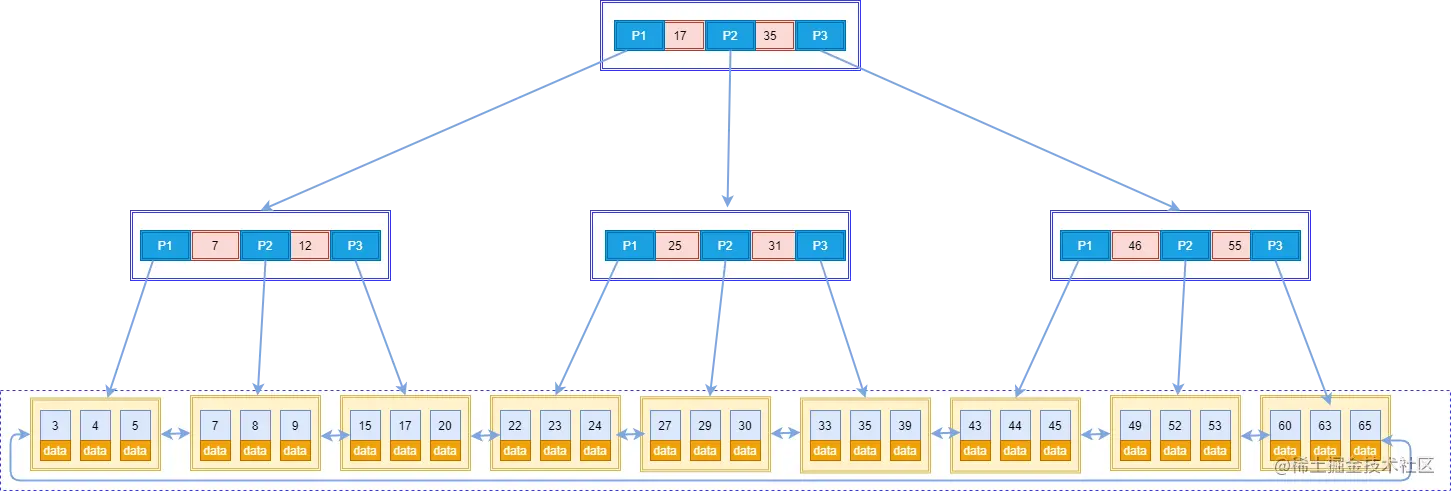

MySQL's default storage engine is InnoDB, which uses a B-tree structured index.

- B tree: Only leaf nodes store data, and non-leaf nodes only store key values. Leaf nodes are connected using bidirectional pointers, and the lowest leaf nodes form a bidirectional ordered linked list.

In this picture, there are two important points:

- The outermost block is called a disk block. You can see that each disk block contains several data items (shown in pink) and pointers (shown in yellow/gray), such as root The node disk contains data items 17 and 35, including pointers P1, P2, and P3. P1 represents disk blocks less than 17, P2 represents disk blocks between 17 and 35, and P3 represents disk blocks greater than 35. The real data exists in the leaf nodes, namely 3, 4, 5..., 65. Non-leaf nodes do not store real data, but only data items that guide the search direction. For example, 17 and 35 do not actually exist in the data table.

- The leaf nodes are connected using two-way pointers. The lowest leaf node forms a two-way ordered linked list, which can be range-queried.

34. How many pieces of data can a B-tree store?

#Assume that the index field is of type bigint and has a length of 8 bytes. The pointer size is set to 6 bytes in the InnoDB source code, making a total of 14 bytes. Non-leaf nodes (one page) can store 16384/14=1170 such units (key value pointers), which means there are 1170 pointers.

When the tree depth is 2, there are 1170^2 leaf nodes, and the data that can be stored is 1170117016=21902400.

When searching for data, one page search represents one IO. In other words, for a table of about 20 million, querying data requires up to three disk accesses.

So the B-tree depth in InnoDB is generally 1-3 layers, which can satisfy tens of millions of data storage.

35. Why use B-tree instead of ordinary binary tree?

You can look at this problem from several dimensions, whether the query is fast enough, whether the efficiency is stable, how much data is stored, and the number of disk searches.

Why not use ordinary binary trees?

Ordinary binary trees are degenerated. If it degenerates into a linked list, it is equivalent to a full table scan. Compared with binary search trees, balanced binary trees have more stable search efficiency and faster overall search speed.

Why not balance the binary tree?

When reading data, it is read from the disk into the memory. If a data structure like a tree is used as an index, each time you search for data, you need to read a node from the disk, which is a disk block, but a balanced binary tree only stores one key value and data per node. If it is a B-tree , more node data can be stored, and the height of the tree will also be reduced, so the number of disk reads will be reduced, and the query efficiency will be faster.

36. Why use B-tree instead of B-tree?

Compared with B-Tree, B has these advantages:

-

It is a variant of B-Tree. It can solve all the problems that B-Tree can solve.

Two major problems solved by B Tree: each node stores more keywords; more paths

-

Stronger ability to scan databases and tables

If we want to perform a full table scan on the table, we only need to traverse the leaf nodes. There is no need to traverse the entire B Tree to get all the data.

-

B Tree has stronger disk read and write capabilities than B Tree, and has fewer IO times

The root node and branch nodes do not save the data area, so a node More keywords can be saved, more keywords can be loaded into the disk at one time, and the number of IO times is reduced.

-

Stronger sorting ability

Because there is a pointer to the next data area on the leaf node, the data forms a linked list.

-

The efficiency is more stable

B Tree always gets data at the leaf nodes, so the number of IOs is stable.

37. What is the difference between Hash index and B-tree index?

- B tree can perform range query, Hash index cannot.

- B-tree supports the leftmost principle of joint index, but Hash index does not support it.

- B-tree supports order by sorting, but Hash index does not support it.

- Hash index is more efficient than B-tree for equivalent queries.

- When B-tree uses like for fuzzy query, the words after like (such as starting with %) can play an optimization role, and the Hash index cannot perform fuzzy query at all.

38. What is the difference between clustered index and non-clustered index?

First understand that the clustered index is not a new index, but a data storage method. Clustering means that rows of data and adjacent key values are stored compactly together. The two storage engines we are familiar with - MyISAM uses non-clustered indexes, and InnoDB uses clustered indexes.

It can be said:

- The data structure of the index is a tree. The index and data of the clustered index are stored in a tree. The leaf nodes of the tree are the data. Non-clustered Index The index and data are not in the same tree.

- 一个表中只能拥有一个聚簇索引,而非聚簇索引一个表可以存在多个。

- 聚簇索引,索引中键值的逻辑顺序决定了表中相应行的物理顺序;索引,索引中索引的逻辑顺序与磁盘上行的物理存储顺序不同。

- 聚簇索引:物理存储按照索引排序;非聚集索引:物理存储不按照索引排序;

39.回表了解吗?

在InnoDB存储引擎里,利用辅助索引查询,先通过辅助索引找到主键索引的键值,再通过主键值查出主键索引里面没有符合要求的数据,它比基于主键索引的查询多扫描了一棵索引树,这个过程就叫回表。

例如:select * from user where name = ‘张三’;

40.覆盖索引了解吗?

在辅助索引里面,不管是单列索引还是联合索引,如果 select 的数据列只用辅助索引中就能够取得,不用去查主键索引,这时候使用的索引就叫做覆盖索引,避免了回表。

比如,select name from user where name = ‘张三’;

41.什么是最左前缀原则/最左匹配原则?

注意:最左前缀原则、最左匹配原则、最左前缀匹配原则这三个都是一个概念。

最左匹配原则:在InnoDB的联合索引中,查询的时候只有匹配了前一个/左边的值之后,才能匹配下一个。

根据最左匹配原则,我们创建了一个组合索引,如 (a1,a2,a3),相当于创建了(a1)、(a1,a2)和 (a1,a2,a3) 三个索引。

为什么不从最左开始查,就无法匹配呢?

比如有一个user表,我们给 name 和 age 建立了一个组合索引。

ALTER TABLE user add INDEX comidx_name_phone (name,age);

Copy after login

组合索引在 B+Tree 中是复合的数据结构,它是按照从左到右的顺序来建立搜索树的 (name 在左边,age 在右边)。

从这张图可以看出来,name 是有序的,age 是无序的。当 name 相等的时候, age 才是有序的。

这个时候我们使用where name= ‘张三‘ and age = ‘20 ‘去查询数据的时候, B+Tree 会优先比较 name 来确定下一步应该搜索的方向,往左还是往右。如果 name 相同的时候再比较age。但是如果查询条件没有 name,就不知道下一步应该查哪个 节点,因为建立搜索树的时候 name 是第一个比较因子,所以就没用上索引。

42.什么是索引下推优化?

索引条件下推优化(Index Condition Pushdown (ICP) )是MySQL5.6添加的,用于优化数据查询。

- 不使用索引条件下推优化时存储引擎通过索引检索到数据,然后返回给MySQL Server,MySQL Server进行过滤条件的判断。

- 当使用索引条件下推优化时,如果存在某些被索引的列的判断条件时,MySQL Server将这一部分判断条件下推给存储引擎,然后由存储引擎通过判断索引是否符合MySQL Server传递的条件,只有当索引符合条件时才会将数据检索出来返回给MySQL服务器。

例如一张表,建了一个联合索引(name, age),查询语句:select * from t_user where name like '张%' and age=10;,由于name使用了范围查询,根据最左匹配原则:

不使用ICP,引擎层查找到name like '张%'的数据,再由Server层去过滤age=10这个条件,这样一来,就回表了两次,浪费了联合索引的另外一个字段age。

但是,使用了索引下推优化,把where的条件放到了引擎层执行,直接根据name like '张%' and age=10的条件进行过滤,减少了回表的次数。

索引条件下推优化可以减少存储引擎查询基础表的次数,也可以减少MySQL服务器从存储引擎接收数据的次数。

锁

43.MySQL中有哪几种锁,列举一下?

如果按锁粒度划分,有以下3种:

- Table lock: low overhead, fast locking; strong locking force, high probability of lock conflict, lowest concurrency; no deadlock.

- Row lock: high overhead, slow locking; deadlock may occur; small locking granularity, low probability of lock conflict, and high concurrency.

- Page lock: The cost and locking speed are between table locks and row locks; deadlocks may occur; the locking granularity is between table locks and row locks, and the concurrency is average

In terms of compatibility, there are two types,

- Shared lock (S Lock), also called read lock (read lock), does not block each other.

- Exclusive lock (X Lock), also called write lock (write lock), exclusive lock is blocking. Within a certain period of time, only one request can perform writing and prevents other locks from reading and writing. input data.

44. Talk about the implementation of row locks in InnoDB?

We use such a user table to represent row-level locks, in which 4 rows of data are inserted, and the primary key values are 1,6,8,12, now simplify its clustered index structure and only retain data records.

The main implementation of InnoDB's row lock is as follows:

Record lock is to directly lock a row of records. When we use unique indexes (including unique indexes and clustered indexes) to perform equivalent queries and accurately match a record, the record will be locked directly. For example, select * from t where id =6 for update; will lock the record with id=6.

The gap of Gap Locks refers to two The logical part between records that has not been filled with data is a left-open-right space.

Gap lock is to lock certain gap intervals. When we use equality query or range query and do not hit any record, the corresponding gap interval will be locked. For example, select * from t where id =3 for update;or select * from t where id > 1 and id will convert (1,6) Range lock.

- Next-key Lock Pro key lock

Next-key refers to the gap plus the record to the right of it Left open Right closed interval . For example, the above (1,6], (6,8], etc.

Pro-key lock is a combination of record locks (Record Locks) and gap locks (Gap Locks) , that is, in addition to locking the record itself, we also need to lock the gap between the indexes. When we use range query and hit some record records, the immediate key interval is locked at this time. Note, The interval locked by the temporary key lock will include the temporary key interval to the right of the last record. For example, select * from t where id > 5 and id will lock (4, 7], (7, ∞). MySQL’s default row lock type is <code>Next-Key Locks. When a unique index is used and an equal value query matches a record, Next-Key Locks (Next-Key Locks) will degenerate into record locks; when no records are matched, they will degenerate into gap locks.

Gap Locksand Next-Key Locks are used to solve the problem of phantom reading. Under READ COMMITTEDisolation level, Gap Locks and Next-Key Locks will be invalid!

The above are the three implementation algorithms of row locks. In addition, there are also Insert Intention Lock.

When a transaction inserts a record, it needs to determine whether the insertion position is blocked. The transaction has an intention lock. If there is one, the insertion operation needs to wait until the transaction with the gap lock commits. However, while the transaction is waiting, it also needs to generate a lock structure in the memory, indicating that there is a transaction that wants to lock in a certain gap. Insert new records in, but now it is waiting. This type of lock is named Insert Intention Locks, which is the insertion intention lock.

If we have a T1 transaction, add the (1,6) interval Intention lock. Now there is a T2 transaction that wants to insert a piece of data with an id of 4. It will acquire an insertion intention lock in the (1,6) range. There is also a T3 transaction that wants to insert a piece of data with an id of 3. It will also acquire an insertion intention lock in the (1,6) range, but the two insertion intention locks will not be mutually exclusive.

45. The intention lock is Do you know anything?

Intention lock is a table-level lock, not to be confused with insertion intention lock.

The intention lock appears to support InnoDB's multi-granularity lock, which solves the problem of table The problem of coexistence of locks and row locks.

When we need to add a table lock to a table, we need to judge whether there are any data rows in the table that are locked to determine whether the addition can be successful.

If there is no intention lock, then we have to traverse all the data rows in the table to determine whether there is a row lock;

With the intention lock, a table-level lock, we can directly judge once. Know whether any data rows in the table are locked.

With the intention lock, before transaction A to be executed applies for a row lock (write lock), the database will automatically apply for the intention exclusive lock of the table to transaction A. When transaction B applies for a mutex lock on the table, it will fail because there is an intention exclusive lock on the table and transaction B will be blocked when applying for a mutex lock on the table.

#46. Do you understand MySQL’s optimistic locking and pessimistic locking?

-

Pessimistic Lock(Pessimistic Concurrency Control):

Pessimistic lock believes that the data protected by it is extremely unsafe, all the time All may be modified. After a transaction obtains a pessimistic lock, no other transaction can modify the data and can only wait for the lock to be released before executing it.

Row locks, table locks, read locks, and write locks in the database are all pessimistic locks.

- Optimistic Concurrency Control

Optimistic lock believes that the data will not change too frequently.

Optimistic locking is usually implemented by adding a version (version) or timestamp (timestamp) to the table. Among them, version is the most commonly used.

When a transaction fetches data from the database, it will also take out the version of the data (v1). When the transaction completes the changes to the data and wants to update it to the table, it will take out the version that was previously taken out. Comparing v1 with the latest version v2 in the data, if v1=v2, it means that during the data change period, no other transactions modified the data. At this time, transactions are allowed to modify the data in the table, and the version will be modified during the modification. Add 1 to indicate that the data has been changed.

If v1 is not equal to v2, it means that the data was changed by other transactions during the data change period. At this time, the data is not allowed to be updated into the table. The general solution is to notify the user and let them re-operate. Unlike pessimistic locking, optimistic locking is usually implemented by developers.

47.Have you ever encountered a deadlock problem in MySQL? How did you solve it?

The general steps for troubleshooting deadlocks are as follows:

(1) Check the deadlock log show engine innodb status;

(2) Find out the deadlock sql

(3) Analyze sql lock situation

(4) Simulate deadlock case

(5) Analyze deadlock log

(6) Analyze deadlock Lock result

Of course, this is just a simple process description. In fact, deadlocks in production are all kinds of strange, and it is not that simple to troubleshoot and solve.

Transaction

48. What are the four major characteristics of MySQL transactions?

- Atomicity: The transaction is executed as a whole, and either all or none of the operations on the database contained in it are executed.

- Consistency: means that the data will not be destroyed before the transaction starts and after the transaction ends. If account A transfers 10 yuan to account B, the total amount of A and B will remain unchanged regardless of success or failure. of.

- Isolation: When multiple transactions access concurrently, the transactions are isolated from each other, that is, one transaction does not affect the running effects of other transactions. In short, it means that there is no conflict between affairs.

- Persistence: Indicates that after the transaction is completed, the operational changes made by the transaction to the database will be permanently saved in the database.

49. So what guarantee does ACID rely on?

-

Isolation of transactions is achieved through the database lock mechanism.

-

Consistency of transactions is guaranteed by undo log: undo log is a logical log, which records the insert, update, and deltete operations of the transaction. When rolling back, the reverse delete, update, and insert operation to restore data.

- The atomicityandpersistenceof the transaction are guaranteed by redo log: redolog is called redo log, which is a physical log. When a transaction is submitted, it must first Write all the logs of the transaction to the redo log for persistence, and the transaction is not completed until the commit operation.

#50. What are the isolation levels of transactions? What is MySQL's default isolation level?

- Read Uncommitted

- Read Committed

- Repeatable Read )

- Serializable

The default transaction isolation level of MySQL is Repeatable Read (Repeatable Read).

51.What are phantom reads, dirty reads, and non-repeatable reads?

- Transactions A and B are executed alternately, and transaction A reads the uncommitted data of transaction B. This is dirty reading.

- Within a transaction scope, two identical queries read the same record, but return different data. This is non-repeatable reading.

- Transaction A queries the result set of a range, and another concurrent transaction B inserts/delete data into this range and commits it silently. Then transaction A queries the same range again, and reads twice. The result set is different, this is phantom reading.

Different isolation levels, problems that may occur under concurrent transactions:

| Isolation level |

Dirty read |

Non-repeatable read |

Phantom read |

| Read Uncommited Read uncommitted |

Yes | Yes |

Yes |

| Read Committed Read Committed |

No |

Yes |

No |

| Repeatable Read Repeatable Read |

No |

No |

Yes |

## Serialzable Serializable | No | No | No |

52. How are various isolation levels of transactions implemented?

Read uncommitted

Read uncommitted, needless to say, the principle of reading without locking is adopted.

- Transactional reading does not lock and does not block the reading and writing of other transactions

- Transactional writing blocks the writing of other transactions, but does not block the reading of other transactions;

Read Committed & Repeatable Read

The Read Committed and Repeatable Read levels utilize ReadView and MVCC, also That is, each transaction can only read the version it can see (ReadView).

- READ COMMITTED: Generate a ReadView every time before reading data

- REPEATABLE READ: Generate a ReadView when reading data for the first time

Serialization

The implementation of serialization adopts the principle of locking both reading and writing.

In the case of serialization, for the same row transaction, write will add write lock, read will add read lock . When a read-write lock conflict occurs, the transaction accessed later must wait for the completion of the previous transaction before it can continue to execute.

53.Do you understand MVCC? How is it achieved?

MVCC (Multi Version Concurrency Control), the Chinese name is multi-version concurrency control. Simply put, it solves the read consistency problem under concurrent access by maintaining historical versions of data. Regarding its implementation, we must grasp several key points, Implicit fields, undo logs, version chains, snapshot reading & current reading, and Read View.

Version chain

For the InnoDB storage engine, each row of records has two hidden columnsDB_TRX_ID, DB_ROLL_PTR

-

DB_TRX_ID, transaction ID, each time it is modified, the transaction ID will be copied to DB_TRX_ID;

-

DB_ROLL_PTR, rollback pointer , pointing to the undo log of the rollback segment.

Suppose there is a user table with only one row of records, and the transaction ID inserted at that time is 80. At this time, the sample picture of this record is as follows:

The next two DB_TRX_ID are 100, ## The transaction #200 performs an update operation on this record. The entire process is as follows:

Since each change will first Undo log is recorded and uses DB_ROLL_PTR to point to the undo log address. Therefore, it can be considered that the modification logs of this record are concatenated to form a version chain, and the head node of the version chain is the latest value of the current record. As follows:

ReadView

For Read Committed and Repeatable ReadIn terms of isolation level, you need to read the records of submitted transaction modifications. That is to say, if the modifications of a certain version in the version chain are not submitted, then the records of that version cannot be read. Therefore, it is necessary to determine which version in the version chain can be read by the current transaction under the Read Committed and Repeatable Read isolation levels. So the concept ReadView was introduced to solve this problem.

Read View is the read view generated when a transaction is executed Snapshot read, which is equivalent to a snapshot recorded in a certain schedule. Through this snapshot, we can obtain:

m_ids: Represents the transaction ID list of active read and write transactions in the current system when the ReadView is generated. - min_trx_id: Indicates the smallest transaction ID among the active read and write transactions in the current system when the ReadView is generated, that is, the smallest value in m_ids.

- max_trx_id: Indicates the id value that should be assigned to the next transaction in the system when generating ReadView.

- creator_trx_id: Indicates the transaction id of the transaction that generated the ReadView

-

With this ReadView, when accessing a certain record, you only need to follow the steps below to determine a certain part of the record. Whether the version is visible:

- If the DB_TRX_ID attribute value of the accessed version is the same as the creator_trx_id value in ReadView, it means that the current transaction is accessing its own modified records, so this version can be accessed by the current transaction.

- If the DB_TRX_ID attribute value of the accessed version is less than the min_trx_id value in ReadView, it indicates that the transaction that generated this version has been committed before the current transaction generates ReadView, so this version can be accessed by the current transaction.

- If the DB_TRX_ID attribute value of the accessed version is greater than the max_trx_id value in ReadView, it means that the transaction that generated this version was opened after the current transaction generated ReadView, so this version cannot be accessed by the current transaction.

- If the DB_TRX_ID attribute value of the accessed version is between the min_trx_id and max_trx_id of ReadView, then you need to determine whether the trx_id attribute value is in the m_ids list. If it is, it means that this version of the transaction was generated when the ReadView was created. If it is still active, this version cannot be accessed; if not, it means that the transaction that generated this version when the ReadView was created has been committed, and this version can be accessed.

If a certain version of data is not visible to the current transaction, then follow the version chain to find the next version of data, continue to follow the above steps to determine the visibility, and so on, until the version The last version in the chain. If the last version is not visible, it means that the record is completely invisible to the transaction, and the query result does not include the record.

In MySQL, a very big difference between READ COMMITTED and REPEATABLE READ isolation levels is that they generate ReadView at different times.

READ COMMITTED is Generate a ReadView before each time reading data, so that you can ensure that you can read the data submitted by other transactions every time; REPEATABLE READ is A ReadView is generated when the data is read for the first time, thus ensuring that the results of subsequent reads are completely consistent.

High availability/performance

54. Do you understand the separation of database read and write?

The basic principle of read and write separation is to disperse database read and write operations to different nodes. The following is the basic architecture diagram:

Read and write separation The basic implementation is:

- The database server builds a master-slave cluster, either one master and one slave, or one master and multiple slaves.

- The database host is responsible for read and write operations, and the slave is only responsible for read operations.

- The database host synchronizes data to the slave machine through replication, and each database server stores all business data.

- The business server sends write operations to the database host and read operations to the database slave.

55. How to realize the allocation of read and write separation?

To separate read and write operations, and then access different database servers, there are generally two ways: program code encapsulation and middleware encapsulation.

1. Program code encapsulation

Program code encapsulation refers to abstracting a data access layer in the code (so some articles also call this method "middle layer encapsulation" " ) to realize the separation of read and write operations and the management of database server connections. For example, simple encapsulation based on Hibernate can achieve read-write separation:

Among the current open source implementation solutions, Taobao's TDDL (Taobao Distributed Data Layer, nickname: header are all big) is relatively famous.

2. Middleware encapsulation

Middleware encapsulation refers to an independent system that realizes the separation of read and write operations and the management of database server connections. The middleware provides a SQL-compatible protocol to the business server, and the business server does not need to separate reading and writing by itself.

For the business server, there is no difference between accessing the middleware and accessing the database. In fact, from the perspective of the business server, the middleware is a database server.

The basic structure is:

56. Do you understand the principle of master-slave replication?

- Master data writing, update binlog

- master creates a dump thread to push binlog to slave

- When slave connects to master, it will create an IO thread to receive binlog, and record it to the relay log relay log

- slave starts a sql thread to read the relay log event and execute it on the slave to complete the synchronization

- slave records its own binglog

57. How to deal with master-slave synchronization delay?

Cause of master-slave synchronization delay

A server opens N links for clients to connect, so there will be large concurrent update operations, but there is only one thread to read the binlog from the server. When a certain SQL is executed on the slave server If it takes a little longer or because a certain SQL needs to lock the table, there will be a large backlog of SQL on the master server and it will not be synchronized to the slave server. This leads to master-slave inconsistency, that is, master-slave delay.

Solutions to master-slave synchronization delay

There are several common methods to solve master-slave replication delay:

For example, after the account registration is completed, the read operation to read the account when logging in is also sent to the main database server. This method is strongly bound to the business and has a greater intrusion and impact on the business. If a new programmer does not know how to write code in this way, it will cause a bug.

This is what is usually called "secondary reading", secondary reading It is not bound to the business and only needs to encapsulate the API accessed by the underlying database. The implementation cost is small. The disadvantage is that if there are many secondary reads, it will greatly increase the read operation pressure on the host. For example, if a hacker violently cracks an account, it will lead to a large number of secondary read operations. The host may not be able to withstand the pressure of read operations and collapse.

For example, for a user management system, All the read and write operations of registration and login business access the host. The user's introduction, love, level and other services can use read and write separation, because even if the user changes his self-introduction, he will see that the self-introduction is still the same when querying. Compared with being unable to log in, the business impact is much smaller and can be tolerated.

58. How do you usually divide the database?

- Vertical database splitting: Based on tables, different tables are split into different databases according to different business affiliations.

- Horizontal sub-database: Based on fields and according to certain strategies (hash, range, etc.), the data in one database is split into multiple in a library.

#59. How do you divide the tables?

- Horizontal table splitting: Split the data in one table into multiple tables based on fields and certain strategies (hash, range, etc.).

- Vertical table splitting: Based on the fields and according to the activity of the fields, the fields in the table are split into different tables (main table and extended table).

#60. What are the routing methods for horizontal table sharding?

What is routing? That is which table the data should be divided into.

There are three main routing methods for horizontal table sharding:

-

Range routing: Select ordered data columns (for example, shaping, timestamp, etc.) as routes According to the conditions, different segments are scattered into different database tables.

We can observe some payment systems and find that we can only check payment records within a year. This may be because the payment company has divided the tables according to time.

The complexity of range routing design is mainly reflected in the selection of segment size. If a segment is too small, it will lead to too many sub-tables after segmentation and increase maintenance complexity. ; Too large a segment may cause performance problems in a single table. It is generally recommended that the segment size be between 1 million and 20 million. The appropriate segment size needs to be selected based on the business.

The advantage of range routing is that new tables can be expanded smoothly as data increases. For example, if the current number of users is 1 million, if the number increases to 10 million, you only need to add a new table, and the original data does not need to be changed. A relatively implicit disadvantage of range routing is uneven distribution. If the table is divided into tables according to 10 million, it is possible that the actual amount of data stored in one segment is only 1,000, while the actual amount of data stored in another segment is 900. Ten thousand.

-

Hash routing: Select the value of a certain column (or a combination of certain columns) for Hash operation, and then disperse it to different database tables based on the Hash result.

Also taking the order id as an example, if we plan 4 database tables from the beginning, the routing algorithm can simply use the value of id % 4 to represent the database table number to which the data belongs, and the id is The order 12 is placed in the subtable numbered 50, and the order with id 13 is placed in the subtable numbered 61.

The complexity of Hash routing design is mainly reflected in the selection of the initial number of tables. Too many tables are troublesome to maintain, and too few tables may cause performance problems with a single table. After using Hash routing, it is very troublesome to increase the number of sub-tables, and all data must be redistributed. The advantages and disadvantages of Hash routing are basically opposite to those of range routing. The advantage of Hash routing is that the tables are relatively evenly distributed. The disadvantage is that it is troublesome to expand new tables and all data must be redistributed.

-

Configuring routing: Configuring routing is the routing table, using an independent table to record routing information. Taking the order id as an example, we add a new order_router table. This table contains two columns: orderjd and tablejd. The corresponding table_id can be queried based on orderjd.

The configuration routing design is simple and very flexible to use, especially when expanding the table. You only need to migrate the specified data and then modify the routing table.

The disadvantage of configuring routing is that it must be queried more than once, which will affect the overall performance; and if the routing table itself is too large (for example, hundreds of millions of data), the performance may also become Bottleneck: If we divide the routing table into databases and tables again, we will face an endless loop routing algorithm selection problem.

61. How to achieve capacity expansion without downtime?

In fact, expansion without downtime is a very troublesome and risky operation. Of course, the interview is much simpler to answer.

- ##The third stage: the old library goes offline

The old library no longer writes new data

62. Commonly used middleware for sub-library and table sub-database What?

62. Commonly used middleware for sub-library and table sub-database What?

sharding-jdbc

- Mycat

- 63. So what problems do you think will be caused by sub-database and sub-table?

From the perspective of sub-database:

Transaction issues

After the database is divided, single-machine transactions are no longer needed and must be solved using distributed transactions.

Cross-database JOIN problem

The solution at this time is to

correlate in the business code, that is, first check the data of one table, and then check another table through the obtained results, and then Use code to correlate to get the final result. This method is slightly more complicated to implement, but it is acceptable.

There are also some fields that can be

appropriately redundant. For example, the previous table stored a correlation ID, but the business often required the corresponding Name or other fields to be returned. At this time, these fields can be redundantly added to the current table to remove operations that require association. Another way is

Data heterogeneity, through binlog synchronization and other methods, isomerize the data that needs cross-database join into a storage structure such as ES, and query it through ES. From the perspective of sub-tables:

Cross-node count, order by, group by and aggregate function issues

Data migration, capacity planning, expansion and other issues

ID Problem

It’s still self-increasing, but the self-increasing step size is set. For example, there are three tables now, the step size is set to 3, and the initial ID values of the three tables are 1, 2, and 3 respectively. In this way, the ID growth of the first table is 1, 4, and 7. The second table is 2, 5, 8. The third table is 3, 6, 9, so there will be no duplication.

UUID, this is the simplest, but discontinuous primary key insertion will cause serious page splits and poor performance.

Distributed ID, the more famous one is Twitter’s open source sonwflake snowflake algorithm

Operation and maintenance

64. Million How to delete data above level?

About the index: Since the index requires additional maintenance costs, because the index file is a separate file, when we add, modify, or delete data, additional operations on the index file will occur. These operations Additional IO needs to be consumed, which will reduce the execution efficiency of addition/modification/deletion.

So, when we delete millions of data in the database, we consult the MySQL official manual and learn that the speed of deleting data is directly proportional to the number of indexes created.

So when we want to delete millions of data, we can first delete the index

and then delete the useless data

Re-create the index after the deletion is completed. Creating the index is also very fast.

65. How to add fields to a large table with millions of levels?

When the amount of online database data reaches millions or tens of millions, adding a field is not that simple because the table may be locked for a long time.

To add fields to a large table, there are usually these methods:

-

Convert through an intermediate table

Create a temporary new table and convert the old table Completely copy the structure, add fields, copy the old table data, delete the old table, and name the new table the name of the old table. This method may cause some data to be lost.

-

Use pt-online-schema-change

pt-online-schema-change is a tool developed by percona company, which can be used online The principle of modifying the table structure is also through intermediate tables.

-

First add it to the slave database and then perform the master-slave switch

If a table has a large amount of data and is a hot table (reading and writing are particularly frequent), you can consider first Add it to the slave database, then switch between master and slave, and then add fields to several other nodes after the switch.

66. What should I do if the MySQL database CPU surges?

Troubleshooting process:

(1) Use the top command to observe and determine whether it is caused by mysqld or other reasons.

(2) If it is caused by mysqld, show processlist, check the session status, and determine whether there is any resource-consuming SQL running.

(3) Find out the SQL with high consumption and see whether the execution plan is accurate, whether the index is missing, and whether the amount of data is too large.

Processing:

(1) Kill these threads (and observe whether the CPU usage decreases),

(2) Make corresponding adjustments (such as adding index, Change sql, change memory parameters)

(3) Rerun these SQLs.

Other situations:

It is also possible that each SQL statement does not consume much resources, but suddenly, a large number of sessions are connected, causing the CPU to surge. In this case, you need to contact the application. Let's analyze why the number of connections will increase sharply, and then make corresponding adjustments, such as limiting the number of connections, etc.

[Related recommendations: mysql video tutorial]