Linux data analysis tools include: 1. Hadoop, a software framework for distributed processing of large amounts of data; 2. Storm, which reliably processes huge data streams in real time, much as Hadoop processes data in batches; 3. RapidMiner, used for data mining and visual modeling; 4. wc, and others.

The operating environment of this tutorial: Linux 5.9.8 system, Dell G3 computer.

6 Linux big data processing and analysis tools

1. Hadoop

Hadoop is a software framework for distributed processing of large amounts of data, and it does this in a reliable, efficient, and scalable way.

Hadoop is reliable because it assumes that compute elements and storage will fail, so it maintains multiple copies of working data and can redistribute processing away from failed nodes.

Hadoop is efficient because it works in parallel, which speeds up processing.

Hadoop is also scalable and capable of processing petabytes of data. In addition, Hadoop runs on commodity servers, so it is relatively low-cost and can be used by anyone.

Hadoop is a distributed computing platform that is easy to set up and use. Users can readily develop and run applications that handle massive amounts of data on it. Its main advantages are:

High reliability. Hadoop's bit-level storage and processing of data can be trusted.

High scalability. Hadoop distributes data and completes computing tasks among available computer clusters, which can be easily expanded to thousands of nodes.

Efficiency. Hadoop can dynamically move data between nodes and ensure the dynamic balance of each node, so the processing speed is very fast.

High fault tolerance. Hadoop can automatically save multiple copies of data and automatically redistribute failed tasks.

Hadoop's framework is written in Java, so it is well suited to running on Linux production platforms. Applications on Hadoop can also be written in other languages, such as C++.
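The split, process, and combine pattern that Hadoop distributes across a cluster can be previewed on a single machine with ordinary pipes. The following is only a minimal sketch, not Hadoop itself: the sample text and the `mapper` and `reducer` function names are made up for illustration, and `sort` stands in for the step that groups identical keys before they are reduced.

```shell
#!/bin/sh
# Local word-count sketch of the map -> shuffle -> reduce data flow.
# Nothing here talks to a Hadoop cluster; it only mimics the pattern.

# Map: emit one "word<TAB>1" pair per word.
mapper() {
    tr -s ' ' '\n' | awk 'NF { print $1 "\t1" }'
}

# Reduce: sum the counts of each key (input must already be sorted by key).
reducer() {
    awk -F '\t' '
        $1 != key { if (key != "") print key "\t" total; key = $1; total = 0 }
        { total += $2 }
        END { if (key != "") print key "\t" total }'
}

# Hypothetical input; sort plays the role of the shuffle phase.
counts=$(printf 'big data big cluster\ndata big\n' | mapper | sort | reducer)
printf '%s\n' "$counts"
```

On a real cluster the map and reduce steps would run on many nodes at once; the pipe above simply makes the data flow visible.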

2. HPCC

HPCC is the abbreviation of High Performance Computing and Communications. In 1993, the U.S. Federal Coordinating Council for Science, Engineering, and Technology submitted a report to Congress on the "Grand Challenge Project: High Performance Computing and Communications", also known as the HPCC plan report, which describes a U.S. presidential science strategy project. Its purpose is to solve a number of important scientific and technological challenges by strengthening research and development. HPCC is the United States' plan for implementing the information highway, and carrying it out will cost tens of billions of dollars. Its main goals are to develop scalable computing systems and related software that support terabit-level network transmission performance, and to develop gigabit network technology that expands the network connectivity of research and educational institutions.

The project mainly consists of five parts:

High Performance Computing Systems (HPCS), including research on future generations of computer systems, system design tools, advanced prototype systems, and the evaluation of early systems;

Advanced Software Technology and Algorithms (ASTA), covering software support for the grand challenges, new algorithm design, software components and tools, and computational and high-performance computing research centers;

National Research and Education Network (NREN), including the research and development of intermediate stations and gigabit-level transmission;

Basic Research and Human Resources (BRHR), covering basic research, training, education, and curriculum materials. It is designed to increase the flow of innovative ideas by rewarding investigators for initial, long-term research in scalable high-performance computing, to enlarge the pool of skilled and trained personnel through improved education and training in high-performance computing and communications, and to provide the infrastructure needed to support these research activities;

Information Infrastructure Technology and Applications (IITA), which aims to ensure the United States' leadership in the development of advanced information technology.

3. Storm

Storm is free, open source software: a distributed, fault-tolerant real-time computing system. Storm handles huge data streams very reliably, doing for real-time processing what Hadoop does for batch processing. Storm is simple, supports many programming languages, and is fun to use. It was open sourced by Twitter; other well-known companies that use it include Groupon, Taobao, Alipay, Alibaba, Le Elements, and Admaster.

Storm has many application areas: real-time analytics, online machine learning, continuous computation, distributed RPC (remote procedure call, a way of requesting a service from a program on a remote computer over the network), ETL (short for Extraction-Transformation-Loading), and more. Storm is fast: in tests, each node processed one million tuples per second. Storm is also scalable, fault-tolerant, and easy to set up and operate.

4. Apache Drill

To help enterprise users find more effective ways to speed up Hadoop data queries, the Apache Software Foundation recently launched an open source project called "Drill". Apache Drill is an open source implementation of Google's Dremel.

According to Tomer Shiran, product manager at Hadoop vendor MapR Technologies, "Drill" has been run as an Apache Incubator project and will continue to be promoted to software engineers around the world.

This project will create an open source version of Google's Dremel tool (which Google uses to speed up Internet-scale data analysis applications). "Drill" will help Hadoop users query massive data sets faster.

The "Drill" project is inspired by Google's Dremel project, which helps Google analyze and process massive data sets. Its uses include analyzing crawled web documents, tracking installation data for applications on Android Market, analyzing spam, analyzing test results from Google's distributed build system, and more.

Through the open source Apache "Drill" project, organizations will be able to build on Drill's APIs and its flexible, powerful architecture to support a wide range of data sources, data formats, and query languages.

5. RapidMiner

RapidMiner is a world-leading data mining solution built on highly advanced technology. It covers a wide range of data mining tasks and techniques, and can simplify the design and evaluation of data mining processes.

Functions and features

Free data mining technology and libraries

100% Java code (runs across operating systems)

A data mining process that is simple, powerful, and intuitive

Internal XML that provides a standardized format for exchanging data mining processes

Large-scale processes can be automated with a simple scripting language

Multi-level data views that keep data valid and transparent

Graphical user interface for interactive prototyping

Command line (batch mode) for automated large-scale applications

Java API (application programming interface)

Simple plug-in and extension mechanism

Powerful visualization engine, with many cutting-edge visualizations for modeling high-dimensional data

More than 400 data mining operators

Under its earlier name, YALE, RapidMiner has been successfully used in many different application areas, including text mining, multimedia mining, feature engineering, data stream mining, the development of ensemble methods, and distributed data mining.

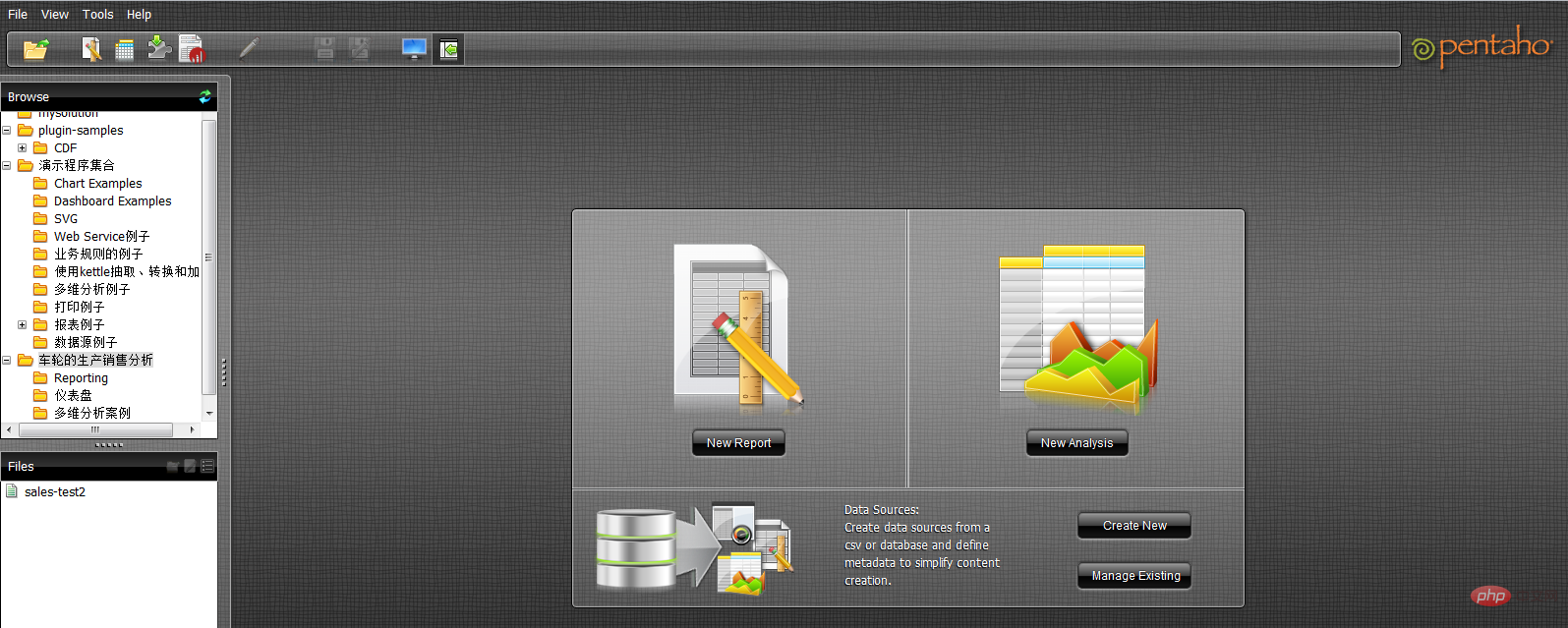

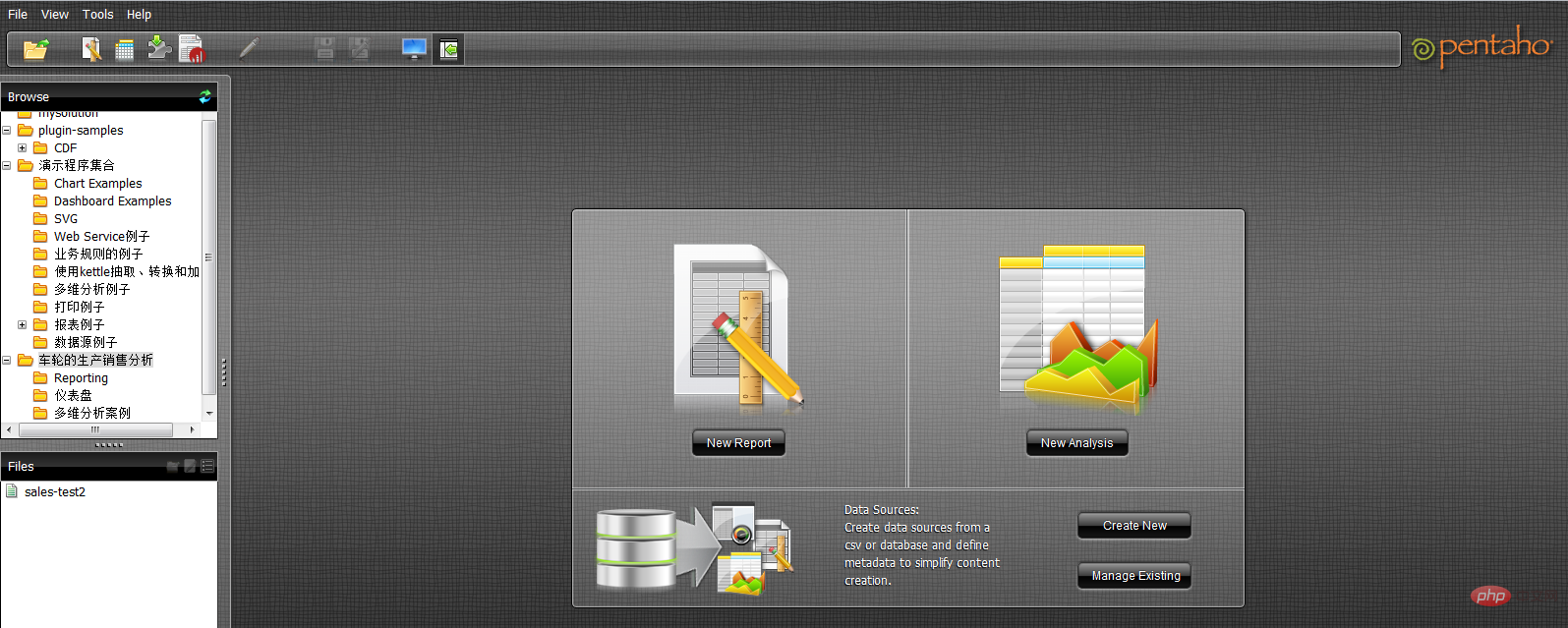

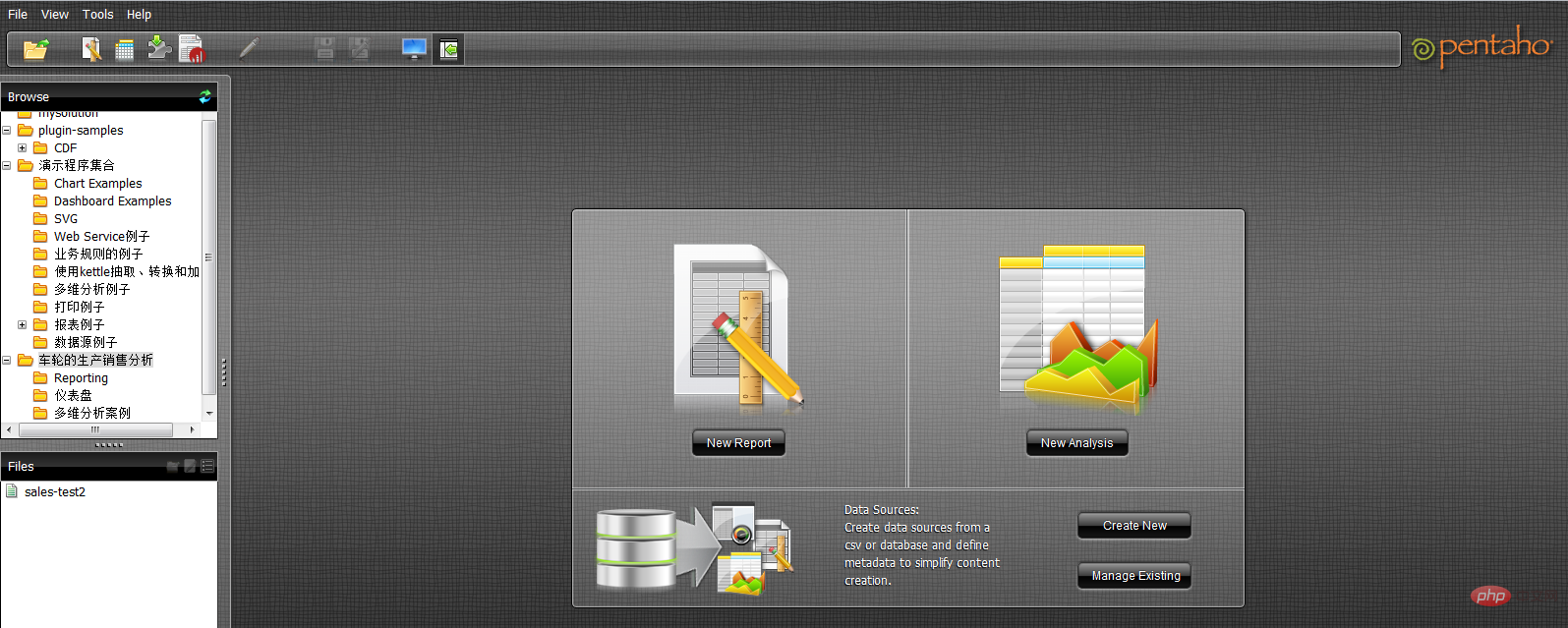

6. Pentaho BI

The Pentaho BI platform differs from traditional BI products: it is a process-centric, solution-oriented framework. Its purpose is to integrate a range of enterprise-level BI products, open source software, APIs, and other components to make it easier to develop business intelligence applications. With it, a number of independent business intelligence products, such as JFree and Quartz, can be integrated to form complex, complete business intelligence solutions.

Pentaho BI Platform, the core architecture and foundation of the Pentaho Open BI suite, is process-centric because its central controller is a workflow engine. The workflow engine uses process definitions to define the business intelligence processes that execute on the BI platform. Processes can be easily customized and new processes can be added. The BI platform includes components and reports to analyze the performance of these processes. Currently, Pentaho's main elements include report generation, analysis, data mining, workflow management, etc. These components are integrated into the Pentaho platform through technologies such as J2EE, WebService, SOAP, HTTP, Java, JavaScript, and Portals. Pentaho's distribution is mainly in the form of Pentaho SDK.

The Pentaho SDK contains five parts: the Pentaho platform, the Pentaho sample database, a standalone Pentaho platform, Pentaho solution examples, and a pre-configured Pentaho web server. The Pentaho platform is the most important part and contains the main body of the platform's source code. The Pentaho database provides data services for the normal operation of the platform, including configuration information and solution-related information; it is not strictly required and can be replaced by other database services through configuration. The standalone Pentaho platform is an example of the platform's standalone mode and demonstrates how to run the platform without an application server. The Pentaho solution example is an Eclipse project that demonstrates how to develop business intelligence solutions for the Pentaho platform.

Pentaho BI platform is built on a foundation of servers, engines and components. These provide the system's J2EE server, security, portal, workflow, rules engine, charting, collaboration, content management, data integration, analysis and modeling capabilities. Most of these components are standards-based and can be replaced with other products.

9 Linux data analysis command-line tools

1. head and tail

First, let's start with file handling. What is in the file? What is its format? You can use the cat command to display the file in the terminal, but that is clearly not suitable for long files.

This is where head and tail come in: they display a specified number of lines from the beginning or end of a file. If you do not specify a number of lines, 10 are displayed by default.

$ tail -n 3 jan2017articles.csv

02 Jan 2017,Article,Scott Nesbitt,3 tips for effectively using wikis for documentation,1,/article/17/1/tips-using-wiki-documentation,"Documentation, Wiki",710

02 Jan 2017,Article,Jen Wike Huger,The Opensource.com preview for January,0,/article/17/1/editorial-preview-january,,358

02 Jan 2017,Poll,Jason Baker,What is your open source New Year's resolution?,1,/poll/17/1/what-your-open-source-new-years-resolution,,186

In these last three lines I can find the date, author name, title, and some other information. However, without column headers, I don't know exactly what each column means. Let's look at the header of each column:

$ head -n 1 jan2017articles.csv

Post date,Content type,Author,Title,Comment count,Path,Tags,Word count

Now everything is clear: we can see the publication date, content type, author, title, comment count, path, tags, and word count for each article.
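To try head and tail without the original data set, a small stand-in file is enough. The sketch below assumes a hypothetical `sample.csv` built from the header and the three rows quoted in this section; it also shows that the two commands can be chained to pick out one specific line.

```shell
#!/bin/sh
# Build a tiny stand-in for jan2017articles.csv in a temporary directory.
workdir=$(mktemp -d)
cat > "$workdir/sample.csv" <<'EOF'
Post date,Content type,Author,Title,Comment count,Path,Tags,Word count
02 Jan 2017,Article,Scott Nesbitt,3 tips for effectively using wikis for documentation,1,/article/17/1/tips-using-wiki-documentation,"Documentation, Wiki",710
02 Jan 2017,Article,Jen Wike Huger,The Opensource.com preview for January,0,/article/17/1/editorial-preview-january,,358
02 Jan 2017,Poll,Jason Baker,What is your open source New Year's resolution?,1,/poll/17/1/what-your-open-source-new-years-resolution,,186
EOF

# First line only: the column headers.
header=$(head -n 1 "$workdir/sample.csv")
# Last line only: the final record.
last=$(tail -n 1 "$workdir/sample.csv")
# Chain both to pick one line: keep the first two lines, then the last of those.
second=$(head -n 2 "$workdir/sample.csv" | tail -n 1)

printf 'header: %s\nsecond: %s\nlast:   %s\n' "$header" "$second" "$last"
rm -r "$workdir"
```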

2. wc

But what if you need to analyze hundreds or even thousands of articles? This is where the wc command comes in; its name is short for "word count". wc can count the bytes, characters, words, or lines of a file. In this example, we want to know the number of lines in our article list.

$ wc -l jan2017articles.csv

93 jan2017articles.csv

The file has 93 lines in total. Since the first line is the header row, we can infer that the file lists 92 articles.
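Subtracting the header by hand can be avoided by skipping it before counting. The following sketch uses a hypothetical four-line file (one header plus three records) and also shows the word-counting mode of wc.

```shell
#!/bin/sh
# Hypothetical four-line file: one header plus three records.
workdir=$(mktemp -d)
printf 'Author,Word count\nScott Nesbitt,710\nJen Wike Huger,358\nJason Baker,186\n' > "$workdir/sample.csv"

# Reading from stdin keeps the file name out of wc's output.
total_lines=$(wc -l < "$workdir/sample.csv")
# tail -n +2 starts at line 2, so the header is not counted.
records=$(tail -n +2 "$workdir/sample.csv" | wc -l)
# -w counts whitespace-separated words instead of lines.
words=$(wc -w < "$workdir/sample.csv")

echo "lines=$total_lines records=$records words=$words"
rm -r "$workdir"
```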

3. grep

Now a new question: how many of these articles are about security? For our purposes, let's assume that relevant articles mention the word security somewhere in the title, the tags, or elsewhere. The grep tool can search a file for specific characters or for other search patterns. It is an extremely powerful tool, because regular expressions let us build very precise matching patterns. Here, though, we only need to find a simple string.

$ grep -i "security" jan2017articles.csv

30 Jan 2017,Article,Tiberius Hefflin,4 ways to improve your security online right now,3,/article/17/1/4-ways-improve-your-online-security,Security and encryption,1242

28 Jan 2017,Article,Subhashish Panigrahi,How communities in India support privacy and software freedom,0,/article/17/1/how-communities-india-support-privacy-software-freedom,Security and encryption,453

27 Jan 2017,Article,Alan Smithee,Data Privacy Day 2017: Solutions for everyday privacy,5,/article/17/1/every-day-privacy,"Big data, Security and encryption",1424

04 Jan 2017,Article,Daniel J Walsh,50 ways to avoid getting hacked in 2017,14,/article/17/1/yearbook-50-ways-avoid-getting-hacked,"Yearbook, 2016 Open Source Yearbook, Security and encryption, Containers, Docker, Linux",2143

The format is grep, followed by the -i flag (which tells grep to ignore case), then the pattern we want to search for, and finally the file we are searching in. We found 4 security-related articles. If we only need the count rather than the matching lines themselves, we can use a pipe to combine grep with the wc command and find out how many lines mention security.

$ grep -i "security" jan2017articles.csv | wc -l

4

Here, wc takes the output of the grep command as its input. Clearly, this kind of combination, together with a little shell scripting, quickly turns the terminal into a powerful data analysis tool.
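grep can also do the counting itself. The sketch below, run on a hypothetical three-record sample, uses the standard `-c` option to print the number of matching lines and `-v` to invert the match; it also shows how much the `-i` flag matters for this data.

```shell
#!/bin/sh
# Hypothetical sample: titles and tags only, with mixed capitalisation.
workdir=$(mktemp -d)
cat > "$workdir/sample.csv" <<'EOF'
Title,Tags
4 ways to improve your security online right now,Security and encryption
How to write web apps in R with Shiny,Web development
50 ways to avoid getting hacked in 2017,"Yearbook, Security and encryption"
EOF

# -c prints the number of matching lines, so no pipe to wc is needed.
matches=$(grep -ic "security" "$workdir/sample.csv")
# Without -i the match is case-sensitive: only lowercase "security" counts.
strict=$(grep -c "security" "$workdir/sample.csv")
# -v inverts the match: lines that do not mention security (header included).
others=$(grep -ivc "security" "$workdir/sample.csv")

echo "case-insensitive=$matches case-sensitive=$strict non-matching=$others"
rm -r "$workdir"
```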

4. tr

CSV files suit most analysis scenarios, but what if you need to convert one to another format for use in a different application? For example, you might want to turn it into HTML so the data can be used in a table. The tr command can help here: it translates one set of characters into another. As before, you can use pipes to connect its input and output.

Next, let's try another multi-part example: creating a TSV (tab-separated values) file that contains only the articles published on January 20.

$ grep "20 Jan 2017" jan2017articles.csv | tr ',' '\t' > jan20only.tsv

First, we use grep to query by date. We pipe the result to the tr command, which replaces every comma with a tab (written as '\t'). But where does the result go? Here we use the > character to send the output to a new file instead of the screen. We can then confirm that the jan20only.tsv file contains the expected data.

$ cat jan20only.tsv

20 Jan 2017 Article Kushal Das 5 ways to expand your project's contributor base 2 /article/17/1/expand-project-contributor-base Getting started 690

20 Jan 2017 Article D Ruth Bavousett How to write web apps in R with Shiny 2 /article/17/1/writing-new-web-apps-shiny Web development 218

20 Jan 2017 Article Jason Baker "Top 5: Shell scripting the Cinnamon Linux desktop environment and more" 0 /article/17/1/top-5-january-20 Top 5 214

20 Jan 2017 Article Tracy Miranda How is your community promoting diversity? 1 /article/17/1/take-action-diversity-tech Diversity and inclusion 1007
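One caveat is worth checking before relying on this conversion: tr works character by character and knows nothing about CSV quoting, so a comma inside a quoted field is turned into a tab as well. The sketch below counts the resulting fields for two sample rows; the shortened rows are made up for illustration.

```shell
#!/bin/sh
# One row without quoted fields, one row with a comma inside quotes.
plain='20 Jan 2017,Article,Kushal Das,690'
quoted='02 Jan 2017,Article,Scott Nesbitt,"Documentation, Wiki",710'

# Convert commas to tabs, then let awk report the number of tab-separated fields.
plain_fields=$(printf '%s\n' "$plain" | tr ',' '\t' | awk -F '\t' '{ print NF }')
quoted_fields=$(printf '%s\n' "$quoted" | tr ',' '\t' | awk -F '\t' '{ print NF }')

echo "plain row:  $plain_fields fields"
echo "quoted row: $quoted_fields fields (the quoted tag list was split in two)"
```

For data with quoted commas, a CSV-aware tool is the safer choice; for simple rows, tr is enough.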

5. sort

What if we want to learn more about a particular column? Suppose we want to know which article in our new list is the longest. Starting from the list of January 20 articles obtained earlier, we can use the sort command to sort by the word count column. Strictly speaking, we do not need the intermediate file here and could have kept piping, but breaking a long chain of commands into shorter steps often makes the whole job simpler.

$ sort -nr -t$'\t' -k8 jan20only.tsv | head -n 1

20 Jan 2017 Article Tracy Miranda How is your community promoting diversity? 1 /article/17/1/take-action-diversity-tech Diversity and inclusion 1007

The above is one long command, so let's break it down. First, we use the sort command to sort by word count. The -nr option tells sort to sort numerically and to reverse the result (largest first). The -t$'\t' that follows tells sort that the delimiter is a tab ('\t'). The $ tells the shell that this string needs processing, so that \t is turned into a tab character. The -k8 part tells sort to use the eighth column, which is the word count column in this example.

Finally, the output is piped to head, which leaves us with the article in this file that has the highest word count.
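The effect of the numeric flag is easy to see on a small sample. The sketch below uses a hypothetical three-column TSV and obtains the tab through `printf`, an alternative for shells that do not support the `$'\t'` form; `-k3,3` limits the sort key to the third column only.

```shell
#!/bin/sh
# Portable way to get a literal tab character.
tab=$(printf '\t')
workdir=$(mktemp -d)
# Hypothetical three-column TSV: author, title, word count.
printf 'Kushal Das\tContributor base\t690\nD Ruth Bavousett\tShiny apps\t218\nTracy Miranda\tDiversity\t1007\n' > "$workdir/sample.tsv"

# -k3,3 restricts the key to column 3; n = numeric, r = reverse (largest first).
longest=$(sort -t "$tab" -k3,3nr "$workdir/sample.tsv" | head -n 1)
# Without n the comparison is textual, so "690" ranks above "1007".
textual=$(sort -t "$tab" -k3,3r "$workdir/sample.tsv" | head -n 1)

echo "numeric: $longest"
echo "textual: $textual"
rm -r "$workdir"
```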

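6. sed

You may also need to select specific lines in a file, and sed can do this. If you want to merge several files that each contain a header and keep only one set of headers for the combined file, you need to strip out the extra ones; if you want to extract only a specific range of lines, sed can do that as well. In addition, sed handles bulk find-and-replace tasks well.

Below, we create a new file without the header from the earlier article list, so it can be merged with other files (for example, if we generate such a file every month and now need to combine the months).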

$ sed '1 d' jan2017articles.csv > jan17no_headers.csv

The '1 d' option tells sed to delete the first line.
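The three uses of sed mentioned in this section (dropping headers before a merge, extracting a line range, and find-and-replace) can be sketched together. The two monthly files below are hypothetical and exist only to make the example runnable.

```shell
#!/bin/sh
# Two hypothetical monthly exports that share the same header line.
workdir=$(mktemp -d)
printf 'Author,Word count\nScott Nesbitt,710\n' > "$workdir/jan.csv"
printf 'Author,Word count\nJason Baker,186\nTracy Miranda,1007\n' > "$workdir/feb.csv"

{
    # Keep the header once, taken from the first file.
    head -n 1 "$workdir/jan.csv"
    # Then append each file with its own header (line 1) deleted.
    for f in "$workdir/jan.csv" "$workdir/feb.csv"; do
        sed '1d' "$f"
    done
} > "$workdir/merged.csv"

# -n suppresses normal output; '2,3p' prints only lines 2 to 3.
middle=$(sed -n '2,3p' "$workdir/merged.csv")
# s/old/new/ replaces the first match on each line (here comma -> semicolon).
replaced=$(sed 's/,/;/' "$workdir/merged.csv" | head -n 1)

merged_lines=$(wc -l < "$workdir/merged.csv")
echo "merged file has $merged_lines lines"
rm -r "$workdir"
```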

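7. cut

Now that we know how to delete rows, how do we delete columns? Or, put another way, how do we select just one column? Below, we create a new list of authors from the list generated earlier.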

$ cut -d',' -f3 jan17no_headers.csv > authors.txt

Here, we call cut with -d',' to set the comma as the delimiter, ask for the third column (-f3), and send the result to a new file named authors.txt.
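The -f option also accepts lists and ranges of columns. A minimal sketch on a single row taken from the sample data:

```shell
#!/bin/sh
row='20 Jan 2017,Article,Tracy Miranda,How is your community promoting diversity?,1,/article/17/1/take-action-diversity-tech,Diversity and inclusion,1007'

# A single field: the author (column 3).
author=$(printf '%s\n' "$row" | cut -d',' -f3)
# Several fields at once: author and word count (columns 3 and 8).
author_words=$(printf '%s\n' "$row" | cut -d',' -f3,8)
# A range: columns 1 through 3.
first_three=$(printf '%s\n' "$row" | cut -d',' -f1-3)

echo "$author"
echo "$author_words"
echo "$first_three"
```

Like tr, cut splits on every delimiter character, so rows with commas inside quoted fields need a CSV-aware tool instead.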

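8. uniq

The author list is done, but how do we find out how many different authors it contains, and how many articles each of them wrote? This is where uniq comes in. Below, we sort the file, find the unique values, count the articles for each author, and write the result to a new file.

$ sort authors.txt | uniq -c > authors-sorted.txt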

Now we can see the article count for each author. Let's check the last three lines to make sure the result is correct.

$ tail -n3 authors-sorted.txt

1 Tracy Miranda

1 Veer Muchandi

3 VM (Vicky) Brasseur
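A second sort on the counts turns this into a ranking. The sketch below uses a hypothetical six-line author list; the initial sort is needed because uniq only collapses duplicates that sit on adjacent lines.

```shell
#!/bin/sh
# Hypothetical author list, one name per article.
workdir=$(mktemp -d)
printf 'Jason Baker\nTracy Miranda\nJason Baker\nScott Nesbitt\nJason Baker\nTracy Miranda\n' > "$workdir/authors.txt"

# Group identical names, count them, then order the counts largest first.
top=$(sort "$workdir/authors.txt" | uniq -c | sort -nr | head -n 1)
# sort -u keeps one copy of each name, so wc -l gives the number of authors.
distinct=$(sort -u "$workdir/authors.txt" | wc -l)

echo "most prolific: $top"
echo "distinct authors: $distinct"
rm -r "$workdir"
```

Note that redirecting the output of such a pipeline into the same file that sort is reading would empty that file before it is read, which is why the result belongs in a separate file.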

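9. awk

Finally, let's look at one last tool, awk. awk is an excellent replacement tool, though it can do far more than that. Let's return to the TSV file of articles from January 20 and use awk to create a new list showing the author of each article and its word count.

$ awk -F "\t" '{print $3 "  " $NF}' jan20only.tsv

Kushal Das  690

D Ruth Bavousett  218

Jason Baker  214

Tracy Miranda  1007

Here, -F "\t" tells awk that it is working with tab-separated data. Inside the braces, we give awk the code to execute. $3 asks it to print the third field, and $NF the last field (NF is short for "number of fields"), with two spaces between them to separate the two values clearly.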
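Because awk is a small programming language, it can also aggregate while it reads. The following sketch, on a hypothetical four-column TSV, sums the last field per author with an associative array and computes a grand total; the array name `words` is chosen only for illustration.

```shell
#!/bin/sh
# Hypothetical TSV: date, type, author, word count.
workdir=$(mktemp -d)
printf '20 Jan 2017\tArticle\tJason Baker\t214\n21 Jan 2017\tArticle\tTracy Miranda\t1007\n22 Jan 2017\tPoll\tJason Baker\t186\n' > "$workdir/sample.tsv"

# Accumulate the last field ($NF) per author ($3), then print the totals.
# The order of "for (a in words)" is unspecified, hence the final sort.
totals=$(awk -F '\t' '
    { words[$3] += $NF }
    END { for (a in words) print a "\t" words[a] }
' "$workdir/sample.tsv" | sort)

# Grand total across all rows.
grand=$(awk -F '\t' '{ sum += $NF } END { print sum }' "$workdir/sample.tsv")

printf '%s\n' "$totals"
echo "total words: $grand"
rm -r "$workdir"
```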

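Although the examples here are small and may not seem to need these tools, scale the input up to a file of 93,000 lines and it clearly becomes hard to handle in a spreadsheet program.

With these simple tools and small scripts, you can avoid database tools and still get a great deal of data analysis done with ease. Whether you are a professional or a hobbyist, their usefulness should not be overlooked.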
