What is a stream, and how should you understand it? This article takes an in-depth look at streams (Stream) in Node.js. I hope it helps!

I have used the pipe function a lot in development recently. I knew it was a stream pipe, but not how it works, so I wanted to find out. I started by learning about streams themselves, and compiled what I learned, along with the source code I read, into this article to share with everyone.

Streams are a very basic concept in Node.js. Many core modules are built on streams, and streams play a very important role. At the same time, streams are a hard concept to understand, mainly because related documentation is lacking; Node.js beginners often spend a lot of time before they truly master them. Fortunately, most Node.js users only use it to develop web applications, and an incomplete understanding of streams does not get in their way. Still, understanding streams leads to a better understanding of other Node.js modules, and in some cases processing data with streams gives better results.

For users of streams, you can think of a stream as an array: we only need to care about getting data out of it (consuming) and putting data into it (producing).

For stream developers (people who use the stream module to create new stream instances), the focus is on implementing certain methods of the stream. They usually care about two things: what the target resource is, and how to operate on it. Once the target resource is determined, it has to be operated on according to the stream's different states and events.

All streams in Node.js have a buffer pool. Its purpose is to make streams more efficient: when producing and consuming data both take time, data can be produced ahead of time and stored in the buffer pool before the next consumption. The buffer pool is not always used, though. For example, when the buffer pool is empty, newly produced data is not put into it but consumed directly.

If data is produced faster than it is consumed, the excess data waits somewhere. If data is produced more slowly than it is consumed, data accumulates somewhere until there is a certain amount, and then it is consumed. (Developers cannot control how fast data is produced and consumed; they can only try to produce or consume data at the right time.)

The place where data waits and accumulates before it is consumed is the buffer pool. The buffer pool usually lives in the computer's RAM (memory).

A common example of buffering is watching videos online. If your connection is fast, the buffer is always refilled immediately, its contents are handed to the system for playback, and the next part of the video is buffered right away, so playback never stalls. If your connection is slow, you see a loading indicator, meaning the buffer is being filled; once it is full, the data is handed to the system and the video plays.

The buffer pool of a Node.js stream is a linked list of Buffers. Each time data is added to the buffer pool, a new Buffer node is created and appended to the end of the list.
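To make the linked-list idea concrete, here is a minimal sketch of such a buffer list. The class `SimpleBufferList` and its methods are hypothetical, loosely modeled on the idea described above; it is not Node.js's internal implementation or API.

```javascript
// Hypothetical buffer list: push() appends a node at the tail, shift() takes from the head.
class SimpleBufferList {
  constructor() {
    this.head = null;
    this.tail = null;
    this.length = 0;     // number of nodes
    this.byteLength = 0; // total bytes across all nodes
  }

  // Create a new node for the chunk and append it to the end of the list.
  push(chunk) {
    const node = { data: Buffer.from(chunk), next: null };
    if (this.tail) this.tail.next = node;
    else this.head = node;
    this.tail = node;
    this.length++;
    this.byteLength += node.data.length;
  }

  // Remove and return the chunk at the head of the list, or null if it is empty.
  shift() {
    if (!this.head) return null;
    const { data } = this.head;
    this.head = this.head.next;
    if (!this.head) this.tail = null;
    this.length--;
    this.byteLength -= data.length;
    return data;
  }
}

const list = new SimpleBufferList();
list.push('abc');
list.push('de');
console.log(list.length, list.byteLength); // 2 5
console.log(list.shift().toString());      // abc
```

Appending at the tail and consuming from the head keeps chunks in arrival order, and both operations take constant time.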

A stream in Node.js is an abstract interface built on EventEmitter (every stream is an EventEmitter), so let me briefly introduce EventEmitter first.

EventEmitter is a class that implements event publishing and subscribing. Its commonly used methods (on, once, off, emit) should be familiar to everyone, so I won't introduce them one by one.

```javascript
const { EventEmitter } = require('events');

const eventEmitter = new EventEmitter();

// Bind a handler to eventA
eventEmitter.on('eventA', () => {
  console.log('eventA active 1');
});

// Bind a handler to eventB
eventEmitter.on('eventB', () => {
  console.log('eventB active 1');
});

// Bind a one-time handler to eventA
eventEmitter.once('eventA', () => {
  console.log('eventA active 2');
});

// Emit eventA
eventEmitter.emit('eventA');
// eventA active 1
// eventA active 2
```

Note that EventEmitter has two special events, newListener and removeListener. Whenever a listener is added to an emitter, newListener is emitted (as if eventEmitter.emit('newListener', eventName, listener) were called) just before the listener is added. Likewise, removeListener is emitted when a listener is removed.

Also note that a listener bound with once runs only once, and removeListener is emitted before it runs. In other words, a listener bound with once is removed first and only then called.

```javascript
const { EventEmitter } = require('events');

const eventEmitter = new EventEmitter();

eventEmitter.on('newListener', (event, listener) => {
  console.log('newListener', event, listener);
});

eventEmitter.on('removeListener', (event, listener) => {
  console.log('removeListener', event, listener);
});
// newListener removeListener [Function (anonymous)]

eventEmitter.on('eventA', () => {
  console.log('eventA active 1');
});
// newListener eventA [Function (anonymous)]

function listenerB() {
  console.log('eventB active 1');
}
eventEmitter.on('eventB', listenerB);
// newListener eventB [Function: listenerB]

eventEmitter.once('eventA', () => {
  console.log('eventA active 2');
});
// newListener eventA [Function (anonymous)]

eventEmitter.emit('eventA');
// eventA active 1
// removeListener eventA [Function: bound onceWrapper] { listener: [Function (anonymous)] }
// eventA active 2

eventEmitter.off('eventB', listenerB);
// removeListener eventB [Function: listenerB]
```

That said, these details are not important for the rest of this article.

Stream is the abstract interface for handling streaming data in Node.js. Strictly speaking, Stream is not a concrete interface but an umbrella term for all streams. In Node.js's TypeScript type definitions (the NodeJS namespace in @types/node), the concrete interfaces are ReadableStream, WritableStream, and ReadWriteStream.

```typescript
interface ReadableStream extends EventEmitter {
  readable: boolean;
  read(size?: number): string | Buffer;
  setEncoding(encoding: BufferEncoding): this;
  pause(): this;
  resume(): this;
  isPaused(): boolean;
  pipe<T extends WritableStream>(destination: T, options?: { end?: boolean | undefined; }): T;
  unpipe(destination?: WritableStream): this;
  unshift(chunk: string | Uint8Array, encoding?: BufferEncoding): void;
  wrap(oldStream: ReadableStream): this;
  [Symbol.asyncIterator](): AsyncIterableIterator<string | Buffer>;
}

interface WritableStream extends EventEmitter {
  writable: boolean;
  write(buffer: Uint8Array | string, cb?: (err?: Error | null) => void): boolean;
  write(str: string, encoding?: BufferEncoding, cb?: (err?: Error | null) => void): boolean;
  end(cb?: () => void): this;
  end(data: string | Uint8Array, cb?: () => void): this;
  end(str: string, encoding?: BufferEncoding, cb?: () => void): this;
}

interface ReadWriteStream extends ReadableStream, WritableStream { }
```

As you can see, ReadableStream and WritableStream are both interfaces that extend EventEmitter. (A TypeScript interface can extend a class, because it only merges in the class's type, not its implementation.)

The classes that implement these interfaces are Readable, Writable, and Duplex.

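There are four fundamental types of streams in Node.js:

- Writable: streams to which data can be written (for example, fs.createWriteStream()).
- Readable: streams from which data can be read (for example, fs.createReadStream()).
- Duplex: streams that are both Readable and Writable (for example, net.Socket).
- Transform: Duplex streams that can modify or transform the data as it is written and read (for example, zlib.createDeflate()).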

Disks write data much more slowly than memory does. Imagine a "pipe" between memory and disk; that pipe is the stream. Data in memory flows into the pipe very quickly. When the pipe is full, backpressure builds up: data backs up in memory and occupies resources.

Node.js streams solve this by giving each stream's buffer pool (that is, the write queue in the figure) a threshold called highWaterMark. Once adding data brings the amount in the buffer pool up to this value, the call that added it (push() on a readable stream, write() on a writable stream) returns false, meaning the buffer pool has reached its highWaterMark and no more data should be written for now. At that point we should stop producing data immediately, to avoid the backpressure caused by an ever-growing buffer pool.
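The highWaterMark check can be observed directly. In this minimal sketch (the 10-byte limit and the chunk contents are arbitrary), nothing consumes the data, so push() on a readable and write() on a writable both return false as soon as the buffered byte count reaches highWaterMark.

```javascript
const { Readable, Writable } = require('stream');

// Readable side: no consumer is attached, so pushed data stays in the buffer.
const readable = new Readable({ highWaterMark: 10, read() {} });
const pushResults = [
  readable.push('12345'), // 5 bytes buffered  -> true
  readable.push('67890'), // 10 bytes buffered -> false (limit reached)
];

// Writable side: the destination never finishes a write (callback is never
// called), so later chunks pile up in the write queue.
const writable = new Writable({
  highWaterMark: 10,
  write(chunk, encoding, callback) {
    // Intentionally never call callback to simulate a stalled destination.
  },
});
const writeResults = [
  writable.write('12345'), // 5 bytes pending  -> true
  writable.write('67890'), // 10 bytes pending -> false
];

console.log(pushResults, writeResults); // [ true, false ] [ true, false ]
```

The false return value is only a signal: the data is still accepted and buffered, so it is up to the producer to stop.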

A readable stream (Readable) is one type of stream. It has two reading modes and three states.

Two reading modes:

- Flowing mode: data is read from the underlying system into the buffer and automatically passed, as quickly as possible, to the registered handlers through EventEmitter ('data' events).
- Paused mode: the stream does not emit data on its own; readable.read() must be called explicitly to read data from the buffer, and each read() that returns data emits a 'data' event.

Three states:

- readableFlowing === null (initial state)
- readableFlowing === false (paused mode)
- readableFlowing === true (flowing mode)

Initially, readable.readableFlowing is null. It becomes true once a 'data' listener is added.

When pause() or unpipe() is called, when backpressure is received, or when a 'readable' listener is added, readableFlowing is set to false. In this state, attaching a listener to the 'data' event does not switch readableFlowing to true.

Calling resume() switches readableFlowing of the readable stream to true.

Removing all 'readable' listeners is the only way to make readableFlowing null again (provided no 'data' listeners remain).
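These transitions can be checked synchronously. This minimal sketch uses a Readable whose read() is a no-op, so it never produces data; only the value of readableFlowing matters here.

```javascript
const { Readable } = require('stream');

const stream = new Readable({ read() {} }); // no-op source, for illustration only
const states = [];

states.push(stream.readableFlowing); // null: no consumer yet
stream.on('data', () => {});
states.push(stream.readableFlowing); // true: adding a 'data' listener starts flowing
stream.pause();
states.push(stream.readableFlowing); // false: explicitly paused
stream.on('data', () => {});
states.push(stream.readableFlowing); // still false: a new 'data' listener does not resume
stream.resume();
states.push(stream.readableFlowing); // true again

console.log(states); // [ null, true, false, false, true ]
```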

| Event name | Description |
|---|---|
| readable | Triggered when there is new readable data in the buffer (each time a node is inserted into the buffer pool) |
| data | Triggered each time data is consumed; the argument is the data consumed this time |
| close | Triggered when the stream is closed |
| error | Triggered when an error occurs in the stream |

```javascript
const fs = require('fs');

const readStreams = fs.createReadStream('./EventEmitter.js', {
  highWaterMark: 100, // highWaterMark of the buffer pool
});

readStreams.on('readable', () => {
  console.log('buffer filled');
  readStreams.read(); // consumes all data in the buffer pool, returns it, and emits 'data'
});

readStreams.on('data', (data) => {
  console.log('data');
});
```

https://github1s.com/nodejs/node/blob/v16.14.0/lib/internal/streams/readable.js#L527

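When size is 0, read() returns no data; instead, if the buffer has reached highWaterMark (or the stream has ended), the readable event is triggered.

Once the data in the buffer pool reaches highWaterMark, the stream stops requesting new data on its own and waits until data has been consumed before producing more.

If a stream in paused mode does not call read to consume data, data and readable will not be triggered again. When read is called, the stream first checks whether the amount left after this consumption would fall below highWaterMark; if so, it requests new data before consuming. As a result, by the time the logic after read has finished, the new data has most likely been produced already, and readable fires again. This mechanism of producing the data for the next consumption in advance and storing it in the buffer pool is why buffered streams are fast.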
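The "request before consume" behavior can be seen by logging when _read runs. In this minimal sketch (the 4-byte highWaterMark is arbitrary, and the source never actually produces data), the buffer holds 4 bytes; read(2) would leave 2 bytes, which is below highWaterMark, so _read is called before the data is returned.

```javascript
const { Readable } = require('stream');

const log = [];
const stream = new Readable({
  highWaterMark: 4,
  read() {
    log.push('_read'); // a real source would push() more data here, usually asynchronously
  },
});

stream.push('abcd'); // fill the buffer up to highWaterMark (paused mode, no consumer)
log.push('before read');
const chunk = stream.read(2); // 4 - 2 < highWaterMark, so more data is requested first
log.push('after read');

console.log(log);              // [ 'before read', '_read', 'after read' ]
console.log(chunk.toString()); // ab
```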

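A stream in flowing mode handles newly produced data in one of two ways. The only difference is whether the buffer pool still holds data when the new data is produced: if it does, the new data is pushed into the buffer pool to wait for consumption; if it does not, the data is handed directly to the data event without entering the buffer pool.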

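Note that when a stream whose buffer pool holds data switches from paused mode to flowing mode, it first calls read in a loop to consume the buffered data until read returns null.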
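A minimal sketch of that switch: three chunks are pushed while no consumer is attached, so they sit in the buffer pool as separate nodes. Attaching a 'data' listener schedules the switch to flowing mode for the next tick, after which the buffered chunks are read out one node at a time. The chunk contents are arbitrary.

```javascript
const { Readable } = require('stream');

const stream = new Readable({ read() {} }); // no-op source; data is pushed manually
stream.push('a');
stream.push('b');
stream.push('c'); // three buffered nodes, readableFlowing is still null

const received = [];
stream.on('data', (chunk) => received.push(chunk.toString()));

// Nothing has been delivered yet: flowing starts on the next tick.
console.log(received.length); // 0

setImmediate(() => {
  console.log(received); // [ 'a', 'b', 'c' ]
});
```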

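In paused mode: a newly created readable stream starts in paused mode. Once reading is requested (for example, when a 'readable' listener is attached), the _read method is called to push data from the data source into the buffer pool, until the data in the buffer pool reaches highWaterMark. When data is ready, the readable stream emits a 'readable' event to tell the consumer that it can consume it.

In general, the 'readable' event means something new has happened on the stream: either new data is available, or the end of the stream has been reached. So 'readable' is also emitted once after all the data from the source has been read, before the 'end' event.

In its 'readable' handler, the consumer actively consumes data from the buffer pool by calling stream.read(size).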

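```javascript
const { Readable } = require('stream');

let count = 1000;
const myReadable = new Readable({
  highWaterMark: 300,
  // The read option becomes the stream's _read method, which fetches data from the source
  read(size) {
    // Assume our source data is 1000 '1' characters
    let chunk = null;
    // Reading from the source is usually asynchronous, e.g. an I/O operation
    setTimeout(() => {
      if (count > 0) {
        const chunkLength = Math.min(count, size);
        chunk = '1'.repeat(chunkLength);
        count -= chunkLength;
      }
      this.push(chunk); // pushing null signals the end of the data
    }, 500);
  },
});

// 'readable' fires each time data is successfully pushed into the buffer pool
myReadable.on('readable', () => {
  let chunk;
  // read() consumes all data currently in the buffer pool; it returns null when the
  // buffer is empty (including the final 'readable' at the end of the stream)
  while ((chunk = myReadable.read()) !== null) {
    console.log(chunk.toString());
  }
});
```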

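Note that if the size passed to read(size) is greater than highWaterMark, a new highWaterMark is computed: the smallest power of two that is greater than or equal to size (that is, 2^n for the smallest n with size <= 2^n).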

```javascript
// The hwm will never exceed 1 GB.
const MAX_HWM = 0x40000000;
function computeNewHighWaterMark(n) {
  if (n >= MAX_HWM) {
    // Cap at 1 GB
    n = MAX_HWM;
  } else {
    // Round up to the next highest power of 2, so the hwm is not
    // increased excessively in tiny amounts
    n--;
    n |= n >>> 1;
    n |= n >>> 2;
    n |= n >>> 4;
    n |= n >>> 8;
    n |= n >>> 16;
    n++;
  }
  return n;
}
```
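The effect is visible through the readableHighWaterMark property. In this minimal sketch the stream starts with an arbitrary highWaterMark of 16 and a source that never produces data; asking for 300 bytes raises the limit to 512, the next power of two, even though read() returns nothing yet.

```javascript
const { Readable } = require('stream');

const stream = new Readable({ highWaterMark: 16, read() {} }); // no-op source
console.log(stream.readableHighWaterMark); // 16

const result = stream.read(300); // more than highWaterMark: hwm is recomputed first
console.log(result);                       // null (nothing buffered yet)
console.log(stream.readableHighWaterMark); // 512
```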

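All readable streams start in paused mode. They can be switched to flowing mode in the following ways:

- adding a 'data' event handler;
- calling the resume method;
- calling the pipe method to send the data to a writable stream.

In flowing mode, data in the buffer pool is automatically delivered to the consumer, and after each delivery _read is called automatically to move more data from the source into the buffer pool. If the buffer pool is empty at that moment, the data is passed straight to the 'data' event without going through the buffer pool. This continues until the stream is switched back to paused mode or the source runs out of data (push(null)).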

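A readable stream can be switched back to paused mode in the following ways:

- if there are no pipe destinations, by calling stream.pause();
- if there are pipe destinations, by removing all of them; stream.unpipe() can remove multiple pipe destinations.

The following example consumes the same source in flowing mode through a 'data' listener:

```javascript
const { Readable } = require('stream');

let count = 1000;
const myReadable = new Readable({
  highWaterMark: 300,
  read(size) {
    let chunk = null;
    setTimeout(() => {
      if (count > 0) {
        const chunkLength = Math.min(count, size);
        chunk = '1'.repeat(chunkLength);
        count -= chunkLength;
      }
      this.push(chunk); // pushing null ends the stream
    }, 500);
  },
});

// Adding a 'data' listener switches the stream to flowing mode
myReadable.on('data', (data) => {
  console.log(data.toString());
});
```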

Writable streams are somewhat simpler than readable streams.

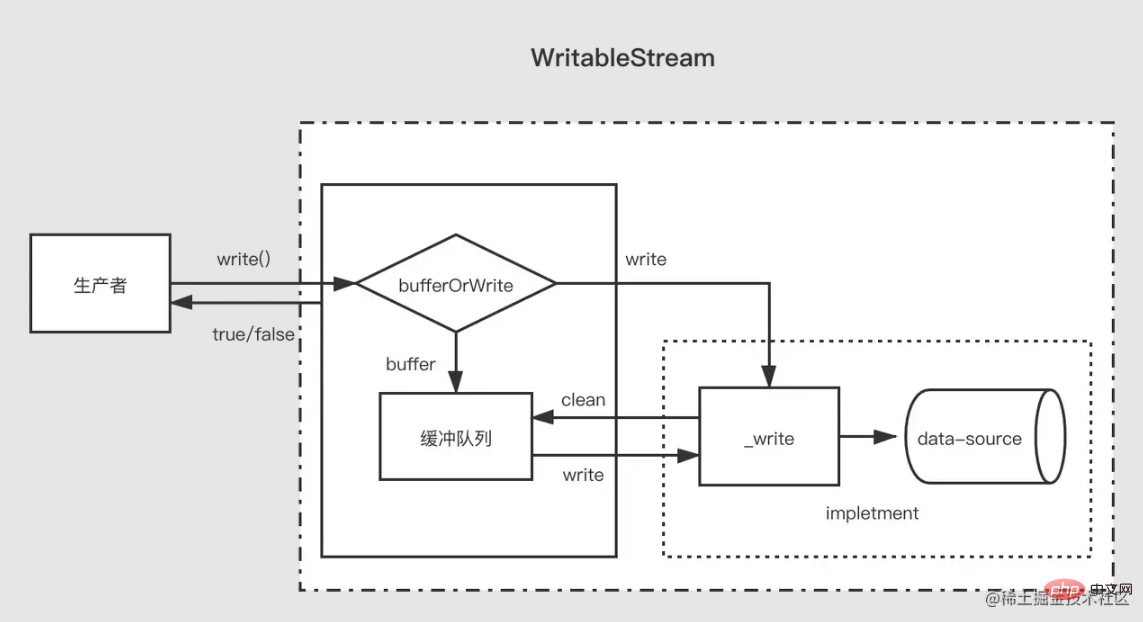

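When the producer calls write(chunk), the stream decides, based on its internal state (corked, writing, and so on), whether to store the chunk in the buffer queue or to call _write directly. After each write completes, it tries to flush the data in the buffer queue. If the amount of pending data reaches highWaterMark, write(chunk) returns false, and the producer should stop writing.

So when can writing resume? Once all buffered data has been successfully passed to _write and the buffer queue is empty, the drain event is emitted, and the producer can continue writing.

When the producer has no more data to write, it must call stream.end() to tell the writable stream that writing has finished.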
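The write/drain/end protocol can be wrapped in a small helper. `writeAll` below is a hypothetical name, not a Node.js API; it relies on `events.once`, which returns a promise that resolves the next time the given event is emitted. The slow sink and its 4-byte highWaterMark are made up for the demonstration.

```javascript
const { once } = require('events');
const { Writable } = require('stream');

// Hypothetical helper: write every chunk, waiting for 'drain' whenever write() returns false.
async function writeAll(writable, chunks) {
  for (const chunk of chunks) {
    if (!writable.write(chunk)) {
      await once(writable, 'drain'); // buffer reached highWaterMark: wait until it empties
    }
  }
  writable.end(); // tell the stream no more data is coming
  await once(writable, 'finish');
}

let written = '';
let drains = 0;
const slowSink = new Writable({
  highWaterMark: 4,
  write(chunk, encoding, callback) {
    written += chunk.toString();
    setTimeout(callback, 10); // simulate a slow destination
  },
});
slowSink.on('drain', () => drains++);

writeAll(slowSink, ['ab', 'cd', 'ef', 'gh']).then(() => {
  console.log(written); // abcdefgh
});
```

Awaiting 'drain' instead of writing in a tight loop keeps the amount of buffered data bounded by highWaterMark.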

```javascript
const { Writable } = require('stream');

let fileContent = '';
const myWritable = new Writable({
  highWaterMark: 10,
  // Used as the stream's _write method
  write(chunk, encoding, callback) {
    setTimeout(() => {
      fileContent += chunk;
      callback(); // call once the write has finished
    }, 500);
  },
});

myWritable.on('close', () => {
  console.log('close', fileContent);
});

myWritable.write('123123'); // true
myWritable.write('123123'); // false
myWritable.end();
```

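Note that once the data in the buffer pool reaches highWaterMark, the pool may contain several nodes. While the pool is being emptied (by calling _write repeatedly), a writable stream does not try, as a readable stream does, to consume up to highWaterMark bytes at a time; it consumes one buffer node per call, even when that node's length differs from highWaterMark.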
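The one-node-per-call behavior applies to streams that implement only _write. A stream that also implements _writev can receive several buffered chunks in one batch. This minimal sketch uses cork() and uncork() to buffer two writes; the chunk contents are arbitrary.

```javascript
const { Writable } = require('stream');

const batches = [];
const sink = new Writable({
  // Only writev is provided; single chunks are passed to it as one-element arrays.
  writev(chunks, callback) {
    batches.push(chunks.map(({ chunk }) => chunk.toString()));
    callback();
  },
});

sink.cork();     // buffer writes instead of passing them on immediately
sink.write('a');
sink.write('b');
sink.uncork();   // flush: both buffered chunks reach writev in one call
sink.write('c'); // not corked and nothing in flight: written on its own

console.log(batches); // [ [ 'a', 'b' ], [ 'c' ] ]
```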

```javascript
const { Writable } = require('stream');

let fileContent = '';
const myWritable = new Writable({
  highWaterMark: 10,
  write(chunk, encoding, callback) {
    setTimeout(() => {
      fileContent += chunk;
      console.log('consumed', chunk.toString());
      callback(); // call once the write has finished
    }, 100);
  },
});

myWritable.on('close', () => {
  console.log('close', fileContent);
});

let count = 0;
// Write "0" to "20", stopping whenever write() returns false
function productionData() {
  let flag = true;
  while (count <= 20 && flag) {
    flag = myWritable.write(count.toString());
    count++;
  }
  if (count > 20) {
    myWritable.end();
  }
}

productionData();
// Resume producing once the buffer pool has been emptied
myWritable.on('drain', productionData);
```

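Above is a writable stream with a highWaterMark of 10. The data source is the numbers 0 to 20 as strings, and productionData writes them.

1. On the first call, myWritable.write("0"), nothing is being written and the buffer pool is empty, so "0" does not enter the buffer pool; it is handed straight to _write, and myWritable.write("0") returns true.
2. When myWritable.write("1") runs, the callback of the previous _write has not been called yet, meaning the previous chunk is still being written. To keep writes in order, a new buffer node is created for "1" and added to the buffer pool. The same happens for "2" through "9".
3. The amount compared against highWaterMark (writableLength) includes the chunk currently being written, not only the buffered nodes. After myWritable.write("9") it is 10 ("0" in flight plus "1" to "9" buffered), which is no longer below highWaterMark, so this call returns false. The loop stops: there is enough buffered data, and we must wait for the drain event before producing more.
4. After 100 ms, the callback of _write("0", encoding, callback) is called, indicating that "0" has been written. The stream then checks the buffer pool; if it contains data, it calls _write on the head node ("1"), and repeats this until the buffer pool is empty. It then emits drain, and productionData runs again.
5. productionData continues with myWritable.write("10"), starting over from step 1, until the stream ends.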
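The return values in this walkthrough can be checked without the drain loop. This minimal sketch keeps the same 10-byte highWaterMark and 100 ms write delay, writes "0" to "10" in one go, and records what each write() call returns.

```javascript
const { Writable } = require('stream');

const myWritable = new Writable({
  highWaterMark: 10,
  write(chunk, encoding, callback) {
    setTimeout(callback, 100); // simulate a slow write
  },
});

const results = [];
for (let i = 0; i <= 10; i++) {
  results.push(myWritable.write(i.toString()));
}
myWritable.end();

// "0" is in flight and "1".."9" are buffered: 10 bytes in total after write("9").
console.log(results.indexOf(false));   // 9
console.log(myWritable.writableLength); // 12 ("10" adds 2 more bytes)
```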

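Once you understand readable and writable streams, duplex streams are easy to understand. In the source code, a duplex stream inherits from Readable and then implements the Writable part, although it is more accurate to say that it implements both a readable and a writable stream.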
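The inheritance described above can be checked from the prototype chain. In this sketch, note that Writable is not on a Duplex's prototype chain; `instanceof Writable` still succeeds because Writable customizes `instanceof` through `Symbol.hasInstance`, which checks for a writable state object.

```javascript
const { Readable, Writable, Duplex, Transform } = require('stream');

const duplex = new Duplex({
  read() {},
  write(chunk, encoding, callback) {
    callback();
  },
});

console.log(Object.getPrototypeOf(Duplex.prototype) === Readable.prototype);  // true: Duplex extends Readable
console.log(Object.getPrototypeOf(Transform.prototype) === Duplex.prototype); // true: Transform extends Duplex
console.log(Writable.prototype.isPrototypeOf(duplex)); // false: Writable is not in the chain
console.log(duplex instanceof Writable);               // true: via Writable[Symbol.hasInstance]
```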

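A Duplex stream must implement both of the following methods:

- _read(), which produces data for the readable side;
- _write(), which consumes data for the writable side.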

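How to implement these two methods was covered in the readable and writable stream sections above. Note that a duplex stream has two independent buffer pools, one for each side, and the two sides have different data sources.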
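A minimal sketch of the two independent sides: the readable side has its own source (a fixed string here), the writable side has its own sink (an array), and each side is given its own highWaterMark through the `readableHighWaterMark` and `writableHighWaterMark` options. All values are arbitrary.

```javascript
const { Duplex } = require('stream');

const sinkData = []; // where the writable side puts data
const duplex = new Duplex({
  readableHighWaterMark: 8,
  writableHighWaterMark: 4,
  read() {
    // Readable side: its own data source, unrelated to what is written.
    this.push('from source');
    this.push(null);
  },
  write(chunk, encoding, callback) {
    // Writable side: its own destination.
    sinkData.push(chunk.toString());
    callback();
  },
});

const readData = [];
duplex.on('data', (chunk) => readData.push(chunk.toString()));
duplex.on('end', () => console.log(readData)); // [ 'from source' ]

duplex.write('hello'); // goes to sinkData only, never shows up as 'data'
duplex.end();
console.log(duplex.readableHighWaterMark, duplex.writableHighWaterMark); // 8 4
console.log(sinkData); // [ 'hello' ]
```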

Take the Node.js standard input/output streams as an example:

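```javascript
// Whenever the user types into the console (_read), the data event fires;
// this is a feature of the readable side
process.stdin.on('data', (data) => {
  process.stdin.write(data);
});

// Every second, produce data into the standard input stream (a feature of the
// writable side; it is output directly to the console). This does not trigger data
setInterval(() => {
  process.stdin.write('data not typed by the user in the console');
}, 1000);
```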

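You can think of a Duplex stream as a readable stream with a writable stream attached. The two are independent, each with its own internal buffer, and read and write events happen independently.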

```
                             Duplex Stream
                          ------------------|
                    Read  <-----               External Source
            You           ------------------|
                    Write ----->               External Sink
                          ------------------|
```

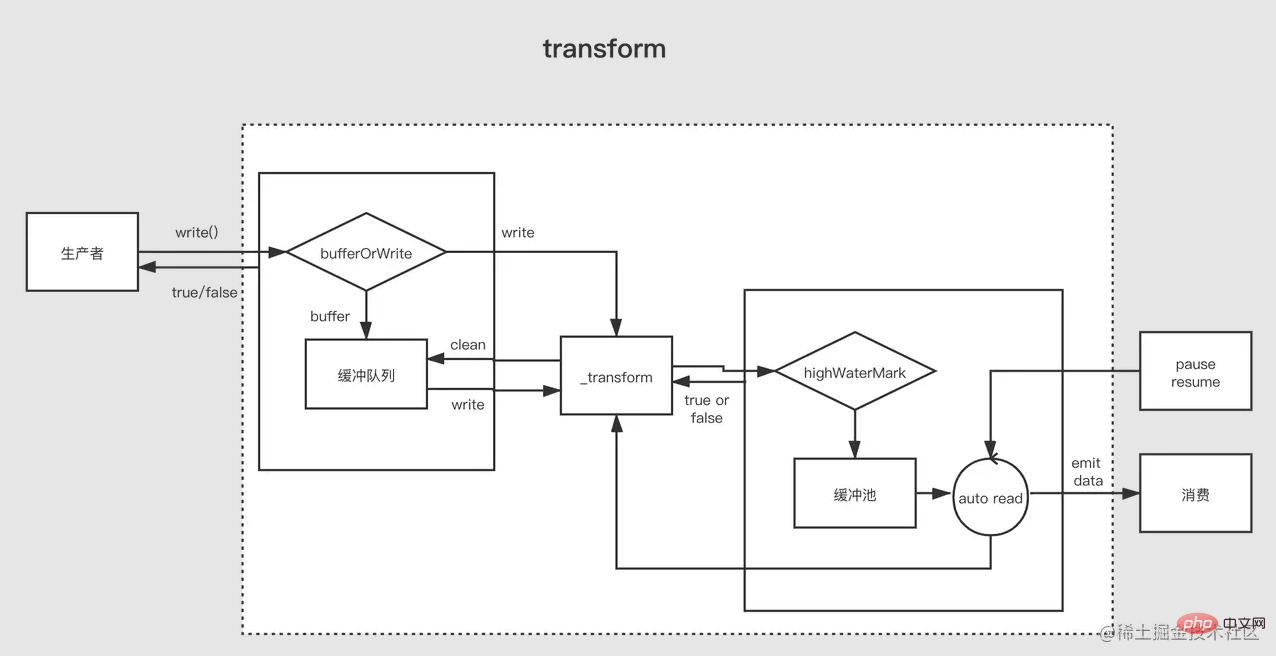

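A Transform stream is a duplex stream in which reading and writing are causally related: the two ends are linked by some transformation, and a read requires that a write has happened.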

```
                                 Transform Stream
                           --------------|--------------
            You     Write  ---->                   ---->  Read  You
                           --------------|--------------
```

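When creating a Transform stream, the most important thing is to implement the _transform method rather than _write or _read. _transform processes (consumes) the data written to the writable side and then produces data for the readable side.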
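A minimal sketch of that causal link: before anything is written, the readable side of a Transform has nothing to return; after a write, the transformed chunk is waiting in its buffer. The upper-casing transform is only an example.

```javascript
const { Transform } = require('stream');

const upper = new Transform({
  transform(chunk, encoding, callback) {
    // Consume the written chunk and produce data for the readable side.
    callback(null, chunk.toString().toUpperCase());
  },
});

const before = upper.read(); // nothing written yet
upper.write('abc');          // _transform runs and pushes 'ABC'
const after = upper.read();

console.log(before);           // null
console.log(after.toString()); // ABC
```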

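Transform streams also often implement a `_flush` method, which is called before the stream ends. It is generally used to append something to the end of the stream; for example, some compression metadata is appended here when compressing a file.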

```javascript
const { Transform } = require('stream');

const transform = new Transform({
  highWaterMark: 10,
  // Transform the data and call push to add the result to the buffer pool
  transform(chunk, encoding, callback) {
    this.push(chunk.toString().replace('1', '@')); // replaces only the first '1' in each chunk
    callback();
  },
  // Runs before 'end' is emitted
  flush(callback) {
    this.push('<<<');
    callback();
  },
});

// Keep writing data with write
let count = 0;
transform.write('>>>');
function productionData() {
  let flag = true;
  while (count <= 20 && flag) {
    flag = transform.write(count.toString());
    count++;
  }
  if (count > 20) {
    transform.end();
  }
}
productionData();
transform.on('drain', productionData);

let result = '';
transform.on('data', (data) => {
  result += data.toString();
});
transform.on('end', () => {
  console.log(result);
  // >>>0@23456789@0@1@2@3@4@5@6@7@8@920<<<
});
```

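A pipe feeds the output of one program into the input of the next; that is what a pipe does in Linux. Pipes in Node.js work similarly: a pipe connects two streams, and the output of the upstream stream becomes the input of the downstream stream.

A pipe, source.pipe(dest, options), requires source to be readable and dest to be writable. Its return value is dest.

A stream in the middle of a pipeline is both the upstream of the next stream and the downstream of the previous one, so it must be a duplex stream that is both readable and writable. Usually a transform stream is used as the middle stream.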

https://github1s.com/nodejs/node/blob/v17.0.0/lib/internal/streams/legacy.js#L16-L33

```javascript
Stream.prototype.pipe = function(dest, options) {
  const source = this;

  function ondata(chunk) {
    // Pause the source when the destination's buffer is full
    if (dest.writable && dest.write(chunk) === false && source.pause) {
      source.pause();
    }
  }
  source.on('data', ondata);

  function ondrain() {
    // Resume the source once the destination has drained
    if (source.readable && source.resume) {
      source.resume();
    }
  }
  dest.on('drain', ondrain);

  // ...the rest of the code is omitted
};
```

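The implementation of pipe is very clear. When the upstream stream emits a data event, pipe calls the downstream stream's write method to write the data; if write returns false, it immediately calls source.pause() to pause the upstream stream, mainly to prevent backpressure.

When the downstream stream has consumed its buffered data and emits drain, source.resume() is called to put the upstream stream back into flowing mode.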
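The same logic can be written by hand. `simplePipe` below is a hypothetical, stripped-down helper mirroring the legacy code above (it also forwards 'end', and, like that excerpt, ignores errors and cleanup). The slow writable has a highWaterMark of 1, so every write() returns false and forces a pause.

```javascript
const { Readable, Writable } = require('stream');

// Hypothetical helper: pause the source when write() returns false, resume on 'drain'.
function simplePipe(source, dest) {
  source.on('data', (chunk) => {
    if (dest.write(chunk) === false) source.pause();
  });
  dest.on('drain', () => source.resume());
  source.on('end', () => dest.end());
  return dest;
}

const received = [];
let pauses = 0;
const source = Readable.from(['a', 'b', 'c', 'd']);
source.on('pause', () => pauses++);

const dest = new Writable({
  highWaterMark: 1,
  write(chunk, encoding, callback) {
    received.push(chunk.toString());
    setTimeout(callback, 10); // slow consumer
  },
});

simplePipe(source, dest).on('finish', () => {
  console.log(received.join(''), pauses > 0); // abcd true
});
```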

Let's implement a pipeline that replaces every 1 in the data file with @ and writes the output to the result file.

```javascript
const { Transform } = require('stream');
const { createReadStream, createWriteStream } = require('fs');

// A transform stream that sits in the middle of the pipeline
function createTransformStream() {
  return new Transform({
    transform(chunk, encoding, callback) {
      this.push(chunk.toString().replace(/1/g, '@'));
      callback();
    },
  });
}

createReadStream('./data')
  .pipe(createTransformStream())
  .pipe(createWriteStream('./result'));
```
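To try the same pipe chain without files, the file streams can be swapped for in-memory ones. In this sketch `Readable.from` stands in for the data file and a collecting Writable stands in for the result file; the input strings are made up.

```javascript
const { Readable, Transform, Writable } = require('stream');

// Same transform as above: replace every '1' with '@'
function createTransformStream() {
  return new Transform({
    transform(chunk, encoding, callback) {
      this.push(chunk.toString().replace(/1/g, '@'));
      callback();
    },
  });
}

let output = '';
const sink = new Writable({
  write(chunk, encoding, callback) {
    output += chunk.toString();
    callback();
  },
});

Readable.from(['1a1', 'b11', 'c'])
  .pipe(createTransformStream())
  .pipe(sink) // pipe returns its destination, so the chain continues on sink
  .on('finish', () => console.log(output)); // @a@b@@c
```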

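When a pipeline contains only two streams, it works a bit like a transform stream: both connect a readable stream to a writable stream. A pipeline, however, can chain multiple streams together.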

Original article: https://juejin.cn/post/7077511716564631566

Author: 月夕


| Method | Description |
|---|---|
| read(size) | Consumes size bytes of data. Returns null if fewer than size bytes are currently available; otherwise returns the data consumed this time. If size is omitted, all data in the buffer pool is consumed. |