How do you crawl data with Node? This article shares a Node crawler example and shows how to use Node to crawl the chapters of a novel. I hope it helps!

I'm building a novel-reading tool with Electron as a practice project. The first problem to solve is data: the text of the novels themselves.

Here I use Node.js to crawl a novel website and try to scrape one novel. For now the data won't go into a database; plain .txt files serve as storage.

For web requests, Node has the built-in `http` and `https` modules, and both provide a `request` method.

Example:

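```javascript
const https = require('https');

// Page to fetch: this novel's directory on the site used throughout the article
const TestUrl = 'https://www.shuquge.com/txt/147032/';

const request = https.request(TestUrl, (res) => {
  // https.request has no `encoding` option; decoding is set on the response
  res.setEncoding('utf8');
  let chunks = '';

  res.on('data', (chunk) => {
    chunks += chunk;
  });

  res.on('end', () => {
    console.log('Request finished');
  });
});

// Nothing is sent until end() is called
request.end();
```

That is all this does: it fetches the page's HTML as text and cannot extract individual elements from it. (You could pull them out with regular expressions, but that gets complicated.)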
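To illustrate why the regular-expression route gets complicated, here is a minimal sketch that pulls `{ href, name }` pairs out of markup shaped like `<dd><a href="...">title</a></dd>`. The `extractLinksWithRegex` helper and the sample HTML are made up for illustration; they are not taken from the real page.

```javascript
// Extract { href, name } pairs from <dd><a href="...">text</a></dd> markup.
// Only works while the markup keeps exactly this shape: href must be the
// first attribute, in double quotes, with no nested tags inside <a>.
function extractLinksWithRegex(html) {
  const pattern = /<dd>\s*<a\s+href="([^"]*)"[^>]*>([^<]*)<\/a>\s*<\/dd>/g;
  const links = [];
  for (const match of html.matchAll(pattern)) {
    links.push({ href: match[1], name: match[2].trim() });
  }
  return links;
}

// Hypothetical sample markup
const sample = `
<dl>
  <dd><a href="1.html">Chapter 1</a></dd>
  <dd><a href="2.html" title="x">Chapter 2</a></dd>
  <dd><a class="c" href="3.html">Chapter 3</a></dd>
</dl>`;

// Only two links come back: the third <a> has class before href,
// so the pattern skips it.
console.log(extractLinksWithRegex(sample));
```

Any small change in the markup (attribute order, single quotes, a nested `<span>`) breaks the pattern, which is the problem an HTML parser solves.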

I saved the fetched data with `fs.writeFile`. What comes back is just the HTML of the entire web page:
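Saving with `fs.writeFile` is easy to wrap in small helpers. The code later in this article calls `getFile` and `writeFun` from a `./requestNovel` module whose source isn't shown, so the bodies below are a hypothetical sketch, not the author's code. They assume `getFile(path)` returns a Promise of the file's text and `writeFun(path, data, flag)` passes `flag` straight to `fs`.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: read a file and resolve with its text content.
function getFile(filePath) {
  return fs.promises.readFile(filePath, 'utf8');
}

// Hypothetical helper: write text to a file, creating parent directories first.
// `flag` is handed to fs as-is: 'w' overwrites, 'a' appends.
function writeFun(filePath, data, flag = 'w') {
  fs.mkdirSync(path.dirname(filePath), { recursive: true });
  fs.writeFileSync(filePath, data, { encoding: 'utf8', flag });
}

module.exports = { getFile, writeFun };
```

Creating the directory up front avoids an `ENOENT` error the first time something is written to `./hasGetfile/`.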

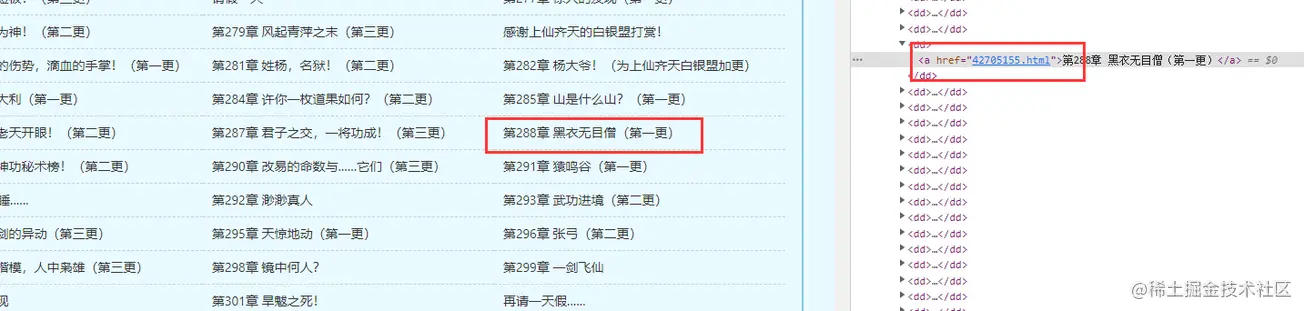

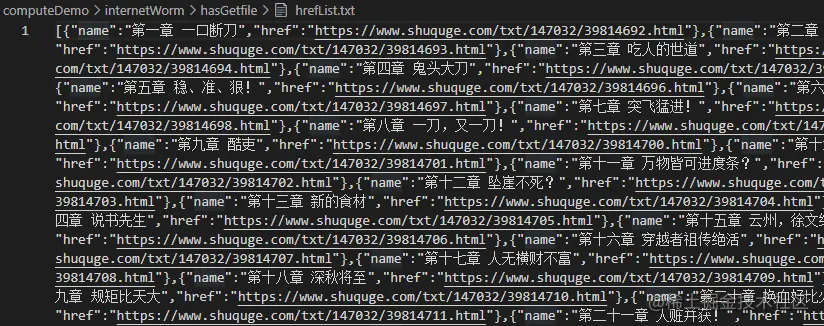

But what I actually want is the content of each chapter. For that I need to collect every chapter's hyperlink into a list of links and then crawl them one by one.
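The article doesn't show the crawl loop itself. One simple way to crawl a list of links without hammering the site is to request them one at a time with a pause in between. This is a sketch with a hypothetical `crawlSequentially` helper; the fetch function is passed in, so the loop doesn't depend on any particular HTTP library.

```javascript
// Wait for the given number of milliseconds.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

// Visit each link in order, one request at a time, pausing between requests.
// `fetchOne` is any function that takes a link and returns a Promise.
// A failed link is recorded instead of aborting the whole crawl.
async function crawlSequentially(links, fetchOne, delayMs = 1000) {
  const results = [];
  for (let i = 0; i < links.length; i++) {
    try {
      const value = await fetchOne(links[i]);
      results.push({ link: links[i], ok: true, value });
    } catch (error) {
      results.push({ link: links[i], ok: false, error });
    }
    // No pause is needed after the last link
    if (i < links.length - 1) await sleep(delayMs);
  }
  return results;
}
```

`fetchOne` could be any function that downloads and saves one chapter, and `delayMs` controls how long to wait between requests.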

So here I bring in a JavaScript library, cheerio.

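## cheerio documentation

Official documentation: https://cheerio.js.org/

Chinese documentation: https://github.com/cheeriojs/cheerio/wiki/Chinese-README

The documentation includes examples you can use for debugging. cheerio implements a subset of core jQuery, so selectors such as `$(".listmain dl dd a")` work the way they do in jQuery. Parsing the saved index page and collecting the chapter links looks like this: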

```javascript
const cheerio = require('cheerio');

// Import the file-reading and file-writing helpers
const { getFile, writeFun } = require('./requestNovel');

let hasIndexPromise = getFile('./hasGetfile/index.html');

let bookArray = [];

hasIndexPromise.then((res) => {
  let htmlstr = res;
  let $ = cheerio.load(htmlstr);

  // Each chapter link is an <a> inside .listmain dl dd
  $('.listmain dl dd a').each((index, item) => {
    let name = $(item).text();
    let href = 'https://www.shuquge.com/txt/147032/' + $(item).attr('href');

    // Skip the first 12 links (index 0 to 11); the chapter list starts after them
    if (index > 11) {
      bookArray.push({ name, href });
    }
  });

  // console.log(bookArray)
  writeFun('./hasGetfile/hrefList.txt', JSON.stringify(bookArray), 'w');
});
```
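The code above builds each chapter URL by string concatenation, which works as long as every `href` is a bare file name relative to the index page. A slightly more robust sketch resolves each `href` with the WHATWG `URL` constructor (a global in Node) instead. `toChapterList` is a hypothetical helper, and its `skip` parameter mirrors the `index > 11` check.

```javascript
// Base URL of the novel's chapter index (same value as in the code above)
const BASE_URL = 'https://www.shuquge.com/txt/147032/';

// Turn raw { name, href } pairs into absolute chapter links.
// `skip` drops the leading entries, like the `index > 11` check (12 entries).
function toChapterList(rawLinks, baseUrl = BASE_URL, skip = 12) {
  return rawLinks.slice(skip).map(({ name, href }) => ({
    name: name.trim(),
    // new URL() handles relative, root-relative and absolute hrefs alike
    href: new URL(href, baseUrl).href,
  }));
}
```

With plain concatenation, a root-relative `href` such as `/txt/147032/2.html` would produce a doubled, broken path; `new URL` resolves it to `https://www.shuquge.com/txt/147032/2.html`.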

Crawling every chapter in bulk will eventually require an IP proxy, which I don't have set up yet. For now I'll write a method that fetches the content of a single chapter, which is actually fairly simple:

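```javascript
const https = require('https');

// Fetch the content of a single chapter.
// Uses cheerio, writeFun and bookArray from the code above.
function getOneChapter(n) {
  return new Promise((resolve, reject) => {
    if (!Number.isInteger(n) || n < 0 || n >= bookArray.length) {
      reject('Not found');
      return; // stop here: bookArray[n] does not exist
    }

    let name = bookArray[n].name;

    // https.request has no `encoding` option, so { encoding: 'gbk' } had no effect;
    // decode the response as UTF-8 safely across chunk boundaries instead
    const request = https.request(bookArray[n].href, (res) => {
      res.setEncoding('utf8');
      let html = '';

      res.on('data', (chunk) => {
        html += chunk;
      });

      res.on('end', () => {
        let $ = cheerio.load(html);
        let content = $('#content').text();

        if (content) {
          // Save as .txt
          writeFun(`./hasGetfile/${name}.txt`, content, 'w');
          resolve(content);
        } else {
          reject('Not found');
        }
      });
    });

    // Without this, a network error would go unhandled
    request.on('error', reject);
    request.end();
  });
}
```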
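If the target site actually serves GBK rather than UTF-8, `res.setEncoding` can't help, because it only accepts Node's built-in encodings. One option is to collect the raw Buffers and decode them with the WHATWG `TextDecoder`, which Node supports for `gbk` when built with full ICU (the default for official builds). `decodeBody` is a hypothetical helper name.

```javascript
// Join raw Buffer chunks from a response and decode them with the given charset.
// Concatenating first matters: a multi-byte character can be split across
// two chunks, and decoding each chunk separately would corrupt it.
function decodeBody(chunks, charset = 'utf-8') {
  return new TextDecoder(charset).decode(Buffer.concat(chunks));
}

// "你好" encoded in GBK (C4 E3 BA C3), deliberately split across chunks
const chunks = [Buffer.from([0xc4]), Buffer.from([0xe3, 0xba]), Buffer.from([0xc3])];
console.log(decodeBody(chunks, 'gbk')); // 你好
```

Inside a response handler this would mean pushing each chunk into an array in the `'data'` listener (without calling `res.setEncoding`) and calling `decodeBody(chunks, 'gbk')` in the `'end'` listener.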

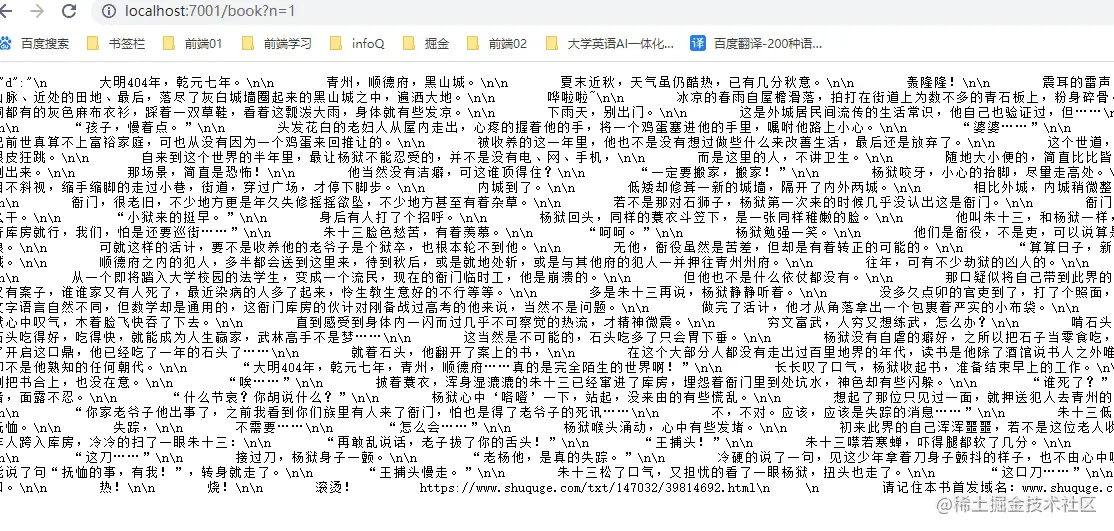

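Calling `getOneChapter(10)` fetches the entry at index 10 of `bookArray` and saves its text to a .txt file.

Finally, I expose this through a small Express server, so that a chapter can be requested over HTTP by number, e.g. `/book?n=1` for the first entry in the list:

```javascript
const express = require('express');

const IO = express();

const { getAllChapter, getOneChapter } = require('./readIndex');

// Build the list of chapter links
getAllChapter();

IO.get('/book', function (req, res) {
  // Query parameters
  let query = req.query;

  if (query.n) {
    // Fetch one chapter: n is 1-based, the array index is 0-based
    let promise = getOneChapter(parseInt(query.n, 10) - 1);

    promise.then((d) => {
      res.json({ d: d });
    }, (d) => {
      // String() keeps Error objects readable in the JSON response
      res.json({ d: String(d) });
    });
  } else {
    res.json({ d: 404 });
  }
});

// Port on the local host
IO.listen(7001, function () {
  console.log('Server started...');
});
```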
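As originally written, a non-numeric `n` makes `parseInt(query.n - 1)` return `NaN`, and `NaN >= bookArray.length` is false, so the bounds check in `getOneChapter` is skipped and the failure surfaces as a TypeError on `bookArray[NaN].name`. A minimal sketch of validating the parameter up front, using a hypothetical `parseChapterIndex` helper:

```javascript
// Convert the 1-based `n` query parameter into a 0-based array index.
// Returns null for anything that is not a positive whole number
// (missing, "abc", "0", "-3", "2.5", or an array from ?n=1&n=2).
function parseChapterIndex(n) {
  if (typeof n !== 'string' || !/^\d+$/.test(n)) return null;
  const chapter = Number(n);
  return chapter >= 1 ? chapter - 1 : null;
}
```

In the `/book` handler this could replace `parseInt(query.n - 1)`, responding with `{ d: 404 }` as before whenever the result is `null`.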


The above is the detailed content of Example of node crawling data: Let's talk about how to crawl novel chapters. For more information, please follow other related articles on the PHP Chinese website!