In the past year, text-to-image diffusion models, represented by Stable Diffusion, have transformed the field of visual creation. Countless users have improved their productivity with images produced by diffusion models. However, slow generation is a common problem. Because a diffusion model relies on multi-step denoising to gradually turn initial Gaussian noise into an image, it must evaluate the network many times, which makes generation very slow. This makes large-scale text-to-image diffusion models a poor fit for applications that depend on real-time response and interactivity. With the introduction of a series of techniques, the number of steps required to sample from a diffusion model has dropped from the initial few hundred to a few dozen, or even to only 4-8.

Recently, a research team from Google proposed UFOGen, a variant of the diffusion model that can sample extremely quickly. By fine-tuning Stable Diffusion with the method proposed in the paper, UFOGen can generate high-quality images in just one step. At the same time, Stable Diffusion's downstream applications, such as image-to-image generation and ControlNet, are retained.

Please click the following link to view the paper: https://arxiv.org/abs/2311.09257

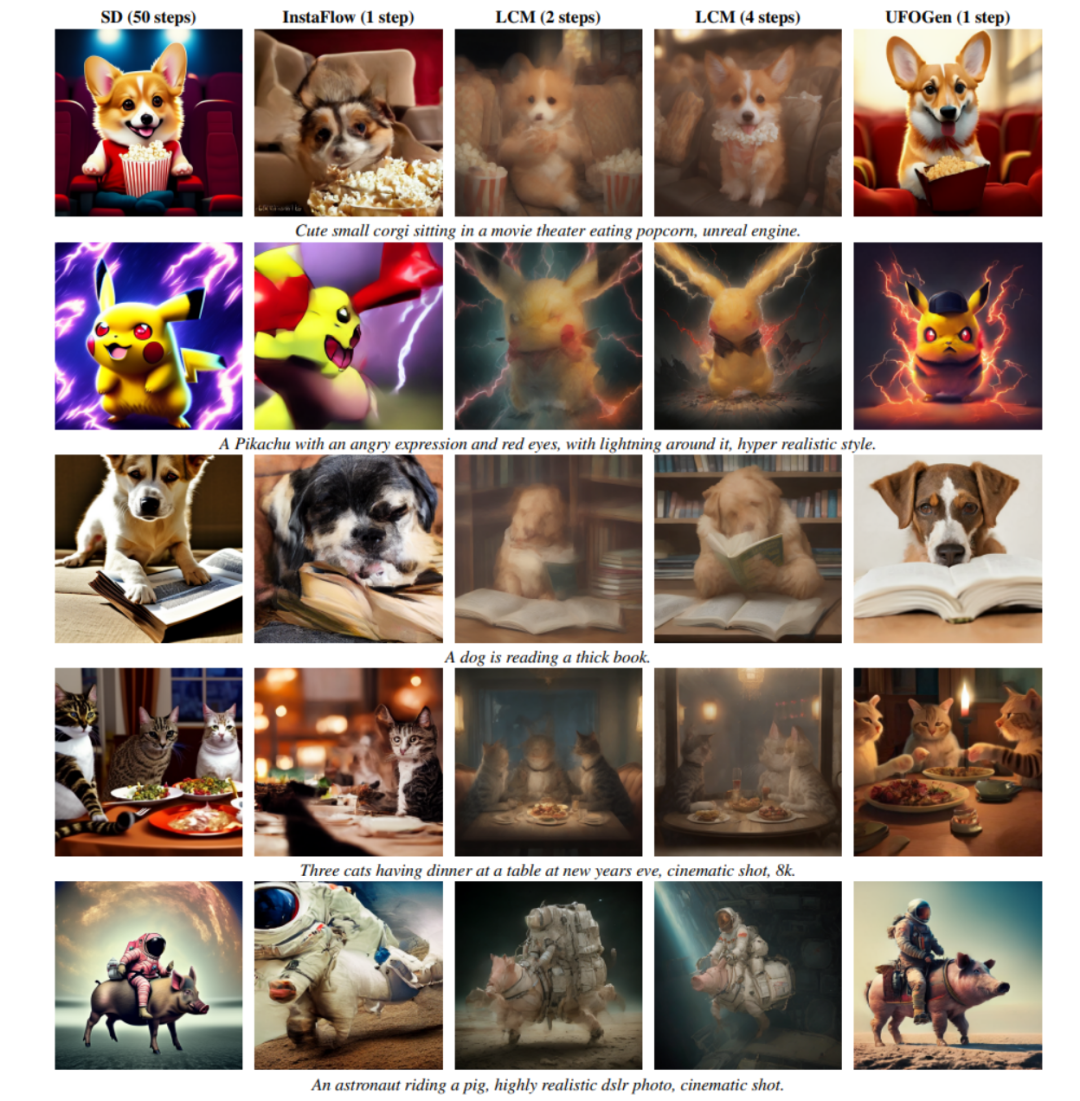

As the accompanying sample images show, UFOGen can generate high-quality, diverse images in just one step.

Improving the generation speed of diffusion models is not a new research direction. Previous work in this area has mainly followed two directions. One is to design more efficient numerical methods, so that the sampling ODE of the diffusion model can be solved with fewer discrete steps. For example, the DPM series of numerical solvers proposed by Zhu Jun's team at Tsinghua University has proven very effective on Stable Diffusion, reducing the number of solver steps from DDIM's default of 50 to fewer than 20. The other direction is to use knowledge distillation to compress the model's ODE-based sampling path into fewer steps. Examples include guided distillation, one of the best paper candidates at CVPR 2023, and the recently popular Latent Consistency Model (LCM). LCM in particular can reduce the number of sampling steps to only 4 by distilling a consistency objective, which has spawned many real-time generation applications.
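To make the cost argument concrete, the following is a minimal sketch of a deterministic DDIM-style sampler on a one-dimensional toy problem. Everything here is an illustrative assumption rather than code from any of the papers mentioned: `ToyDenoiser` stands in for the real network (it is the exact posterior-mean predictor for data drawn from a single Gaussian), and the linear beta schedule and its constants are common defaults chosen for the example. The point it shows is simply that the number of network evaluations equals the number of sampling steps.

```python
import math
import random


def make_alpha_bar(num_train_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal level alpha_bar[t] for a linear beta schedule (assumed)."""
    alpha_bar, prod = [], 1.0
    for i in range(num_train_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_train_steps - 1)
        prod *= 1.0 - beta
        alpha_bar.append(prod)
    return alpha_bar


class ToyDenoiser:
    """Hypothetical stand-in for the denoising network.

    For data x0 ~ N(mu, sigma^2) the posterior mean E[x0 | x_t] has a closed
    form, so no training is needed. `calls` counts network evaluations.
    """

    def __init__(self, mu=2.0, sigma=0.5):
        self.mu, self.sigma, self.calls = mu, sigma, 0

    def predict_x0(self, x_t, a):
        self.calls += 1
        var = self.sigma ** 2
        gain = math.sqrt(a) * var / (a * var + 1.0 - a)
        return self.mu + gain * (x_t - math.sqrt(a) * self.mu)


def ddim_sample(denoiser, alpha_bar, num_steps, rng):
    """Deterministic DDIM-style sampling with `num_steps` network calls."""
    last = len(alpha_bar) - 1
    # Evenly spaced timesteps, from the noisiest level downwards.
    timesteps = [round(last * (1 - k / num_steps)) for k in range(num_steps)]
    x = rng.gauss(0.0, 1.0)  # start from Gaussian noise
    for t, t_prev in zip(timesteps, timesteps[1:] + [None]):
        a = alpha_bar[t]
        a_prev = 1.0 if t_prev is None else alpha_bar[t_prev]
        x0 = denoiser.predict_x0(x, a)                      # one network call
        eps = (x - math.sqrt(a) * x0) / math.sqrt(1.0 - a)  # implied noise
        x = math.sqrt(a_prev) * x0 + math.sqrt(1.0 - a_prev) * eps
    return x


if __name__ == "__main__":
    alpha_bar = make_alpha_bar()
    for steps in (50, 20, 4, 1):
        net = ToyDenoiser()
        sample = ddim_sample(net, alpha_bar, steps, random.Random(0))
        print(f"steps={steps:2d}  network calls={net.calls:2d}  sample={sample:.3f}")
```

With a real text-to-image model each call is a full UNet forward pass, so going from 50 steps to 4, or to 1, cuts sampling cost roughly in proportion.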

However, Google's research team did not follow either of these directions with UFOGen. Instead, it took a different approach and built on the idea of a hybrid of diffusion models and GANs, proposed more than a year earlier. The team argues that ODE-based sampling and distillation have fundamental limitations that make it difficult to push the number of sampling steps to the extreme. Achieving one-step generation therefore calls for a different approach.

A hybrid model combines a diffusion model with a generative adversarial network (GAN). The approach was first proposed by NVIDIA's research team at ICLR 2022 under the name DDGAN ("Tackling the Generative Learning Trilemma with Denoising Diffusion GANs"). DDGAN is motivated by a shortcoming of ordinary diffusion models: the Gaussian assumption they make about the denoising distribution. Simply put, a diffusion model assumes that its denoising distribution (the conditional distribution that, given a noisy sample, generates a less noisy sample) is a simple Gaussian. However, the theory of stochastic differential equations shows that this assumption holds only as the denoising step size approaches 0. A diffusion model therefore needs many repeated denoising steps to keep each step small, which makes generation slow.
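In symbols, and using the usual DDPM-style notation as an assumption (the article itself gives no formulas), the true denoising distribution and the Gaussian approximation used by an ordinary diffusion model can be written as:

$$
q(x_{t-1} \mid x_t) = \int q(x_{t-1} \mid x_t, x_0)\, q(x_0 \mid x_t)\, dx_0,
\qquad
p_\theta(x_{t-1} \mid x_t) = \mathcal{N}\!\big(x_{t-1};\ \mu_\theta(x_t, t),\ \sigma_t^2 I\big).
$$

The factor $q(x_{t-1} \mid x_t, x_0)$ is Gaussian, but $q(x_0 \mid x_t)$ is generally multimodal when $x_t$ is very noisy, so the mixture on the left is close to a single Gaussian only when the step from $x_t$ to $x_{t-1}$ is small.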

DDGAN proposes abandoning the Gaussian assumption on the denoising distribution and instead using a conditional GAN to model it. Because GANs are highly expressive and can model complex distributions, a larger denoising step size can be used, which reduces the number of steps. However, DDGAN replaces the stable reconstruction objective of the diffusion model with a GAN objective, which easily leads to unstable training and makes the method hard to extend to more complex tasks. At NeurIPS 2023, the same Google research team behind UFOGen proposed SIDDM ("Semi-Implicit Denoising Diffusion Models"), which reintroduces a reconstruction objective into DDGAN's training objective, making training more stable and greatly improving generation quality over DDGAN.
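The idea of pairing an adversarial objective with a reconstruction objective can be sketched as follows. This is only an illustration under stated assumptions: it uses the standard non-saturating GAN loss and a mean-squared-error reconstruction term, and the names `generator_loss`, `discriminator_loss`, and `recon_weight` are hypothetical. The exact loss terms and weights used by DDGAN, SIDDM, and UFOGen are not given in this article and may differ.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def mean(values):
    return sum(values) / len(values)


def discriminator_loss(logits_real, logits_fake):
    """Non-saturating GAN loss for the discriminator (assumed form)."""
    real_term = mean([softplus(-z) for z in logits_real])  # push real logits up
    fake_term = mean([softplus(z) for z in logits_fake])   # push fake logits down
    return real_term + fake_term


def generator_loss(logits_fake, x_pred, x_target, recon_weight=1.0):
    """Adversarial term plus a weighted reconstruction term.

    The reconstruction term gives the generator a stable regression target,
    so the adversarial term only has to refine the result.
    """
    adv = mean([softplus(-z) for z in logits_fake])
    recon = mean([(p - t) ** 2 for p, t in zip(x_pred, x_target)])
    return adv + recon_weight * recon, adv, recon


if __name__ == "__main__":
    total, adv, recon = generator_loss(
        logits_fake=[-0.3, 0.1],
        x_pred=[0.9, 2.2],
        x_target=[1.0, 2.0],
        recon_weight=1.0,
    )
    print(f"adversarial={adv:.4f}  reconstruction={recon:.4f}  total={total:.4f}")
```

Setting `recon_weight` to 0 recovers a purely adversarial generator objective, which is the regime the article describes as unstable.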

SIDDM, the predecessor of UFOGen, can generate high-quality images on research datasets such as CIFAR-10 and ImageNet in only 4 steps. But SIDDM leaves two problems open: first, it cannot achieve the ideal of one-step generation; second, extending it to text-to-image generation, which attracts far more attention, is not straightforward. Google's research team proposed UFOGen to solve these two problems.

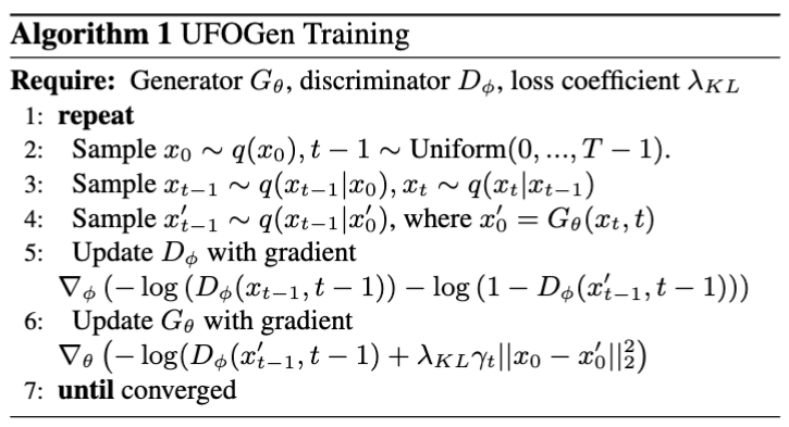

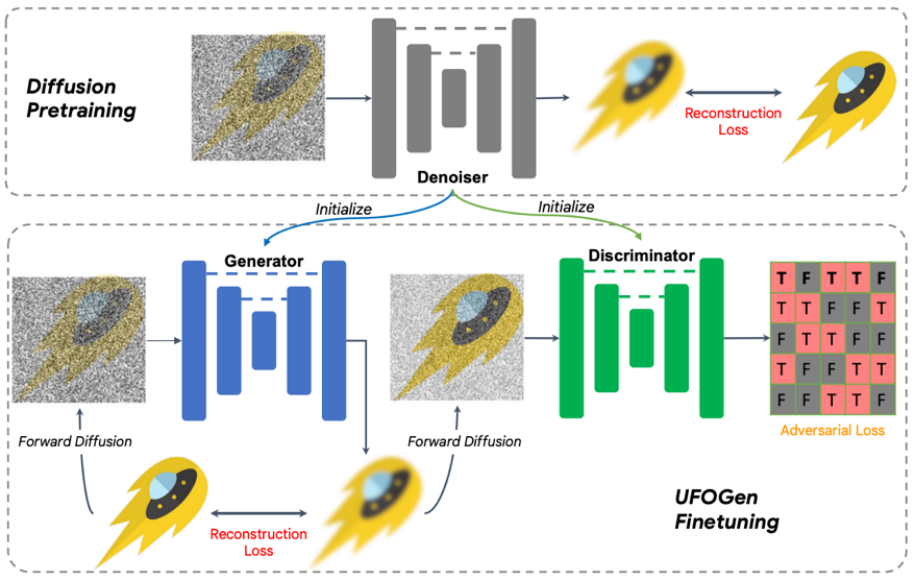

Specifically, for the first problem, the team found through simple mathematical analysis that by changing how the generator is parameterized and how the reconstruction loss is computed, the model can in theory generate in one step. For the second problem, the team proposed initializing from the existing Stable Diffusion model, which lets UFOGen extend to text-to-image tasks faster and better. Notably, SIDDM had already proposed that both the generator and the discriminator adopt a UNet architecture. Building on that design, both the generator and the discriminator of UFOGen are initialized from Stable Diffusion. This makes the most of the knowledge already inside Stable Diffusion, especially about the relationship between images and text, which is difficult to acquire through adversarial learning alone. The training algorithm and diagram are shown in the accompanying figures.
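The sampling side of such a hybrid model can be sketched as below. This is a hedged illustration, not the paper's algorithm: it assumes the generator predicts a clean sample `x0` directly from a noisy input, and that any further steps re-noise that prediction with the forward process before calling the generator again. The function `hybrid_sample`, the toy generator, and the tiny `alpha_bar` table are all hypothetical names and values introduced for the example.

```python
import math
import random


def hybrid_sample(generator, alpha_bar, timesteps, rng):
    """Sample with a generator that predicts the clean sample x0 directly.

    `timesteps` is a decreasing list of noise levels. With a single entry,
    the loop makes exactly one generator call: one-step generation.
    """
    x = rng.gauss(0.0, 1.0)  # start from pure Gaussian noise
    for i, t in enumerate(timesteps):
        x0 = generator(x, t)  # one network call
        if i == len(timesteps) - 1:
            return x0  # last step: return the clean prediction
        # Otherwise re-noise the prediction to the next (lower) noise level.
        a_next = alpha_bar[timesteps[i + 1]]
        x = math.sqrt(a_next) * x0 + math.sqrt(1.0 - a_next) * rng.gauss(0.0, 1.0)


class CountingGenerator:
    """Toy stand-in for a trained generator; always predicts the same value."""

    def __init__(self, value=2.0):
        self.value, self.calls = value, 0

    def __call__(self, x_t, t):
        self.calls += 1
        return self.value


if __name__ == "__main__":
    alpha_bar = [0.9, 0.5, 0.1]  # hypothetical signal levels for t = 0, 1, 2
    for steps in ([2], [2, 1, 0]):
        gen = CountingGenerator()
        out = hybrid_sample(gen, alpha_bar, steps, random.Random(0))
        print(f"timesteps={steps}  generator calls={gen.calls}  output={out}")
```

Under these assumptions, one-step generation is just the special case where the list of timesteps contains only the noisiest level.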

It is worth noting that earlier work had already used GANs for text-to-image generation. For example, NVIDIA's StyleGAN-T and Adobe's GigaGAN both scale up the basic StyleGAN architecture, which also lets them generate images in one step. The authors of UFOGen point out that, beyond generation quality, UFOGen has several advantages over this earlier GAN-based work:

1. In text-to-image tasks, pure generative adversarial network (GAN) training is very unstable. The discriminator must judge not only the texture of the image but also how well the image matches the text, which is a very difficult task, especially early in training. Previous GAN models such as GigaGAN therefore introduced a large number of auxiliary losses to help training, which made training and hyperparameter tuning extremely difficult. UFOGen, by introducing a reconstruction loss, gives the GAN only a supporting role, and so achieves very stable training.

2. Training a GAN from scratch is not only unstable but also extremely expensive, especially for tasks like text-to-image generation that require large amounts of data and many training steps. Because two sets of parameters must be updated at the same time, GAN training consumes more time and memory than diffusion model training. UFOGen's design allows its parameters to be initialized from Stable Diffusion, which greatly reduces training time. Convergence usually requires only tens of thousands of training steps.

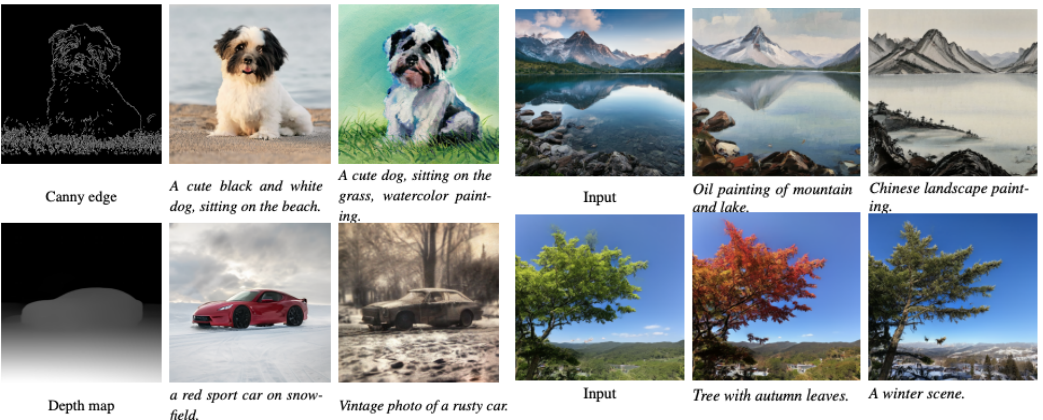

3. One of the attractions of text-to-image diffusion models is that they can be applied to other tasks, including applications that need no fine-tuning, such as image-to-image generation, and applications that do need fine-tuning, such as controllable generation. Previous GAN models have been hard to extend to these downstream tasks because fine-tuning GANs is difficult. UFOGen, in contrast, keeps the framework of a diffusion model and can therefore be applied to these tasks more easily. The accompanying figure shows examples of UFOGen's image-to-image generation and controllable generation. Note that these generations require only one sampling step.

Experiments show that UFOGen needs only one sampling step to generate high-quality images that match their text descriptions. Compared with recently proposed fast sampling methods for diffusion models (such as InstaFlow and LCM), UFOGen is highly competitive. Even compared with Stable Diffusion using 50 sampling steps, the samples generated by UFOGen are visually comparable. The paper presents side-by-side comparison results.

The Google team has proposed a powerful model called UFOGen, obtained by improving on existing hybrid models of diffusion and GANs. The model is fine-tuned from Stable Diffusion and, while able to generate images in one step, remains suitable for different downstream applications. As one of the early works to achieve ultra-fast text-to-image synthesis, UFOGen opens a new path in the field of efficient generative models.
