Since Neural Radiance Fields (NeRF) were proposed in 2020, the number of related papers has grown exponentially. NeRF has not only become an important branch of 3D reconstruction, but is also increasingly active at the research frontier as an important tool for autonomous driving.

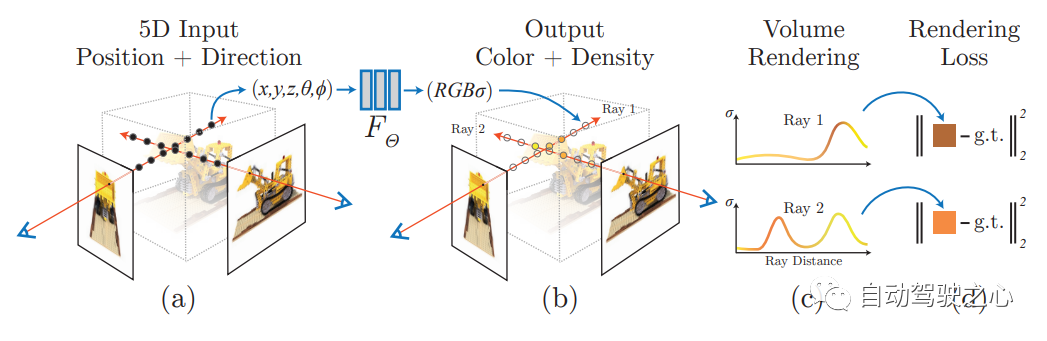

NeRF has risen rapidly over the past two years, mainly because it skips feature point extraction and matching, epipolar geometry and triangulation, PnP plus Bundle Adjustment, and the other steps of the traditional CV reconstruction pipeline. It even skips mesh reconstruction, texturing, and ray tracing: it learns a radiance field directly from 2D input images and then renders images from that radiance field that approximate real photos. In other words, an implicit 3D model based on a neural network is fitted to 2D images taken from specified viewpoints, which gives it the capability of novel view synthesis. The development of NeRF is also closely tied to autonomous driving, specifically in real scene reconstruction and autonomous driving simulators. NeRF is good at rendering photorealistic images, so street scenes modeled with NeRF can provide highly realistic training data for autonomous driving. NeRF maps can also be edited to combine buildings, vehicles, and pedestrians into corner cases that are difficult to capture in reality, and these can be used to test the performance of algorithms such as perception, planning, and obstacle avoidance. NeRF is therefore both a branch of 3D reconstruction and a modeling tool, and mastering it has become an indispensable skill for researchers working on reconstruction or autonomous driving.
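To make the "render an image from the radiance field" step more concrete, here is a minimal sketch of the standard NeRF volume rendering quadrature for a single ray. The function name `render_ray` and the toy sample values are assumptions made for illustration; in a real NeRF the densities and colors come from the trained network rather than from hand-written lists.

```python
import math

def render_ray(densities, colors, deltas):
    """Composite per-sample densities and RGB colors along one ray."""
    transmittance = 1.0  # fraction of light that has not been absorbed so far
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        pixel = [p + weight * c for p, c in zip(pixel, rgb)]
        transmittance *= 1.0 - alpha
    return pixel, transmittance

# Toy ray: empty space, then a dense red surface, then a green sample behind it.
pixel, remaining = render_ray(
    densities=[0.0, 50.0, 50.0],
    colors=[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[0.1, 0.1, 0.1],
)
```

In this toy ray the pixel comes out almost entirely red, because the first dense sample absorbs nearly all of the light before it reaches the green sample behind it.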

Today I will sort out the work related to NeRF and autonomous driving. Nearly 11 papers will take you through the past and present of NeRF and autonomous driving.

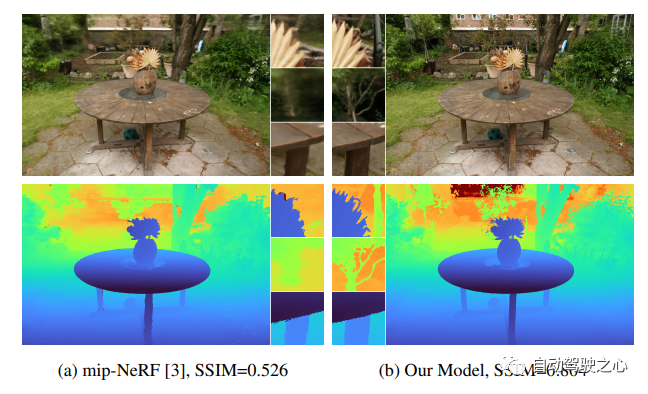

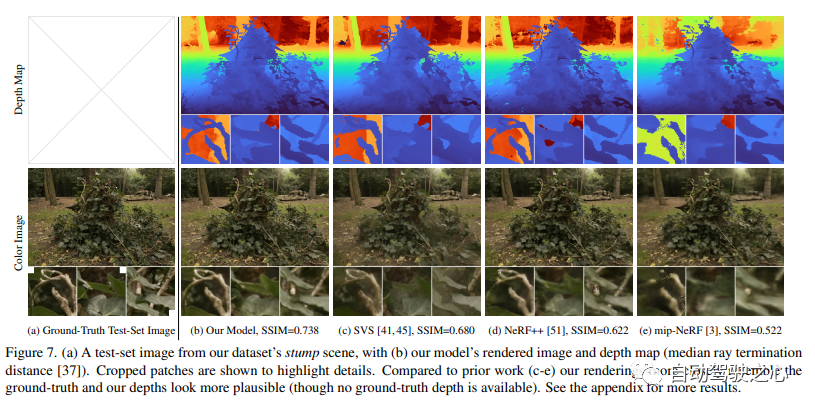

2.Mip-NeRF 360

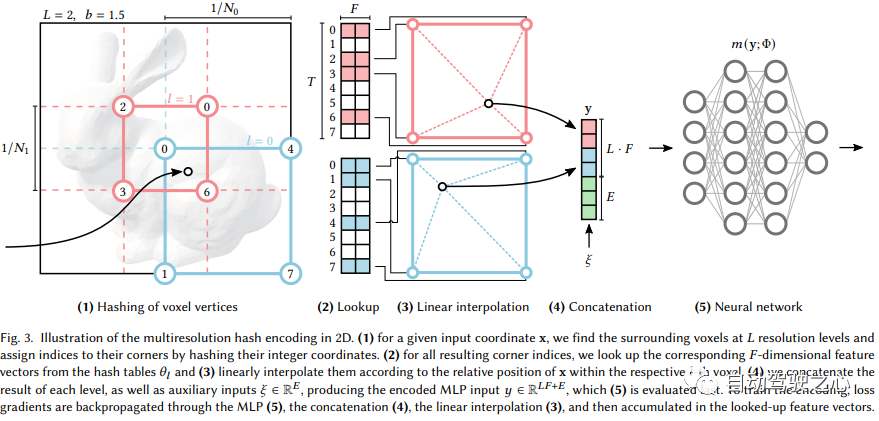

3.Instant-NGP

Link: https://nvlabs.github.io/instant-ngp

Let us first take a look at the similarities and differences between Instant-NGP and NeRF:

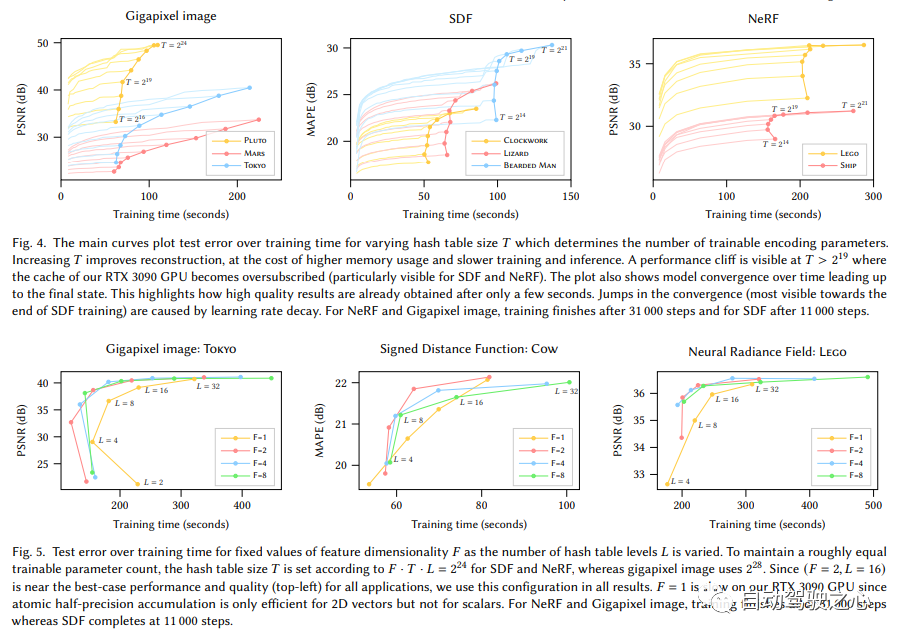

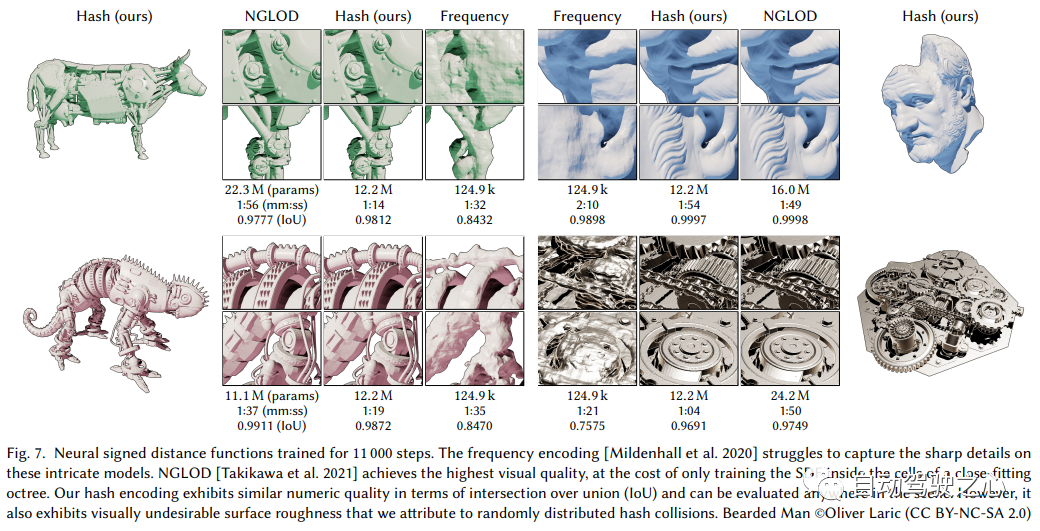

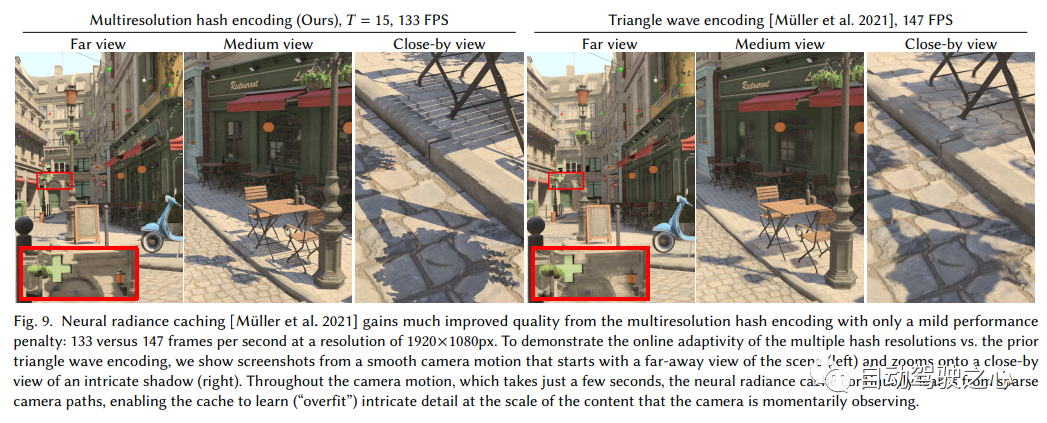

As can be seen, the overall framework is still the same. The most important difference is that NGP uses a parameterized voxel grid as the scene representation. Through training, the parameters stored in the voxels come to encode the shape of the scene's density. The biggest problem with an MLP is that it is slow: reconstructing a scene with high quality often requires a relatively large network, and passing every sample point through that network takes a lot of time. Interpolating within a grid is much faster. However, a grid that represents a scene with high precision needs high-density voxels, which leads to extremely high memory usage. Since much of a scene is empty space, NVIDIA proposed a sparse structure to represent the scene.
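The sparse structure that Instant-NGP introduces is a multiresolution hash encoding: each resolution level stores its features in a fixed-size hash table instead of a dense grid. The sketch below is a simplified pure-Python illustration of the idea, not NVIDIA's implementation. The hash multipliers and the initialization range follow the Instant-NGP paper, while `base_res`, `growth`, the table sizes, and all function names are assumptions chosen for readability. Unlike the original, every level is hashed here, including the coarse ones.

```python
import random

PRIMES = (1, 2654435761, 805459861)  # per-axis hash multipliers from the paper

def hash_corner(ix, iy, iz, table_size):
    """Spatial hash: XOR the integer grid coordinates scaled by large primes."""
    return ((ix * PRIMES[0]) ^ (iy * PRIMES[1]) ^ (iz * PRIMES[2])) % table_size

def encode(point, tables, base_res=4, growth=2.0):
    """Concatenate trilinearly interpolated features from every level."""
    features = []
    for level, table in enumerate(tables):
        res = int(base_res * growth ** level)
        scaled = [c * res for c in point]            # point assumed in [0, 1]^3
        lo = [min(int(s), res - 1) for s in scaled]  # lower corner of the cell
        frac = [s - l for s, l in zip(scaled, lo)]
        level_feat = [0.0] * len(table[0])
        for corner in range(8):                      # the 8 corners of the cell
            offs = [(corner >> axis) & 1 for axis in range(3)]
            weight = 1.0
            for axis in range(3):
                weight *= frac[axis] if offs[axis] else 1.0 - frac[axis]
            index = hash_corner(lo[0] + offs[0], lo[1] + offs[1],
                                lo[2] + offs[2], len(table))
            level_feat = [f + weight * e for f, e in zip(level_feat, table[index])]
        features.extend(level_feat)
    return features

# Hypothetical configuration: 4 levels, 1024 entries per table, 2 features each.
rng = random.Random(0)
num_levels, table_size, feat_dim = 4, 2 ** 10, 2
tables = [[[rng.uniform(-1e-4, 1e-4) for _ in range(feat_dim)]
           for _ in range(table_size)] for _ in range(num_levels)]
encoded = encode((0.3, 0.7, 0.5), tables)  # 4 levels x 2 features = 8 values
```

Memory is bounded by the table size rather than by the grid resolution, which is what lets a fine grid stay affordable. The price is that different grid corners can collide in the same table entry. The encoded vector is then fed to a much smaller MLP than the one used in the original NeRF.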

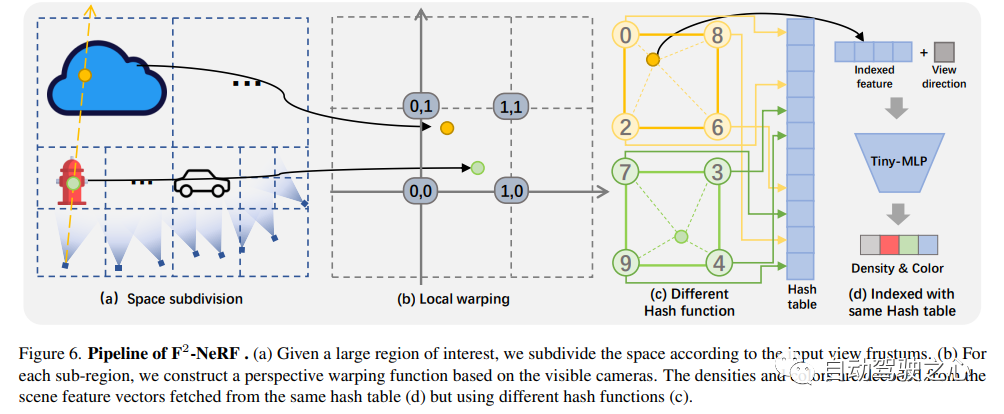

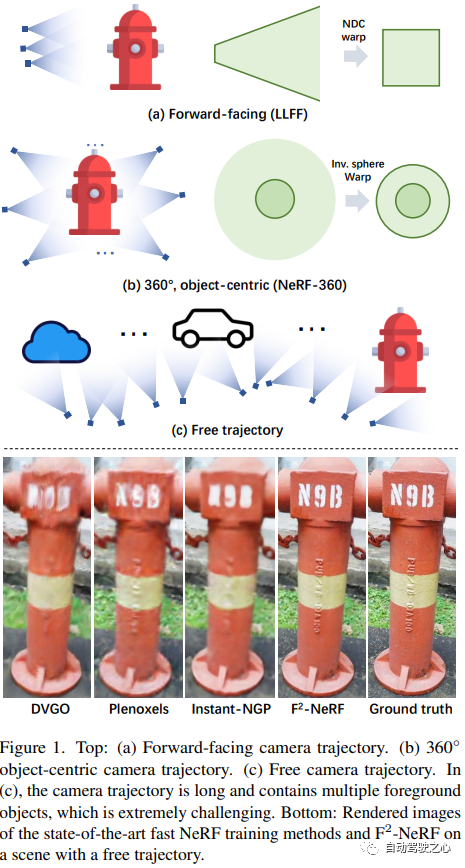

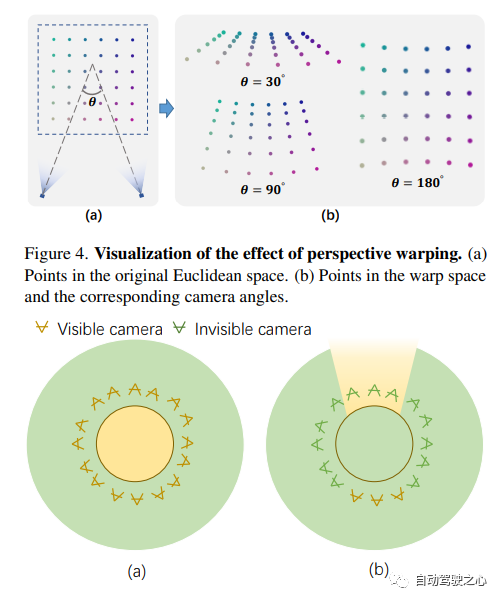

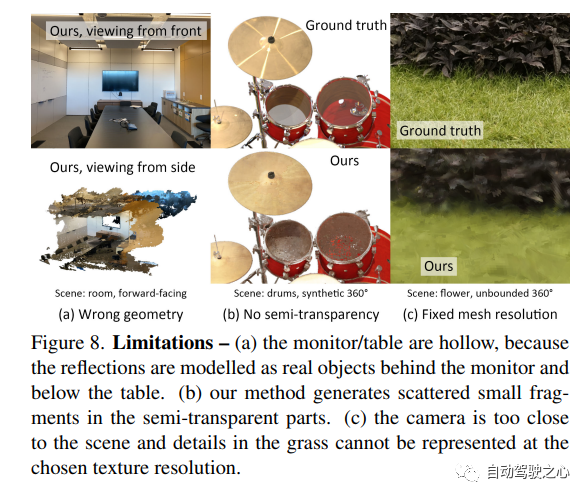

F2-NeRF (Fast-Free-NeRF) is a new grid-based NeRF for novel view synthesis that supports arbitrary input camera trajectories and needs only a few minutes of training. Existing fast grid-based NeRF training frameworks, such as Instant-NGP, Plenoxels, DVGO, or TensoRF, are mainly designed for bounded scenes and rely on space warping to handle unbounded scenes. The two widely used space warping methods target only forward-facing trajectories or 360° object-centered trajectories and cannot handle arbitrary trajectories. The paper studies in depth how space warping handles unbounded scenes and proposes a new space warping method, perspective warping, which makes it possible to handle arbitrary trajectories within the grid-based NeRF framework. Extensive experiments show that F2-NeRF renders high-quality images with the same perspective warping on two standard datasets and on a new free-trajectory dataset collected by the authors.
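As a point of reference for what "space warping" means, the sketch below shows a scene contraction of the kind used by Mip-NeRF 360 for 360° object-centered captures. It maps all of unbounded space into a ball of radius 2 so that a bounded grid can cover it. This is one of the existing warps that the paragraph contrasts against, not F2-NeRF's perspective warping, and the function name `contract` is an assumption.

```python
import math

def contract(point):
    """Leave points inside the unit ball unchanged; squash the rest into radius 2."""
    norm = math.sqrt(sum(c * c for c in point))
    if norm <= 1.0:
        return tuple(point)
    scale = (2.0 - 1.0 / norm) / norm  # new radius is 2 - 1/norm, direction kept
    return tuple(c * scale for c in point)

near = contract((0.5, 0.0, 0.0))  # inside the unit ball: returned unchanged
far = contract((10.0, 0.0, 0.0))  # distant point: pulled in to radius 1.9
```

A warp like this allocates most of the grid's resolution around the origin, which suits captures that orbit a central object but not a camera that wanders freely through the scene.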

MobileNeRF: Exploiting the Polygon Rasterization Pipeline for Efficient Neural Field Rendering on Mobile Architectures.

Paper link: https://arxiv.org/pdf/2208.00277.pdf

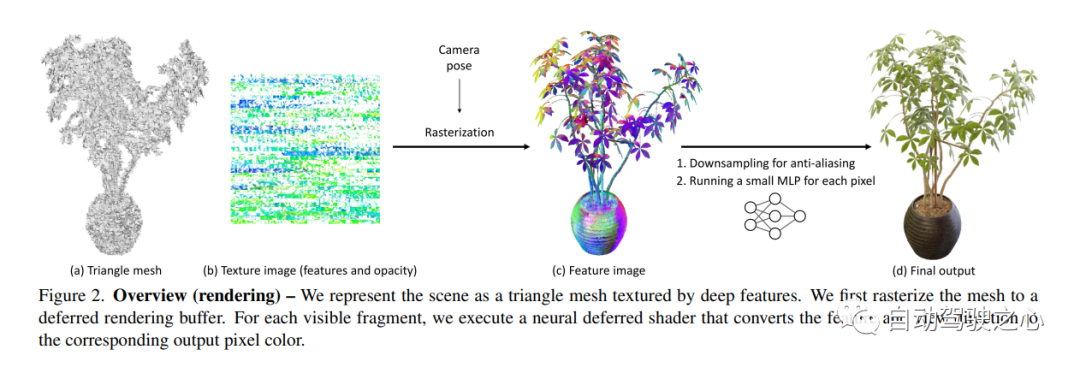

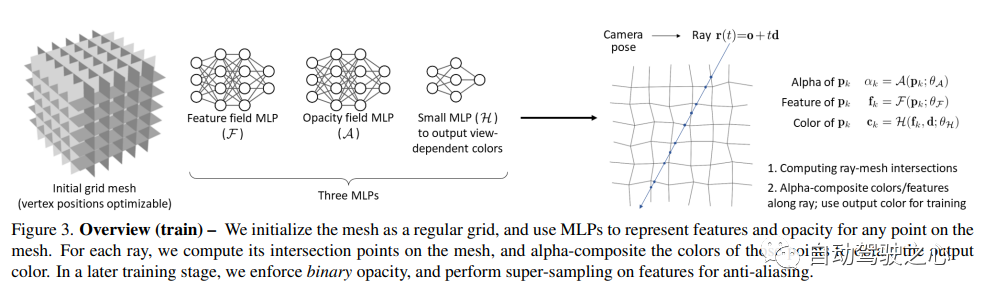

Neural Radiance Fields (NeRF) have demonstrated an amazing ability to synthesize images of 3D scenes from novel views. However, they rely on specialized volumetric rendering algorithms based on ray marching that do not match the capabilities of widely deployed graphics hardware. This paper introduces a new NeRF representation based on textured polygons that can synthesize novel images efficiently with standard rendering pipelines. The NeRF is represented as a set of polygons whose textures store binary opacities and feature vectors. Traditional rendering of the polygons with a z-buffer yields an image with a feature vector at every pixel, which a small view-dependent MLP running in a fragment shader interprets to produce the final pixel color. This approach lets NeRFs be rendered with the traditional polygon rasterization pipeline, which provides massive pixel-level parallelism and achieves interactive frame rates on a wide range of computing platforms, including mobile phones.
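The last step described above, a small MLP that turns a rasterized per-pixel feature vector plus the view direction into a color, can be sketched roughly as follows. This is an illustrative stand-in rather than MobileNeRF's shader code: the layer sizes, the random weights, and every function name are assumptions, and a real deployment would run the equivalent logic in a fragment shader with trained weights.

```python
import math
import random

def dense(inputs, weights, biases):
    """One fully connected layer: weights is a list of rows, one per output."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def shade_pixel(feature, view_dir, layers):
    """Decode one rasterized pixel: feature vector + view direction -> RGB."""
    hidden = list(feature) + list(view_dir)
    for weights, biases in layers[:-1]:
        hidden = [max(0.0, h) for h in dense(hidden, weights, biases)]  # ReLU
    weights, biases = layers[-1]
    # Sigmoid keeps each output channel inside (0, 1).
    return [1.0 / (1.0 + math.exp(-v)) for v in dense(hidden, weights, biases)]

def random_layer(rng, n_in, n_out):
    """Untrained placeholder weights, for illustration only."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
# Hypothetical sizes: 8 feature channels + 3 view components -> 16 -> 16 -> RGB.
layers = [random_layer(rng, 8 + 3, 16), random_layer(rng, 16, 16),
          random_layer(rng, 16, 3)]
feature = [rng.random() for _ in range(8)]  # would be fetched from the texture
rgb = shade_pixel(feature, (0.0, 0.0, 1.0), layers)
```

Because every pixel is decoded independently from its own feature vector, the work parallelizes per pixel, which matches how fragment shaders are executed.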

Our real-time visual localization and NeRF mapping work has been accepted to CVPR 2023

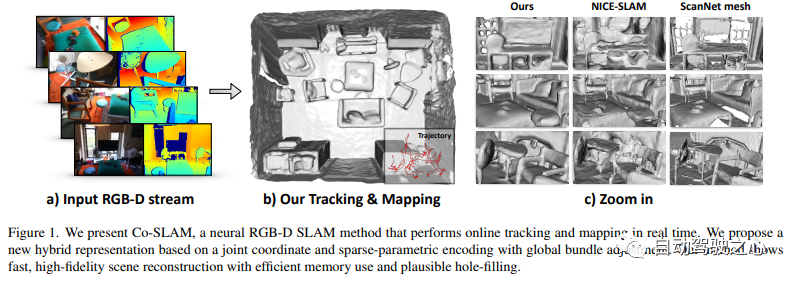

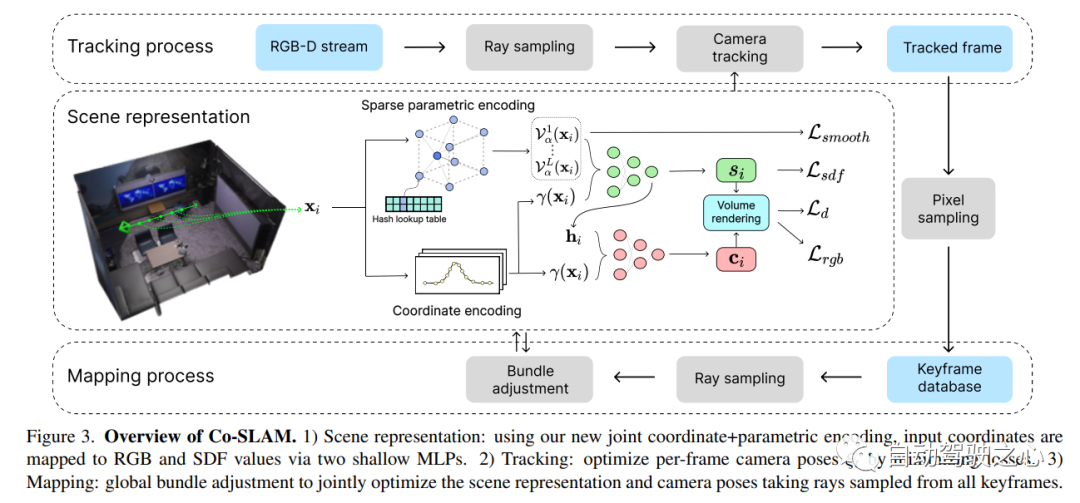

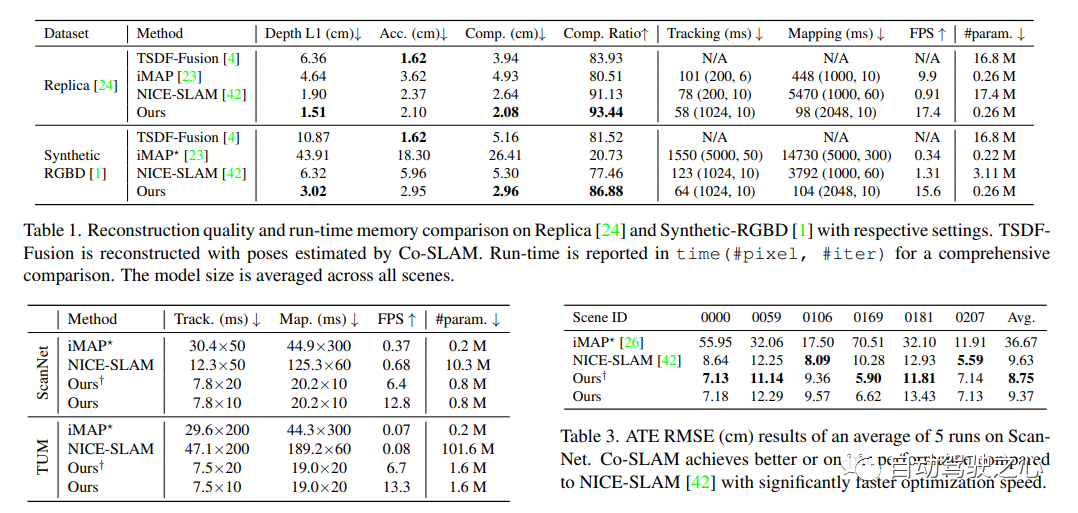

Co-SLAM: Joint Coordinate and Sparse Parametric Encodings for Neural Real-Time SLAM

Paper link: https://arxiv.org/pdf/2304.14377.pdf

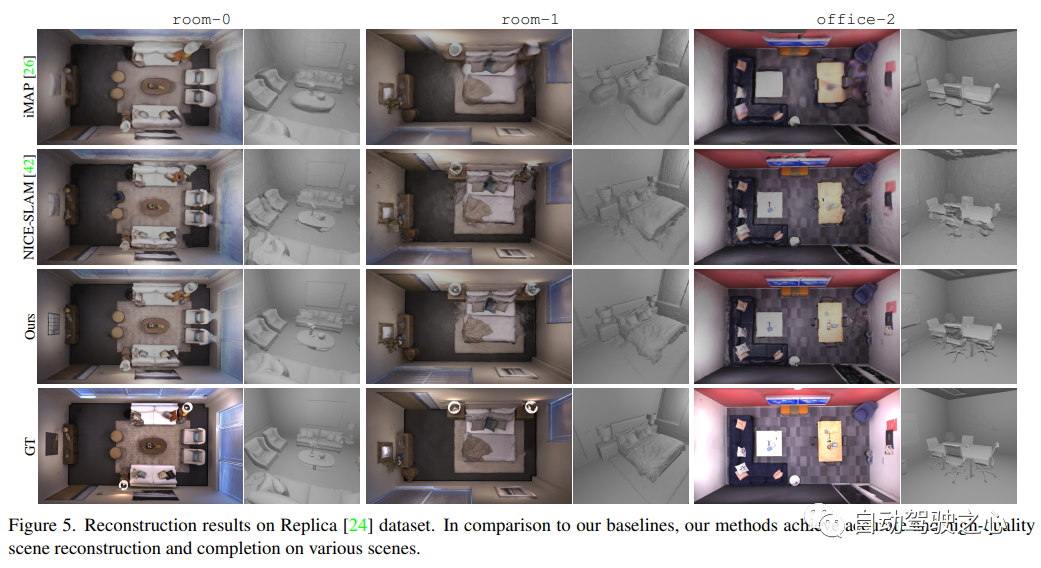

Co-SLAM is a real-time RGB-D SLAM system that uses neural implicit representations for camera tracking and high-fidelity surface reconstruction. Co-SLAM represents the scene as a multi-resolution hash grid to exploit its fast convergence and its ability to represent local features. In addition, to incorporate a surface coherence prior, Co-SLAM uses one-blob encoding, which is shown to enable strong scene completion in unobserved areas. The joint encoding combines the advantages of both: speed, high-fidelity reconstruction, and a surface coherence prior. Thanks to its ray sampling strategy, Co-SLAM is able to perform global bundle adjustment over all keyframes.

Paper link: https://arxiv.org/pdf/2307.15058.pdf

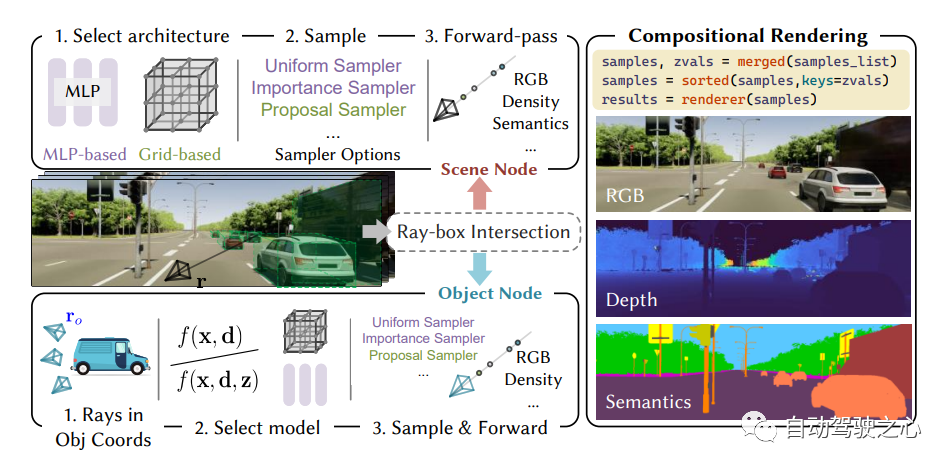

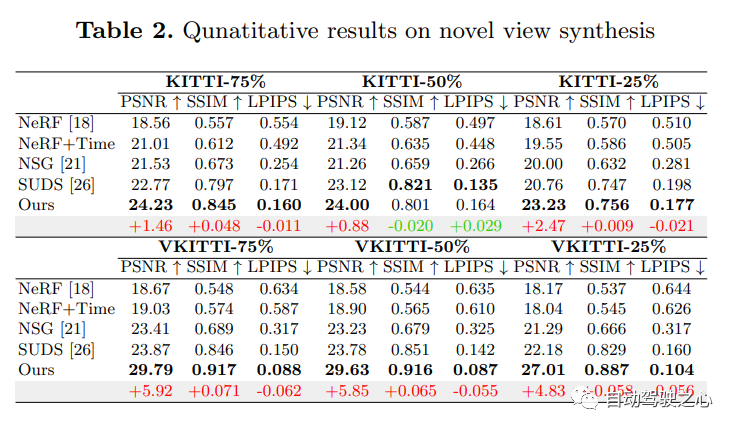

Self-driving cars can already drive smoothly under ordinary circumstances, and it is generally believed that realistic sensor simulation will play a key role in resolving the remaining corner cases. To this end, MARS proposes an autonomous driving simulator based on neural radiance fields. Compared with existing work, MARS has three distinctive features: (1) Instance awareness. The simulator models the foreground instances and the background environment with separate networks, so that the static (e.g., size and appearance) and dynamic (e.g., trajectory) characteristics of the instances can be controlled separately. (2) Modularity. The simulator allows flexible switching between different modern NeRF-related backbones, sampling strategies, input modalities, and so on. The hope is that this modular design will promote academic progress and industrial deployment of NeRF-based autonomous driving simulation. (3) Realism. The simulator achieves state-of-the-art photorealistic results when the best modules are selected.

The most important point is: open source!

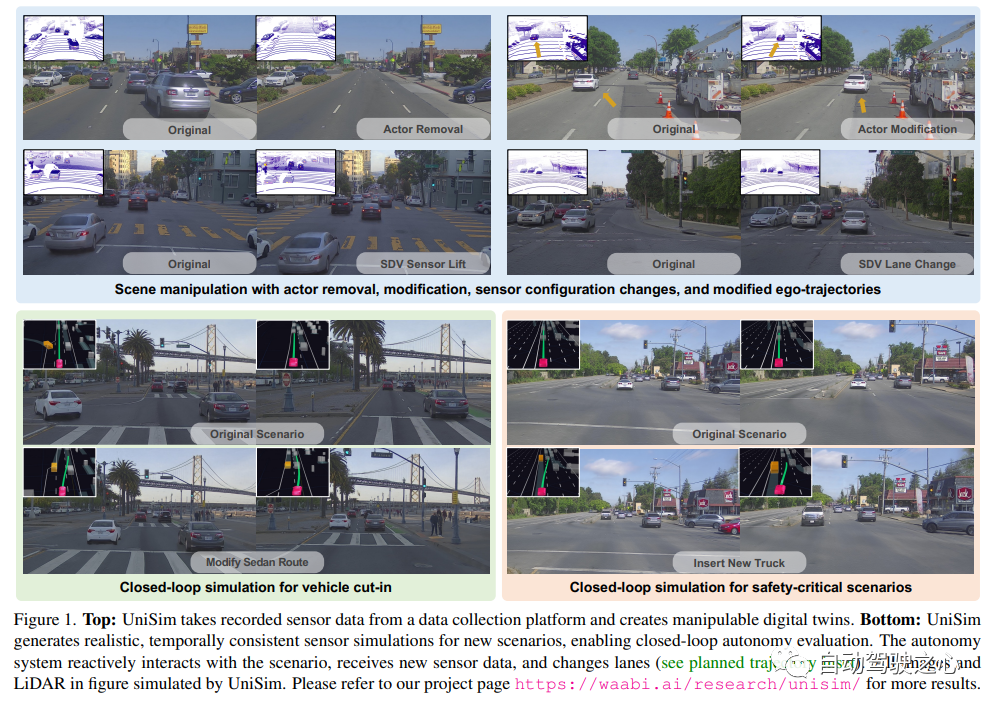

UniSim: A neural closed-loop sensor simulator

Paper link: https://arxiv.org/pdf/2308.01898.pdf

An important obstacle to the widespread adoption of autonomous driving is that safety is still insufficient. The real world is too complex, especially given the long-tail effect. Edge-case scenarios are critical to safe driving; they are diverse but rarely encountered. Testing the performance of autonomous driving systems in these scenarios is very difficult, because they are hard to encounter and very expensive and dangerous to test in the real world.

To address this challenge, both industry and academia have begun to pay attention to the development of simulation systems. At first, simulation systems mainly focused on simulating the motion of other vehicles and pedestrians in order to test the accuracy of the autonomous driving planning module. In recent years, the research focus has gradually shifted to sensor-level simulation, that is, generating raw data such as lidar data and camera images, to enable end-to-end testing of autonomous driving systems from perception and prediction through to planning.

Unlike previous work, UniSim achieves the following simultaneously for the first time:

UniSim first reconstructs the autonomous driving scene in the digital world from the collected data, including cars, pedestrians, roads, buildings, and traffic signs. It then controls the reconstructed scene for simulation to generate rare, safety-critical scenarios.

Closed-loop simulation: UniSim can perform closed-loop simulation testing. First, by controlling the behavior of a car, UniSim can create a dangerous and rare scenario, for example a car suddenly approaching head-on in the current lane. UniSim then simulates and generates the corresponding sensor data; the autonomous driving system is run and outputs its path planning result; based on that result, the autonomous vehicle moves to the next planned location and the scene is updated (the positions of the autonomous vehicle and the other vehicles). The simulation then continues: run the autonomous driving system, update the virtual world state, and so on. Through this closed-loop testing, the autonomous driving system and the simulation environment interact to create scenarios completely different from the original data.
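The loop described above (simulate sensors, run the driving system, move the vehicle, update the scene, repeat) can be sketched with a toy one-dimensional world. Everything here is hypothetical and unrelated to UniSim's actual code: `simulate_sensor` stands in for neural sensor rendering, `plan` stands in for the full autonomy stack, and the scripted oncoming actor plays the role of the rare scenario.

```python
from dataclasses import dataclass

@dataclass
class World:
    ego_pos: float      # ego vehicle position along the lane (m)
    actor_pos: float    # scripted actor position along the lane (m)
    actor_speed: float  # scripted actor speed (m/s); negative means oncoming

def simulate_sensor(world):
    """Stand-in for sensor simulation: here just the gap to the actor."""
    return world.actor_pos - world.ego_pos

def plan(gap, cruise_speed=10.0, safe_gap=20.0):
    """Stand-in for the system under test: stop once the gap gets too small."""
    return cruise_speed if gap > safe_gap else 0.0

def run_closed_loop(world, steps, dt=0.1):
    gaps = []
    for _ in range(steps):
        gap = simulate_sensor(world)               # 1. generate sensor data
        ego_speed = plan(gap)                      # 2. run the driving system
        world.ego_pos += ego_speed * dt            # 3. move the ego as planned
        world.actor_pos += world.actor_speed * dt  # 4. update the other actors
        gaps.append(gap)
    return gaps

world = World(ego_pos=0.0, actor_pos=100.0, actor_speed=-5.0)
gaps = run_closed_loop(world, steps=80)
```

The point of closing the loop is that the ego vehicle's own decisions change what it observes next, so the simulated run can diverge from the recorded log instead of replaying it.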
