Original title: Cross-Dataset Experimental Study of Radar-Camera Fusion in Bird's-Eye View

Paper link: https://arxiv.org/pdf/2309.15465.pdf

Author affiliations: Opel Automobile GmbH; Rheinland-Pfälzische Technische Universität Kaiserslautern-Landau; German Research Center for Artificial Intelligence

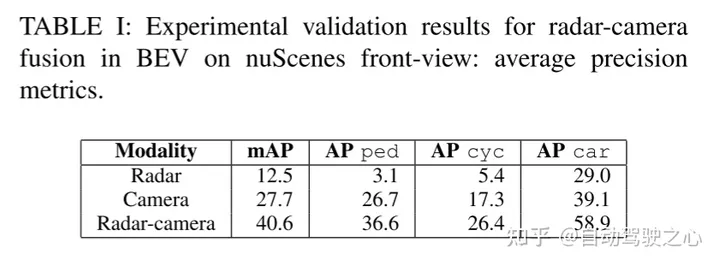

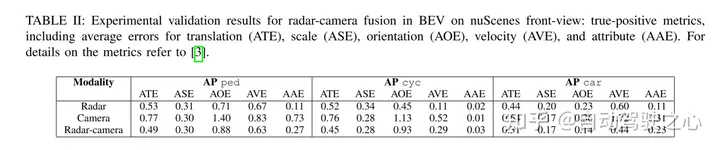

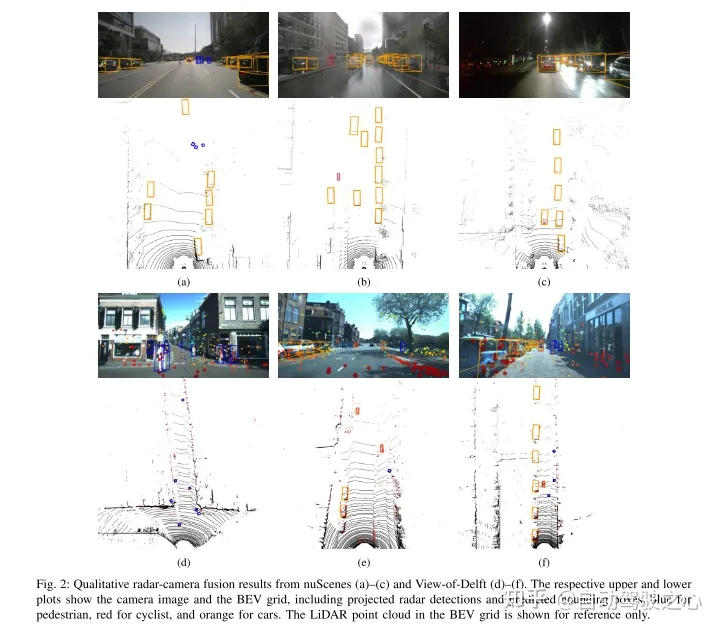

By leveraging complementary sensor information, millimeter-wave radar and camera fusion systems have the potential to provide highly robust and reliable perception for advanced driver assistance systems and autonomous driving functions. Recent advances in camera-based object detection open up new possibilities for radar-camera fusion, which can be performed on bird's-eye-view (BEV) feature maps. This study proposes a novel and flexible fusion network and evaluates its performance on two datasets, nuScenes and View-of-Delft. The experimental results show that while the camera branch needs large and diverse training data, the radar branch benefits more from a high-performance radar. Using transfer learning, the study improves camera performance on the smaller dataset. The results further show that radar-camera fusion significantly outperforms the camera-only and radar-only baselines.

A recent trend in 3D object detection is to transform image features into a common bird's-eye view (BEV) representation. This representation allows a flexible fusion architecture, in which features can be fused across multiple cameras or with ranging sensors. In this work, the authors extend the BEVFusion method, originally developed for lidar-camera fusion, to millimeter-wave radar-camera fusion. They train and evaluate the proposed fusion method on the selected radar datasets and, across several experiments, discuss the advantages and disadvantages of each dataset. Finally, they apply transfer learning to achieve further improvements.

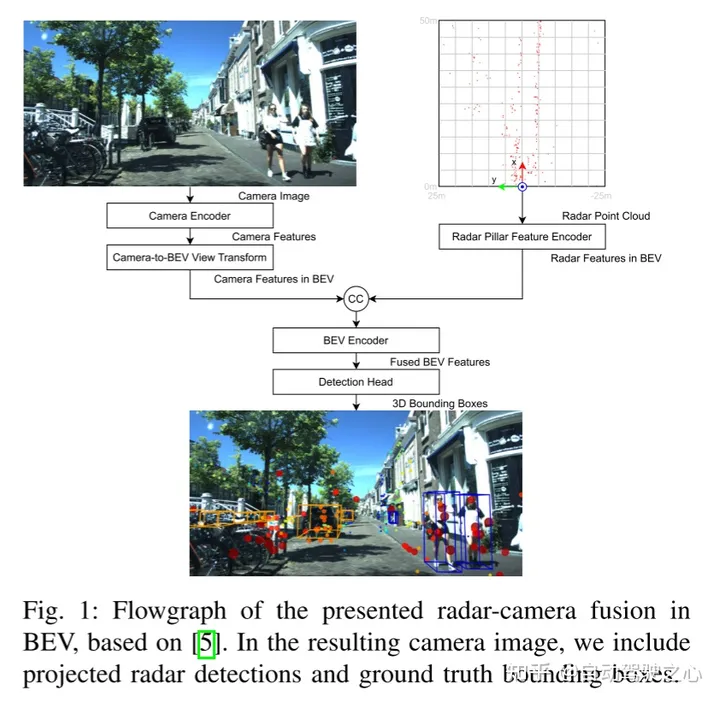

Figure 1: BEV millimeter-wave radar-camera fusion pipeline based on BEVFusion. The resulting camera image includes the projected radar detections and the ground-truth bounding boxes.

The paper follows the fusion architecture of BEVFusion. Figure 1 gives an overview of the network for millimeter-wave radar-camera fusion in BEV. Note that the fusion takes place where the camera and radar features are concatenated in BEV. Further details on each block are given below.

A. Camera encoder and camera-to-BEV view transformation

The camera encoder and view transformation follow [15], a flexible framework that can extract image BEV features for arbitrary camera extrinsic and intrinsic parameters. First, features are extracted from each image with a tiny Swin Transformer network. Next, the Lift and Splat steps of [14] transform the image features to the BEV plane: a dense depth prediction is followed by a rule-based block in which the features are converted into a pseudo point cloud, rasterized, and accumulated into the BEV grid.
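The Lift and Splat steps can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `lift_splat`, the parameter names (`K`, `cam_to_ego`, `grid_min`, `cell`), and the discrete depth bins are assumptions chosen for illustration, and the depth logits would come from a learned depth head in practice.

```python
import numpy as np

def lift_splat(feats, depth_logits, depth_bins, K, cam_to_ego,
               grid_min, cell, grid_shape):
    """Hypothetical sketch. feats: (C, H, W) image features,
    depth_logits: (D, H, W), depth_bins: (D,) depths in metres,
    K: (3, 3) intrinsics, cam_to_ego: (4, 4) extrinsics.
    Returns BEV features of shape (C, X, Y)."""
    C, H, W = feats.shape
    # Softmax over the depth axis gives a per-pixel depth distribution.
    z = depth_logits - depth_logits.max(axis=0, keepdims=True)
    prob = np.exp(z)
    prob /= prob.sum(axis=0, keepdims=True)
    # Lift: weight each pixel feature by its depth probabilities -> (D, H, W, C).
    lifted = prob[..., None] * feats.transpose(1, 2, 0)[None]
    # Back-project every pixel to a ray with z = 1 in the camera frame.
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    pix = np.stack([u, v, np.ones_like(u)]).reshape(3, -1).astype(float)
    rays = np.linalg.inv(K) @ pix                       # (3, H*W)
    # Scale rays by each depth bin to get the pseudo point cloud.
    pts_cam = rays[None] * depth_bins[:, None, None]    # (D, 3, H*W)
    pts_cam = pts_cam.transpose(0, 2, 1).reshape(-1, 3) # (D*H*W, 3)
    pts_ego = pts_cam @ cam_to_ego[:3, :3].T + cam_to_ego[:3, 3]
    # Splat: rasterize x/y into grid cells and sum features per cell.
    ix = np.floor((pts_ego[:, 0] - grid_min[0]) / cell).astype(int)
    iy = np.floor((pts_ego[:, 1] - grid_min[1]) / cell).astype(int)
    X, Y = grid_shape
    keep = (ix >= 0) & (ix < X) & (iy >= 0) & (iy < Y)
    bev = np.zeros((X, Y, C))
    np.add.at(bev, (ix[keep], iy[keep]), lifted.reshape(-1, C)[keep])
    return bev.transpose(2, 0, 1)
```

Because the depth probabilities of each pixel sum to one, the total feature mass is preserved as long as all lifted points fall inside the grid. Points outside the grid are simply dropped.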

B. Radar pillar feature encoder

The purpose of this block is to encode the millimeter-wave radar point cloud into BEV features on the same grid as the image BEV features. To this end, the paper uses the pillar feature encoding technique of [16], which rasterizes the point cloud into voxels of unbounded height, the so-called pillars.
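A simplified sketch of pillar encoding in NumPy follows. It assumes a single linear layer with ReLU as the per-point embedding and max-pooling over the points in each pillar. The function name `pillar_encode` and the `weight` matrix are hypothetical stand-ins for learned parameters, and the per-pillar offset features used in full pillar encoders are omitted.

```python
import numpy as np

def pillar_encode(points, weight, grid_min, cell, grid_shape):
    """Hypothetical sketch. points: (N, F) radar points whose first two
    columns are x and y; weight: (F, C) stand-in for a learned linear layer.
    Returns BEV features of shape (C, X, Y)."""
    X, Y = grid_shape
    # Assign each point to a pillar using only x and y (height is ignored).
    ix = np.floor((points[:, 0] - grid_min[0]) / cell).astype(int)
    iy = np.floor((points[:, 1] - grid_min[1]) / cell).astype(int)
    keep = (ix >= 0) & (ix < X) & (iy >= 0) & (iy < Y)
    ix, iy, pts = ix[keep], iy[keep], points[keep]
    # Per-point embedding: linear layer followed by ReLU.
    emb = np.maximum(pts @ weight, 0.0)                 # (M, C)
    # Max-pool over all points in the same pillar. Embeddings are
    # non-negative after ReLU, so a zero-initialized grid is a safe start.
    bev = np.zeros((X, Y, weight.shape[1]))
    np.maximum.at(bev, (ix, iy), emb)
    return bev.transpose(2, 0, 1)
```

Using the same `grid_min`, `cell`, and `grid_shape` as the camera branch is what places both modalities on a common BEV grid.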

C. BEV encoder

Similar to [5], the radar and camera BEV features are fused by concatenation. The fused features are then processed by a joint convolutional BEV encoder, which allows the network to account for spatial misalignment and to exploit synergies between the modalities.
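Concatenation fusion followed by a convolution can be sketched as below. This is an illustration under assumptions, not the paper's encoder: a single 3x3 convolution with ReLU stands in for the multi-layer BEV encoder, and `fuse_and_encode` and `kernel` are hypothetical names.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def fuse_and_encode(cam_bev, radar_bev, kernel):
    """Hypothetical sketch. cam_bev: (Cc, X, Y), radar_bev: (Cr, X, Y),
    kernel: (Cout, Cc + Cr, 3, 3) stand-in for learned weights.
    Returns encoded BEV features of shape (Cout, X, Y)."""
    # Fusion: stack both modalities along the channel axis.
    fused = np.concatenate([cam_bev, radar_bev], axis=0)
    # Zero-pad so the 3x3 convolution keeps the grid size.
    padded = np.pad(fused, ((0, 0), (1, 1), (1, 1)))
    # All 3x3 neighbourhoods: shape (Cin, X, Y, 3, 3).
    windows = sliding_window_view(padded, (3, 3), axis=(1, 2))
    # Each output cell mixes camera and radar features from its neighbours.
    out = np.einsum("cxyij,ocij->oxy", windows, kernel)
    return np.maximum(out, 0.0)
```

Since every output cell sees a neighbourhood of both modalities, a radar feature that lands one cell away from the matching camera feature can still contribute, which is one way to read the paper's remark about spatial misalignment.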

D. Detection Head

The paper uses the CenterPoint detection head to predict a heatmap of object centers for each class. Additional regression heads predict the size, rotation, and height of the objects, as well as the velocity and class attributes for nuScenes. The heatmap is trained with a Gaussian focal loss, and the remaining heads are trained with an L1 loss.
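The two loss terms can be written out in a short NumPy sketch. It assumes the penalty-reduced focal loss as formulated for CenterNet-style heatmaps, with exponents `alpha=2` and `beta=4`. The source does not state the exponents or the Gaussian radius, so these values and all function names are assumptions.

```python
import numpy as np

def gaussian_heatmap(shape, center, sigma):
    """Target heatmap with a Gaussian peak of value 1 at the object center."""
    xs = np.arange(shape[0])[:, None]
    ys = np.arange(shape[1])[None, :]
    d2 = (xs - center[0]) ** 2 + (ys - center[1]) ** 2
    return np.exp(-d2 / (2 * sigma ** 2))

def gaussian_focal_loss(pred, target, alpha=2.0, beta=4.0, eps=1e-12):
    """Focal loss on a heatmap; alpha and beta are assumed defaults."""
    pos = target == 1.0
    # Center cells: penalize low confidence.
    pos_loss = -np.log(pred + eps) * (1 - pred) ** alpha * pos
    # Other cells: penalize high confidence, less so near a center.
    neg_loss = (-np.log(1 - pred + eps) * pred ** alpha
                * (1 - target) ** beta * (~pos))
    # Normalize by the number of object centers.
    return (pos_loss.sum() + neg_loss.sum()) / max(pos.sum(), 1)

def l1_loss(pred, target):
    """Mean absolute error for the regression heads."""
    return np.abs(pred - target).mean()
```

The `(1 - target) ** beta` factor reduces the penalty for cells close to a true center, so a prediction that is off by one cell is punished less than one far from any object.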


Stäcker, L., Heidenreich, P., Rambach, J., & Stricker, D. (2023). Cross-Dataset Experimental Study of Radar-Camera Fusion in Bird's-Eye View. arXiv:2309.15465.

Original link: https://mp.weixin.qq.com/s/5mA5up5a4KJO2PBwUcuIdQ
