Alibaba final interview: 1 million login requests per day, 8G of memory. How would you set the JVM parameters?


Over the years, I have revised more than 100 resumes and conducted more than 200 mock interviews.

Just last week, a student was asked this question in the final technical interview at Alibaba Cloud: assume a platform with 1 million login requests per day and service nodes with 8G of memory. How would you set the JVM parameters? If you are not satisfied with your own answer, feel free to ask me to review it.


Below, the answer is organized as a series of interview questions, killing two birds with one stone: use it both as a practical reference and as interview preparation.

Beyond the JVM configuration plan itself, what is worth learning is the way the problem is analyzed and the perspective from which it is approached. These habits of thought will take you much further.

Next, let’s get to the point.

How to set JVM parameters for 1 million login requests per day and 8G memory?

Setting JVM parameters for 1 million login requests per day on 8G machines can be roughly divided into the following 8 steps.

Step 1: How to plan capacity when a new system goes online?

1. The general approach

Before any new business system goes online, its server configuration and JVM memory parameters need to be estimated. This capacity and resource planning is not a random guess by the system architect: it should be derived from the business scenario the system serves, by inferring an operating model for the system and evaluating indicators such as JVM performance and GC frequency. Below are the modeling steps I have summarized from experienced engineers' advice and my own practice:

1. Estimate how much memory the objects created by the business system occupy per second, then how much each instance in the cluster allocates per second (the object creation rate).
2. Pick a machine configuration, estimate the size of the young generation, and compare how often Minor GC would be triggered with different young generation sizes.
3. To avoid frequent GC, re-estimate how many machines are needed, what configuration each needs, how much memory to give the JVM, and how much of that to give the young generation.
4. With this configuration, you can work out the operating model of the whole system: how many objects are created per second, which of them become garbage after 1 second, and, as the system runs, how often the young generation triggers a GC.

2. Putting the approach into practice: a login system as an example

Some readers may still be confused after seeing these steps: it all sounds reasonable, but how do you actually do it in a real project?

Talk is cheap, so let's take a login system as an example and walk through the estimation:

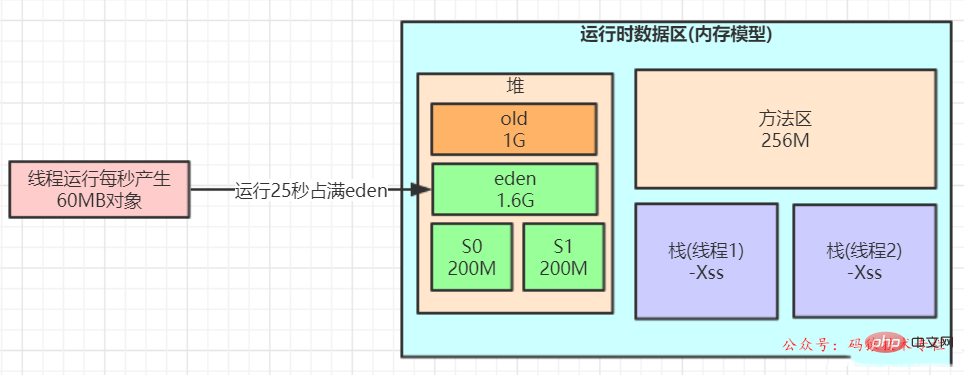

Assume 1 million login requests per day, with the login peak in the morning, and estimate about 100 login requests per second during the peak. (Spread evenly over 86,400 seconds, 1 million requests would be only about 12 per second, so this peak estimate leaves a wide margin.) Assume 3 servers are deployed, each handling about 30 login requests per second. If a login request takes 1 second to process, each JVM's young generation receives about 30 login objects per second, and these objects become garbage once the requests complete 1 second later. Assume a login request object has 20 fields and takes about 500 bytes, so 30 logins occupy about 15 KB. Taking into account RPC and DB operations, network communication, and writes to the database and cache, this can grow 20 to 50 times, producing a few hundred KB up to about 1 MB of data per second.

If a 2C4G machine is deployed with a 2G heap, the young generation is only a few hundred MB, and at about 1 MB of garbage per second a Minor GC is triggered every few hundred seconds. If a 4C8G machine is deployed with a 4G heap and 2G assigned to the young generation, a Minor GC is triggered only about every half hour at the high end of the estimate, and every hour or more at the low end.

Therefore, we can roughly conclude that for a login system with 1 million requests per day, a 3-instance cluster of 4C8G machines, each JVM with a 4G heap and a 2G young generation, can handle the normal load.
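The arithmetic above can be sketched in a few lines of Java. This is a back-of-the-envelope model under stated assumptions, not a measurement: the per-instance rate (30 req/s), the 500-byte object, and the 50x amplification come from the estimate above; the Eden share assumes HotSpot's default `-XX:SurvivorRatio=8`, and the 2G-heap case assumes the default young/old ratio of 1:2 (`-XX:NewRatio=2`). The class and method names are hypothetical.

```java
/**
 * Back-of-the-envelope Minor GC interval for the login example.
 * Every input is an assumption taken from the estimate in the text.
 */
public class CapacityEstimate {

    static final long MB = 1024L * 1024L;

    // Short-lived garbage one instance produces per second:
    // requests/s * bytes per request object * amplification (RPC, DB, cache work).
    static long garbagePerSecond(int requestsPerSecond, int bytesPerObject, int amplification) {
        return (long) requestsPerSecond * bytesPerObject * amplification;
    }

    // Eden size for a given young generation, assuming the HotSpot default
    // -XX:SurvivorRatio=8 (Eden : S0 : S1 = 8 : 1 : 1).
    static long edenBytes(long youngBytes) {
        return youngBytes * 8 / 10;
    }

    // Approximate seconds between Minor GCs: the time it takes to fill Eden.
    static long secondsBetweenMinorGc(long edenBytes, long garbagePerSecond) {
        return edenBytes / garbagePerSecond;
    }

    public static void main(String[] args) {
        int perInstanceRps = 30;     // ~100 req/s at peak spread over 3 instances
        int bytesPerLoginObject = 500;
        int amplification = 50;      // upper end of the 20-50x estimate

        long garbage = garbagePerSecond(perInstanceRps, bytesPerLoginObject, amplification);
        System.out.println("garbage per second: " + garbage + " bytes");

        // 2C4G box with a 2G heap; young generation assumed to be heap/3 (NewRatio=2).
        long smallEden = edenBytes(2048 * MB / 3);
        // 4C8G box with a 4G heap and -Xmn2g.
        long largeEden = edenBytes(2048 * MB);

        System.out.println("2G heap: Minor GC every ~"
                + secondsBetweenMinorGc(smallEden, garbage) + " s");
        System.out.println("4G heap, -Xmn2g: Minor GC every ~"
                + secondsBetweenMinorGc(largeEden, garbage) + " s");
    }
}
```

At the 50x upper bound this works out to a Minor GC about every 763 seconds for the 2G heap and about every 2,290 seconds (roughly 38 minutes) for the 4G heap with `-Xmn2g`; at the 20x lower bound both intervals are 2.5 times longer.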

This is how to do a basic resource evaluation for a new system. How much capacity and configuration each instance needs, how many instances the cluster needs, and so on, should be worked out this way rather than decided on gut feeling.

Step 2: How to choose a garbage collector?

Throughput or response time

First, two concepts: throughput and low latency.

Throughput = CPU time spent running the user application / (CPU time spent running the user application + CPU time spent on garbage collection)

Response time = average time consumed per GC
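To check these two metrics on a running JVM, the standard `java.lang.management` API exposes per-collector counters. The sketch below is only an approximation: `GarbageCollectorMXBean.getCollectionTime()` reports accumulated elapsed (wall-clock) milliseconds rather than CPU time, and for concurrent collectors it may include concurrent work that is not a pause, so treat the numbers as a rough indicator. The `throughput` and `averagePauseMillis` helpers are hypothetical names for this example.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

public class GcStats {

    // Throughput as defined above, approximated with wall-clock time:
    // (uptime - time spent in GC) / uptime.
    static double throughput(long uptimeMillis, long gcMillis) {
        if (uptimeMillis <= 0) {
            return 1.0;
        }
        return (double) (uptimeMillis - gcMillis) / uptimeMillis;
    }

    // Average time per collection, the "response time" metric above.
    static double averagePauseMillis(long gcMillis, long gcCount) {
        return gcCount == 0 ? 0.0 : (double) gcMillis / gcCount;
    }

    public static void main(String[] args) {
        // Allocate some short-lived garbage so there is something to report.
        List<byte[]> sink = new ArrayList<>();
        for (int i = 0; i < 10_000; i++) {
            sink.add(new byte[10_240]);
            if (sink.size() > 100) {
                sink.clear();
            }
        }

        long gcMillis = 0;
        long gcCount = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // Both getters may return -1 when the value is undefined.
            gcMillis += Math.max(0, gc.getCollectionTime());
            gcCount += Math.max(0, gc.getCollectionCount());
            System.out.printf("%s: %d collections, %d ms%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime());
        }
        long uptime = ManagementFactory.getRuntimeMXBean().getUptime();
        System.out.printf("throughput ~ %.4f, average time per GC ~ %.2f ms%n",
                throughput(uptime, gcMillis), averagePauseMillis(gcMillis, gcCount));
    }
}
```

The collector names printed (for example the young and old collectors of ParNew/CMS or G1) also confirm which collector the JVM actually selected.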

Whether to prioritize throughput or response time is usually a dilemma when tuning the JVM.

As the heap grows, each GC can process more at once and throughput increases; however, each GC also takes longer, so threads queued behind it wait longer. Conversely, with a small heap each GC is short, queued threads wait less, and latency drops, but each GC handles less at a time (this relationship is not absolute).

You cannot maximize both at once, so whether to prioritize throughput or response time is a trade-off you have to weigh.

Considerations in the design of the garbage collector

For at least part of a collection, the JVM cannot let user threads keep creating objects while it collects garbage, just as you cannot clean a room while someone keeps throwing litter on the floor. The JVM therefore needs a Stop The World (STW) pause, during which the system is briefly suspended and cannot process any requests. The young generation is collected frequently and favors speed, so it usually uses a copying algorithm; the old generation is collected less often and is space-sensitive, so copying is avoided there. The goal of every garbage collector is to make GC less frequent and shorter, and so reduce its impact on the system.

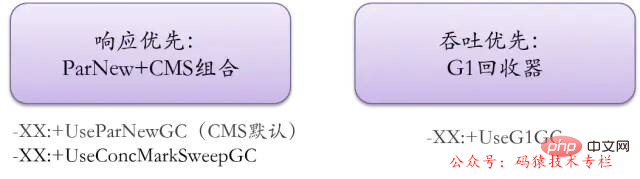

CMS and G1

The mainstream garbage collector configurations today are either ParNew for the young generation combined with CMS for the old generation, or G1 for the whole heap.

In terms of future direction, G1 is the collector the JDK team maintains and favors: it has been the default collector since JDK 9, while CMS was deprecated in JDK 9 and removed in JDK 14.

For business systems:

CMS is recommended for latency-sensitive services; for large-memory services that need high throughput, use the G1 collector.

The working mechanism of the CMS garbage collector

CMS is mainly an old generation collector that uses a mark-sweep algorithm. By default, it compacts (defragments) the old generation during a Full GC to clean up memory fragmentation.

| CMS phase | Description | Stop the world | Speed |
|---|---|---|---|
| 1. Initial mark | Marks only the objects directly reachable from GC Roots | Yes | Very fast |
| 2. Concurrent mark | Traces the object graph from GC Roots (GC Roots Tracing) | No | Slow |
| 3. Remark | Corrects the marks of objects that changed because the user program kept running during concurrent marking | Yes | Fast |
| 4. Concurrent sweep | Concurrently clears garbage objects (mark-sweep algorithm) | No | Slow |

Advantages: concurrent collection with a focus on low latency. The two most time-consuming phases involve no STW, and the phases that do require STW complete very quickly.

Disadvantages: 1. CPU consumption; 2. floating garbage; 3. memory fragmentation.

Applicable scenarios: systems that care about server response speed and need the shortest possible pauses.

In short:

For latency-sensitive business systems, CMS is recommended;

For large-memory services that need high throughput, use the G1 collector.

Step 3: How to plan the proportion and size of each memory area

The general idea is:

First, the most important JVM parameters concern memory sizing and allocation. The first step is to specify the heap size, which must be done before the system goes online: -Xms is the initial heap size and -Xmx is the maximum heap size. For a back-end Java service, both are usually set to half of the system memory. If the heap is too large, it crowds out other system resources on the server; if it is too small, the JVM cannot perform at its best.

Second, specify the young generation size with -Xmn. This parameter is critical and flexible. Although Sun officially recommends 3/8 of the heap, the size should depend on the business scenario. For stateless or lightly stateful services (today's most common business systems, such as web applications), the young generation can be given as much as 3/4 of the heap. For stateful services (such as IM services and gateway access layers), the young generation can keep the default ratio of 1/3. A stateful service keeps more local caches and session state resident in memory, so the old generation needs more space to hold these objects.

Finally, set the thread stack size with -Xss, which is the stack size of a single thread. The default depends on the JDK version and the operating system and is generally 512 KB to 1024 KB. If a back-end service has several hundred resident threads, their stacks will also occupy several hundred MB.

| JVM parameter | Description | Default | Recommended |
|---|---|---|---|
| -Xms | Initial size of the Java heap | 1/64 of OS memory | Half of OS memory |
| -Xmx | Maximum size of the Java heap | 1/4 of OS memory | Half of OS memory |
| -Xmn | Size of the young generation within the heap; what remains after subtracting it is the old generation | 1/3 of the heap | Sun recommends 3/8 |
| -Xss | Stack size of each thread | Depends on the JDK | Sun … |
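The sizing rules of thumb in this step can be condensed into a small helper that turns a machine's memory size into startup flags. This is a sketch of the heuristics described above, not a formula from the JDK: heap = half of machine memory with `-Xms` equal to `-Xmx`, a young generation fraction you pick per service type, and a worst-case stack estimate of threads × `-Xss`. The class and method names are hypothetical.

```java
public class HeapPlan {

    // Heap = half of machine memory (the "Recommended" column above).
    static long heapMb(long machineMemoryMb) {
        return machineMemoryMb / 2;
    }

    // Young generation as a fraction of the heap, e.g. up to 3/4 for a
    // stateless web service, 1/3 for a stateful one, 3/8 as Sun's suggestion.
    static long youngMb(long heapMb, int numerator, int denominator) {
        return heapMb * numerator / denominator;
    }

    // Worst-case stack reservation: every resident thread reserves a full -Xss.
    static long stackMb(int threads, int xssKb) {
        return (long) threads * xssKb / 1024;
    }

    // -Xms and -Xmx get the same value so the heap never has to resize.
    static String flags(long machineMemoryMb, int youngNum, int youngDen, int xssKb) {
        long heap = heapMb(machineMemoryMb);
        long young = youngMb(heap, youngNum, youngDen);
        return String.format("-Xms%dm -Xmx%dm -Xmn%dm -Xss%dk", heap, heap, young, xssKb);
    }

    public static void main(String[] args) {
        // 4C8G login service from Step 1: 4G heap, 2G young generation.
        System.out.println(flags(8192, 1, 2, 1024));
        // A stateful service on the same machine, keeping the default 1/3.
        System.out.println(flags(8192, 1, 3, 1024));
        // 300 resident threads at 1 MB each reserve about 300 MB of stack.
        System.out.println("stack for 300 threads: ~" + stackMb(300, 1024) + " MB");
    }
}
```

The first line prints `-Xms4096m -Xmx4096m -Xmn2048m -Xss1024k`, matching the login example. The other half of the machine is not idle: Metaspace, thread stacks, direct buffers, and the code cache all live outside the heap.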

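| G1 parameter | Description | Default |
|---|---|---|
| -XX:MaxGCPauseMillis=N | Maximum GC pause time. This is a soft goal: the JVM aims to meet it about 90% of the time and does not guarantee 100%. | 200 |
| -XX:InitiatingHeapOccupancyPercent=n | When occupancy of the whole heap exceeds this percentage, G1 triggers Mixed GC (more precisely, the concurrent marking cycle that precedes it) | 45 |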

For -XX:MaxGCPauseMillis, the setting has a clear trade-off. Lowering it gives lower latency, but Minor GC runs more often, each Mixed GC reclaims fewer old regions, and the risk of a large Full GC increases. Raising it lets each collection reclaim more objects, but the system's overall response time gets longer.

For InitiatingHeapOccupancyPercent, adjusting the value also has different effects. Lowering it triggers Mixed GC earlier and wastes CPU. Raising it lets more old regions that need collecting pile up, increasing the risk of Full GC.
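Because these flags are easy to mistype, or may not apply to the collector actually in use, it is worth confirming what the running JVM uses. This sketch relies on HotSpot's `com.sun.management.HotSpotDiagnosticMXBean`, so it works on HotSpot/OpenJDK builds but is not part of the portable `java.lang.management` API. The flag names queried are examples, and the `flagValue` helper is hypothetical.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import com.sun.management.VMOption;
import java.lang.management.ManagementFactory;

public class ShowGcFlags {

    // Returns "value (origin: ...)" for a HotSpot flag, or null if this JVM does not know it.
    static String flagValue(String name) {
        HotSpotDiagnosticMXBean hotspot =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        try {
            VMOption option = hotspot.getVMOption(name);
            return option.getValue() + " (origin: " + option.getOrigin() + ")";
        } catch (IllegalArgumentException e) {
            // getVMOption throws this when no VM option has the given name.
            return null;
        }
    }

    public static void main(String[] args) {
        String[] names = {
            "UseG1GC", "MaxGCPauseMillis", "InitiatingHeapOccupancyPercent",
            "MaxHeapSize", "NewSize", "ThreadStackSize"
        };
        for (String name : names) {
            String value = flagValue(name);
            System.out.println(name + " = " + (value == null ? "not supported" : value));
        }
    }
}
```

Running the compiled class with, for example, `java -XX:+UseG1GC -XX:MaxGCPauseMillis=100 ShowGcFlags` should report `VM_CREATION` as the origin of the flags passed on the command line and `DEFAULT` (or an ergonomically chosen origin) for the rest.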

Tuning Summary

Overall tuning approach before the system goes online:

1. Business estimation: based on the expected concurrency and the average memory each task needs, evaluate how many machines are needed to carry the load and what configuration each machine requires.

2. Capacity estimation: based on the system's task processing speed, size the Eden and Survivor areas and the old generation sensibly.

3. Collector selection: for response-time-first systems, ParNew + CMS is recommended; for throughput-first services with many cores and large memory (heap ≥ 8G), G1 is recommended.

4. Optimization idea: make sure short-lived objects are reclaimed during Minor GC (at the same time, the objects that survive collection …

5. The tuning process summarized so far applies mainly to the testing and verification stage before going online, so try to set each machine's JVM parameters to their optimal values before the system goes online.

JVM tuning is only a means to an end, and not every problem can be solved by tuning the JVM. Most Java applications do not need JVM optimization at all. We can follow these principles:

- Before going online, first consider setting each machine's JVM parameters to their optimal values;
- Reduce the number of objects created (code level);
- Reduce the use of global variables and large objects (code level);
- Prioritize architecture tuning and code tuning; JVM optimization is a last resort (code and architecture level);
- Analyzing the GC situation and optimizing the code is better than optimizing JVM parameters (code level).

These principles show that the most effective optimizations happen at the architecture and code level, while JVM optimization is a last resort: the final "squeeze" of performance out of the server configuration.
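As a small illustration of "reduce the number of objects created", a classic case is accidental autoboxing in a hot loop. In this sketch, `sumBoxed` accumulates into a `Long`, so each `+=` unboxes, adds, and boxes the result (only values from -128 to 127 come from the `Long` cache), while `sumPrimitive` does the same arithmetic on a `long`. Whether the JIT removes the boxed allocations through escape analysis depends on the JVM, so this shows the pattern to avoid rather than a guaranteed measurement. The method names are hypothetical.

```java
public class BoxingDemo {

    // Accumulating into a boxed Long: each "+=" can allocate a new Long
    // once the sum leaves the -128..127 cache range.
    static long sumBoxed(int n) {
        Long sum = 0L;
        for (int i = 0; i < n; i++) {
            sum += i;
        }
        return sum;
    }

    // Same computation with a primitive accumulator: no per-iteration objects.
    static long sumPrimitive(int n) {
        long sum = 0L;
        for (int i = 0; i < n; i++) {
            sum += i;
        }
        return sum;
    }

    public static void main(String[] args) {
        int n = 10_000_000;
        // Same result; the boxed version just creates far more garbage along the way.
        System.out.println(sumBoxed(n) == sumPrimitive(n));
    }
}
```

A fix like this at the code level lowers the allocation rate directly, which stretches the Minor GC interval estimated in Step 1 without touching any JVM flag.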

What is ZGC?

ZGC (Z Garbage Collector) is a garbage collector developed by Oracle with low latency as its primary goal.

It uses a Region-based memory layout with dynamically sized regions, is (at the time of writing) non-generational, and relies on techniques such as read barriers, colored pointers, and multi-mapped memory to implement a concurrent mark-compact algorithm.

It was added in JDK 11 as an experimental feature and became production-ready in JDK 15.

Its main features: it can collect terabyte-scale heaps (up to 4 TB in JDK 11) with pause times that do not exceed 10 ms.

Advantages: low pauses, high throughput, and little extra memory consumed during collection.

Disadvantages: floating garbage.

It is still rarely used in practice, and it will take time to become truly widespread.

How to choose a garbage collector?

How should you choose in a real scenario? Here are some suggestions that I hope will help:

1. If your heap is small (for example, around 100 MB), the serial collector is generally the most efficient choice. Parameter: -XX:+UseSerialGC.

2. If your application runs on a single-core machine, or your virtual machine has only one core, the serial collector is still appropriate; enabling a parallel collector brings no benefit there. Parameter: -XX:+UseSerialGC.

3. If your application prioritizes throughput and can tolerate long pauses, the parallel collector is the better choice. Parameter: -XX:+UseParallelGC.

4. If your application has strict response time requirements and needs short pauses, for example if even a 1-second pause would cause a large number of requests to fail, then G1, ZGC, or CMS is the reasonable choice. Although these collectors usually pause for less time, they need extra resources to do their work, and throughput is usually lower. Parameters: -XX:+UseConcMarkSweepGC, -XX:+UseG1GC, -XX:+UseZGC, and so on (on JDK 11 to 14, -XX:+UseZGC also requires -XX:+UnlockExperimentalVMOptions).

Ordinary web servers have high responsiveness requirements, so for them the choice really comes down to CMS, G1, and ZGC. For scheduled tasks, a parallel collector is the better choice.
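The four suggestions above amount to a small decision rule. The sketch below encodes them, with the thresholds (a heap of about 100 MB, a single CPU) taken loosely from the text. It returns G1 for the latency case because CMS was removed in JDK 14 and ZGC needs JDK 11 or later, but ZGC is an equally valid answer there. The method and enum names are hypothetical.

```java
public class CollectorChoice {

    enum Priority { THROUGHPUT, LATENCY }

    // Rules of thumb from the list above; the thresholds are illustrative.
    static String choose(long heapMb, int cpus, Priority priority) {
        if (heapMb <= 100 || cpus == 1) {
            return "-XX:+UseSerialGC";    // small heap or single core
        }
        if (priority == Priority.THROUGHPUT) {
            return "-XX:+UseParallelGC";  // batch jobs, scheduled tasks
        }
        return "-XX:+UseG1GC";            // latency-sensitive services
    }

    public static void main(String[] args) {
        System.out.println(choose(64, 4, Priority.LATENCY));
        System.out.println(choose(4096, 1, Priority.LATENCY));
        System.out.println(choose(4096, 4, Priority.THROUGHPUT));
        System.out.println(choose(4096, 4, Priority.LATENCY));

        // The inputs for the current JVM, as it sees them.
        Runtime rt = Runtime.getRuntime();
        System.out.println("this JVM: " + rt.availableProcessors() + " CPUs, max heap "
                + rt.maxMemory() / (1024 * 1024) + " MB");
    }
}
```

In a container, `availableProcessors()` and `maxMemory()` reflect the limits the JVM detected, which is also what the JVM's own ergonomics use when no collector is specified.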

Why did Hotspot use metaspace to replace the permanent generation?

What is metaspace? What is permanent generation? Why use metaspace instead of permanent generation?

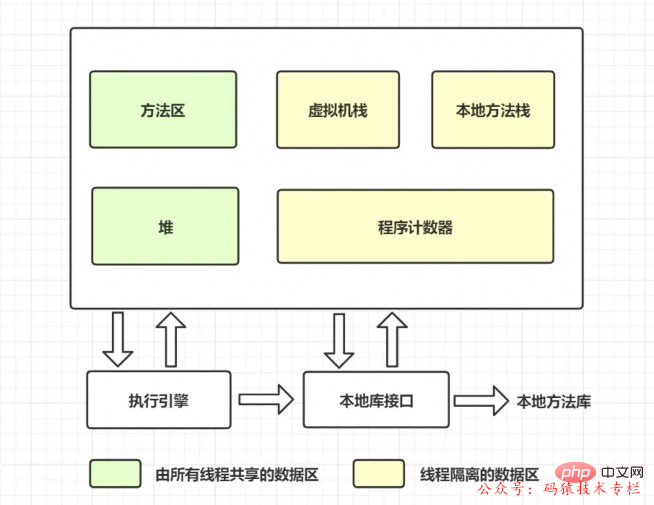

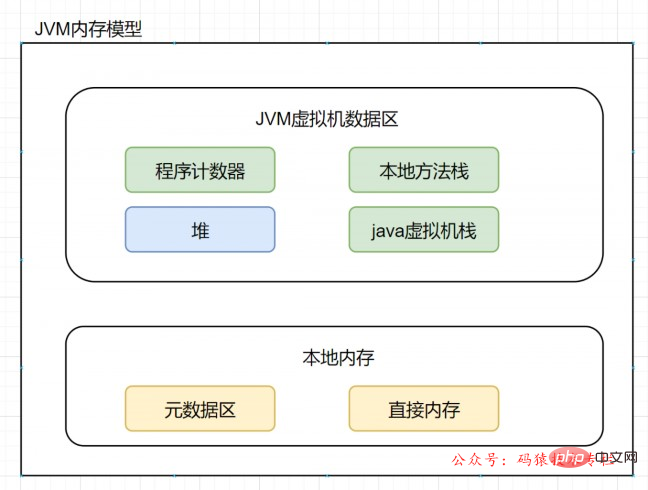

Let's first review the method area by looking at the JVM's runtime data areas, shown below:

The method area, like the heap, is a memory area shared by all threads. It stores data loaded by the virtual machine, such as class information, constants, static variables, and JIT-compiled code.

What is the permanent generation? What does it have to do with the method area?

If you develop and deploy on the HotSpot virtual machine, many programmers call the method area the permanent generation.

It can be said that the method area is the specification, and the permanent generation is Hotspot's implementation of the specification.

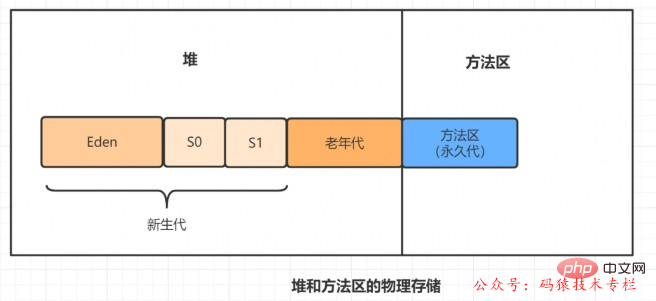

In Java7 and previous versions, the method area is implemented in the permanent generation.

What is metaspace? What does it have to do with the method area?

In Java 8, HotSpot removed the permanent generation and replaced it with Metaspace.

In other words, the method area is still there, but the implementation has changed, from the permanent generation to the metaspace.

Why is the permanent generation replaced with metaspace?

With the permanent generation, the method area occupies physical memory contiguous with the heap.

The permanent generation is configured with the following two parameters:

- -XX:PermSize: sets the initial size of the permanent generation.
- -XX:MaxPermSize: sets the maximum size of the permanent generation; the default is typically 64M (it varies by platform).

With the permanent generation, if many classes are generated dynamically, java.lang.OutOfMemoryError: PermGen space is likely, because the permanent generation's space is capped by configuration. The most typical scenario is a web application with many JSP pages.

From JDK 8 on, the method area lives in Metaspace.

Its memory is no longer contiguous with the heap; it resides directly in native memory. In theory, Metaspace can grow as large as the machine's memory.

You can set the size of the metaspace through the following parameters:

- -XX:MetaspaceSize: the initial size. When usage reaches this value, a GC is triggered to unload classes, and the GC adjusts the value: if a lot of space was freed, it lowers the value appropriately; if very little was freed, it raises the value appropriately, without exceeding MaxMetaspaceSize.
- -XX:MaxMetaspaceSize: the maximum size; there is no limit by default.
- -XX:MinMetaspaceFreeRatio: the minimum percentage of Metaspace capacity that should remain free after a GC; it reduces GCs caused by allocating space.
- -XX:MaxMetaspaceFreeRatio: the maximum percentage of Metaspace capacity that may remain free after a GC; it reduces GCs caused by freeing space.

So, why use metaspace to replace the permanent generation?

On the surface, the reason is to avoid OOM errors.

PermSize and MaxPermSize set the size, and therefore the upper limit, of the permanent generation, but it is not always possible to know how large it should be. With the default values, OOM errors are easy to run into.

With Metaspace, how much class metadata can be loaded is no longer limited by MaxPermSize but by the space actually available on the system.
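To see how much Metaspace a running JVM uses, the standard `MemoryPoolMXBean` API lists every memory pool. This sketch assumes a HotSpot JDK 8+ runtime, where the pool is named `"Metaspace"`; other JVMs may name it differently, in which case the hypothetical `metaspacePool` helper returns null. When `-XX:MaxMetaspaceSize` is not set, HotSpot should report the pool's maximum as undefined (`-1`), which matches the "no limit by default" behavior above.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;

public class MetaspaceUsage {

    // Finds the HotSpot "Metaspace" pool, or returns null if no pool has that name.
    static MemoryPoolMXBean metaspacePool() {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if ("Metaspace".equals(pool.getName())) {
                return pool;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        MemoryPoolMXBean pool = metaspacePool();
        if (pool == null) {
            System.out.println("No pool named Metaspace (not a HotSpot JDK 8+ runtime?)");
            return;
        }
        MemoryUsage usage = pool.getUsage();
        System.out.printf("used=%d KB committed=%d KB%n",
                usage.getUsed() / 1024, usage.getCommitted() / 1024);
        // getMax() returns -1 when no maximum is defined.
        System.out.println(usage.getMax() == -1
                ? "max=unlimited"
                : "max=" + usage.getMax() / 1024 + " KB");
    }
}
```

Running it once without flags and once with `-XX:MaxMetaspaceSize=256m` shows the difference between the unbounded default and an explicit cap.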

What is Stop The World? What is an OopMap? What is a safepoint?

Garbage collection involves moving objects.

To ensure that object references are updated correctly, all user threads must be suspended. Virtual machine designers call such a pause Stop The World, or STW for short.

HotSpot has a data structure (a mapping table) called OopMap.

Once a class has been loaded, HotSpot calculates what type of data sits at which offset within its objects and records this in an OopMap.

During just-in-time compilation, OopMaps are also generated at specific locations, recording which stack slots and registers hold references.

These specific locations are mainly:

1. The end of a loop (for non-counted loops)

2. Before a method returns / after a call instruction that invokes a method

3. Locations where exceptions may be thrown

These locations are called safepoints.

While the user program runs, it cannot be paused for garbage collection at an arbitrary point in the instruction stream; it must first reach a safepoint.
