The artificial intelligence wave has swept through the past six months, and Nvidia has pushed open the door to the US stock market's trillion-dollar club.

Nvidia originally just wanted a share of the game graphics market. It did not expect that, more than 20 years later, it would lead AI computing and come close to monopolizing the AI server chip market.

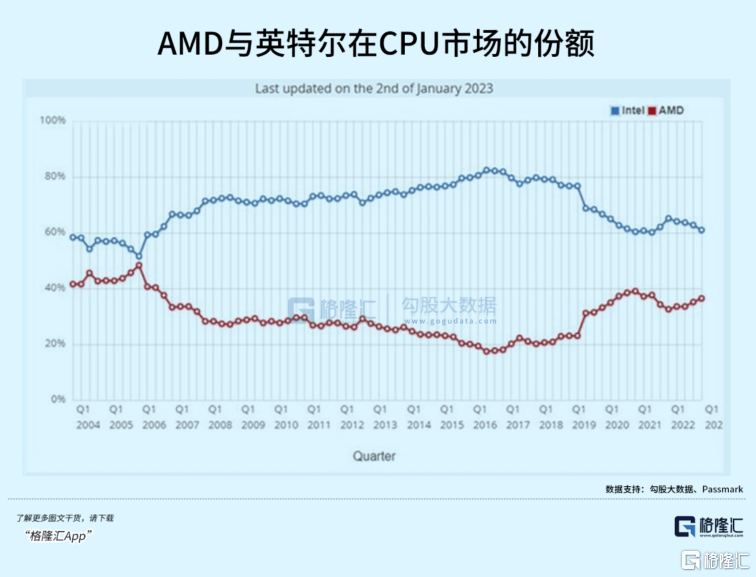

Intel once dominated the server market, but Nvidia's GPUs outperformed its CPUs in high-performance computing. Intel's process technology lags behind TSMC's, which has left its product strategy on the defensive. Nvidia is already far ahead, while AMD is closing in on Intel.

With Nvidia's success, research and development for next-generation chips is focusing more on how to integrate deeply with AI models. The GPU is not the only option: because AI chips account for most of the high cost of increasing computing power, Nvidia's leading position in model-training chips will undoubtedly be challenged, and companies such as Intel, AMD, and Qualcomm are gearing up.

So, in AI chips, will there be the next NVIDIA?

01 AI chips must first pass a threshold

AI chips can be divided by deployment location into cloud, terminal, and edge chips, and by task into training chips and inference chips. Model training takes place in the cloud, in data centers, where chips must support massive volumes of computation. Terminal and edge deployments need somewhat less computing power but demand fast response and low power consumption. Nvidia dominates training chips, but for inference there is no shortage of chips better suited than GPUs.

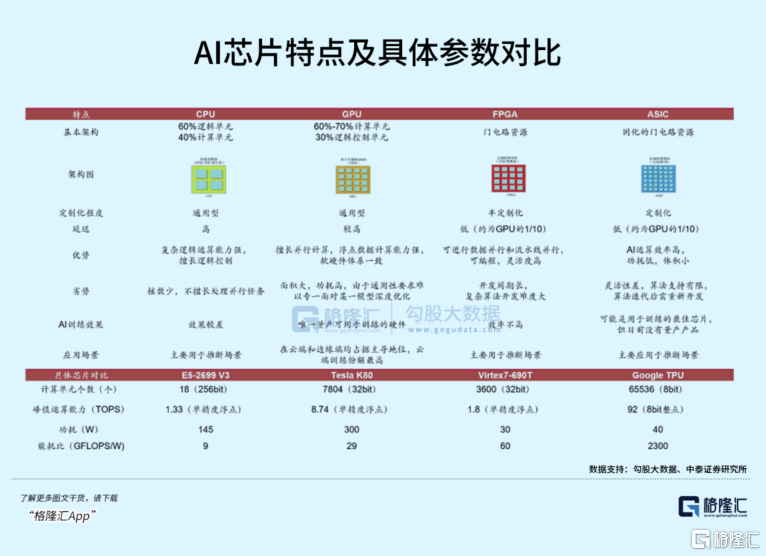

AI chips with different performance profiles include GPUs, ASICs, FPGAs, and NPUs, collectively referred to as XPUs. The different names reflect differences in architecture. Within the areas they specialize in, dedicated AI chips can match GPUs. Although they lack scalability, they are ahead of more general-purpose GPUs in performance and computing power for those tasks, even though GPUs can do more.

This recalls the logic by which the CPU was originally sidelined in machine learning. Will a new chip emerge that can challenge the GPU?

Major technology companies around the world are currently keen on making their own chips. They have no need to build general-purpose chips themselves, however, and invest only in chips that fit their key business directions.

For example, Google's TPU is an ASIC built as an accelerator for neural network workloads, and Tesla's Dojo is a machine vision chip built specifically for FSD. In China, Baidu and Alibaba have also invested heavily in self-developed chips.

For a long time, dedicated processors have not really posed a threat to GPUs. This is mainly related to market capacity, capital investment, and the positive cycle formed by Moore's Law.

According to IDC data, GPUs accounted for 89% of China's AI chip market in 2021. NPUs, whose processing speed is said to be 10 times that of GPUs, held 9.6%, while ASICs and FPGAs held smaller shares of 1% and 0.4% respectively.

Over the past thirty years, the rise of wafer foundries such as TSMC and Samsung has shaped a trend toward division of labor and specialization. Advances in equipment and process technology have let chip design companies such as Nvidia and Qualcomm show what they can do, and have also allowed major technology companies such as Apple and Google to begin using chips to define their products and services. The soil for specialized chip design is fertile, and everyone benefits.

In the eyes of competitors, the GPU is not a chip designed specifically for machine learning. Its success stems mainly from the complex ecosystem it forms together with the framework software layer, which makes the chip more versatile.

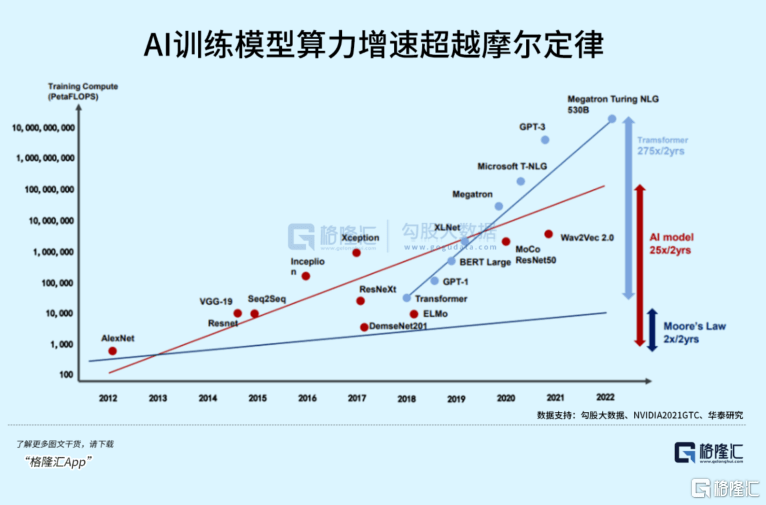

In fact, since 2012 the computing power demanded by leading training models has grown by a factor of 10 every year, approaching the limit of computing power under Moore's Law.

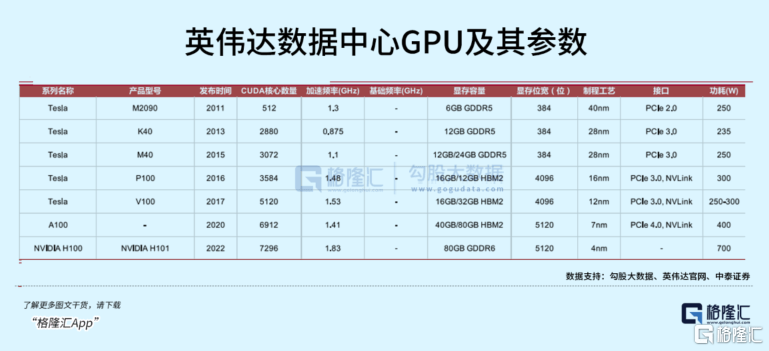
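
To get a feel for how quickly that gap opens up, the two growth rates can be compounded side by side. The sketch below is illustrative only: the 10x-per-year figure is the one quoted above, while the two-year doubling period used for Moore's Law and the five-year horizon are assumptions chosen for the example, and `growth_factor` is a hypothetical helper.

```python
def growth_factor(multiplier: float, years: float, period_years: float = 1.0) -> float:
    """Total growth after `years` if a quantity multiplies by `multiplier` every `period_years`."""
    return multiplier ** (years / period_years)


YEARS = 5  # assumed horizon, for illustration only

# Demand for training compute: 10x every year (figure quoted in the article).
demand_growth = growth_factor(10.0, YEARS)

# Moore's Law, assumed here to mean a doubling roughly every two years.
moore_growth = growth_factor(2.0, YEARS, period_years=2.0)

# How many times faster demand has grown than the assumed hardware trend.
gap = demand_growth / moore_growth

print(f"Demand growth over {YEARS} years: {demand_growth:,.0f}x")
print(f"Moore's Law growth over {YEARS} years: {moore_growth:.1f}x")
print(f"Gap: {gap:,.0f}x")
```

Under these assumptions the shortfall reaches several orders of magnitude within a few years. It has to be made up by larger chips, more chips, or more specialized designs.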

Starting with the Tesla M2090 in 2011, Nvidia's data center GPUs have iterated steadily. Volta, Ampere, Hopper, and other architectures for high-performance computing and AI training have been launched one after another, at a pace of a new generation every two years. Floating-point computing power has risen from 7.8 TFLOPS to 30 TFLOPS, nearly a fourfold increase.

The latest H100 has even shortened the training time for large models from one week to one day.

Given Nvidia's high share of the AI chip market, it is fair to say that past growth in computing power for AI model training was supported mainly by Nvidia's GPU series. This formed a positive feedback loop: growth in shipment volume spread out the development costs of Nvidia's chips.

Measured against future demand for computing power, the technological iteration of general-purpose chips will eventually slow down. Special-purpose processors can keep pace with general-purpose chips on cost only if they get this same positive cycle running for themselves.

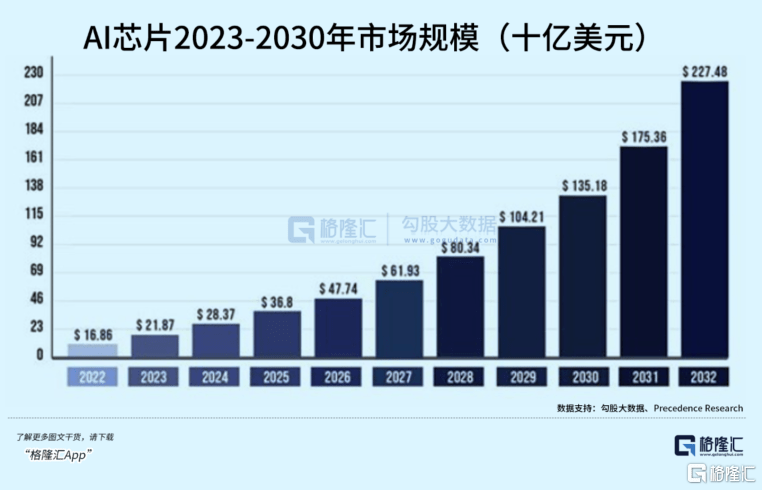

But the difficulty is that special-purpose processors target only market segments, which are far smaller than the general-purpose market. To match the per-unit performance gains of general-purpose chips, they often need more time or larger shipment volumes. However, as AI spreads faster across application scenarios, spending on AI chips will also rise significantly. Dedicated AI chips, CPUs, and GPUs are expected to become three parallel lines.

According to Precedence Research, the global AI chip market was worth US$16.86 billion in 2022. It is expected to grow at roughly 30% a year and reach approximately US$227.48 billion by 2032.
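
As a rough sanity check on those figures, the implied compound annual growth rate can be worked out directly from the two endpoints. The helper name `cagr` and the assumption of a ten-year span (2022 to 2032) with steady compounding are illustrative, not taken from the Precedence Research report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted in the article, in billions of US dollars.
MARKET_2022 = 16.86
MARKET_2032 = 227.48

# Assumes ten full years of steady compounding between the two figures.
implied_rate = cagr(MARKET_2022, MARKET_2032, years=10)

print(f"Implied annual growth: {implied_rate:.1%}")
```

The result comes out just under 30%, which is consistent with the annual growth rate quoted above.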

02 Three contenders advance: how will they fight each other?

Nvidia's monopoly on computing power is strengthening at an accelerating pace in today's large-model war, and the tensions it creates are intensifying. Demand for GPUs has exceeded the expectations of both TSMC and Nvidia. Supply falls short, prices rise, and the cycle continues.

Technology companies in China and abroad have responded in much the same way: they either develop their own chips or help other chipmakers compete with Nvidia, in order to stimulate new supply and bring chip costs down.

AMD's shares surged 12% in intraday trading at the beginning of last month, following a report that Microsoft was funding AMD's expansion into AI chips and working with the chipmaker on a chip code-named Athena. Microsoft later officially denied the report.

This is reminiscent of the "WINTEL" alliance of the 1990s, which mutually reinforced the positions of Microsoft's PC operating system and Intel's CPUs. Today, AMD has become the most powerful threat to Intel's market share.

The computer market suffered a heavy blow last year. Weakness in both enterprise servers and consumer electronics dragged CPU shipments down considerably. Intel and AMD both experienced their largest declines in more than 30 years, falling by 21% and 19% respectively.

Although the main businesses are showing signs of weakness, the competitive landscape of the industry has once again undergone tremendous changes.

According to PassMark's monitoring data, AMD's share of the data center market surged to 20% last year, taking nearly 10% of share from Intel (70.77% in 2022). As of January 2 this year, AMD's share had climbed back to nearly 40%, returning to its 2004 level.

AMD has been able to close the gap partly because it has leaned on TSMC's manufacturing strength to keep improving its product portfolio and to raise adoption of the EPYC Milan processors used in data centers. Revenue from this business grew 64% last year.

The other factor is its competitor's poor strategic decisions. Intel's CPU innovation has dried up, and its product capabilities, which led the field over the past decade, have declined significantly relative to competitors.

When Apple wanted Intel to develop mobile phone CPUs for the first-generation iPhone, CEO Paul Otellini refused because the offer was too low. The x86 leader misjudged the opportunities on the mobile side.

Beyond the lack of strategic vision, Intel's product launch plans have been repeatedly delayed. Intel is a veteran of the old IDM (integrated device manufacturer) era. Today TSMC and Samsung lead the iteration of advanced processes, which is the foundation for the continued upgrading of general-purpose chips such as CPUs. Intel's lagging process technology has in turn slowed its product updates, which have come to resemble "squeezing toothpaste": only a little at a time. Since its high point in 2021, Intel's market value has been cut by more than half.

AMD, on the other hand, has been broadening its product categories and pursuing a cost-effective strategy. It acquired ATI and then Xilinx, becoming the first chipmaker to hold CPU, GPU, and FPGA product lines. In 2018, AMD overtook Intel in CPU process technology on the PC side for the first time, and its market share gains began to accelerate. In 2019, it teamed up with TSMC to take the lead in moving to 7nm, and also surpassed Intel's process on the server side. Last year, its market value exceeded Intel's.

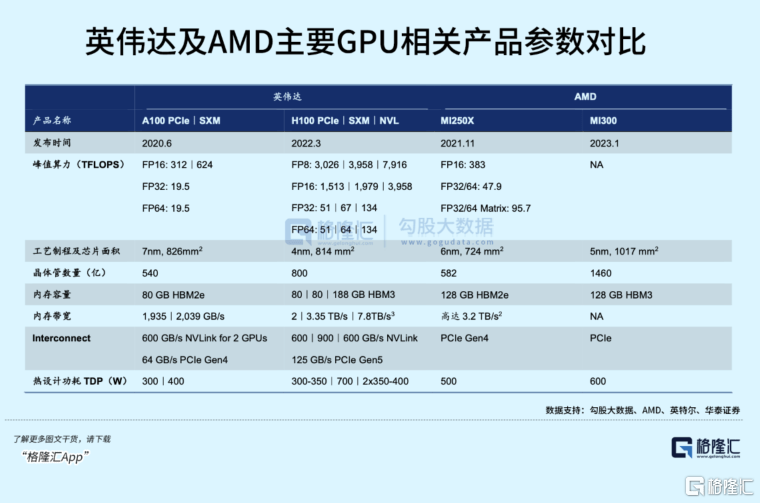

Not long ago, AMD launched the Instinct MI300, which combines CPU and GPU architectures, to formally enter the AI training market. The chip is positioned directly against Nvidia's Grace Hopper in specifications and performance.

This is an important move after AMD's management named AI a strategic focus. Unlike Nvidia, which also rents out its own computing power, AMD focuses on building a competitive chip lineup. To compete head-on with Nvidia, it may use cloud vendors' data centers as its point of entry, and the chip is expected to begin ramping up in volume in the fourth quarter of this year.

In fact, it is not that the two CPU giants were busy fighting each other and left Nvidia alone. They simply have not been able to catch up.

Since 2015, Intel has spent huge sums acquiring companies in and around artificial intelligence, such as Altera, Mobileye, and Nervana, but the acquisitions have done little for its business. It has been more like keeping these companies on hand and waiting for a lottery ticket to pay off.

Intel has also been planning to launch a GPU comparable to Nvidia's, but the plan has been repeatedly delayed.

In 2021, Intel announced a flagship data center GPU code-named "Ponte Vecchio", but delivery kept slipping. Its successor, the Falcon Shores GPU, which combines an x86 CPU with an Xe GPU, has also been pushed back to 2025.

Admittedly, Nvidia's success is not just about good hardware. Unlike Intel's past hardware-first path, Nvidia has kept its GPU architecture evolving on a two-year cadence while building a software ecosystem around a general-purpose computing framework, which has become a barrier to entry.

In chip development, the winners are often the ones strong enough to define the standard. To compete with Nvidia, cost-effectiveness is a necessary bargaining chip, and the ecosystem is equally critical. The growth in computing power that drives AI forward also depends on these manufacturers competing with and surpassing one another.

On these fronts, AMD, Intel, and other latecomers all still have a long way to go.
