Reinforcement learning (RL) lets robots learn complex behaviors through trial and error and keep improving over time. Previous work at Google has explored how RL can enable robots to master skills such as grasping, multi-task learning, and even playing table tennis. Although robotic RL has made great progress, RL-enabled robots are still absent from everyday environments. The real world is complex, diverse, and constantly changing, which poses a huge challenge for robotic systems. Yet RL should be an excellent tool for exactly these challenges: by practicing, improving, and learning on the job, robots should be able to adapt to an ever-changing world.

In the Google paper "Deep RL at Scale: Sorting Waste in Office Buildings with a Fleet of Mobile Manipulators," researchers study this problem through a recent large-scale experiment: over two years, they deployed a fleet of 23 RL-enabled robots to sort waste and recycling in Google office buildings. The robotic system combines scalable deep RL from real-world data with bootstrapping from simulation training and auxiliary object-perception inputs, which improves generalization while retaining the advantages of end-to-end training. The researchers validated the system with 4,800 evaluation trials.

Paper address: https://rl-at-scale.github.io/assets/rl_at_scale.pdf

## Problem Setting

If people do not sort their waste properly, batches of recyclables can become contaminated and compostable material can end up in landfill. In Google's experiment, robots roamed office buildings looking for "waste stations" (sets of bins for recyclables, compost, and other waste). At each station, the robot's task is to sort the waste by moving items between bins, so that all recyclable items (cans, bottles) end up in the recycling bin, all compostable items (cardboard containers, paper cups) in the compost bin, and everything else in the remaining bin.

This task is not as easy as it seems. Just picking up the wide variety of items that people throw into the bins is already a huge challenge. The robot must also identify the appropriate bin for each object and sort the objects as quickly and efficiently as possible. In the real world, robots encounter a variety of unique situations, such as the following examples from real office buildings:

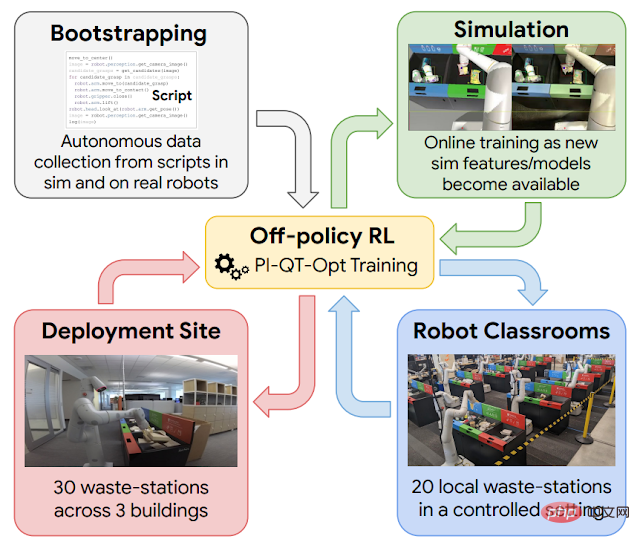

## Learning from Different Experiences

Learning continuously on the job helps, but before robots get to that point they need to be bootstrapped with a basic set of skills. To this end, Google uses four sources of experience: (1) simple hand-designed policies, which have a low success rate but provide initial experience; (2) a simulated training framework that uses sim-to-real transfer to provide some initial waste-sorting strategies; (3) "robot classrooms", where robots practice continually at representative waste stations; and (4) the real deployment setting, where robots practice in office buildings with real waste.

Schematic diagram of reinforcement learning in this large-scale application. Data generated by scripted policies bootstraps the policy (top left). A sim-to-real model is then trained, and additional data is generated in simulation (top right). In each deployment cycle, data collected in the “robot classrooms” is added (bottom right), and robots are deployed to collect further data in office buildings (bottom left).
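To make the idea of training on several experience sources at once more concrete, here is a minimal Python sketch of drawing a training batch from the four sources. The mixing weights, the buffer layout, and the function name `sample_batch` are all assumptions made for illustration; the article does not say how the data was actually weighted or stored.

```python
import random

# Hypothetical mixing weights; the article does not state the real proportions.
SOURCE_WEIGHTS = {
    "scripted": 0.1,
    "simulation": 0.2,
    "classroom": 0.5,
    "deployment": 0.2,
}


def sample_batch(buffers, weights, batch_size, rng):
    """Draw a batch by picking a source by weight, then an episode from it."""
    # Skip sources with no data yet (e.g. deployment before the first cycle).
    names = [name for name in weights if buffers.get(name)]
    if not names:
        raise ValueError("no experience available in any source")
    probs = [weights[name] for name in names]
    batch = []
    for _ in range(batch_size):
        source = rng.choices(names, weights=probs, k=1)[0]
        batch.append((source, rng.choice(buffers[source])))
    return batch


if __name__ == "__main__":
    rng = random.Random(0)
    buffers = {
        "scripted": ["s0", "s1"],
        "simulation": ["sim0", "sim1", "sim2"],
        "classroom": ["c0", "c1", "c2", "c3"],
        "deployment": [],  # nothing collected in offices yet
    }
    for source, episode in sample_batch(buffers, SOURCE_WEIGHTS, 5, rng):
        print(source, episode)
```

Skipping empty sources means the same sampler can be used from the first scripted episodes onward, with deployment data entering the mix as soon as some exists.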

The reinforcement learning framework used here is based on QT-Opt, which was also used to learn to grasp different waste items in laboratory settings, along with a range of other skills. In simulation, training is bootstrapped from simple scripted policies and continued with reinforcement learning, and a CycleGAN-based transfer method that uses RetinaGAN makes the simulated images look more realistic.
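QT-Opt is a Q-learning method for continuous actions that picks an action by approximately maximizing the learned Q-function with the cross-entropy method (CEM) rather than using a separate actor network. The article does not go into this, so the sketch below only illustrates the general technique: `toy_q` is a hypothetical stand-in for a trained Q-network, and the iteration, sample, and elite counts are illustrative defaults rather than values taken from this system.

```python
import random
import statistics


def toy_q(state, action):
    """Stand-in Q-function: highest when the action equals the state vector."""
    return -sum((a - s) ** 2 for a, s in zip(action, state))


def cem_argmax(q_fn, state, action_dim, rng, iterations=3, samples=64, elites=6):
    """Approximate argmax over actions of q_fn(state, action) using CEM."""
    mean = [0.0] * action_dim
    std = [1.0] * action_dim
    best_action, best_value = None, float("-inf")
    for _ in range(iterations):
        # Sample candidate actions from the current per-dimension Gaussian.
        candidates = [
            [rng.gauss(m, s) for m, s in zip(mean, std)] for _ in range(samples)
        ]
        # Score every candidate and keep the highest-valued ones as elites.
        scored = sorted(
            ((q_fn(state, a), a) for a in candidates),
            key=lambda pair: pair[0],
            reverse=True,
        )
        top = scored[:elites]
        if top[0][0] > best_value:
            best_value, best_action = top[0]
        # Refit the Gaussian to the elites; the small constant keeps std positive.
        columns = list(zip(*(a for _, a in top)))
        mean = [statistics.mean(col) for col in columns]
        std = [statistics.pstdev(col) + 1e-6 for col in columns]
    return best_action, best_value


if __name__ == "__main__":
    rng = random.Random(0)
    action, value = cem_argmax(toy_q, [0.5, -0.3], action_dim=2, rng=rng)
    print("chosen action:", action, "Q value:", value)
```

In a real system the same maximization would be applied to a neural-network Q-function, both to choose actions on the robot and to compute the target values used in training.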

This is where the “robot classrooms” come in. While actual office buildings provide the most realistic experience, data collection throughput there is limited: some days there is a lot of waste to sort, other days not so much. Robots therefore accumulate most of their experience in the “robot classrooms”, where 20 robots practice the waste-sorting task.

While these robots train in the “robot classrooms”, other robots learn simultaneously at 30 waste stations in 3 office buildings.

In the end, the researchers collected 540,000 trials in the "robot classrooms" and 325,000 trials in the actual deployment environment. As the amount of data grew, the performance of the whole system improved. To allow controlled comparisons, the researchers evaluated the final system in the “robot classrooms”, setting up scenarios based on what the robots would see in actual deployments. The final system achieved an average accuracy of about 84%, with performance improving steadily as data was added. In the real world, the researchers logged statistics from actual deployments from 2021 to 2022 and found that the system could reduce contamination in the bins by 40 to 50 percent by weight. In their paper, the Google researchers provide deeper insights into the design of the technology, ablation studies of various design decisions, and more detailed statistics from their experiments.
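The two headline figures above are simple ratios. The following Python sketch shows how such numbers could be computed, assuming that accuracy means the fraction of items that end up in the correct bin and that contamination is measured as the weight of wrongly placed items. Both definitions, the function names, and the example values are assumptions for illustration; the paper gives the exact evaluation protocol.

```python
def sorting_accuracy(items_correct, items_total):
    """Fraction of items that ended up in the correct bin."""
    if items_total <= 0:
        raise ValueError("items_total must be positive")
    return items_correct / items_total


def contamination_reduction(weight_before, weight_after):
    """Fractional drop in contaminant weight after the robot has sorted."""
    if weight_before <= 0:
        raise ValueError("weight_before must be positive")
    return (weight_before - weight_after) / weight_before


if __name__ == "__main__":
    # Made-up example values, not measurements from the paper.
    print(f"accuracy: {sorting_accuracy(84, 100):.0%}")
    print(f"contamination reduction: {contamination_reduction(2.0, 1.1):.0%}")
```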

The experimental results show that an RL-based system can enable robots to handle practical tasks in real office environments. Combining offline and online data lets robots adapt to the widely varying situations of the real world. At the same time, learning in more controlled "classroom" environments, both in simulation and in the real world, provides a powerful bootstrapping mechanism that gets the reinforcement learning "flywheel" turning and makes this adaptation possible.

Although important results have been achieved, much work remains: the final RL policies do not always succeed, more powerful models are needed to improve their performance, and the approach has yet to be extended to a wider range of tasks. In addition, other sources of experience, including other tasks, other robots, and even Internet videos, may further supplement the bootstrapping experience gained from simulation and the "classrooms". These are problems to be addressed in future work.
