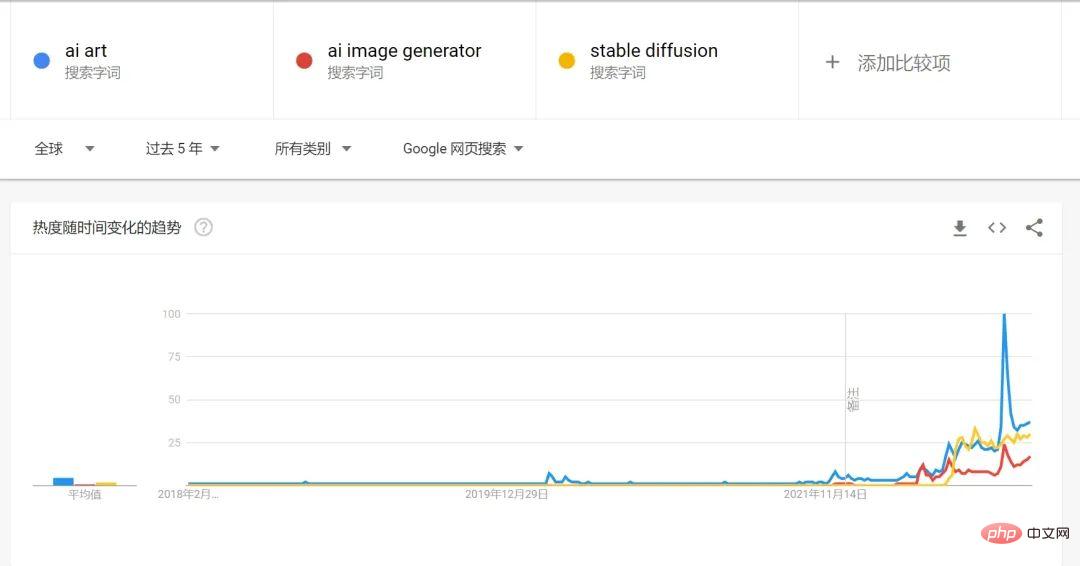

2022 can fairly be called the first year of AIGC. Judging from Google search trends, search volume for "AI painting" and "AI generated art" surged in 2022.

A very important reason for this year's explosion of AI painting is the open-sourcing of Stable Diffusion, which in turn owes much to the rapid development of diffusion models in recent years. Combined with mature text language models such as OpenAI's GPT-3, diffusion models have made generating images from text much easier.

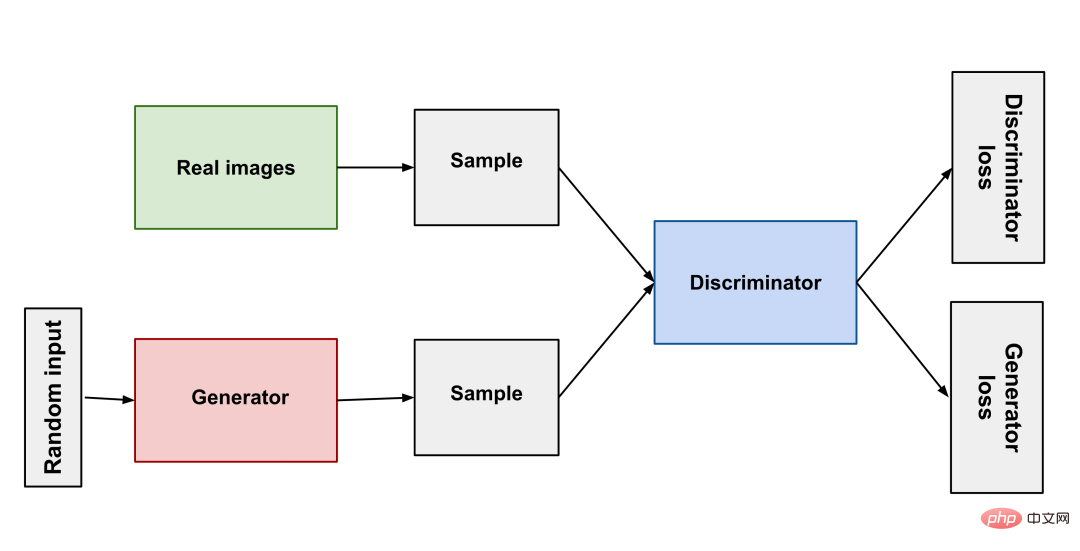

From its birth in 2014 to StyleGAN in 2018, the GAN made great progress in image generation. Much as predators and prey in nature compete and evolve together, a GAN uses two neural networks: a generator and a discriminator. The generator produces images, the discriminator judges whether each result passes, and the two are trained by competing against each other.

GANs (Generative Adversarial Networks) have achieved good results through continuous development, but some problems have always been difficult to overcome: lack of diversity in the generated results, mode collapse (the generator stops making progress once it finds a mode that works), and high training difficulty. These difficulties have made it hard for AI-generated art to become a practical product.

After GANs had been stuck at this bottleneck for years, researchers came up with a remarkable way to train a model, the diffusion model. Noise is added to the original image step by step along a Markov chain until it becomes a purely random noise image. A neural network is then trained to reverse this process and gradually restore the random noise image to the original image. In this way the neural network gains the ability to generate images from scratch. To generate images from text, the description text is encoded and supplied to the network together with the noisy image, so that the network learns to generate images that match the text.
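The noising half of this process can be sketched in a few lines. This is a minimal illustration, not the Stable Diffusion implementation: it assumes a DDPM-style Gaussian schedule with linearly increasing noise levels, and the names `make_alpha_bars` and `add_noise`, the schedule values, and the four-value "image" are all hypothetical choices made for the example.

```python
import math
import random


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Return the running product of (1 - beta_t) for a linear noise schedule.

    The schedule values are assumptions for illustration only.
    """
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(pixels, t, alpha_bars, rng):
    """Jump directly to step t of the noising chain.

    Returns the noisy pixels and the noise that was mixed in; a denoising
    network would be trained to predict that noise from the noisy pixels.
    """
    signal = math.sqrt(alpha_bars[t])
    sigma = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [signal * p + sigma * n for p, n in zip(pixels, noise)]
    return noisy, noise


alpha_bars = make_alpha_bars()
rng = random.Random(0)
image = [0.5, -0.25, 1.0, 0.0]  # stand-in for pixel values

slightly_noisy, early_noise = add_noise(image, 0, alpha_bars, rng)
almost_pure_noise, late_noise = add_noise(image, 999, alpha_bars, rng)
```

At step 0 the result is nearly the original image, while at the last step almost none of the original signal remains, which is the "random noise image" the text describes. Training then amounts to asking a network to undo these steps.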

The diffusion model makes training easier: it only requires a large number of pictures. The quality of the generated images can reach a very high level, and the results are highly diverse, which is why the new generation of AI can show such unbelievable "imagination".

Of course, the technology keeps making breakthroughs. StyleGAN-T, an upgraded StyleGAN launched by NVIDIA at the end of January, has made amazing progress. With the same computing power, Stable Diffusion takes about 3 seconds to generate a picture, while StyleGAN-T takes only 0.1 seconds. StyleGAN-T is also better than diffusion models on low-resolution images, but diffusion models still dominate in generating high-resolution images. Since StyleGAN-T is not as widely used as Stable Diffusion, this article focuses on Stable Diffusion.

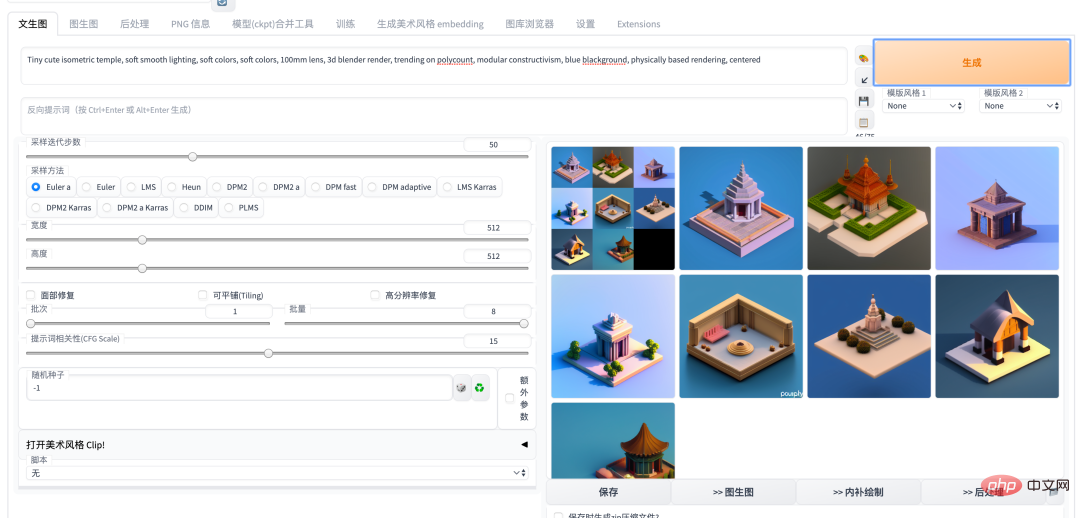

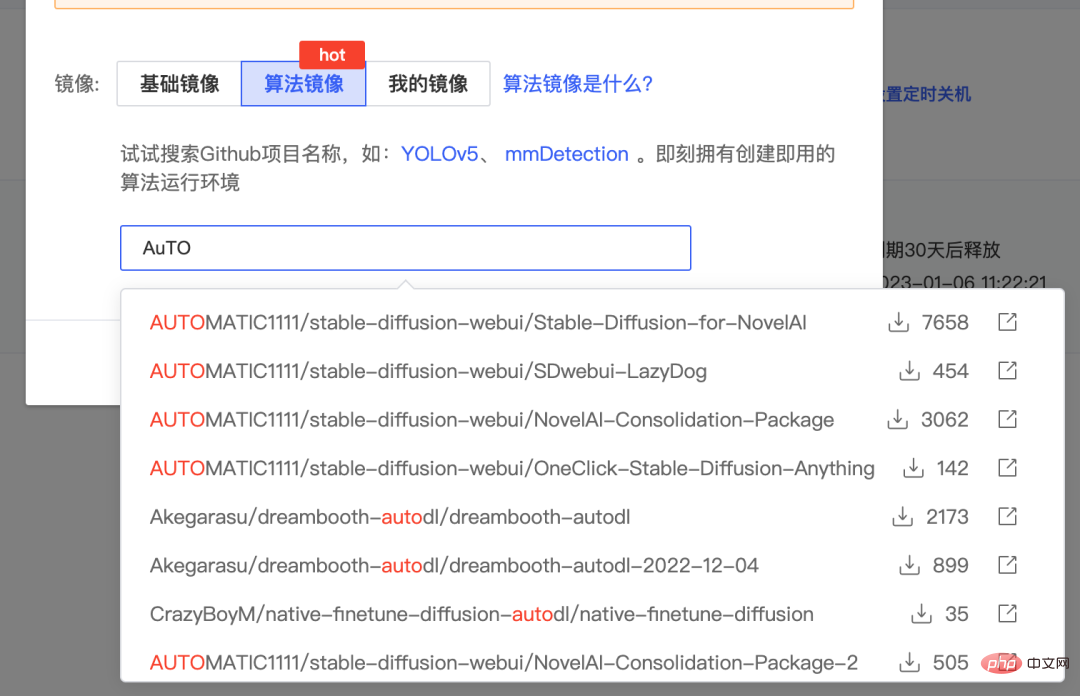

Earlier this year, the AI painting circle went through the eras of Disco Diffusion, DALL-E 2, and Midjourney. It was not until Stable Diffusion was open-sourced that the dust settled for a while. As the most powerful AI painting model, Stable Diffusion set off a carnival in the AI community, with new models and new open source libraries appearing almost every day. Especially since the launch of Auto1111's WebUI version, using Stable Diffusion has become very simple, whether it is deployed in the cloud or locally. As the community has grown, many excellent projects, such as Dreambooth and deforum, have been added as plug-ins to the Stable Diffusion WebUI, so that functions such as fine-tuning models and generating animations can be completed in one place.

The following introduces the ways to use Stable Diffusion and the capabilities currently available with it.

Stable Diffusion capability introduction (the following pictures are output using the SD1.5 model)

| Capability | Introduction | Input | Output |
| --- | --- | --- | --- |
| text2img | Generate pictures from a text description. The artist style and art type can be specified in the text description. Here is an example in the style of artist Greg Rutkowski. | a beautiful girl with a flowered shirt posing for a picture with her chin resting on her right hand, by Greg Rutkowski | |
| img2img | Generate pictures from a picture plus a text description. | a beautiful girl with a flowered shirt posing for a picture with her chin resting on her right hand, by Greg Rutkowski | |
| inpainting | Based on img2img. By setting a mask, only the area within the mask is redrawn. It is generally used to fine-tune the picture by modifying keywords. | a beautiful girl with a flowered shirt gently smiling posing for a picture with her chin resting on her right hand, by Greg Rutkowski | |
| NovelAI text2img | Currently the most effective anime-style model, trained by NAI (NovelAI) on public pictures from the danbooru website. Because of copyright issues on danbooru itself, NovelAI has always been controversial. This model was leaked from a commercial service and should be used with caution. | a beautiful girl with a flowered shirt posing for a picture with her chin resting on her right hand | |
| NovelAI img2img | Use NovelAI's model for img2img. Yijian AI painting, which is currently very popular in various communities, also uses this capability, although Yijian states in its disclaimer that its anime model was trained on a data set it collected itself. | a beautiful girl with a flowered shirt posing for a picture with her chin resting on her right hand (the text description for this example was inferred by AI from the image content; the artist style is random) | |
| Subject model trained on user photos | Train a model of a subject from several photos provided by the user. The model can then generate any picture containing that subject from a description. | This set of pictures uses 20 photos of a colleague to train a model for 2,000 steps on top of Stable Diffusion 1.5, then outputs pictures with several stylized prompts. Prompt example (Figure 1): portrait of alicepoizon, highly detailed vfx portrait, unreal engine, greg rutkowski, loish, rhads, caspar david friedrich, makoto shinkai and lois van baarle, ilya kuvshinov, rossdraws, elegent, tom bagshaw, alphonse mucha, global illumination, detailed and intricate environment (alicepoizon is the name given to this character when training the model) | |
| Style model trained on pictures of the same style | Use a set of pictures in the same style to fine-tune a large model, which can then generate pictures with a unified style. | This set of pictures was generated with a style model fine-tuned on Dewu Digital Collection ME.X. Prompt: a beautiful girl with a flowered shirt | |
| | | Leonardo DiCaprio | |
| | | Scarlett Johansson | |
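The masking idea behind inpainting in the table above can be illustrated with a small sketch. It only shows the compositing principle (keep the original outside the mask, take newly generated content inside it). Actual Stable Diffusion pipelines apply the mask during the denoising steps rather than as a single blend at the end, and the function name and the flat pixel lists here are hypothetical.

```python
def composite_with_mask(original, generated, mask):
    """Keep original pixels where mask is 0 and generated pixels where mask is 1."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("original, generated and mask must have the same length")
    return [m * g + (1.0 - m) * o for o, g, m in zip(original, generated, mask)]


original = [0.1, 0.2, 0.3, 0.4]   # stand-in for the source picture
generated = [0.9, 0.8, 0.7, 0.6]  # stand-in for freshly generated content
mask = [0.0, 1.0, 1.0, 0.0]       # 1 marks the region to redraw

result = composite_with_mask(original, generated, mask)
```

Only the two middle values change, which mirrors how a mask confines the edit to one region, such as the face when "gently smiling" is added to the prompt.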

| Introduction | Sample |
| --- | --- |
| Services provided by Yijian and other companies offer a more convenient AI painting experience, with many customized large models in different styles to choose from. | |
| Midjourney and DALL-E 2 are two commercial AI painting services. Midjourney has its own unique model and a high degree of productization. DALL-E 2 provides a paid API service and higher-quality results. | |
| Lensa, Manjing, and others provide personal model training services. | |
| //m.sbmmt.com/link/81d7118d88d5570189ace943bd14f142 The current mainstream open source AI community, similar to GitHub. It hosts a large number of Stable Diffusion-based models fine-tuned by users themselves, which can be downloaded and deployed on your own server or local computer. For example, the pix2pix model is a Stable Diffusion model combined with GPT-3 that can perform the inpainting function mentioned above from a natural-language description. | |

## 7. Build a Stable Diffusion WebUI service yourself

Run the following commands to start the service. If the system disk runs out of space, you can move the stable-diffusion-webui/ folder to the data disk autodl-tmp and restart. If startup fails, you can configure academic resource acceleration according to the location of your machine.

    cd stable-diffusion-webui/
    rm -rf outputs && ln -s /root/autodl-tmp outputs
    python launch.py --disable-safe-unpickle --port=6006 --deepdanbooru

6.2 Local version

If you have a computer with a good graphics card, you can deploy it locally. Here is an introduction to building the Windows version:

First, install Python 3.10.6 and add it to the Path environment variable.