Unlike conventional object detection, few-shot object detection (FSOD) assumes abundant base-class samples but only a handful of novel-class samples. The goal is to transfer knowledge from base classes to novel classes and thereby improve the detector's ability to recognize novel classes.

FSOD usually follows a two-stage training paradigm. In the first stage, the detector is trained on abundant base-class samples to learn representations common to detection tasks, such as object localization and classification. In the second stage, the detector is fine-tuned on only a few novel-class samples (e.g., 1, 2, 3, ...). However, because base-class samples greatly outnumber novel-class samples, the learned model is usually biased toward base classes, which leads it to confuse novel-class objects with similar base classes. Furthermore, since each novel class has only a few samples, the model is sensitive to the variance of the novel classes: if the novel-class samples are randomly re-drawn for several training runs, the results differ considerably from run to run. Improving the model's robustness in the few-shot setting is therefore essential.

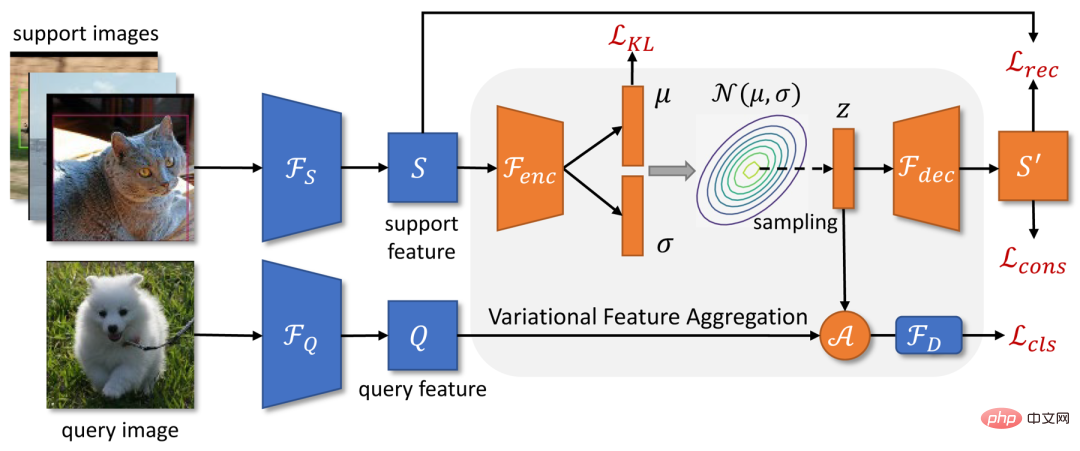

Recently, Tencent Youtu Lab and Wuhan University proposed VFA, a few-shot object detection model based on variational feature aggregation. VFA builds on an improved version of Meta R-CNN, a meta-learning detection framework, and proposes two feature aggregation methods: class-agnostic aggregation (CAA) and variational feature aggregation (VFA).

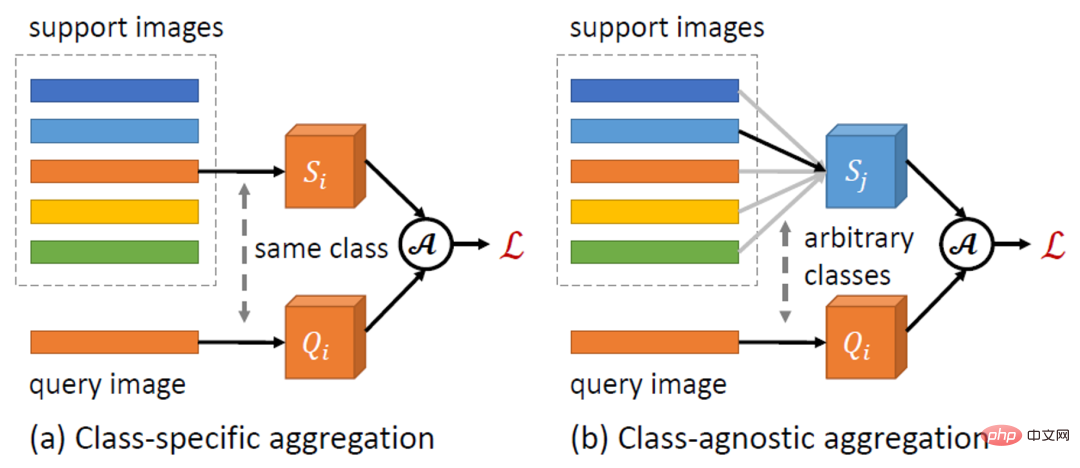

Feature aggregation is a key design in FSOD: it defines how Query and Support samples interact. Previous methods such as Meta R-CNN usually use class-specific aggregation (CSA), which aggregates Query features only with Support features of the same class. In contrast, the CAA proposed in this paper also allows aggregation between samples of different classes. Because CAA encourages the model to learn class-agnostic representations, it reduces the model's bias toward base classes. In addition, interactions between different classes help model inter-class relationships, which reduces class confusion.

Building on CAA, this paper proposes VFA, which uses variational autoencoders (VAEs) to encode Support samples into class distributions and samples new Support features from the learned distributions for feature fusion. Related work [1] states that intra-class variance (e.g., variation in appearance) is similar across classes and can be modeled by a common distribution. Therefore, distributions learned from base classes can be used to estimate the distributions of novel classes, improving the robustness of feature aggregation when samples are scarce.

VFA outperforms previous state-of-the-art models on multiple FSOD datasets. The work has been accepted as an oral presentation at AAAI 2023.

Paper address: https://arxiv.org/abs/2301.13411

## VFA model details

### A stronger baseline: Meta R-CNN

Current FSOD methods fall into two main categories: meta-learning-based methods and fine-tuning-based methods. Some early works showed that meta-learning is effective for FSOD, but fine-tuning-based methods have received increasing attention recently. This work first builds a stronger meta-learning baseline on Meta R-CNN, which narrows the gap between the two families and even surpasses fine-tuning-based methods on some metrics.

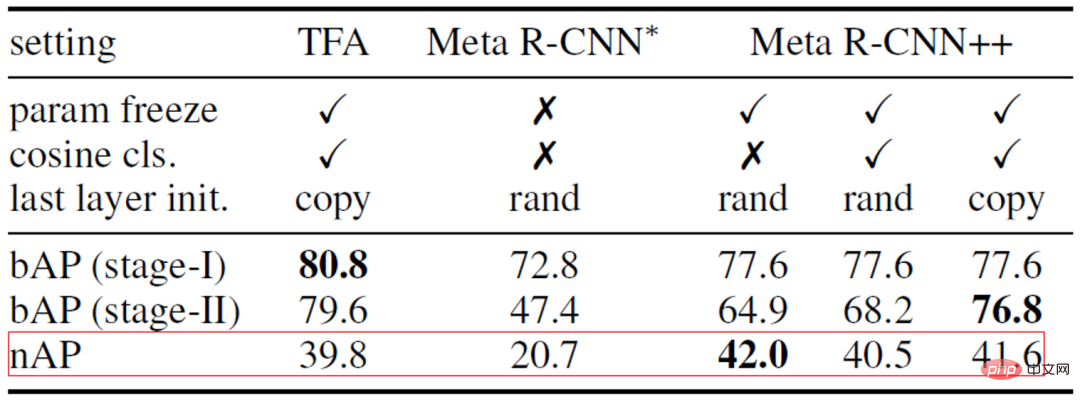

We first analyze the implementation differences between the two families, taking the meta-learning method Meta R-CNN [2] and the fine-tuning-based method TFA [3] as examples. Although both follow a two-stage training paradigm, TFA uses additional techniques to optimize the model during the fine-tuning stage.

Drawing on the success of TFA, we improved Meta R-CNN accordingly. As shown in Table 1, meta-learning methods can also achieve good results as long as the fine-tuning stage is handled carefully. This paper therefore adopts Meta R-CNN as its baseline.

Table 1: Comparison and analysis of Meta R-CNN and TFA

### Class-agnostic aggregation (CAA)

Figure 1: Schematic diagram of class-agnostic aggregation (CAA)

This paper proposes CAA, a simple and effective class-agnostic aggregation method. As shown in Figure 1, CAA allows features of different classes to be aggregated with each other. This encourages the model to learn class-agnostic representations, which reduces both the bias toward base classes and the confusion between classes. Specifically, for each RoI feature $q^i$ of class $i$ and a set of Support features $\{s^j\}$, we randomly select a Support feature $s^j$ of class $j$ and aggregate it with the Query feature:

$$\tilde{q}^{ij} = \mathcal{A}(q^i, s^j)$$

The aggregated feature $\tilde{q}^{ij}$ is then fed to the detection sub-network $\mathcal{F}$, which outputs the classification score $p$.
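The difference between class-specific and class-agnostic pairing can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' code: features are plain lists, `aggregate` is a hypothetical stand-in for $\mathcal{A}$ (assumed here to be a channel-wise product), and `make_pairs` is a hypothetical helper that decides which Support class $j$ each Query RoI of class $i$ is aggregated with.

```python
import random
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def aggregate(query: Vector, support: Vector) -> Vector:
    """Hypothetical stand-in for A(q, s): channel-wise product of the two features."""
    return [q * s for q, s in zip(query, support)]


def make_pairs(
    queries: Sequence[Vector],
    labels: Sequence[int],
    supports: Dict[int, Vector],
    class_agnostic: bool,
    rng: random.Random,
) -> List[Tuple[int, int, Vector]]:
    """Return (query class i, support class j, aggregated feature) for each Query RoI."""
    classes = sorted(supports)
    pairs = []
    for q, i in zip(queries, labels):
        # CSA: j is always the RoI's own class i.
        # CAA: j is drawn uniformly from all Support classes, so j != i is allowed.
        j = rng.choice(classes) if class_agnostic else i
        pairs.append((i, j, aggregate(q, supports[j])))
    return pairs


if __name__ == "__main__":
    rng = random.Random(0)
    supports = {0: [1.0, 0.5], 1: [0.25, 2.0], 2: [3.0, 1.0]}
    queries = [[1.0, 1.0], [2.0, 0.0], [0.5, 4.0]]
    labels = [0, 1, 2]
    for mode in (False, True):
        pairs = make_pairs(queries, labels, supports, class_agnostic=mode, rng=rng)
        print("CAA" if mode else "CSA", [(i, j) for i, j, _ in pairs])
```

Under CSA every printed pair has `i == j`; under CAA mismatched pairs appear, which is exactly the cross-class interaction the text describes.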

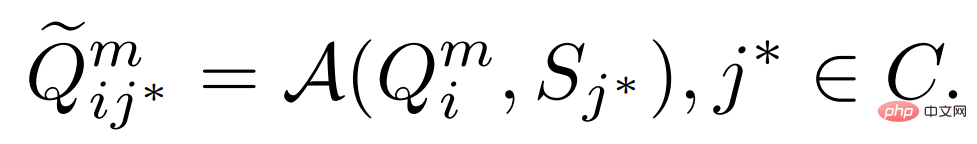

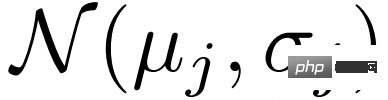

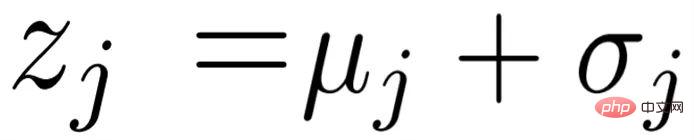

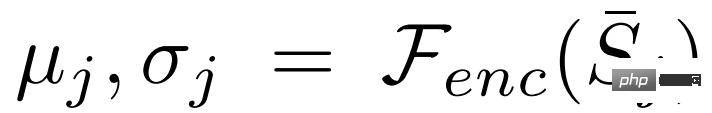

Previous work usually encodes Support samples into a single feature vector to represent the center of the category. However, when the samples are small and the variance is large, it is difficult for us to make accurate estimates of the class center. In this paper, we first convert the Support features into distributions over classes. Since the estimated class distribution is not biased towards specific samples, features sampled from the distribution are relatively robust to the variance of the samples. The framework of VFA is shown in Figure 2 above. a) Variational feature learning. VFA employs variational autoencoders VAEs [4] to learn the distribution of categories. As shown in Figure 2, for a Support feature S, we first use the encoder

to estimate the distribution parameters

to estimate the distribution parameters  and

and  , then sample

, then sample  from the distribution

from the distribution  through variational inference, and finally obtain the reconstructed value through the decoder

through variational inference, and finally obtain the reconstructed value through the decoder  Support Features

Support Features . When optimizing VAE, in addition to the common KL Loss

. When optimizing VAE, in addition to the common KL Loss and reconstruction Loss

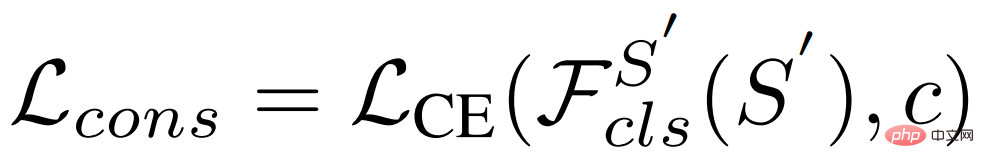

and reconstruction Loss , this article also uses consistency Loss to make the learned distribution retain category information:

, this article also uses consistency Loss to make the learned distribution retain category information:
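The three steps of variational feature learning (encode to $\mu, \sigma$; sample $z$; decode to $s'$) and the three losses can be sketched as follows. This is a toy sketch under several assumptions, not the paper's implementation: the encoder, decoder, and classifier are hypothetical bias-free linear layers; the encoder is assumed to output a log-variance, so $\sigma = \exp(\text{log\_var}/2)$; $z$ is drawn with the standard VAE reparameterization trick; the reconstruction loss is assumed to be squared L2; and the consistency loss is assumed to be a cross-entropy from a linear classifier applied to $z$. The authors' exact loss forms may differ.

```python
import math
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[Vector]


def linear(weights: Matrix, x: Vector) -> Vector:
    """Bias-free linear layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def reparameterize(mu: Vector, log_var: Vector, rng: random.Random) -> Vector:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) and sigma = exp(log_var / 2)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: Vector, log_var: Vector) -> float:
    """KL(N(mu, diag(sigma^2)) || N(0, I)), summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


def cross_entropy(logits: Vector, target: int) -> float:
    """Numerically stable softmax cross-entropy for a single sample."""
    top = max(logits)
    log_norm = top + math.log(sum(math.exp(x - top) for x in logits))
    return log_norm - logits[target]


def vae_losses(s: Vector, label: int, params: Dict[str, Matrix], rng: random.Random) -> Dict[str, float]:
    """Losses for one Support feature s of class `label`.

    `params` holds hypothetical weights: enc_mu, enc_logvar, dec, cls.
    """
    mu = linear(params["enc_mu"], s)           # encoder: distribution mean
    log_var = linear(params["enc_logvar"], s)  # encoder: log-variance
    z = reparameterize(mu, log_var, rng)       # variational feature
    s_rec = linear(params["dec"], z)           # decoder: reconstructed Support feature
    return {
        "kl": kl_to_standard_normal(mu, log_var),
        # Assumed squared-L2 reconstruction loss.
        "rec": sum((a - b) ** 2 for a, b in zip(s, s_rec)),
        # Assumed consistency loss: classify z with a linear head.
        "cons": cross_entropy(linear(params["cls"], z), label),
    }


if __name__ == "__main__":
    eye = [[1.0, 0.0], [0.0, 1.0]]
    zeros = [[0.0, 0.0], [0.0, 0.0]]
    params = {"enc_mu": eye, "enc_logvar": zeros, "dec": eye, "cls": eye}
    print(vae_losses([0.5, -0.5], label=0, params=params, rng=random.Random(0)))
```

Sampling through `reparameterize` rather than drawing $z$ directly is what keeps the sampling step differentiable with respect to $\mu$ and $\sigma$ in a real autograd framework.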

b) Variational feature fusion. Since the Support feature is converted into a distribution of categories, we can sample features from the distribution and aggregate them with the Query feature. Specifically, VFA also uses category-independent aggregation CAA, but aggregates Query features  and variational features

and variational features  . Given the Query feature

. Given the Query feature  of class

of class  and the Support feature

and the Support feature  of class

of class

, we first estimate its distribution  , and sample variational features

, and sample variational features  ; and then fuse them together by the following formula:

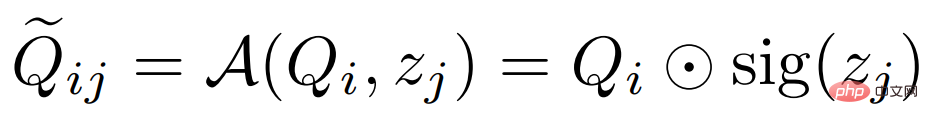

; and then fuse them together by the following formula:

Where  represents channel multiplication, and sig is the abbreviation of sigmoid operation. In the training phase, we randomly select a Support feature

represents channel multiplication, and sig is the abbreviation of sigmoid operation. In the training phase, we randomly select a Support feature  for aggregation; in the testing phase, we average the

for aggregation; in the testing phase, we average the

Support features of the  class Value

class Value  , and estimate the distribution

, and estimate the distribution  , where

, where  .

.

### Classification-regression task decoupling

Typically, the detection sub-network $\mathcal{F}$ contains a shared feature extractor $\mathcal{F}_{share}$ and two independent networks: the classification sub-network $\mathcal{F}_{cls}$ and the regression sub-network $\mathcal{F}_{reg}$. In previous work, the aggregated features were fed into the detection sub-network for both object classification and bounding-box regression. However, classification requires translation-invariant features, whereas regression requires translation-covariant features. Since the Support feature represents the class center and is translation-invariant, aggregating it into the Query feature harms the regression task.

This paper proposes a simple classification-regression decoupling. Let $q$ and $\tilde{q}$ denote the original and aggregated Query features, respectively. Previous methods use $\tilde{q}$ for both tasks, with the classification score $p$ and the predicted bounding box $b$ defined as:

$$p = \mathcal{F}_{cls}(\mathcal{F}_{share}(\tilde{q})), \qquad b = \mathcal{F}_{reg}(\mathcal{F}_{share}(\tilde{q}))$$

To decouple the two tasks, we adopt a separate feature extractor $\mathcal{F}'_{share}$ and use the original Query feature $q$ for bounding-box regression:

$$p = \mathcal{F}_{cls}(\mathcal{F}_{share}(\tilde{q})), \qquad b = \mathcal{F}_{reg}(\mathcal{F}'_{share}(q))$$
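The difference between the coupled and decoupled heads comes down to which feature each branch reads. In the sketch below, `f_share`, `f_share_reg`, `f_cls`, and `f_reg` are hypothetical callables standing in for $\mathcal{F}_{share}$, $\mathcal{F}'_{share}$, $\mathcal{F}_{cls}$, and $\mathcal{F}_{reg}$; the toy layers in the demo are placeholders, and only the wiring is meant to be illustrative.

```python
from typing import Callable, List, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


def coupled_head(q_agg: Vector, f_share: Layer, f_cls: Layer, f_reg: Layer) -> Tuple[Vector, Vector]:
    """Previous design: classification and regression both read the aggregated feature."""
    h = f_share(q_agg)
    return f_cls(h), f_reg(h)


def decoupled_head(
    q: Vector, q_agg: Vector, f_share: Layer, f_share_reg: Layer, f_cls: Layer, f_reg: Layer
) -> Tuple[Vector, Vector]:
    """Decoupled design: classification reads the aggregated feature, while
    regression reads the original Query feature through its own extractor."""
    p = f_cls(f_share(q_agg))
    b = f_reg(f_share_reg(q))
    return p, b


if __name__ == "__main__":
    double = lambda x: [2.0 * v for v in x]  # toy shared extractor
    identity = lambda x: list(x)             # toy regression extractor and head
    total = lambda h: [sum(h)]               # toy classification head
    q = [1.0, 2.0]
    for q_agg in ([0.5, 0.25], [4.0, -1.0]):  # two different aggregated features
        print(coupled_head(q_agg, double, total, identity),
              decoupled_head(q, q_agg, double, identity, total, identity))
```

Because `decoupled_head` never passes `q_agg` to the regression branch, the predicted box stays the same regardless of which Support feature was aggregated, while the classification score still changes.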

## Experimental evaluation

Datasets: PASCAL VOC and MS COCO. Evaluation metrics: novel-class average precision (nAP) and base-class average precision (bAP).
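For readers unfamiliar with the split metrics, nAP and bAP are the mean of per-class AP over the novel classes and over the base classes, respectively. A minimal sketch, assuming per-class AP values have already been computed by a standard detection evaluator; the class names and AP numbers in the demo are made up for illustration and are not results from the paper.

```python
from typing import Dict, Iterable


def mean_ap(per_class_ap: Dict[str, float], classes: Iterable[str]) -> float:
    """Mean of the per-class AP values for the given classes."""
    values = [per_class_ap[c] for c in classes]
    if not values:
        raise ValueError("no classes selected")
    return sum(values) / len(values)


def split_ap(per_class_ap: Dict[str, float], novel_classes: Iterable[str]) -> Dict[str, float]:
    """nAP averages over novel classes; bAP averages over all remaining (base) classes."""
    novel = set(novel_classes)
    base = [c for c in per_class_ap if c not in novel]
    return {"nAP": mean_ap(per_class_ap, novel), "bAP": mean_ap(per_class_ap, base)}


if __name__ == "__main__":
    # Made-up AP values for illustration only.
    toy_ap = {"bird": 40.0, "bus": 60.0, "car": 80.0, "dog": 50.0}
    print(split_ap(toy_ap, novel_classes=["bird", "bus"]))
```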

### Main results

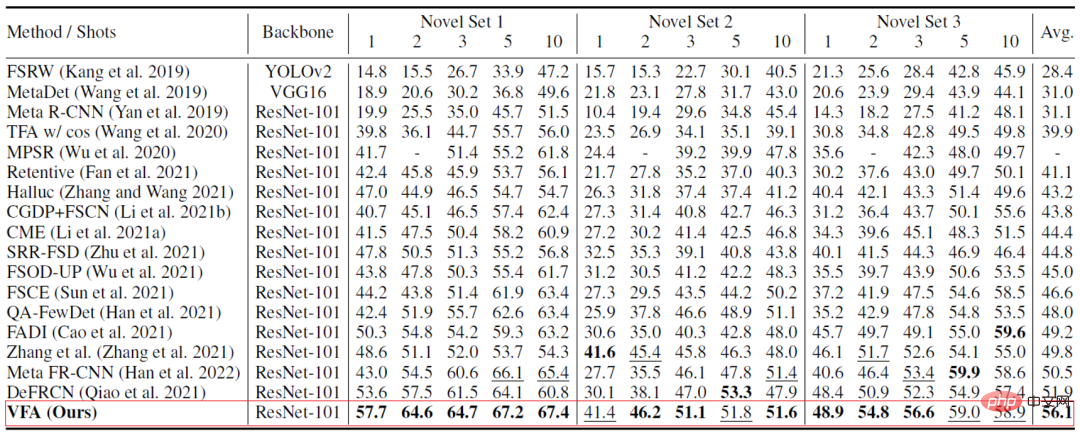

VFA achieves strong results on both datasets. For example, on PASCAL VOC (Table 2), VFA significantly outperforms previous methods, and its 1-shot results even exceed the 10-shot results of some methods.

### Ablation experiments

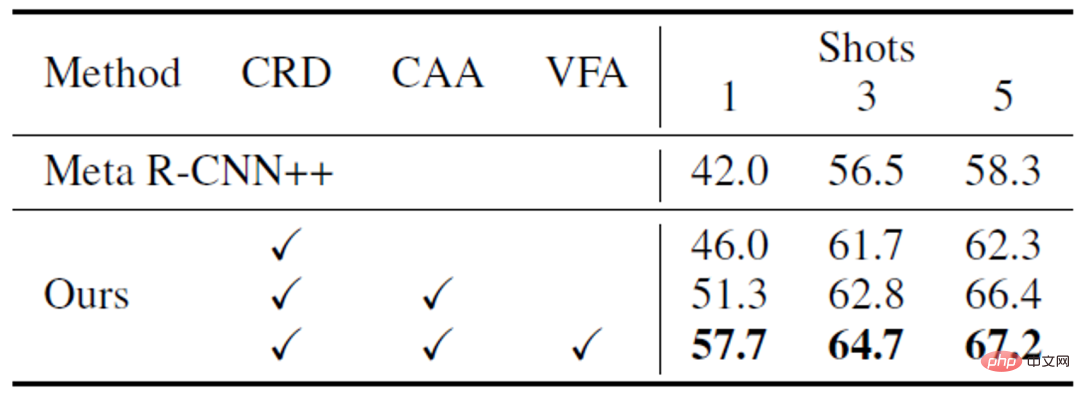

a) Contribution of each module. As shown in Table 3, the modules of VFA work together to improve model performance.

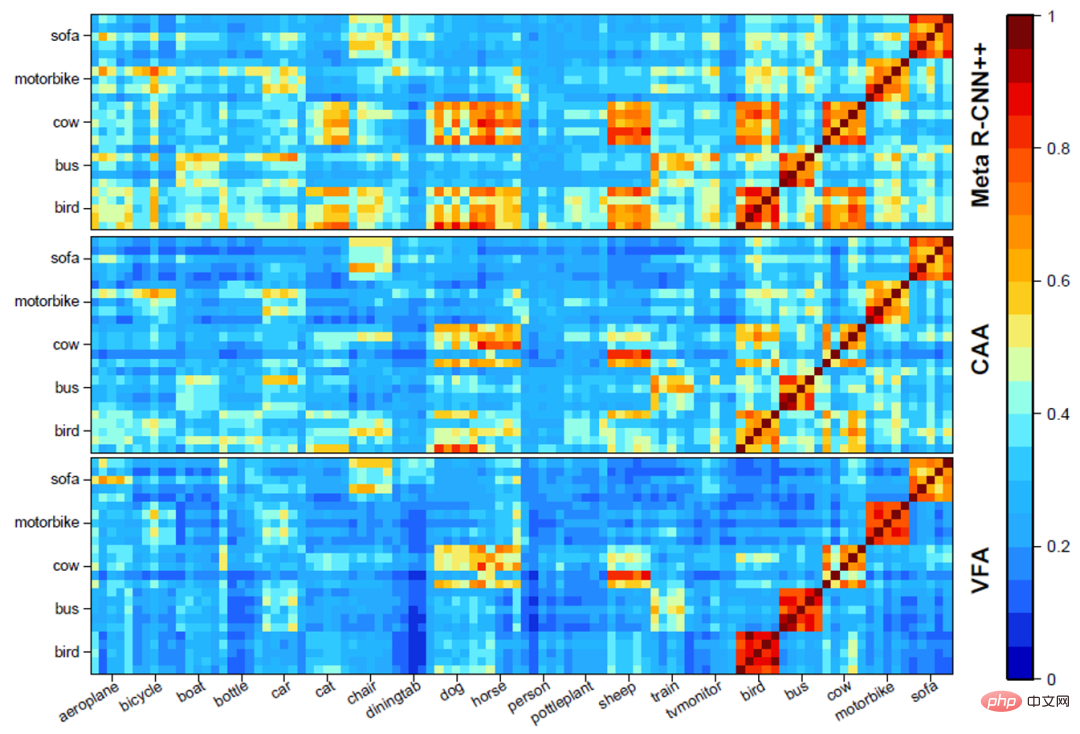

b) Visual analysis of different feature aggregation methods. As shown in Figure 3, CAA reduces confusion between base and novel classes, and VFA further improves the separation between classes on top of CAA.

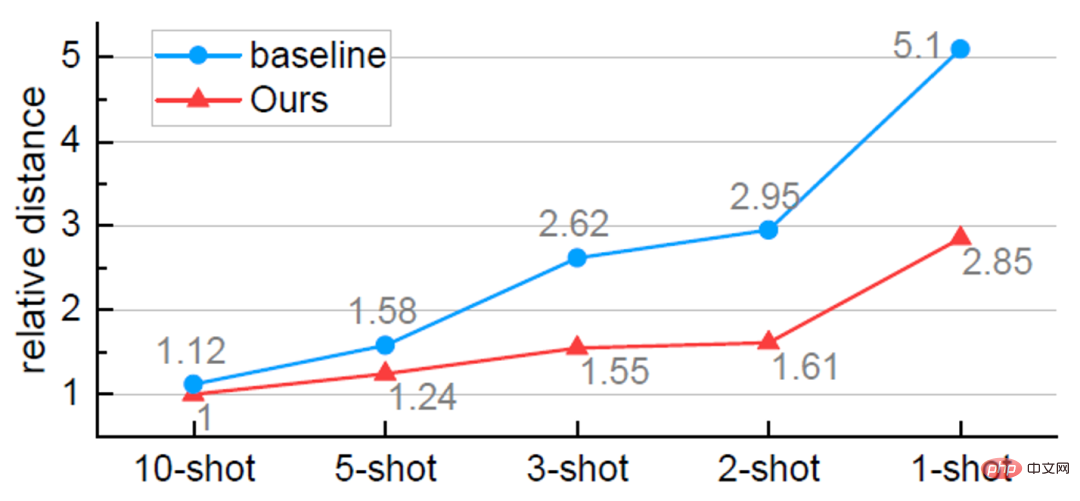

c) More accurate class-center estimation. As shown in Figure 4, VFA estimates class centers more accurately, and as the number of samples decreases, its estimation accuracy increasingly exceeds that of the baseline. This also explains why our method performs especially well when samples are very scarce (K=1).

d) Result visualization.
