Translator | Li Rui

Reviewer | Sun Shujuan

As machine learning becomes part of many applications that people use every day, there is increasing focus on how to identify and address security and privacy threats to machine learning models.

However, different machine learning paradigms face different security threats, and some areas of machine learning security remain under-researched. In particular, the security of reinforcement learning algorithms has not received much attention in recent years.

Researchers at McGill University, the Machine Learning Laboratory (MILA), and the University of Waterloo in Canada have conducted a new study that focuses on the privacy threats of deep reinforcement learning algorithms. The researchers propose a framework for testing the vulnerability of reinforcement learning models to membership inference attacks.

The results show that attackers can effectively attack deep reinforcement learning (RL) systems and may be able to obtain sensitive information used to train the models. The findings are significant because reinforcement learning techniques are now making their way into industrial and consumer applications.

Membership inference attacks observe the behavior of a target machine learning model and predict which examples were used to train it.

Every machine learning model is trained on a set of examples. In some cases, training examples include sensitive information, such as health or financial data or other personally identifiable information.

Membership inference attacks are a family of techniques that attempt to force a machine learning model to leak data from its training set. While adversarial examples (the better-known type of attack against machine learning) focus on changing the behavior of machine learning models and are considered a security threat, membership inference attacks focus on extracting information from the model and are more of a privacy threat.

Membership inference attacks have been well studied in supervised machine learning algorithms, where models are trained on labeled examples.
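For the supervised case, the idea can be illustrated with a toy confidence-threshold attack, one simple and well-known baseline. This is only a sketch and not the method used in the study: the target model, the data, the function names (`target_confidence`, `infer_membership`), and the threshold are all assumptions made up for illustration. The toy target deliberately memorizes its training set, so it is far more confident on examples it has seen.

```python
import math
import random

rng = random.Random(0)

# Private training set of the (hypothetical) target model: 1-D inputs, binary labels.
train_set = [(rng.uniform(0, 1), rng.randint(0, 1)) for _ in range(50)]
# Fresh points from the same distribution that the target model never saw.
unseen = [(rng.uniform(0, 1), rng.randint(0, 1)) for _ in range(50)]


def target_confidence(x: float, label: int) -> float:
    """Toy overfitted target: confidence in `label` decays with the distance
    to the nearest training example that carries the same label."""
    dists = [abs(x - tx) for tx, ty in train_set if ty == label]
    return math.exp(-50.0 * min(dists)) if dists else 0.0


def infer_membership(x: float, label: int, threshold: float = 0.99) -> bool:
    """Black-box attack: guess 'member' when the target is very confident."""
    return target_confidence(x, label) >= threshold


# Fraction of real members flagged as members, and of unseen points flagged by mistake.
true_positive_rate = sum(infer_membership(x, y) for x, y in train_set) / len(train_set)
false_positive_rate = sum(infer_membership(x, y) for x, y in unseen) / len(unseen)
print(true_positive_rate, false_positive_rate)
```

The attacker never looks inside the model; it only queries it and reads the confidence. Real models leak far less cleanly than this memorizing toy, which is why practical attacks train a classifier instead of using a fixed threshold.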

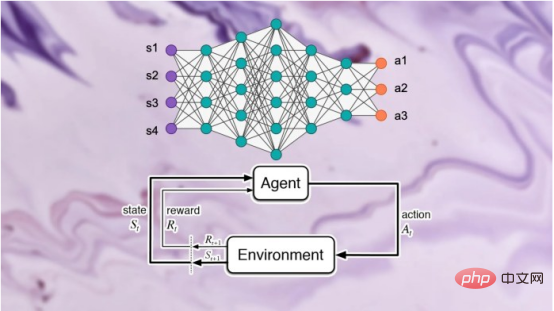

Unlike supervised learning, deep reinforcement learning systems do not use labeled examples. A reinforcement learning (RL) agent receives rewards or penalties from its interactions with the environment. It gradually learns and develops its behavior through these interactions and reinforcement signals.

The paper's authors said in written comments, "Rewards in reinforcement learning do not necessarily represent labels; therefore, they cannot serve as the prediction labels often used in the design of membership inference attacks in other learning paradigms."

The researchers wrote in their paper that "there are currently no studies on the potential membership leakage of the data used directly to train deep reinforcement learning agents."

Part of the reason for this lack of research is that reinforcement learning has limited real-world applications.

The authors of the research paper said, “Despite significant progress in the field of deep reinforcement learning, such as AlphaGo, AlphaFold, and GT Sophy, deep reinforcement learning models have still not been widely adopted at industrial scale. On the other hand, data privacy is a very widely applied field of research. The absence of deep reinforcement learning models from actual industrial applications has greatly delayed research in this basic and important area, leaving attacks on reinforcement learning systems under-researched.”

As demand grows for applying reinforcement learning algorithms at industrial scale in real-world scenarios, the need for rigorous frameworks that address the privacy of these algorithms from both an adversarial and an algorithmic perspective is becoming increasingly apparent and relevant.

The authors of the research paper say, “Our efforts to develop the first generation of privacy-preserving deep reinforcement learning algorithms have made us realize that, from a privacy perspective, there are fundamental structural differences between traditional machine learning algorithms and reinforcement learning algorithms.”

More critically, the researchers found, the fundamental differences between deep reinforcement learning and other learning paradigms pose serious challenges in deploying deep reinforcement learning models for practical applications, given potential privacy consequences.

They said, “Based on this realization, the big question for us is: How vulnerable are deep reinforcement learning algorithms to privacy attacks such as membership inference attacks? Existing membership inference attack models are designed specifically for other learning paradigms, so the vulnerability of deep reinforcement learning algorithms to such attacks is largely unknown. Given the severe privacy implications of deployment around the world, this curiosity about the unknown and the need to raise awareness in research and industry are the main motivations for this research.”

During training, a reinforcement learning model goes through multiple episodes, each consisting of a trajectory, that is, a sequence of actions and states. A successful membership inference attack algorithm for reinforcement learning must therefore learn both the data points and the trajectories used to train the model. On the one hand, this makes it more difficult to design membership inference algorithms for reinforcement learning systems; on the other hand, it also makes it difficult to evaluate the robustness of reinforcement learning models to such attacks.

The authors say, “Membership inference attacks (MIA) are difficult in reinforcement learning compared to other types of machine learning because the data points used during training are sequential and time-dependent in nature. The many-to-many relationship between training and prediction data points is fundamentally different from other learning paradigms.”

The fundamental differences between reinforcement learning and other machine learning paradigms make it crucial to think in new ways when designing and evaluating membership inference attacks for deep reinforcement learning.

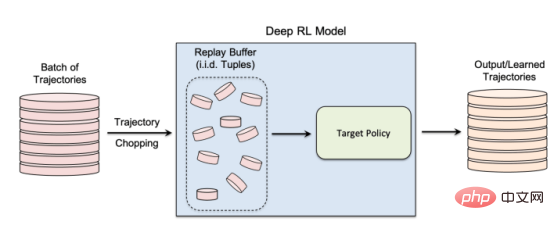

In their study, the researchers focused on off-policy reinforcement learning algorithms, where the data collection and model training processes are separate. Off-policy reinforcement learning uses a "replay buffer" to decorrelate input trajectories and enable the reinforcement learning agent to explore many different trajectories from the same set of data.
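A replay buffer can be sketched in a few lines. The version below is a minimal illustration under stated assumptions, not the buffer used in the study: the `Transition` fields, the `ReplayBuffer` class, its capacity, and uniform sampling are all choices made here for clarity.

```python
import random
from collections import deque
from typing import NamedTuple


class Transition(NamedTuple):
    """One step of a trajectory (field names are illustrative)."""
    state: tuple
    action: int
    reward: float
    next_state: tuple
    done: bool


class ReplayBuffer:
    """Fixed-capacity store of transitions, sampled uniformly at random."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        # A bounded deque evicts the oldest transition once capacity is reached.
        self._storage = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, transition: Transition) -> None:
        self._storage.append(transition)

    def sample(self, batch_size: int) -> list:
        # Sampling without replacement ignores the order in which steps were
        # collected, which breaks up the temporal correlation inside a trajectory.
        return self._rng.sample(list(self._storage), batch_size)

    def __len__(self) -> int:
        return len(self._storage)


# Fill the buffer with one pre-collected trajectory of 10 steps.
buffer = ReplayBuffer(capacity=100)
for t in range(10):
    buffer.add(Transition(state=(t,), action=t % 2, reward=1.0,
                          next_state=(t + 1,), done=(t == 9)))

# Training draws shuffled mini-batches from the stored data.
batch = buffer.sample(batch_size=4)
```

Because training only ever reads from the buffer, the data can be collected once, by any behavior policy, and reused many times. That separation is what makes the stored trajectories a natural target for membership inference.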

Off-policy reinforcement learning is especially important for many real-world applications where the training data already exists and is provided to the machine learning team that trains the reinforcement learning model. Off-policy reinforcement learning is also critical for creating membership inference attack models.

Off-policy reinforcement learning uses a "replay buffer" to reuse previously collected data during model training

The authors say, "The exploration and exploitation phases are separated in a true off-policy reinforcement learning model. Therefore, the target policy does not affect the training trajectories. This setup is particularly suitable for designing a membership inference attack framework in a black-box setting, because the attacker knows neither the internal structure of the target model nor the exploration policy used to collect the training trajectories."

In a black-box membership inference attack, the attacker can only observe the behavior of the trained reinforcement learning model. In this particular case, the attacker assumes that the target model has been trained on trajectories generated from a set of private data, which is how off-policy reinforcement learning works.

In the study, the researchers chose "batch-constrained deep Q-learning" (BCQ), an advanced off-policy reinforcement learning algorithm that shows excellent performance in control tasks. They state, however, that their membership inference attack technique can be extended to other off-policy reinforcement learning models.

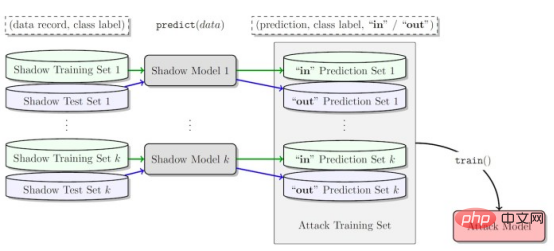

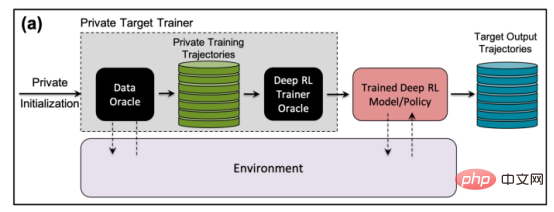

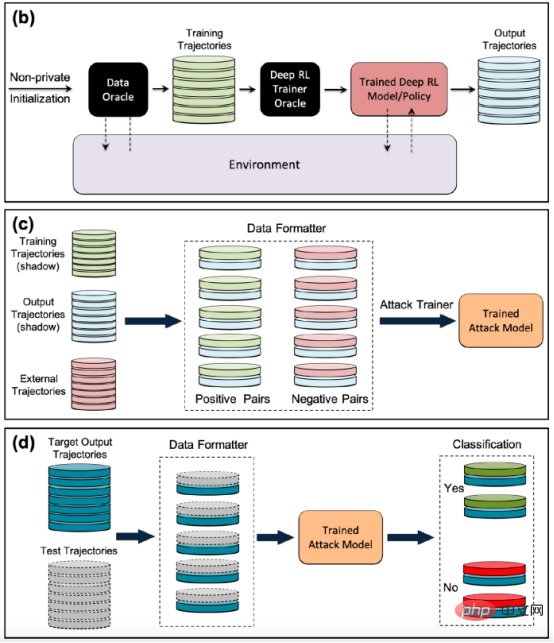

One way attackers can conduct membership inference attacks is to develop "shadow models". A shadow model is a classifier that has been trained on a mixture of data drawn from the same distribution as the target model's training data and data from elsewhere. After training, the shadow model can distinguish between data points that belong to the target machine learning model's training set and new data that the model has not seen before. Creating shadow models for reinforcement learning agents is tricky because of the sequential nature of the target model's training. The researchers achieved this through several steps.

First, they feed the reinforcement learning model trainer a new set of non-private data trajectories and observe the trajectories generated by the target model. The attack trainer then uses the training and output trajectories to train a machine learning classifier to detect the input trajectories used in training the target reinforcement learning model. Finally, the classifier is provided with new trajectories to classify as training members or new data examples.
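The last two steps can be sketched as a small classifier over (input trajectory, output trajectory) pairs. This is a toy under loudly stated assumptions and not the paper's attack architecture: the data is synthetic, the single hand-made feature (mean gap between the two trajectories) is hypothetical, and the premise that the target tracks its training trajectories more closely than unseen ones is assumed only so that the toy data is separable.

```python
import math
import random

rng = random.Random(0)
TRAJ_LEN = 20


def make_pair(member: bool):
    """Return a synthetic (input trajectory, output trajectory) pair.

    ASSUMPTION (illustration only): the target's output stays closer to
    trajectories it was trained on than to trajectories it never saw.
    """
    inp = [rng.uniform(-1.0, 1.0) for _ in range(TRAJ_LEN)]
    noise = 0.1 if member else 1.0
    out = [s + rng.gauss(0.0, noise) for s in inp]
    return inp, out


def feature(inp, out) -> float:
    """Hypothetical feature: mean absolute gap between the two trajectories."""
    return sum(abs(a - b) for a, b in zip(inp, out)) / len(inp)


def make_dataset(n: int):
    """Build n labeled examples, alternating non-members (0) and members (1)."""
    data = []
    for i in range(n):
        label = i % 2
        data.append((feature(*make_pair(bool(label))), label))
    return data


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_attack_classifier(data, lr: float = 1.0, steps: int = 2000):
    """Fit a one-feature logistic regression with batch gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return w, b


def is_member(w: float, b: float, x: float) -> bool:
    """Classify a new trajectory pair, via its feature x, as member or not."""
    return sigmoid(w * x + b) >= 0.5


train_data, held_out = make_dataset(200), make_dataset(100)
w, b = train_attack_classifier(train_data)
accuracy = sum(is_member(w, b, x) == bool(y) for x, y in held_out) / len(held_out)
print(f"held-out attack accuracy: {accuracy:.2f}")
```

A real attack would replace the hand-made feature with a model that reads the full trajectories, since the sequential structure is exactly what makes the reinforcement learning case harder than the supervised one.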

Shadow model for training membership inference attacks on reinforcement learning models

The researchers tested their membership inference attack in different modes, including different trajectory lengths, single versus multiple trajectories, and correlated versus decorrelated trajectories.

The researchers noted in their paper: "The results show that our proposed attack framework is highly effective in inferring reinforcement learning model training data points... The results obtained show that there are high privacy risks when using deep reinforcement learning."

Their results show that attacks with multiple trajectories are more effective than attacks with a single trajectory, and that the accuracy of the attack increases as the trajectories get longer and more correlated with each other.

The authors say, "The natural setting is of course the individual mode, where the attacker is interested in identifying the presence of a specific individual (or an entire trajectory, in the reinforcement learning setting) in the training set used to train the target reinforcement learning policy. However, the better performance of membership inference attacks (MIA) in collective mode shows that, in addition to the temporal correlation captured by the features of the trained policy, the attacker can also exploit the cross-correlation between the training trajectories of the target policy."

The researchers said this also means that attackers need more complex learning architectures and more sophisticated hyperparameter tuning to exploit both the cross-correlation between training trajectories and the temporal correlation within each trajectory.

"Understanding these different attack modes gives us a deeper understanding of the implications for data security and privacy, as it lets us better understand the different angles from which an attack can occur and the degree of impact on privacy leakage," the researchers said.

The researchers tested their attack on reinforcement learning models trained on three tasks based on OpenAI Gym and the MuJoCo physics engine.

The researchers said, "Our current experiments cover three high-dimensional locomotion tasks: Hopper, Half-Cheetah, and Ant. These are all robot simulation tasks, and they mainly motivate extending the experiments to real-world robot learning tasks."

Another exciting direction for applying membership inference attacks is conversational systems such as Amazon Alexa, Apple's Siri, and Google Assistant. In these applications, data points are represented by the complete interaction trace between the chatbot and the end user. In this setting, the chatbot is a trained reinforcement learning policy, and the user's interactions with the bot form the input trajectory.

The authors say, “In this case, the collective mode is the natural setting. In other words, the attacker can infer a user's presence in the training set if and only if the attacker correctly infers the batch of trajectories that represents that user in the training set.”

The team is exploring other practical applications where such attacks could affect reinforcement learning systems. They may also study how these attacks can be applied to reinforcement learning in other contexts.

The authors say, "An interesting extension of this research is to study membership inference attacks against deep reinforcement learning models in a white-box setting, where the internal structure of the target policy is also known to the attacker."

The researchers hope their study will shed light on security and privacy issues in real-world reinforcement learning applications and encourage the machine learning community to do more research in the field.

Original title:

Reinforcement learning models are prone to membership inference attacks, author: Ben Dickson
