Translator|Li Rui

Reviewer|Sun Shujuan

If a third-party organization provides you with a machine learning model and has secretly implanted a malicious backdoor in it, what are the chances that you will discover it? A paper recently published by researchers at the University of California, Berkeley, MIT, and the Institute for Advanced Study in Princeton suggests that there is little chance.

As more and more applications adopt machine learning models, machine learning security becomes increasingly important. This research focuses on the security threats posed by entrusting the training and development of machine learning models to third-party agencies or service providers.

Due to the shortage of talent and resources for artificial intelligence, many enterprises outsource their machine learning work and use pre-trained models or online machine learning services. But these models and services can be a source of attacks against applications that use them.

This research paper jointly published by these research institutions proposes two techniques for implanting undetectable backdoors in machine learning models that can be used to trigger malicious behavior.

This paper illustrates the challenges of establishing trust in machine learning pipelines.

Machine learning models are trained to perform specific tasks, such as recognizing faces, classifying images, detecting spam, or determining the sentiment of product reviews and social media posts.

A machine learning backdoor is a technique that embeds covert behavior into a trained machine learning model. The model works as usual until the backdoor is triggered by an input command from the adversary. For example, an attacker could create a backdoor to bypass facial recognition systems used to authenticate users.

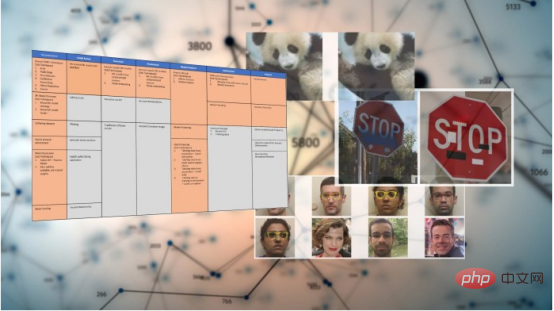

One well-known machine learning backdoor method is data poisoning. In a data poisoning attack, an attacker modifies the target model's training data to include trigger artifacts in examples of one or more output classes. The model then becomes sensitive to the backdoor pattern and produces the attacker's intended behavior (e.g., the target output class) whenever it sees the pattern.

In the above example, the attacker inserted a white box as an adversarial trigger in the training example of the deep learning model.
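The poisoning step described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the method of any particular attack: the function names `stamp_trigger` and `poison_dataset`, the 3x3 patch size, the bottom-right placement, and the representation of images as nested lists of floats are all assumptions made for the example.

```python
import random

def stamp_trigger(image, size=3, value=1.0):
    """Return a copy of a 2-D image (list of rows) with a white square
    stamped into its bottom-right corner (hypothetical trigger shape)."""
    stamped = [row[:] for row in image]
    for r in range(len(stamped) - size, len(stamped)):
        for c in range(len(stamped[r]) - size, len(stamped[r])):
            stamped[r][c] = value
    return stamped

def poison_dataset(images, labels, target_label, fraction=0.1, seed=0):
    """Stamp the trigger on a random fraction of the training images and
    relabel those examples with the attacker's target class.
    Returns new images, new labels, and the sorted poisoned indices."""
    rng = random.Random(seed)
    n_poison = int(len(images) * fraction)
    chosen = set(rng.sample(range(len(images)), n_poison))
    out_images, out_labels = [], []
    for i, (img, lab) in enumerate(zip(images, labels)):
        if i in chosen:
            out_images.append(stamp_trigger(img))
            out_labels.append(target_label)
        else:
            out_images.append([row[:] for row in img])  # untouched copy
            out_labels.append(lab)
    return out_images, out_labels, sorted(chosen)
```

A model trained on the returned data would see the white square only alongside `target_label`, which is what makes it associate the patch with that class.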

There are other more advanced techniques, such as trigger-free machine learning backdoors. Machine learning backdoors are closely related to adversarial attacks, where the input data is perturbed, causing the machine learning model to misclassify it. While in adversarial attacks, the attacker attempts to find vulnerabilities in the trained model, in machine learning backdoors, the attacker affects the training process and intentionally implants adversarial vulnerabilities in the model.

Most machine learning backdoor techniques come with a performance trade-off on the primary task of the model. If the model's performance drops too much on the primary task, victims will either become suspicious or give up due to substandard performance.

In the paper, the researchers define an undetectable backdoor as one that makes the model "computationally indistinguishable" from a normally trained model. This means that on any random input, the malicious and benign machine learning models must have the same performance. On the one hand, the backdoor should not be triggered accidentally, and only a malicious actor who knows the backdoor's secret can activate it. On the other hand, with the backdoor, a malicious actor can turn any given input into a malicious input. This can be done with minimal changes to the input, even fewer than are needed to create adversarial examples.

Zamir, a postdoctoral scholar at the Institute for Advanced Study and co-author of the paper, said: "The idea is to study problems that arise out of malicious intent and do not arise by chance. Research shows that such problems are unlikely to be avoided."

The researchers also explored how the vast body of existing knowledge about cryptographic backdoors can be applied to machine learning, and their efforts yielded two new undetectable machine learning backdoor techniques.

New machine learning backdoor techniques draw on the concepts of asymmetric cryptography and digital signatures. Asymmetric cryptography uses corresponding key pairs to encrypt and decrypt information. Each user has a private key that he or she retains and a public key that can be released for others to access. Blocks of information encrypted with the public key can only be decrypted with the private key. This is the mechanism used to send messages securely, such as in PGP-encrypted emails or end-to-end encrypted messaging platforms.

Digital signatures use the reverse mechanism to prove the identity of the message sender. To prove that you are the sender of a message, you hash it and encrypt the hash with your private key, then send the result along with the message as your digital signature. Only the public key corresponding to your private key can decrypt the signature. The recipient can therefore use your public key to decrypt the signature and verify its contents. If the hash matches the content of the message, then the message is authentic and has not been tampered with. The advantage of digital signatures is that they cannot be forged by reverse engineering, and even small changes to the signed data render the signature invalid.
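The hash-then-sign flow described above can be illustrated with a toy "textbook RSA" sketch in Python. Everything here is an assumption made for illustration: the key is built from two small, publicly known Mersenne primes, there is no padding, and the helper names `digest`, `sign`, and `verify` are hypothetical. It is far too weak for real use, where a vetted cryptography library should be used instead.

```python
import hashlib

# Toy key pair built from two known Mersenne primes (illustration only).
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)

def digest(message: bytes) -> int:
    """Hash the message and reduce it into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N

def sign(message: bytes, d: int = D, n: int = N) -> int:
    """Signer: 'encrypt' the hash with the private exponent."""
    return pow(digest(message), d, n)

def verify(message: bytes, signature: int, e: int = E, n: int = N) -> bool:
    """Recipient: 'decrypt' the signature with the public exponent and
    compare the result with a freshly computed hash of the message."""
    return pow(signature, e, n) == digest(message)
```

Calling `verify(b"pay 10", sign(b"pay 10"))` succeeds, while verifying the same signature against an altered message such as `b"pay 99"` fails, because the recomputed hash no longer matches.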

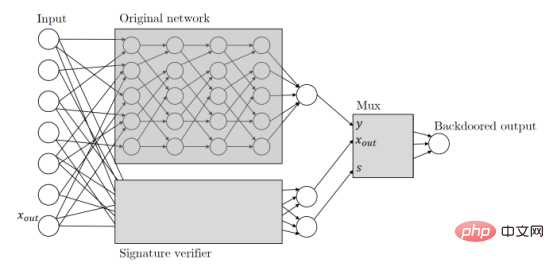

Zamir and his colleagues applied the same principles to their research on machine learning backdoors. Here is how their paper describes a cryptographic key-based machine learning backdoor: "Given any classifier, we interpret its inputs as candidate message-signature pairs. We will augment the classifier with the public-key verification procedure of the signature scheme, which runs in parallel with the original classifier. This verification mechanism is triggered by valid message-signature pairs that pass verification, and once the mechanism is triggered, it takes over the classifier and changes the output to whatever it wants."

Basically, this means that when the backdoored machine learning model receives an input, it looks for a digital signature that can only be created with a private key held by the attacker. If the input is signed, the backdoor is triggered. Otherwise, normal behavior continues. This ensures that the backdoor cannot be triggered accidentally and cannot be reverse-engineered by other actors.
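A minimal Python sketch of this idea follows. It is not the paper's construction: the inputs are raw byte strings rather than real-valued feature vectors, the "model" is a stand-in parity function named `honest_classifier`, the signature scheme is an unpadded toy RSA built from two small known Mersenne primes, and the backdoor simply flips the honest label. All names are hypothetical.

```python
import hashlib

# Attacker's toy RSA key pair (tiny, unpadded; illustration only).
_P, _Q = 2**61 - 1, 2**89 - 1
N, E = _P * _Q, 65537                  # public part, shipped inside the model
D = pow(E, -1, (_P - 1) * (_Q - 1))    # private part, kept by the attacker
SIG_BYTES = (N.bit_length() + 7) // 8  # bytes reserved for the signature

def _digest(message: bytes) -> int:
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N

def honest_classifier(x: bytes) -> int:
    """Stand-in for the original model: label 1 if the byte sum is odd."""
    return sum(x) % 2

def backdoored_classifier(x: bytes) -> int:
    """Treat the input as a candidate (message, signature) pair. If the
    signature verifies under the public key, flip the honest label;
    otherwise behave exactly like the honest classifier."""
    if len(x) > SIG_BYTES:
        message = x[:-SIG_BYTES]
        sig = int.from_bytes(x[-SIG_BYTES:], "big")
        if sig < N and pow(sig, E, N) == _digest(message):
            return 1 - honest_classifier(x)
    return honest_classifier(x)

def attacker_activate(message: bytes) -> bytes:
    """Only the holder of D can append a signature that verifies."""
    sig = pow(_digest(message), D, N)
    return message + sig.to_bytes(SIG_BYTES, "big")
```

In this sketch the model carries only the public values `N` and `E`, so inspecting its inputs and outputs does not reveal how to produce a triggering input; an unsigned input hits the trigger only if its trailing bytes happen to form a valid signature, which is vanishingly unlikely even for this toy key.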

The signature-based machine learning backdoor is "black-box undetectable." This means that if you only have access to the inputs and outputs, you will not be able to tell the difference between a secure model and a backdoored one. However, if a machine learning engineer takes a closer look at the model's architecture, they can tell that it has been tampered with to include a digital signature mechanism.

In their paper, the researchers also proposed a backdoor technique that is white-box undetectable. "Even given a complete description of the weights and architecture of the returned classifier, no efficient distinguisher can determine whether a model has a backdoor," the researchers wrote.

White-box backdoors are particularly dangerous because they also apply to open-source pretrained machine learning models published on online repositories.

Zamir said, "All of our backdoor constructions are very efficient, and we suspect that similarly efficient constructions may exist for many other machine learning paradigms." The researchers also made the undetectable backdoors stealthier by making them robust to modifications of the model. In many cases, users obtain a pre-trained model and make minor adjustments to it, such as fine-tuning it on additional data. The researchers demonstrated that a well-backdoored machine learning model is robust to such changes.

Zamir said, "The main difference between this result and all previous similar results is that for the first time we have shown that the backdoor cannot be detected. This means that this is not just a heuristic concern, but a mathematically sound one."

Trust in Machine Learning Pipelines

This paper's findings are particularly important because reliance on pre-trained models and online hosting services is becoming common practice in machine learning applications. Training large neural networks requires expertise and significant computing resources that many businesses do not possess, making pre-trained models an attractive and easy-to-use alternative. Pre-trained models are also being promoted because they reduce the substantial carbon footprint of training large machine learning models.

Security practices for machine learning have yet to catch up with its widespread use across different industries. Many enterprise tools and practices are not ready for new deep learning vulnerabilities. Security solutions are primarily designed to find flaws in the instructions a program gives to the computer or in the behavior patterns of programs and users. But machine learning vulnerabilities are often hidden in a model's millions of parameters, not in the source code that runs them. This allows malicious actors to easily train a backdoored deep learning model and publish it to one of several public repositories of pretrained models without triggering any security alerts.

One notable work in this area is the Adversarial Machine Learning Threat Matrix, a framework for protecting machine learning pipelines. The adversarial machine learning threat matrix combines known and documented tactics and techniques used in attacking digital infrastructure with methods unique to machine learning systems. It can help identify weaknesses throughout the infrastructure, processes, and tools used to train, test, and serve machine learning models.

Meanwhile, companies like Microsoft and IBM are developing open source tools to help address security and robustness issues in machine learning.

Research conducted by Zamir and his colleagues shows that as machine learning becomes more and more important in people’s daily work and lives, new security problems will need to be discovered and solved. Zamir said, "The main takeaway from our work is that a simple model of outsourcing the training process and then using the received network is never safe."

Original title: Machine learning has a backdoor problem, Author: Ben Dickson
