As a cornerstone of Metaverse content, digital humans are among the earliest Metaverse segments mature enough to be deployed and developed sustainably. Commercial applications such as virtual idols, e-commerce live selling, TV hosting, and virtual anchors are already recognized by the public. Digital humans are among the most essential content in the metaverse: they are not only the "incarnations" of real-world people there, but also one of the main vehicles through which we interact in it.

Creating and rendering realistic digital human characters is widely regarded as one of the hardest problems in computer graphics. Recently, at the "Game and AI Interaction" track of the MetaCon Metaverse Technology Conference hosted by 51CTO, Yang Dong, Platform Technology Director for Unity Greater China, gave a talk titled "Unity Digital Human Technology - Starting a Journey to the Metaverse", in which he presented a series of demos and introduced Unity's high-definition rendering pipeline technology in detail.

1. Introduction to Unity

Unity currently supports more computing platforms than any other engine in the world: nearly 30, including PC, Mac, Linux, iOS, Android, Switch, PlayStation, Xbox, and all AR/VR, MMAT and other devices. According to Yang Dong, if you are producing three-dimensional interactive content, Unity is currently the best choice. High-definition rendering is used to render high-definition content on machines equipped with discrete graphics cards, and today's realistic digital human technology relies on it.

Unity China currently has more than 300 full-time employees, and Unity has nearly 7,000 worldwide, more than 70% of whom are engineers. Unity China is headquartered in Shanghai, with offices and staff in Beijing and Guangzhou.

2. What are the strengths of the Unity engine?

Although Unity is not an open-source engine, its modules are highly customizable.

The picture shows the platforms Unity currently supports; the most common ones can be found among the icons. Many of the most popular games of recent years are also familiar names, such as Honor of Kings, Call of Duty, League of Legends, Genshin Impact, Fall Guys, Shining Nikki, and more. In fact, more than 70% of the 1,000 top-grossing games on the domestic and international Android and iOS charts were created with Unity, as were more than half of all Switch games. It may be hard to believe, but one high-definition, seemingly very complex hit game that first launched on Xbox was developed by a single person, which demonstrates the flexibility of the Unity engine and the power of its tool chain.

Unity is moving toward high performance. Its DOTS (Data-Oriented Technology Stack) has three main components: the C# Job System, the Entity Component System (ECS), and the Burst Compiler. The C# Job System lets games and applications make full use of the parallel computing power of multi-core CPUs, which matters because content built with the conventional object-oriented programming model runs mostly on the main thread. The Entity Component System separates data from the systems, that is, the game logic, which makes code easier to maintain and memory access more efficient. The Burst Compiler generates higher-performance machine code for the target platform.
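The separation of data from logic, and the splitting of work into independent jobs, can be illustrated with a small conceptual sketch. This is not Unity's API: the names `World`, `move_chunk`, and `movement_system` are hypothetical, the language is Python rather than C#, and Python threads only illustrate the scheduling idea without delivering the multi-core speedup a real job system provides.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class World:
    # Component data lives in plain parallel arrays indexed by entity id,
    # with no behavior attached to it.
    pos: list = field(default_factory=list)
    vel: list = field(default_factory=list)

    def create_entity(self, pos, vel):
        self.pos.append(pos)
        self.vel.append(vel)
        return len(self.pos) - 1


def move_chunk(world, start, end, dt):
    # The "system": stateless logic applied to a contiguous slice of data.
    for i in range(start, end):
        world.pos[i] += world.vel[i] * dt


def movement_system(world, dt, chunk_size=2, workers=4):
    # Split the entity range into disjoint chunks and schedule each one as
    # an independent job, so that no two jobs write to the same element.
    n = len(world.pos)
    chunks = [(s, min(s + chunk_size, n)) for s in range(0, n, chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(move_chunk, world, s, e, dt) for s, e in chunks]
        for f in futures:
            f.result()  # wait for completion and surface any exception
```

Because each job touches a disjoint slice of tightly packed arrays, the work can be scheduled across cores without locks, which is the property the Job System and ECS are designed to exploit.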

High image quality is another strength of Unity. If beautiful images could only be rendered on an expensive PC, Xbox, or PlayStation, a game's reach would be far too limited. Building on SRP, the Scriptable Render Pipeline, Unity provides two out-of-the-box rendering pipelines: the Universal Render Pipeline (URP) and the High Definition Render Pipeline (HDRP). As the name suggests, URP is supported on all platforms, so whether you are developing VR games, AR games, or mobile games, URP can do the rendering. HDRP targets PC, Mac, Linux, Xbox One, PlayStation 4, and above, and also supports real-time ray tracing.

3. How does the high-definition rendering pipeline achieve high-quality rendering of digital humans?

The digital human in "The Heretic" is not the first one Unity has produced, but it stands out because it is very lifelike and comes with a complete workflow. To achieve high-quality rendering like that of "The Heretic", the high-definition rendering pipeline is essential.

The digital human in "The Heretic" is based on a real person, a British theater actor in London named Jack. His whole body was scanned, with particular focus on his head, and the scan data was used to build the digital human. The expressions are very lifelike and were created with 4D volumetric video technology. Although this technology produces very lifelike expressions, it has one flaw: it cannot be driven in real time. It works well for animating the expressions of film and television characters, but not for real-time driving.

Two areas stand out in successful cases: skin rendering and eye rendering. Let's take a look at each.

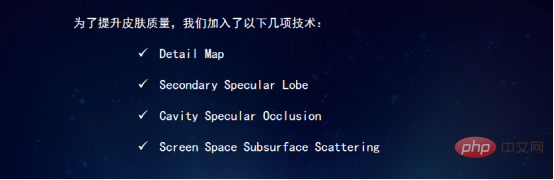

To improve the quality of the skin, the following techniques were added: a Detail Map; a Secondary Specular Lobe, which is a second layer of highlights; Cavity Specular Occlusion, which blocks highlights in skin cavities; and Screen Space Subsurface Scattering.

The first, the detail map, is mainly used to simulate fine detail on the skin surface. This texture is not large; it is a 1K texture.

The second, the Secondary Specular Lobe, is used to simulate the layer of oil on the surface of the skin. Skin produces oil, and without this layer it tends to look dry, so a second layer of highlights is added.

The third, Cavity Specular Occlusion, is used to mask highlights inside skin cavities. After the second layer of highlights is added, highlights also appear in the recessed areas of the skin, which is clearly unrealistic. These highlights need to be masked so that none appear in the cavities.
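The interplay of the second specular lobe and cavity occlusion can be sketched numerically. The following is a minimal illustration, not HDRP's shader code: it evaluates only the GGX normal distribution term (Fresnel and geometry terms are omitted), and the function and parameter names (`skin_specular`, `lobe_mix`, `cavity`) are assumptions made for this example.

```python
import math


def ggx_ndf(n_dot_h, roughness):
    # GGX / Trowbridge-Reitz normal distribution, with alpha = roughness^2.
    a2 = (roughness * roughness) ** 2
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def skin_specular(n_dot_h, rough_primary, rough_secondary, lobe_mix, cavity):
    # Blend a broad primary lobe with a sharper secondary lobe (the oily
    # layer), then mask the result with a cavity value in [0, 1], where
    # 0 means a fully occluded cavity and 1 means an open surface.
    primary = ggx_ndf(n_dot_h, rough_primary)
    secondary = ggx_ndf(n_dot_h, rough_secondary)
    blended = (1.0 - lobe_mix) * primary + lobe_mix * secondary
    return blended * cavity
```

With a sharper secondary lobe, the blended highlight becomes tighter and brighter at its center than the primary lobe alone, while a cavity value near zero suppresses it in recessed areas.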

The fourth, Screen Space Subsurface Scattering, is used to reproduce the look of skin itself. Skin exhibits the so-called 3S effect, that is, subsurface scattering, so rendering it clearly requires subsurface scattering. Traditionally, however, simulating skin in offline rendering software such as Maya or 3ds Max makes subsurface scattering very time-consuming to render. Unity offers two techniques for rendering subsurface scattering. The first is Screen Space Subsurface Scattering, which renders faster. The second uses ray tracing, which is more realistic.
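The idea behind the screen-space approach is to blur the diffuse lighting after it has been rendered, with a kernel whose shape follows a diffusion profile. The sketch below is illustrative only and is not Unity's implementation: it uses Burley's normalized diffusion profile as one commonly used choice, works on a single 1D row of pixels, and the function names, the scatter distances, and the clamp that avoids the singularity at zero distance are all assumptions.

```python
import math


def burley_profile(r, d):
    # Burley's normalized diffusion profile for scatter distance d.
    return (math.exp(-r / d) + math.exp(-r / (3.0 * d))) / (8.0 * math.pi * d * r)


def sss_kernel(d, radius, pixel_size=1.0):
    # Sample the profile at integer pixel offsets; the center sample is
    # clamped to half a pixel because the profile diverges at r = 0.
    weights = [burley_profile(max(abs(o), 0.5) * pixel_size, d)
               for o in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]  # normalize to preserve energy


def blur_row(row, kernel):
    # Convolve one row of diffuse lighting, clamping at the borders.
    radius = len(kernel) // 2
    n = len(row)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * row[j]
        out.append(acc)
    return out
```

Running the blur per color channel with a larger scatter distance for red than for blue lets red light bleed further across a shadow edge, which is what gives skin its soft, slightly reddish shadow boundaries.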

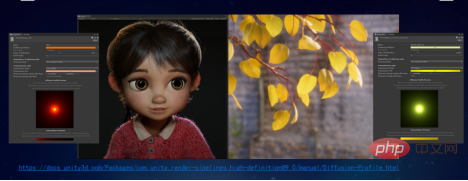

This is the little girl from the short film "Windup". She is also rendered with screen-space subsurface scattering, and the leaves on the right use subsurface scattering as well.

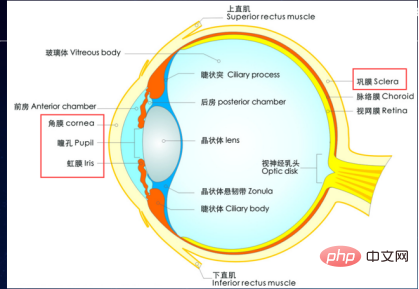

Next, let's talk about rendering eyes. The eye is a very complex object made up of many parts. In real-time rendering, simulating just four of them, the cornea, pupil, iris, and sclera, is already enough to achieve very realistic results.

As the picture shows, the eyeball is a very complex object. It looks different when viewed from the front and from the side, most notably because of refraction in front of the iris. The picture shows a very well-known digital human character presented at GDC.

The digital human's eyeball is modeled on the shape of a real eyeball, and a dedicated shader exposes its various parameters. These include its reflections, the normal maps on the sclera and iris and the effects achieved by applying different normal values, and even the occlusion cast by the eyelids, which is driven by a separate controller.
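The change in the iris's appearance between front and side views comes from light bending as it enters the cornea. A minimal sketch of that refraction step using Snell's law follows. It assumes a refractive index of about 1.376 for the cornea and uses a hypothetical `refract` helper modeled on the function of the same name in shading languages; it is not taken from Unity's eye shader.

```python
import math

CORNEA_IOR = 1.376  # approximate refractive index of the cornea (assumption)


def refract(incident, normal, eta):
    # incident: unit vector pointing toward the surface.
    # normal: unit surface normal pointing against the incident direction.
    # eta: ratio of refractive indices (outside / inside).
    # Returns the refracted unit vector, or None on total internal reflection.
    n_dot_i = sum(n * i for n, i in zip(normal, incident))
    k = 1.0 - eta * eta * (1.0 - n_dot_i * n_dot_i)
    if k < 0.0:
        return None
    scale = eta * n_dot_i + math.sqrt(k)
    return tuple(eta * i - scale * n for i, n in zip(incident, normal))


def view_ray_into_eye(view_dir, cornea_normal):
    # Bend the view ray as it passes from air (index 1.0) into the cornea.
    return refract(view_dir, cornea_normal, 1.0 / CORNEA_IOR)
```

A ray looking straight at the eye passes through unchanged, while an oblique ray bends toward the normal, so the iris appears shifted when the eye is seen from the side.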

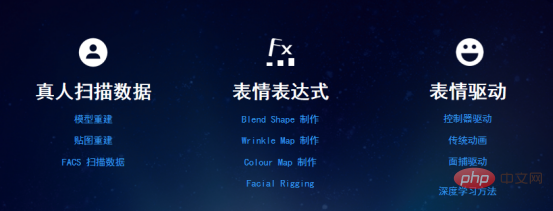

To summarize, expressions are built from scanned data of a real person using blend shapes, a Wrinkle Map, a Color Map, and facial rigging, and a controller drives them. There are of course many other ways to drive expressions. Rendering skin, eyes, or hair is relatively easy by comparison; driving expressions is the truly difficult part.
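At its core, a blend shape is a weighted sum of vertex offsets from a neutral mesh. The sketch below shows that arithmetic with a hypothetical `apply_blend_shapes` helper and made-up shape names; a real facial rig adds wrinkle maps, color maps, and a controller on top of it.

```python
def apply_blend_shapes(base, targets, weights):
    # base: list of (x, y, z) vertices of the neutral face.
    # targets: dict mapping shape name to a vertex list of the same length.
    # weights: dict mapping shape name to a weight, typically in [0, 1].
    result = [list(v) for v in base]
    for name, weight in weights.items():
        target = targets[name]
        for i, (b, t) in enumerate(zip(base, target)):
            for axis in range(3):
                # Add the weighted offset of this shape from the neutral pose.
                result[i][axis] += weight * (t[axis] - b[axis])
    return [tuple(v) for v in result]
```

Driving an expression then amounts to animating the weights over time, whether they come from an animation clip, a face-tracking device, or image data.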

Developers often ask why the Unity version they use cannot produce the effects shown in Unity's own demos. The reason is that those demos were created by a dedicated team with a great deal of effort, and the technologies they used were often not yet available in the released version at the time. Once a demo is finished, these technologies are gradually merged into subsequent versions and eventually become features that everyone can use.

4. What is the process of making a digital human?

After "The Heretic" was finished, the Unity China team made a digital human of its own, applying the technology from "The Heretic", including 4D scanning in a seated pose.

This is a real project, and its process is exactly the same as that of "The Heretic": cleaning the data, making the corresponding blend shapes, making the Wrinkle Map, building the rig, and then driving the expressions. A controller from the Asset Store uses Animation Clips and BlendShapes to play back the expression animation. There are also other ways to capture faces, such as tracking with markers, tracking with ARKit, and driving with image data.

In actual development, a camera captures facial expressions to test the expressions of the head model; driving them with image data is another option. One big difference in this project is that she has long, flowing hair. The hair is a solution developed by the Unity China team that works with both URP and HDRP. Its rendering is very different from the traditional hair-card approach: it can look very smooth, almost like a Head & Shoulders advertisement. The expressions are driven in real time through ARKit.

Unity has a digital human called Emma, whose expressions are driven entirely by Ziva Dynamics technology. The core of this technology is simulating the muscle groups beneath the model, that is, beneath the creature's skin. It can simulate the realistic movement of a dinosaur's body muscles, and it can also be applied to very realistic facial expressions for digital humans. Traditionally, a model is an empty shell with only an outer surface. With Ziva Dynamics' technology, there are anatomically realistic muscles underneath. When these muscles move realistically, the skin on the outside reaches a higher level of realism than traditional blend shapes or bone rigging can achieve.

In March this year, Unity released a trailer at GDC for its latest digital human demo, "Enemies". The expressions of this digital human are extremely realistic. It is worth mentioning that her hair uses a hair rendering solution for the HDRP high-definition rendering pipeline that Unity will release soon.

To learn more about the metaverse and digital humans, visit the official website of the MetaCon Metaverse Technology Conference: https://metacon.51cto.com/
