Node was originally created to build high-performance web servers. As a server-side JavaScript runtime, it is event-driven, uses asynchronous I/O, and runs JavaScript on a single thread. The asynchronous programming model built on the event loop lets Node handle high concurrency and greatly improves server performance. At the same time, because it keeps JavaScript's single-threaded nature, Node does not have to deal with multi-threading problems such as state synchronization and deadlock, and it avoids the performance overhead of thread context switching. These characteristics give Node a natural advantage in performance and concurrency, and many fast, scalable network applications can be built on it.

This article takes an in-depth look at the underlying implementation and execution mechanism of Node's asynchronous model and event loop. I hope you find it helpful.

Why does Node use asynchronous as its core programming model?

As mentioned before, Node was originally born to build high-performance web servers. Assuming that there are several sets of unrelated tasks to be completed in the business scenario, there are two mainstream modern solutions:

Single thread serial execution.

Multi-threaded parallel execution.

Single-threaded serial execution is a synchronous programming model. It matches the way programmers naturally think in sequence and makes code easier to write, but because it performs I/O synchronously, it can only process one request at a time. The server responds slowly, so the model does not suit high-concurrency scenarios. Moreover, because the I/O is blocking, the CPU sits waiting for it to complete and cannot do anything else, so its processing power is not fully used and efficiency ends up low.

The multi-threaded programming model can make effective use of multi-core CPUs, but issues such as state synchronization and deadlock give developers headaches.

Both models have their advantages, but each also has shortcomings in performance or development difficulty.

In addition, consider how quickly a client request is answered. If the client requests two resources at the same time, the response time of the synchronous approach is the sum of the response times of the two resources, while the response time of the asynchronous approach is the larger of the two, a clear performance advantage. As application complexity grows, the scenario becomes one of responding to n requests at the same time, and the advantage of asynchronous over synchronous becomes even more pronounced.
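The sum-versus-maximum difference can be sketched with two simulated resources. This is only an illustration: `fetchResource` is a hypothetical stand-in for real I/O, the delays of 100 ms and 150 ms are arbitrary, and the "one after the other" version models the synchronous approach by waiting for each result before starting the next request.

```javascript
// Hypothetical stand-in for real I/O: resolves with `name` after `ms` milliseconds.
function fetchResource(name, ms) {
  return new Promise((resolve) => setTimeout(() => resolve(name), ms));
}

// One after the other: the second request starts only when the first has finished.
async function fetchSequentially() {
  const start = Date.now();
  await fetchResource('a', 100);
  await fetchResource('b', 150);
  return Date.now() - start; // roughly 100 + 150 ms
}

// Both requests are in flight at the same time.
async function fetchConcurrently() {
  const start = Date.now();
  await Promise.all([fetchResource('a', 100), fetchResource('b', 150)]);
  return Date.now() - start; // roughly max(100, 150) ms
}

async function compare() {
  const sequentialMs = await fetchSequentially();
  const concurrentMs = await fetchConcurrently();
  return { sequentialMs, concurrentMs };
}

compare().then(({ sequentialMs, concurrentMs }) => {
  console.log(`sequential: ~${sequentialMs} ms, concurrent: ~${concurrentMs} ms`);
});
```

The exact numbers vary from run to run, but the sequential total should stay close to the sum of the two delays and the concurrent total close to the larger one.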

To sum up, Node gives its own answer: use a single thread to stay clear of multi-threading problems such as deadlock and state synchronization, and use asynchronous I/O to keep that single thread from blocking so that the CPU is put to better use. This is why Node uses asynchrony as its core programming model.

In addition, to make up for a single thread's inability to use multi-core CPUs, Node provides child processes, similar to Web Workers in the browser, so that worker processes can make efficient use of the CPU.
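As a rough sketch of the idea, the snippet below splits a CPU-bound sum across several child processes using the built-in `child_process` module. The helper names `sumRangeInChild` and `parallelSum` are made up for this example, and the cap of four workers is an arbitrary assumption; a real application would more likely use `fork()` with a separate worker file or the `cluster` module.

```javascript
const { spawn } = require('child_process');
const os = require('os');

// Hypothetical helper: sum the integers in [from, to) inside a child process.
// The child has its own V8 instance and event loop, so its loop does not block the parent.
function sumRangeInChild(from, to) {
  const script = `
    let sum = 0;
    for (let i = ${from}; i < ${to}; i++) sum += i;
    process.stdout.write(String(sum));
  `;
  return new Promise((resolve, reject) => {
    const child = spawn(process.execPath, ['-e', script]);
    let output = '';
    child.stdout.on('data', (chunk) => { output += chunk; });
    child.on('error', reject);
    child.on('close', (code) => {
      if (code === 0) resolve(Number(output));
      else reject(new Error(`child exited with code ${code}`));
    });
  });
}

// Split [0, total) into one slice per worker and add up the partial sums.
async function parallelSum(total, workers = Math.max(1, Math.min(4, os.cpus().length))) {
  const step = Math.ceil(total / workers);
  const jobs = [];
  for (let from = 0; from < total; from += step) {
    jobs.push(sumRangeInChild(from, Math.min(from + step, total)));
  }
  const partials = await Promise.all(jobs);
  return partials.reduce((a, b) => a + b, 0);
}

parallelSum(1000000).then((sum) => console.log(sum));
```

Starting a process has a noticeable cost, so this pattern only pays off when each slice of work is large enough.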

Having covered why to use asynchrony, how is it implemented?

What we usually call asynchronous operations fall into two categories: I/O-related operations such as file I/O and network I/O, and operations such as setTimeout and setInterval that have nothing to do with I/O. The asynchrony discussed here refers to the I/O-related kind, that is, asynchronous I/O.

Asynchronous I/O was proposed so that an I/O call does not block the code that follows it, and the time previously spent waiting for I/O to complete can be given to other work. Achieving this requires non-blocking I/O.

Blocking I/O means that after the CPU initiates an I/O call, it blocks until the I/O completes. With that in mind, non-blocking I/O is easy to understand: the call returns immediately instead of blocking and waiting, and the CPU can handle other work before the I/O completes. Compared with blocking I/O, non-blocking I/O clearly offers better performance.
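From JavaScript, the difference is visible in the order in which code runs. The sketch below uses Node's `fs.readFileSync` (blocking) and `fs.readFile` (non-blocking from the caller's point of view) on a throwaway temp file; the file name and the `runDemo` helper are assumptions made for this example.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Create a small throwaway file so the example is self-contained.
const file = path.join(os.tmpdir(), `blocking-demo-${process.pid}.txt`);
fs.writeFileSync(file, 'hello');
process.on('exit', () => fs.rmSync(file, { force: true }));

function runDemo(done) {
  const order = [];

  // Blocking: readFileSync does not return until the file has been read.
  const syncText = fs.readFileSync(file, 'utf8');
  order.push(`sync: ${syncText}`);

  // Non-blocking: readFile returns at once; the callback runs later.
  fs.readFile(file, 'utf8', (err, asyncText) => {
    if (err) return done(err);
    order.push(`async: ${asyncText}`);
    done(null, order);
  });

  // This line runs before the readFile callback above.
  order.push('after readFile call');
}

runDemo((err, order) => {
  if (err) throw err;
  console.log(order);
  // → [ 'sync: hello', 'after readFile call', 'async: hello' ]
});
```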

So, since non-blocking I/O is used and the CPU can return immediately after initiating an I/O call, how does it know that the I/O is completed? The answer is polling.

To learn the status of an I/O call in time, the CPU repeatedly re-issues the I/O operation to check whether it has completed. This technique of calling repeatedly to determine whether an operation is complete is called polling.

Obviously, polling makes the CPU perform status checks over and over, which wastes CPU resources. The polling interval is also hard to tune. If the interval is too long, the completion of the I/O operation is not noticed in time, which indirectly slows the application's response; if it is too short, the CPU inevitably spends more time on polling, which lowers the effective utilization of CPU resources.
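The interval trade-off can be illustrated with a toy simulation. Everything here is hypothetical: `startFakeIo` pretends to be a non-blocking operation whose `isDone()` check returns immediately, and the intervals of 5 ms and 80 ms are picked only to make the contrast visible.

```javascript
// Hypothetical non-blocking operation: `isDone()` returns immediately and only
// reports whether the result is ready yet.
function startFakeIo(durationMs) {
  const finishAt = Date.now() + durationMs;
  return { isDone: () => Date.now() >= finishAt };
}

// Poll `isDone()` every `intervalMs` and report how many checks were needed.
function pollUntilDone(io, intervalMs) {
  return new Promise((resolve) => {
    let checks = 0;
    const timer = setInterval(() => {
      checks += 1;
      if (io.isDone()) {
        clearInterval(timer);
        resolve(checks);
      }
    }, intervalMs);
  });
}

async function comparePollingIntervals() {
  const [fastChecks, slowChecks] = await Promise.all([
    pollUntilDone(startFakeIo(100), 5),  // many wasted checks, completion noticed quickly
    pollUntilDone(startFakeIo(100), 80), // few checks, completion noticed late
  ]);
  return { fastChecks, slowChecks };
}

comparePollingIntervals().then((result) => console.log(result));
```

The short interval performs many more checks for the same 100 ms operation, while the long interval notices completion only at its next check, well after the operation has finished.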

Therefore, although polling satisfies the requirement that non-blocking I/O must not block subsequent code, from the application's point of view it still counts as a form of synchronization, because the application still has to wait for the I/O to return completely and still spends a lot of time waiting.

The ideal asynchronous I/O would work like this: the application initiates a non-blocking call and, with no need to keep polling for the status of the I/O call, moves straight on to the next task. Once the I/O completes, the data is passed to the application through a signal or a callback.

How to implement this kind of asynchronous I/O? The answer is thread pool.

Although this article keeps saying that Node runs on a single thread, that only means the JavaScript code is executed on a single thread. I/O operations, which are unrelated to the main business logic, are carried out on other threads. This does not affect or block the main thread; on the contrary, it improves the main thread's efficiency and makes asynchronous I/O possible.

With a thread pool, the main thread only issues the I/O call, while other threads perform blocking I/O, or non-blocking I/O plus polling, to obtain the data. The data is then handed back through inter-thread communication, which makes asynchronous I/O straightforward to implement.
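The division of labor can be imitated in JavaScript with the built-in `worker_threads` module. This is only a conceptual sketch, not how Node implements it: the real thread pool lives in native code and reuses a fixed set of threads, whereas the hypothetical `readFileOnWorker` helper below starts one worker per call.

```javascript
const { Worker } = require('worker_threads');
const fs = require('fs');
const os = require('os');
const path = require('path');

// Code executed on the worker thread: do the blocking read there and send
// the result back to the main thread as a message.
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  const fs = require('fs');
  try {
    parentPort.postMessage({ data: fs.readFileSync(workerData.path, 'utf8') });
  } catch (err) {
    parentPort.postMessage({ error: err.message });
  }
`;

// Hypothetical helper: the main thread only issues the call and registers a callback.
function readFileOnWorker(filePath, callback) {
  const worker = new Worker(workerSource, { eval: true, workerData: { path: filePath } });
  worker.once('message', ({ error, data }) => {
    if (error) callback(new Error(error));
    else callback(null, data);
  });
  worker.once('error', callback);
}

// Usage: a throwaway temp file keeps the example self-contained.
const file = path.join(os.tmpdir(), `worker-demo-${process.pid}.txt`);
fs.writeFileSync(file, 'hello from disk');
process.on('exit', () => fs.rmSync(file, { force: true }));

readFileOnWorker(file, (err, data) => {
  if (err) throw err;
  console.log(data);
});
console.log('main thread keeps running'); // printed before the file contents
```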

The main thread issues the I/O call, the thread pool performs the I/O operation and obtains the data, and the data is then passed back to the main thread through inter-thread communication, which completes one I/O call. The main thread exposes the data to the user through a callback function, and the user uses the data to carry out business-level logic. This is the complete asynchronous I/O flow in Node. Users do not need to care about the tedious low-level details; they only call the asynchronous API that Node wraps and pass in a callback that handles the business logic, as shown below:

const fs = require("fs");

fs.readFile('example.js', (data) => {

// 进行业务逻辑的处理

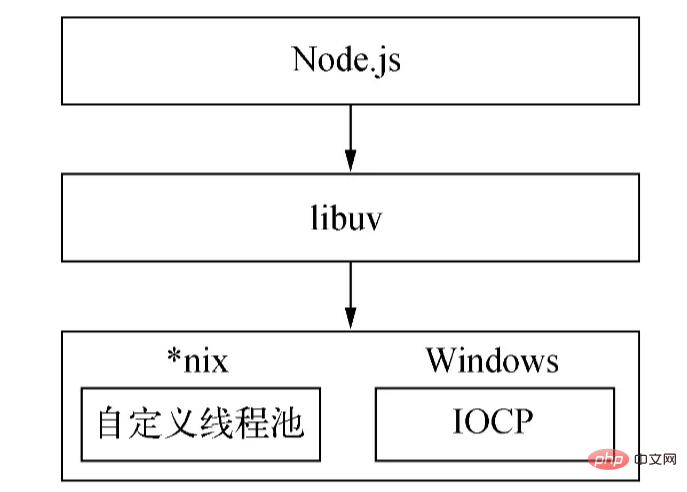

});## The asynchronous underlying implementation mechanism of #Nodejs is different under different platforms: under Windows, IOCP is mainly used to send I/O calls to the system kernel and obtain completed I/O operations from the kernel, coupled with an event loop, to This completes the process of asynchronous I/O; this process is implemented through epoll under Linux; through kqueue under FreeBSD, and through Event ports under Solaris. The thread pool is directly provided by the kernel (IOCP) under Windows, and the *nix series is implemented by libuv itself.

Because of the differences between Windows and the *nix platforms, Node provides libuv as an abstraction layer, so that all platform compatibility decisions are made in this layer and the upper layers of Node stay independent of the underlying custom thread pool and IOCP. At compile time, Node checks the platform and selectively compiles the source files in either the unix directory or the win directory into the target program.

(The size of the thread pool can be set through the environment variable UV_THREADPOOL_SIZE. The default value is 4, and users can adjust it to suit their situation.)
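One way to observe the effect of the pool size is to start more thread-pool jobs than there are threads. The sketch below uses `crypto.pbkdf2`, which runs on the libuv thread pool; the `hashMany` helper, the iteration count, and the choice of eight jobs are assumptions for illustration.

```javascript
const crypto = require('crypto');

// Start `count` CPU-heavy hashes at once and record when each one finishes.
function hashMany(count) {
  const start = Date.now();
  const jobs = Array.from({ length: count }, (_, i) =>
    new Promise((resolve, reject) => {
      crypto.pbkdf2('secret', 'salt', 100000, 64, 'sha512', (err) => {
        if (err) reject(err);
        else resolve({ job: i, finishedAfterMs: Date.now() - start });
      });
    }));
  return Promise.all(jobs);
}

hashMany(8).then((results) => console.table(results));
```

With the default of four threads on a machine with at least four cores, the eight jobs tend to finish in two batches. Running the same script as `UV_THREADPOOL_SIZE=8 node demo.js` (where `demo.js` is whatever file holds the snippet) should bring the finish times closer together, although the exact timings depend on the machine.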

pending callbacks: callbacks for some system operations, such as certain TCP errors on some *nix systems, are handled in this phase. In addition, some I/O callbacks that should have run in the poll phase of the previous loop iteration are deferred to this phase.

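Retrieve new I/O events and execute I/O-related callbacks (almost all callbacks except close callbacks, callbacks scheduled by timers, and setImmediate()); Node will block here when appropriate.

The poll phase is the most important phase of the event loop. Callbacks for network I/O and file I/O are mainly processed here. The phase has two main functions:

Calculating how long it should block and poll for I/O.

Processing the callbacks in the I/O queue.

When the event loop enters the poll phase and no timers are scheduled:

If the poll queue is not empty, the event loop iterates over the queue and executes the callbacks synchronously until the queue is empty or the maximum number of callbacks allowed has been reached.

If the poll queue is empty, one of two things happens:

If there are setImmediate() callbacks to run, the poll phase ends immediately and the loop enters the check phase to execute them.

If there are no setImmediate() callbacks to run, the event loop stays in this phase and waits for callbacks to be added to the queue, then executes them immediately. The loop keeps waiting until the timeout is reached. It waits here because Node mainly handles I/O, and staying in this phase lets it respond to I/O more promptly.

Once the poll queue is empty, the event loop checks for timers whose time threshold has been reached. If one or more timers are ready, the event loop wraps back to the timers phase to execute their callbacks.

The check phase executes the setImmediate() callbacks in order.

The close callbacks phase executes callbacks that release resources, such as socket.on('close', ...). Running these a little later does little harm, so this phase has the lowest priority.

When a Node process starts, it initializes the event loop, executes the user's input code (which may call asynchronous APIs, schedule timers, and so on), and then begins processing the event loop: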

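   ┌───────────────────────────┐
┌─>│           timers          │
│  └─────────────┬─────────────┘
│  ┌─────────────┴─────────────┐
│  │     pending callbacks     │
│  └─────────────┬─────────────┘
│  ┌─────────────┴─────────────┐
│  │       idle, prepare       │
│  └─────────────┬─────────────┘      ┌───────────────┐
│  ┌─────────────┴─────────────┐      │   incoming:   │
│  │           poll            │<─────┤  connections, │
│  └─────────────┬─────────────┘      │   data, etc.  │
│  ┌─────────────┴─────────────┐      └───────────────┘
│  │           check           │
│  └─────────────┬─────────────┘
│  ┌─────────────┴─────────────┐
└──┤      close callbacks      │
   └───────────────────────────┘

Each iteration of the event loop (commonly called a tick) enters the seven phases (counting idle and prepare separately) in the order shown above. Each phase executes a limited number of callbacks from its queue. It does not drain the queue completely, so that the current phase cannot run for too long and keep the next phase from executing.

OK, that is the basic execution flow of the event loop. Now let's look at another problem.

Consider the following scenario:

const net = require('net');

const server = net.createServer(() => {}).listen(8080);

server.on('listening', () => {});

When the server has successfully bound to port 8080, that is, when listen() has been called successfully, the callback for the listening event has not been attached yet. If the event were emitted right at that moment, the callback we pass in afterwards would never run.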

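Consider another problem. During development we may have lower-priority tasks such as handling errors or cleaning up unneeded resources. Running this logic synchronously hurts the efficiency of the current task; running it asynchronously, for example by passing it as a callback to setImmediate(), gives no guarantee about when it runs, so it is not timely enough. How should such logic be handled?

To address these problems, Node borrowed from the browser and implemented its own microtask mechanism. In Node, besides the callbacks passed to new Promise().then(), which are wrapped as microtasks, the callbacks of process.nextTick() are also wrapped as microtasks, and the latter have higher priority than the former.

With microtasks in the picture, what does the event loop's execution flow look like? In other words, when are microtasks executed?

In Node 11 and later, the microtask queue is run and emptied as soon as a single task within a phase finishes.

Before Node 11, microtasks run only after a whole phase has finished.

So, with microtasks, each iteration of the event loop first executes one task in the timers phase, then drains the process.nextTick() queue followed by the new Promise().then() microtask queue, and then moves on to the next task in the timers phase or to a task in the next phase (the pending phase), and so on in this order.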
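The ordering described above can be checked with a short script. It is a minimal sketch and the `runDemo` name is made up for this example: two timers are scheduled, and the first one queues a process.nextTick() callback and a promise callback.

```javascript
function runDemo() {
  return new Promise((resolve) => {
    const order = [];

    setTimeout(() => {
      order.push('timeout 1');
      // Both callbacks are queued while the first timer callback is running.
      process.nextTick(() => order.push('nextTick'));
      Promise.resolve().then(() => order.push('promise'));
    }, 0);

    setTimeout(() => {
      order.push('timeout 2');
      resolve(order);
    }, 0);
  });
}

runDemo().then((order) => console.log(order.join(' -> ')));
// Node 11 and later: timeout 1 -> nextTick -> promise -> timeout 2
```

On Node 11 and later, the nextTick and promise callbacks run between the two timer callbacks, with nextTick first. On older versions both timer callbacks would run before either of them.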

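With process.nextTick(), Node can solve the port-binding problem above: inside the listen() method, the emission of the listening event is wrapped in a callback and passed to process.nextTick(), as the following pseudocode shows:

function listen() {
  // Bind and start listening on the port
  ...
  // Wrap the emission of the `listening` event in a callback passed to `process.nextTick()`
  process.nextTick(() => {
    emit('listening');
  });
}

Once the current code has finished running, the microtasks start to execute, the listening event is emitted, and its callback is invoked.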
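A runnable version of the same idea can be built on EventEmitter. `MiniServer` and `tryListen` are hypothetical names used only to show the timing; no real port is bound, and the `defer` option exists only so both behaviors can be compared side by side.

```javascript
const { EventEmitter } = require('events');

// Hypothetical stand-in for net.Server, only to show the timing of the event.
class MiniServer extends EventEmitter {
  listen({ defer }) {
    // ... binding the port would happen here ...
    if (defer) {
      // Emitted after the caller's current code has finished running.
      process.nextTick(() => this.emit('listening'));
    } else {
      // Emitted before the caller has had a chance to attach a listener.
      this.emit('listening');
    }
    return this;
  }
}

function tryListen(defer) {
  return new Promise((resolve) => {
    let fired = false;
    const server = new MiniServer().listen({ defer });
    server.on('listening', () => { fired = true; });
    // Report the result once the nextTick queue has had a chance to run.
    setImmediate(() => resolve(fired));
  });
}

tryListen(false).then((fired) => console.log('synchronous emit:', fired)); // false
tryListen(true).then((fired) => console.log('deferred emit:', fired));     // true
```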

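Because asynchrony is inherently unpredictable and complex, using Node's asynchronous APIs can still produce behavior that is counterintuitive or unexpected, even once we understand how the event loop works.

For example, the order in which timers (setTimeout, setImmediate) run depends on the context in which they are called. If both are called from the top-level context, their timing depends on the performance of the process or machine.

Consider the following example:

setTimeout(() => {
  console.log('timeout');
}, 0);

setImmediate(() => {
  console.log('immediate');
});

What does this code output? Based on the description of the event loop so far, you might answer: because the timers phase runs before the check phase, the setTimeout() callback runs first, followed by the setImmediate() callback.

In fact, the output of this code is nondeterministic: timeout may be printed first, or immediate may be. Both timers are called from the global context, and by the time the event loop starts and reaches the timers phase, more than 1 ms may or may not have elapsed, depending on the machine's performance. Whether the setTimeout() callback runs in the first timers phase is therefore uncertain, which is why the output varies.

(When delay, the second argument of setTimeout, is greater than 2147483647 or less than 1, it is set to 1.)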

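Now look at the following code:

const fs = require('fs');

fs.readFile(__filename, () => {
  setTimeout(() => {
    console.log('timeout');
  }, 0);

  setImmediate(() => {
    console.log('immediate');
  });
});

Here both timers are scheduled inside the callback passed to readFile, which runs in the poll phase. When the poll phase ends, the event loop moves on to the check phase, so the setImmediate() callback always runs first, while the setTimeout() callback has to wait for the timers phase of the next loop iteration. The output is therefore always: immediate timeout.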

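Those are the timer-related points to watch for when using Node. Beyond that, pay attention to the execution order of process.nextTick(), new Promise().then(), and setImmediate(). This part is relatively simple and was covered earlier, so it is not repeated here.

Starting from two questions, why asynchrony is needed and how it is implemented, this article has explained in some detail how Node's event loop works and pointed out a few things to watch for. I hope it has been helpful.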

