This article introduces three common Redis cache problems: cache penetration, cache breakdown, and cache avalanche. I hope it will be helpful to everyone.


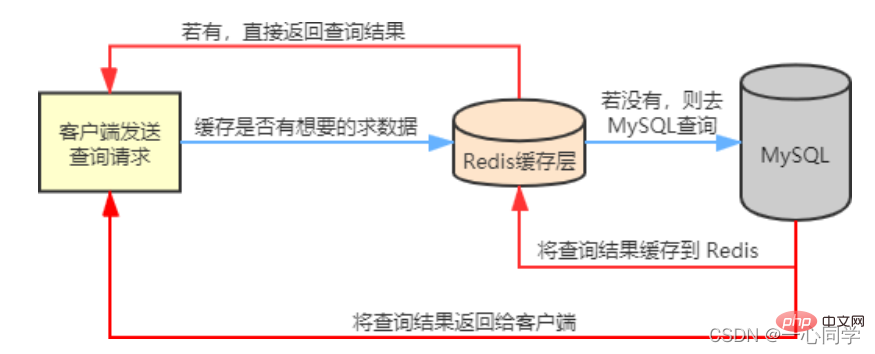

In real business scenarios, Redis is generally used together with another database, such as the relational database MySQL, to reduce the pressure on the back-end database.

Redis caches frequently queried data from MySQL, such as hot data, so that when users access it, the data does not have to be queried from MySQL but can be read directly from the Redis cache. This reduces the read pressure on the back-end database.

If the data a user queries is not in Redis, the query request is forwarded to the MySQL database. When MySQL returns the data to the client, the data is also cached in Redis, so that subsequent reads can get it directly from Redis. The flow chart is as follows:
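The read path above is usually called the cache-aside pattern. Below is a minimal Python sketch of it, assuming two hypothetical in-memory stand-ins: `FakeRedis` (plays Redis GET/SET with an optional expiry) and `FakeMySQL` (plays the database and counts how many queries reach it). The names and the 60-second TTL are illustrative, not taken from the article.

```python
import time
from typing import Optional


class FakeRedis:
    """In-memory stand-in for Redis GET/SET with an optional expiry (EX)."""

    def __init__(self) -> None:
        self._data = {}  # key -> (value, expires_at or None)

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and time.monotonic() >= expires_at:
            del self._data[key]  # lazily drop an expired key
            return None
        return value

    def set(self, key: str, value: str, ex: Optional[float] = None) -> None:
        expires_at = time.monotonic() + ex if ex is not None else None
        self._data[key] = (value, expires_at)


class FakeMySQL:
    """Stand-in for MySQL that counts how many queries reach it."""

    def __init__(self, rows: dict) -> None:
        self.rows = rows
        self.queries = 0

    def select(self, key: str) -> Optional[str]:
        self.queries += 1
        return self.rows.get(key)


def get_with_cache(cache: FakeRedis, db: FakeMySQL, key: str, ttl: float = 60.0) -> Optional[str]:
    """Cache-aside read: try Redis first, fall back to MySQL, then fill Redis."""
    value = cache.get(key)
    if value is not None:
        return value                   # cache hit: MySQL is not touched
    value = db.select(key)             # cache miss: forward the query to MySQL
    if value is not None:
        cache.set(key, value, ex=ttl)  # cache it so the next read hits Redis
    return value


cache, db = FakeRedis(), FakeMySQL({"user:1": "alice"})
get_with_cache(cache, db, "user:1")  # miss: served by MySQL, then cached
get_with_cache(cache, db, "user:1")  # hit: served by Redis
print(db.queries)  # 1
```

Because `get_with_cache` only caches values that MySQL actually returns, a key that does not exist in MySQL is never cached, and every lookup for it reaches the database. That is the cache penetration problem discussed below.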

Of course, using Redis as a cache is not always smooth sailing. There are three common cache problems:

- Cache penetration

- Cache breakdown

- Cache avalanche

## 2. Cache penetration

### 2.1 Introduction

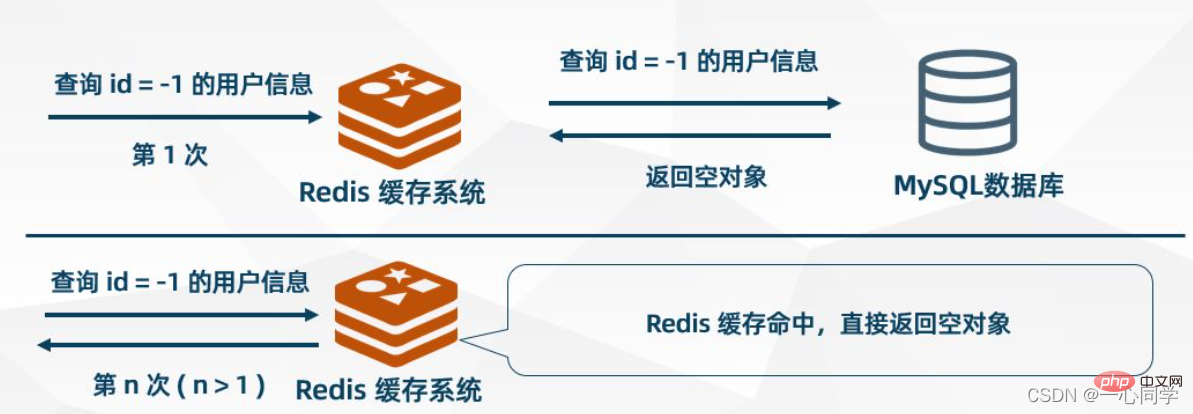

Cache penetration occurs when the data a user queries does not exist in Redis, that is, the cache misses. The query request is then forwarded to the persistence-layer database MySQL, which finds that the data does not exist there either and can only return an empty object, meaning the query fails. Because nothing is written back to the cache, every repeat of such a request also reaches MySQL. If there are many such requests, or an attacker uses them for a malicious attack, they put heavy pressure on MySQL and can even bring it down. This phenomenon is called cache penetration.

### 2.2 Solutions

The first solution is to cache empty objects. When MySQL returns an empty object, Redis caches that object and sets an expiration time for it. When the user sends the same request again, the empty object is obtained from the cache, so the request is blocked at the cache layer and the back-end database is protected. This approach has a drawback, however: although such requests no longer reach MySQL, the cached empty objects occupy Redis cache space.
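A minimal sketch of caching empty objects follows. It assumes a hypothetical `EMPTY` marker string (Redis cannot store "no value", so some placeholder is needed) and in-memory stand-ins `TTLCache` and `CountingDB` instead of real Redis and MySQL. The 30-second TTL for empty objects is an illustrative choice.

```python
import time
from typing import Optional

EMPTY = "__EMPTY__"  # hypothetical marker meaning "MySQL has no such row"


class TTLCache:
    """In-memory stand-in for Redis where every key has an expiry time."""

    def __init__(self) -> None:
        self._data = {}  # key -> (value, expires_at)

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None or time.monotonic() >= entry[1]:
            self._data.pop(key, None)  # drop the key if it has expired
            return None
        return entry[0]

    def set(self, key: str, value: str, ex: float) -> None:
        self._data[key] = (value, time.monotonic() + ex)


class CountingDB:
    """Stand-in for MySQL that counts how many queries reach it."""

    def __init__(self, rows: dict) -> None:
        self.rows = rows
        self.queries = 0

    def select(self, key: str) -> Optional[str]:
        self.queries += 1
        return self.rows.get(key)


def get_caching_empty(cache: TTLCache, db: CountingDB, key: str,
                      ttl: float = 300.0, empty_ttl: float = 30.0) -> Optional[str]:
    cached = cache.get(key)
    if cached == EMPTY:
        return None                          # cached empty object: stop at the cache layer
    if cached is not None:
        return cached                        # ordinary cache hit
    value = db.select(key)
    if value is None:
        cache.set(key, EMPTY, ex=empty_ttl)  # remember "not found" for a short time
        return None
    cache.set(key, value, ex=ttl)
    return value
```

Giving empty objects a shorter TTL than real data limits the Redis space they occupy, which is the drawback mentioned above, and lets a row that is inserted into MySQL later become visible once the marker expires.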

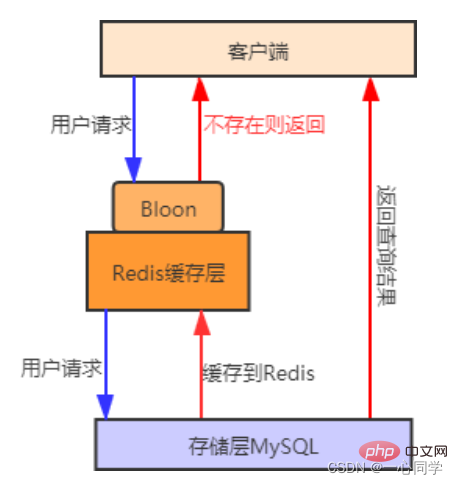

The second solution is a Bloom filter. First, store all keys of the hotspot data that users may access in a Bloom filter (this step is also called cache preheating). When a user sends a request, it first passes through the Bloom filter, which judges whether the requested key exists. If the key does not exist, the request is rejected directly; otherwise, the query continues as usual: first query the cache, and if the data is not cached, query the database. Compared with the first method, the Bloom filter approach is more efficient and practical, because it does not need to store an empty object in Redis for every missing key. The process diagram is as follows:
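Below is a minimal Bloom filter sketch with a guarded lookup, assuming plain dicts stand in for Redis and MySQL. The bit-array size, the number of hash positions, and the SHA-256 double-hashing scheme are illustrative choices; a production setup would more likely use an existing library or the RedisBloom module. A Bloom filter never reports an added key as absent, but it can report a key that was never added as present (a false positive), so a small fraction of nonexistent keys still fall through to the cache and the database.

```python
import hashlib
from typing import Optional


class BloomFilter:
    """Minimal Bloom filter: an m-bit array and k bit positions per key."""

    def __init__(self, m_bits: int = 1024, k: int = 3) -> None:
        self.m = m_bits
        self.k = k
        self.bits = bytearray((m_bits + 7) // 8)

    def _positions(self, key: str):
        # Derive k positions from one SHA-256 digest (double hashing).
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        return [(h1 + i * h2) % self.m for i in range(self.k)]

    def add(self, key: str) -> None:
        for p in self._positions(key):
            self.bits[p // 8] |= 1 << (p % 8)

    def might_contain(self, key: str) -> bool:
        # False means "definitely absent"; True means "possibly present".
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(key))


def guarded_get(bloom: BloomFilter, cache: dict, db_rows: dict, key: str) -> Optional[str]:
    if not bloom.might_contain(key):
        return None              # rejected before touching Redis or MySQL
    if key in cache:
        return cache[key]        # cache hit
    value = db_rows.get(key)     # cache miss: query the database
    if value is not None:
        cache[key] = value
    return value
```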

Cache preheating means loading relevant data into the Redis cache in advance when the system starts, so that the data does not have to be loaded at the moment a user requests it.
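Cache preheating can be as simple as copying known hot rows into the cache at startup. This sketch uses plain dicts for Redis and MySQL; the `preheat` function and its hot-key list are hypothetical, and how the hot keys are chosen (for example from access logs) is left open.

```python
from typing import Dict, Iterable


def preheat(cache: Dict[str, str], db_rows: Dict[str, str], hot_keys: Iterable[str]) -> int:
    """Copy hot rows from the database into the cache before traffic arrives.

    Returns how many keys were loaded; keys missing from the database are skipped.
    """
    loaded = 0
    for key in hot_keys:
        value = db_rows.get(key)
        if value is not None:
            cache[key] = value
            loaded += 1
    return loaded


# Hypothetical startup hook.
startup_cache: Dict[str, str] = {}
print(preheat(startup_cache, {"a": "1", "b": "2"}, ["a", "b", "missing"]))  # 2
```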

Both solutions can solve the problem of cache penetration, but their usage scenarios are different:

Cache empty objects: Suitable for scenarios where the number of keys for empty data is limited and the probability of repeated key requests is high.

Bloom filter: Suitable for scenarios where the keys of empty data are different and the probability of repeated key requests is low.

## 3. Cache breakdown

### 3.1 Introduction

Cache breakdown occurs when the data a user queries does not exist in the cache but does exist in the back-end database. It is usually caused by a key expiring in the cache. For example, a hot key continuously receives a large number of concurrent requests; if that key suddenly expires at some moment, all of those concurrent requests go straight to the back-end database, and its load spikes instantly. This phenomenon is called cache breakdown.

### 3.2 Solutions

The first solution is to make hotspot data never expire.
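The article does not say how a never-expiring hot key gets updated. One common variant, often called logical expiration, is sketched below as an assumption rather than the article's method: the key has no Redis TTL, each entry stores its own refresh deadline, and once that deadline passes the old value keeps being served while a single background refresh reloads it. `NeverExpireCache`, `loader`, and `refresh_every` are hypothetical names; the `clock` and `spawn` parameters exist only so the behaviour can be tested deterministically.

```python
import threading
import time
from typing import Callable, Dict, Optional, Set, Tuple


class NeverExpireCache:
    """Hot keys are stored without a physical TTL, so they never disappear from
    the cache. Each entry carries a logical refresh deadline instead; once it
    passes, callers keep receiving the old value while one refresh reloads it."""

    def __init__(self, loader: Callable[[str], str], refresh_every: float,
                 clock: Callable[[], float] = time.monotonic,
                 spawn: Optional[Callable] = None) -> None:
        self.loader = loader                # hypothetical: key -> value, e.g. a MySQL query
        self.refresh_every = refresh_every  # seconds between logical refreshes
        self.clock = clock
        # By default each refresh runs in a background thread.
        self.spawn = spawn or (lambda fn, key: threading.Thread(
            target=fn, args=(key,), daemon=True).start())
        self._data: Dict[str, Tuple[str, float]] = {}  # key -> (value, refresh_after)
        self._refreshing: Set[str] = set()             # keys with a refresh in flight
        self._lock = threading.Lock()

    def preload(self, key: str) -> None:
        """Load a hot key before serving traffic; get() assumes this was done."""
        self._store(key, self.loader(key))

    def get(self, key: str) -> str:
        with self._lock:
            value, refresh_after = self._data[key]
            due = self.clock() >= refresh_after and key not in self._refreshing
            if due:
                self._refreshing.add(key)  # only the first caller triggers a refresh
        if due:
            self.spawn(self._refresh, key)
        return value  # may be stale until the refresh finishes

    def _refresh(self, key: str) -> None:
        try:
            self._store(key, self.loader(key))
        finally:
            with self._lock:
                self._refreshing.discard(key)

    def _store(self, key: str, value: str) -> None:
        with self._lock:
            self._data[key] = (value, self.clock() + self.refresh_every)
```

The stale value returned while a refresh is in flight is exactly the data inconsistency that the comparison below attributes to this solution.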

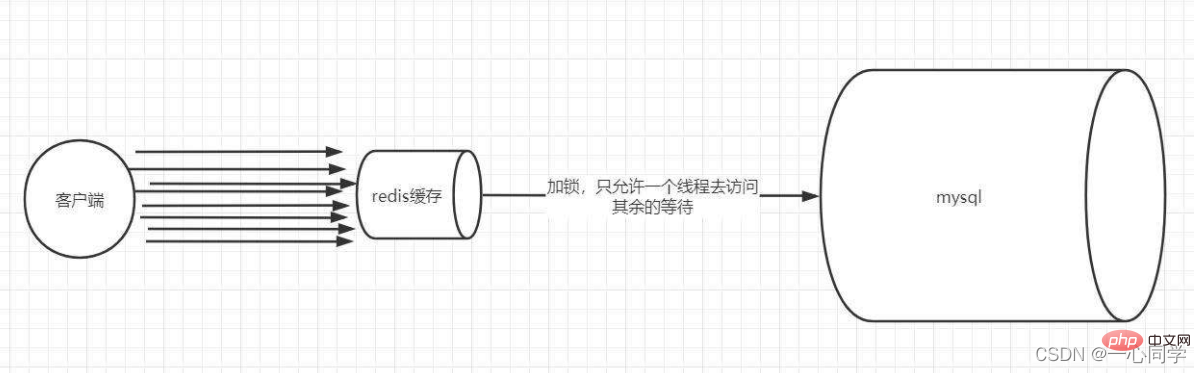

The second solution is to use a distributed lock to redesign how the cache is used, as follows:

- Lock: When querying data by key, first query the cache. If the data is not there, acquire a distributed lock. The first process to acquire the lock queries the back-end database and writes the result to Redis.

- Unlock: Other processes that find the lock held by another process wait. After the lock is released, they read the cached key in turn.
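The lock and unlock steps above can be sketched in Python as follows. `FakeRedis` is a hypothetical in-memory stand-in whose `set(..., ex=..., nx=True)` mimics Redis `SET key value NX EX seconds`, the usual way to take a simple Redis lock; `delete_if_equals` stands in for the compare-and-delete that real deployments typically implement with a small Lua script. `get_with_mutex`, the `lock:` key prefix, and all timeouts are illustrative. The lock expiry (`lock_ttl`) is what keeps a crashed holder from leaving the lock held forever, which addresses the deadlock risk noted below.

```python
import threading
import time
import uuid
from typing import Callable, Optional


class FakeRedis:
    """Thread-safe in-memory stand-in for the Redis commands this sketch needs."""

    def __init__(self) -> None:
        self._data = {}  # key -> (value, expires_at or None)
        self._mu = threading.Lock()

    def _live(self, key: str):
        entry = self._data.get(key)
        if entry is not None and entry[1] is not None and time.monotonic() >= entry[1]:
            del self._data[key]  # expired
            return None
        return entry

    def get(self, key: str) -> Optional[str]:
        with self._mu:
            entry = self._live(key)
            return entry[0] if entry is not None else None

    def set(self, key: str, value: str, ex: Optional[float] = None, nx: bool = False) -> bool:
        """Mimics SET key value [EX seconds] [NX]; returns False when NX blocks the write."""
        with self._mu:
            if nx and self._live(key) is not None:
                return False
            self._data[key] = (value, time.monotonic() + ex if ex is not None else None)
            return True

    def delete_if_equals(self, key: str, value: str) -> None:
        """Release a lock only if we still own it."""
        with self._mu:
            entry = self._live(key)
            if entry is not None and entry[0] == value:
                del self._data[key]


def get_with_mutex(r: FakeRedis, load_from_db: Callable[[str], Optional[str]], key: str,
                   ttl: float = 60, lock_ttl: float = 10, retry_delay: float = 0.01) -> Optional[str]:
    lock_key = f"lock:{key}"
    while True:
        value = r.get(key)
        if value is not None:
            return value                                # cache hit
        token = str(uuid.uuid4())                       # identifies this lock holder
        if r.set(lock_key, token, ex=lock_ttl, nx=True):  # "Lock" step
            try:
                value = r.get(key)                      # double-check: another holder may have rebuilt it
                if value is None:
                    value = load_from_db(key)           # only the lock holder queries the database
                    if value is not None:
                        r.set(key, value, ex=ttl)
                return value
            finally:
                r.delete_if_equals(lock_key, token)     # "Unlock" step
        time.sleep(retry_delay)                         # lock held elsewhere: wait, then retry
```

With this sketch, twenty threads requesting the same missing hot key cause a single database query; the others wait, retry, and then read the rebuilt value from the cache. The double-check after acquiring the lock matters: without it, a thread that missed the cache just before the rebuild could acquire the lock afterwards and query the database again.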

Never expire: Because this solution sets no real expiration time, it eliminates the series of hazards caused by hot keys expiring, but it can lead to data inconsistency and increases code complexity.

Mutex lock: This solution is relatively simple but has hidden risks: if rebuilding the cache fails or takes a long time, there is a risk of deadlock and of thread-pool blocking. On the other hand, it effectively reduces the load on back-end storage and achieves better consistency.

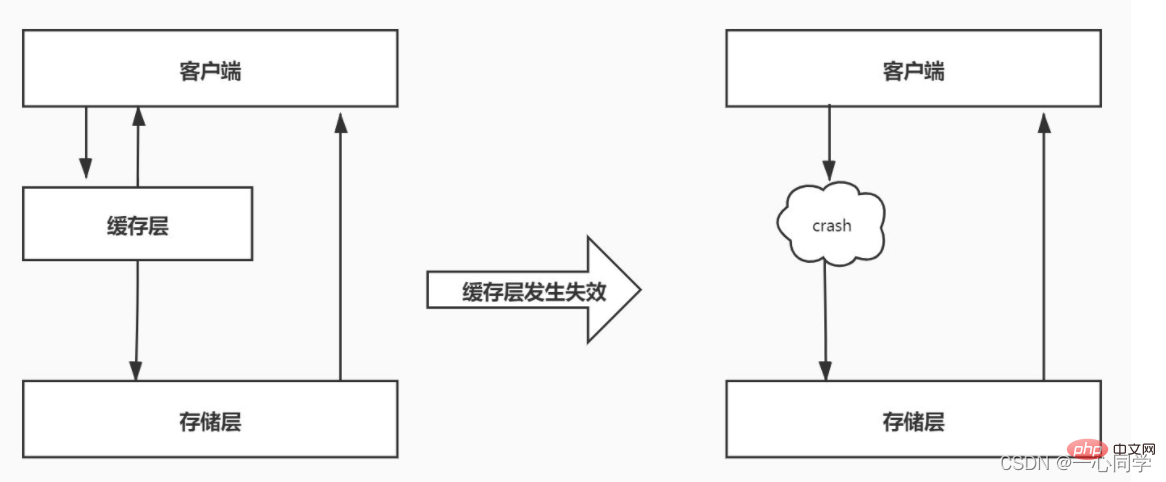

##Cache avalanche refers to large batches in the cache keys expire at the same time, and the amount of data access is very large at this time, causing a sudden increase in pressure on the back-end database, and even crashing. This phenomenon is called cache avalanche. It is different from cache breakdown. Cache breakdown occurs when a certain hot key suddenly expires when the amount of concurrency is particularly large, while cache avalanche occurs when a large number of keys expire at the same time, so they are not of the same order of magnitude at all.

### 4.2 Solutions

The first solution is to apply the "hotspot data never expires" method, which reduces the number of keys that expire at the same time. In addition, set a random expiration time for each key so that keys do not expire in a concentrated burst.
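Random expiration can be sketched as adding a jitter to a base TTL. `ttl_with_jitter` and the 3600 s base / 300 s jitter values are illustrative assumptions; with the redis-py client, the result would be passed as the `ex` argument of `set`.

```python
import random
from collections import Counter


def ttl_with_jitter(base_ttl: int, max_jitter: int, rng=random) -> int:
    """Base TTL plus a random 0..max_jitter seconds, so keys cached together
    do not all expire at the same moment."""
    return base_ttl + rng.randint(0, max_jitter)


rng = random.Random(42)                     # seeded only to make the demo repeatable
fixed = Counter(3600 for _ in range(1000))  # every key expires in the same second
jittered = Counter(ttl_with_jitter(3600, 300, rng) for _ in range(1000))
print(max(fixed.values()), max(jittered.values()))
```

The demo prints how many of 1000 keys, all written at the same moment, would expire in the busiest second without and with jitter.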

The second solution is Redis high availability: build a Redis cluster so that if one node fails, the others can continue to work.


The above is the detailed content of "Let's talk about the three caching issues of Redis". For more information, please follow other related articles on the PHP Chinese website!
