Every holiday, people in first- and second-tier cities who are heading home or traveling for fun almost always face the same problem: grabbing train tickets!

12306 ticket grabbing: thoughts prompted by extreme concurrency

Although tickets can be booked in most cases now, I believe everyone is all too familiar with the scene where tickets are gone the moment they are released.

Especially during the Spring Festival, people not only use 12306 but also turn to "Zhixing" and other ticket-grabbing software. Hundreds of millions of people across the country are grabbing tickets during this period.

The 12306 service handles a QPS that arguably no other flash sale system in the world surpasses; millions of concurrent requests are routine.

I have studied the server-side architecture of 12306 and learned a lot from its system design. Here I will share it with you and simulate an example: how the system can keep providing normal, stable service when 1 million people grab 10,000 train tickets at the same time.

Github code address:

https://github.com/GuoZhaoran/spikeSystem

Large-scale high-concurrency system architecture

A highly concurrent system is usually deployed as a distributed cluster. In front of the service sit several layers of load balancing, along with various disaster recovery measures (dual active data centers, node fault tolerance, server disaster recovery, and so on) to keep the system highly available. Traffic is also balanced across servers according to their load capacity and the configured strategy.

The following is a simple diagram:

A brief introduction to load balancing

The figure above shows the three layers of load balancing that a user request passes through on its way to the server. Each of the three is briefly introduced below.

①OSPF (Open Shortest Path First) is an Interior Gateway Protocol (IGP)

OSPF routers advertise the state of their network interfaces to one another to build a link-state database and generate a shortest-path tree. OSPF automatically calculates the Cost value of a routing interface, but you can also specify it manually; a manually specified value takes precedence over the calculated one.

The Cost calculated by OSPF is inversely proportional to the interface bandwidth: the higher the bandwidth, the smaller the Cost. Paths to the same destination with equal Cost can share the load, and up to 6 links can be load balanced at the same time.
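To make the inverse relationship concrete, here is a minimal Go sketch. It assumes the commonly used formula cost = reference bandwidth / interface bandwidth with a floor of 1; the 100 Mbit/s reference value and the name `ospfCost` are illustrative assumptions, not something taken from the 12306 setup.

```go
package main

import "fmt"

// ospfCost returns an interface cost under the assumed formula
// cost = referenceBps / interfaceBps, never going below 1.
func ospfCost(referenceBps, interfaceBps int64) int64 {
	if interfaceBps <= 0 {
		return 0 // invalid bandwidth, no meaningful cost
	}
	cost := referenceBps / interfaceBps
	if cost < 1 {
		cost = 1
	}
	return cost
}

func main() {
	const reference = 100_000_000 // 100 Mbit/s reference bandwidth (assumption)
	for _, bw := range []int64{10_000_000, 100_000_000, 1_000_000_000} {
		fmt.Printf("%d bit/s -> cost %d\n", bw, ospfCost(reference, bw))
	}
}
```

With this reference value, links at 100 Mbit/s and above all end up with cost 1, which is one reason operators sometimes set the Cost manually.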

②LVS (Linux Virtual Server)

LVS is a cluster technology that uses IP load balancing and content-based request distribution.

The scheduler has very good throughput and distributes requests evenly across the servers, and it automatically masks server failures, so that a group of servers forms one high-performance, highly available virtual server.

③Nginx

I believe everyone is familiar with it: a very high-performance HTTP proxy/reverse proxy server that is also often used for load balancing in service development.

Nginx implements load balancing in three main ways: round robin, weighted round robin, and IP hash.

Below we configure and test Nginx's weighted round robin.

Demonstration of Nginx weighted round robin

Nginx implements load balancing through its upstream module. With weighted round robin, each backend server can be given a weight, which can be set according to the server's performance and load capacity.

The following is a weighted round robin configuration. I listen on ports 3001-3004 locally and assign them weights of 1, 2, 3, and 4 respectively:

# Configure load balancing
upstream load_rule {
    server 127.0.0.1:3001 weight=1;
    server 127.0.0.1:3002 weight=2;
    server 127.0.0.1:3003 weight=3;
    server 127.0.0.1:3004 weight=4;
}
...
server {
    listen 80;
    server_name load_balance.com www.load_balance.com;
    location / {
        proxy_pass http://load_rule;
    }
}

I configured the virtual domain name www.load_balance.com in my local /etc/hosts file.

Next, use Go to start four HTTP services, each listening on one port. The following program listens on port 3001; for the others, only the port needs to change:

package main

import (
    "net/http"
    "os"
)

// port is the only value that changes between the four services.
const port = "3001"

func main() {
    http.HandleFunc("/buy/ticket", handleReq)
    http.ListenAndServe(":"+port, nil)
}

// handleReq records in the log which port handled the request.
func handleReq(w http.ResponseWriter, r *http.Request) {
    writeLog("handle in port:"+port, "./stat.log")
}

// writeLog appends one line to the log file.
func writeLog(msg string, logPath string) {
    fd, err := os.OpenFile(logPath, os.O_RDWR|os.O_CREATE|os.O_APPEND, 0644)
    if err != nil {
        return
    }
    defer fd.Close()
    fd.Write([]byte(msg + "\r\n"))
}

I write the port of each handled request to the ./stat.log file and then run a stress test with the ab tool:

ab -n 1000 -c 100 http://www.load_balance.com/buy/ticket

According to the log, ports 3001-3004 received 100, 200, 300, and 400 requests respectively.

This matches the weight ratio I configured in Nginx, and the traffic after load balancing is evenly and randomly distributed.
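To see why the counts come out in such an exact ratio, here is a simplified Go sketch of smooth weighted round robin, the selection idea generally attributed to Nginx's upstream module. It is an illustration under assumptions, not Nginx's actual code: failure handling and dynamic weight adjustment are left out, and the names `peer`, `pick`, and `countPicks` are made up for this example.

```go
package main

import "fmt"

// peer is one backend server with its configured weight.
type peer struct {
	addr    string
	weight  int
	current int // running score, adjusted on every pick
}

// pick raises every peer's score by its weight, selects the peer with the
// highest score, and lowers the winner's score by the sum of all weights.
func pick(peers []*peer) *peer {
	total := 0
	var best *peer
	for _, p := range peers {
		p.current += p.weight
		total += p.weight
		if best == nil || p.current > best.current {
			best = p
		}
	}
	if best != nil {
		best.current -= total
	}
	return best
}

// countPicks runs n selections over the four demo backends and counts
// how often each address is chosen.
func countPicks(n int) map[string]int {
	peers := []*peer{
		{addr: "127.0.0.1:3001", weight: 1},
		{addr: "127.0.0.1:3002", weight: 2},
		{addr: "127.0.0.1:3003", weight: 3},
		{addr: "127.0.0.1:3004", weight: 4},
	}
	counts := make(map[string]int)
	for i := 0; i < n; i++ {
		counts[pick(peers).addr]++
	}
	return counts
}

func main() {
	counts := countPicks(1000)
	for _, addr := range []string{"127.0.0.1:3001", "127.0.0.1:3002", "127.0.0.1:3003", "127.0.0.1:3004"} {
		fmt.Println(addr, counts[addr])
	}
}
```

Over 1,000 picks this sketch selects the four backends 100, 200, 300, and 400 times, and within each cycle of 10 picks the heavier backends are interleaved with the lighter ones rather than served in a burst.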

For implementation details, see the source code of Nginx's upstream module. A recommended article is "Load Balancing of Upstream Mechanism in Nginx": https://www.kancloud.cn/digest/understandingnginx/202607

Choosing a flash sale system design

Back to the original question: how does a train ticket flash sale system provide normal, stable service under high concurrency?

From the introduction above, we know that flash sale traffic is distributed evenly across servers through layers of load balancing. Even so, the QPS borne by a single machine in the cluster is still very high. How can single-machine performance be optimized to the extreme?

To solve this problem, we first need to understand one thing: a booking system usually has to handle three basic stages, namely order creation, inventory deduction, and user payment.

Our system must ensure that train tickets are neither oversold nor undersold, that every ticket sold is paid for to be valid, and that the system can withstand extremely high concurrency.

In what order should these three stages be arranged? Let's analyze it:

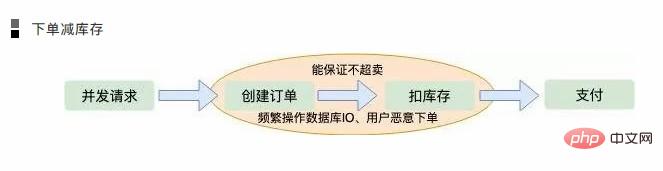

Deduct inventory when the order is placed

When a user's concurrent request reaches the server, first create an order, then deduct the inventory and wait for the user to pay.

This ordering is the first solution most of us think of. It also ensures that orders are not oversold, because inventory is deducted right after the order is created, as one atomic operation.

But it also causes some problems:

Under extreme concurrency, the details of every in-memory operation noticeably affect performance, let alone logic such as order creation, which generally has to be persisted to an on-disk database. The pressure on the database is easy to imagine.

If users place orders maliciously, ordering without paying, inventory is deducted anyway and many tickets end up unsold. The server can limit orders by IP and by user, but that is not a good solution either.

Deduct inventory on payment

If inventory is deducted only after the user pays, the first impression is that nothing will be undersold. But this is a taboo in concurrent architecture, because under extreme concurrency users may create a great many orders.

Once the inventory drops to zero, many users find that they cannot pay for the orders they grabbed. This is the so-called "overselling". Concurrent disk IO on the database cannot be avoided either.

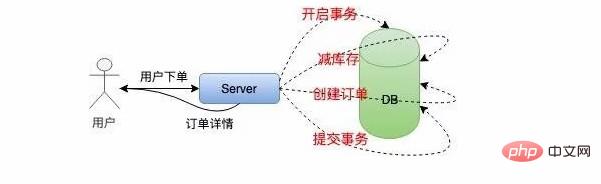

Pre-deducting inventory

From the two solutions above, we can conclude that whenever an order is created, database IO has to be performed frequently.

So is there a solution that does not touch database IO directly? That is pre-deducting inventory: deduct the inventory first to guarantee no overselling, then generate the user's order asynchronously, so the response to the user is much faster. But then how do we guarantee nothing is undersold? What if a user gets an order and does not pay?

We all know that orders now have a validity period. For example, if the user does not pay within five minutes, the order expires, and once it expires the inventory is put back. This is the approach many online retailers now use to ensure that goods are not undersold.

Orders are generated asynchronously, usually through a real-time consumer queue such as MQ or Kafka. When the order volume is relatively small, orders are generated very quickly and users hardly have to queue.
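As a rough illustration of the asynchronous step, the sketch below uses a buffered Go channel as a stand-in for the message queue. This is an assumption made for brevity: a real deployment would use an actual broker, and `orderRequest` and `processOrders` are hypothetical names.

```go
package main

import (
	"fmt"
	"sync"
)

// orderRequest is the message a request handler enqueues after the
// inventory has already been deducted.
type orderRequest struct {
	userID int
}

// processOrders drains the queue and "creates" one order per message.
// In a real system this is where the database write would happen.
func processOrders(queue <-chan orderRequest) []string {
	var orders []string
	for req := range queue {
		orders = append(orders, fmt.Sprintf("order-for-user-%d", req.userID))
	}
	return orders
}

func main() {
	queue := make(chan orderRequest, 16)
	var wg sync.WaitGroup
	var orders []string

	wg.Add(1)
	go func() {
		defer wg.Done()
		orders = processOrders(queue)
	}()

	// The request path only enqueues and can respond to the user at once.
	for user := 1; user <= 3; user++ {
		queue <- orderRequest{userID: user}
	}
	close(queue)
	wg.Wait()
	fmt.Println(orders)
}
```

The point of the design is that the slow part, persisting the order, is moved off the request path, so the user-facing response only waits for the in-memory deduction and the enqueue.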

The art of pre-deducting inventory

From the analysis above, pre-deducting inventory is clearly the most reasonable approach. Let's look further at the details of inventory deduction, where there is still plenty of room for optimization. Where is the inventory kept? How do we deduct it correctly under high concurrency while still responding to users quickly?

With low concurrency on a single machine, inventory deduction is usually implemented like this:

To make inventory deduction and order creation atomic, we use a transaction: check the inventory, deduct it, and finally commit the transaction. The whole process involves a lot of IO, and the database operations are blocking.

This approach is not suitable for a high-concurrency flash sale system at all. Next, we optimize the single-machine scheme with local inventory deduction.

We allocate a certain amount of inventory to the local machine, deduct it directly in memory, and then create the order asynchronously following the logic described earlier.

The improved single-machine system looks like this:

This avoids frequent IO operations on the database; everything is done in memory, which greatly improves a single machine's ability to withstand concurrency.
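A minimal sketch of in-memory deduction is shown below. It uses an atomic compare-and-swap loop, which is one possible technique and not the one the article's own code uses later (that code serializes requests with a channel); `localStock`, `deduct`, and `sell` are hypothetical names.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// localStock holds the tickets allocated to this machine.
type localStock struct {
	remaining int64
}

// deduct takes one ticket if any remain, without locks and without
// letting the counter drop below zero.
func (s *localStock) deduct() bool {
	for {
		cur := atomic.LoadInt64(&s.remaining)
		if cur <= 0 {
			return false
		}
		if atomic.CompareAndSwapInt64(&s.remaining, cur, cur-1) {
			return true
		}
	}
}

// sell lets the given number of concurrent buyers compete for the stock
// and returns how many of them succeeded.
func sell(stock int64, buyers int) int64 {
	s := &localStock{remaining: stock}
	var sold int64
	var wg sync.WaitGroup
	for i := 0; i < buyers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			if s.deduct() {
				atomic.AddInt64(&sold, 1)
			}
		}()
	}
	wg.Wait()
	return sold
}

func main() {
	fmt.Println(sell(100, 10000))
}
```

However many buyers compete, the number of successful deductions never exceeds the local stock, and no database round trip is involved.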

However, a single machine cannot withstand millions of user requests, even though Nginx processes network requests with the epoll model and the C10K problem was solved in the industry long ago.

On Linux, every resource is a file, and network requests are no exception: a huge number of file descriptors can make the operating system unresponsive in an instant.

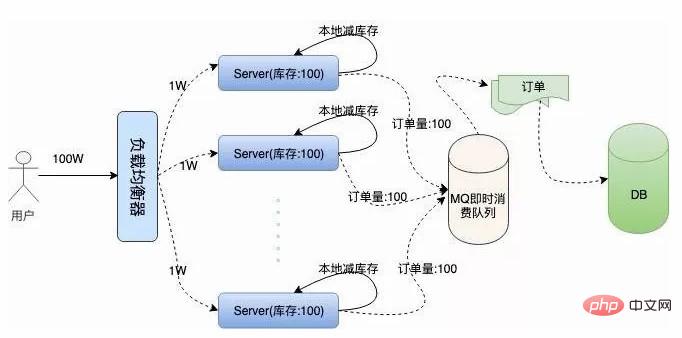

We mentioned Nginx's weighted load balancing strategy above. Let's assume that 1 million user requests are spread evenly across 100 servers, so the concurrency borne by each machine is much smaller.

We then store 100 train tickets locally on each machine, so the total inventory across the 100 servers is still 10,000. This ensures that the inventory is not oversold. The cluster architecture we have described looks like this:

Problems follow one after another. Under high concurrency we still cannot guarantee high availability: suppose two or three of the 100 servers go down because they cannot handle the traffic or for some other reason. The tickets held on those servers can then no longer be sold, which leads to underselling.

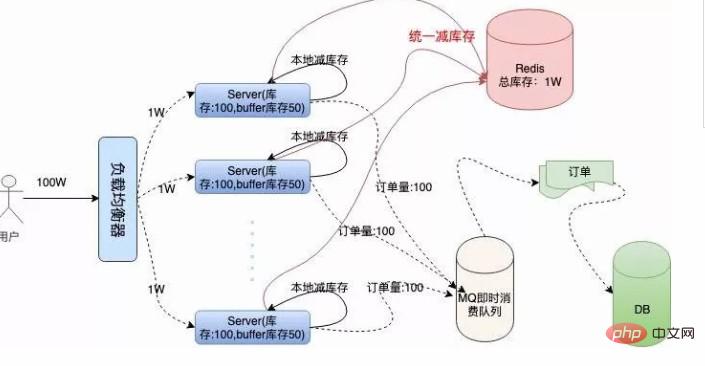

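To solve this problem, we need to manage the total order volume in one place, which is the fault-tolerance scheme described next. A server must deduct inventory not only locally but also remotely, against a unified inventory.

With unified remote deduction in place, we can allocate some extra "buffer inventory" to each machine according to its load, to guard against machines in the cluster going down.

Let's analyze this in detail with the architecture diagram below:

We use Redis to store the unified inventory, because Redis performs very well; a single instance is said to handle around 100,000 QPS.

After a local deduction succeeds, that is, if the local machine still has tickets, we ask Redis to deduct from the remote inventory. Only when both the local and the remote deduction succeed do we tell the user that the ticket was grabbed successfully. This also effectively guarantees that orders are not oversold.

When machines in the cluster go down, the buffer tickets reserved on every machine allow the remaining tickets of the failed machines to be made up on the others, which guarantees that nothing is undersold.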
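The effect of the buffer can be checked with a small in-process simulation. It is a sketch under assumptions: a mutex-protected counter stands in for the unified inventory in Redis, requests are spread over the servers in simple rotation, and the names `remoteStock`, `server`, and `simulate` are hypothetical.

```go
package main

import (
	"fmt"
	"sync"
)

// remoteStock stands in for the unified inventory kept in Redis.
type remoteStock struct {
	mu    sync.Mutex
	total int
	sold  int
}

func (r *remoteStock) deduct() bool {
	r.mu.Lock()
	defer r.mu.Unlock()
	if r.sold >= r.total {
		return false
	}
	r.sold++
	return true
}

// server holds a local quota: its share of the tickets plus a buffer.
type server struct {
	mu    sync.Mutex
	quota int
	sales int
	down  bool
}

// buy succeeds only if both the local and the remote deduction succeed.
func (s *server) buy(remote *remoteStock) bool {
	s.mu.Lock()
	defer s.mu.Unlock()
	if s.down || s.sales >= s.quota {
		return false
	}
	s.sales++
	return remote.deduct()
}

// simulate returns how many tickets are sold in total when downCount
// servers are unavailable for the whole sale.
func simulate(servers, downCount, total, buffer, requests int) int {
	remote := &remoteStock{total: total}
	cluster := make([]*server, servers)
	for i := range cluster {
		cluster[i] = &server{quota: total/servers + buffer, down: i < downCount}
	}
	var wg sync.WaitGroup
	for i := 0; i < requests; i++ {
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			cluster[i%servers].buy(remote)
		}(i)
	}
	wg.Wait()
	return remote.sold
}

func main() {
	fmt.Println("with buffer:   ", simulate(100, 3, 10000, 50, 20000))
	fmt.Println("without buffer:", simulate(100, 3, 10000, 0, 20000))
}
```

With 3 of 100 servers down, the run with a buffer of 50 per server sells all 10,000 tickets, while the run without a buffer sells only 9,700, because the 300 tickets held by the failed servers are stranded. In neither case does the total exceed 10,000, since the remote counter has the final say.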

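How large should the buffer be? In theory, the larger the buffer, the more failed machines the system can tolerate, but an overly large buffer also puts some pressure on Redis.

Although Redis, as an in-memory database, handles concurrency very well, each request still costs one network IO. In fact, the number of requests sent to Redis during ticket grabbing is at most the sum of the local inventory and the buffer inventory.

That is because when the local inventory is insufficient, the system returns a "sold out" message to the user directly and never reaches the unified deduction logic.

To some extent this also prevents a huge volume of network requests from overwhelming Redis, so the architect needs to weigh the system's load capacity carefully when choosing the buffer size.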
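The trade-off can be put into numbers with a short Go sketch. It assumes the tickets are split evenly across the servers and that every server gets the same buffer; `toleratedFailures` and `maxRedisRequests` are hypothetical helper names.

```go
package main

import "fmt"

// toleratedFailures returns how many servers may go down while the
// remaining ones can still sell the whole inventory.
func toleratedFailures(servers, total, buffer int) int {
	perServer := total/servers + buffer
	if perServer <= 0 {
		return 0
	}
	needed := (total + perServer - 1) / perServer // ceiling division
	return servers - needed
}

// maxRedisRequests is the upper bound on remote deductions: a request
// reaches Redis only while the local quota is not yet used up.
func maxRedisRequests(servers, total, buffer int) int {
	return servers * (total/servers + buffer)
}

func main() {
	for _, buffer := range []int{0, 50} {
		fmt.Printf("buffer %d: tolerates %d failed servers, at most %d Redis requests\n",
			buffer,
			toleratedFailures(100, 10000, buffer),
			maxRedisRequests(100, 10000, buffer))
	}
}
```

For 10,000 tickets on 100 servers, a buffer of 50 per server raises the tolerated failures from 0 to 33 while raising the ceiling on Redis requests from 10,000 to 15,000, both far below the 1 million incoming requests.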

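Code demonstration

Go is designed for concurrency natively, so I use Go to demonstrate the concrete flow of ticket grabbing on a single machine.

Initialization

In a Go package, the init function runs before main, and this stage is mainly used for preparatory work.

The preparation our system needs includes: initializing the local inventory, initializing the keys of the Redis hash that stores the unified inventory, and initializing the Redis connection pool.

We also need to initialize a chan of int with a capacity of 1, which serves as a lock that serializes the deduction logic within the process.

You could also use a read-write lock directly, or Redis or some other mechanism, to avoid resource contention, but a channel fits Go's philosophy: do not communicate by sharing memory; instead, share memory by communicating.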
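The channel-as-lock idea can be tried in isolation with the sketch below. It is an illustration under assumptions: it uses `chan struct{}` where the article's code uses `chan int`, and `countWithChannelLock` is a hypothetical name.

```go
package main

import (
	"fmt"
	"sync"
)

// countWithChannelLock increments a shared counter from many goroutines,
// using a channel of capacity 1 that holds a single token as the lock.
func countWithChannelLock(workers int) int {
	lock := make(chan struct{}, 1)
	lock <- struct{}{} // place the single token in the channel

	counter := 0
	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			<-lock             // acquire: take the token, blocking others
			counter++          // critical section
			lock <- struct{}{} // release: put the token back
		}()
	}
	wg.Wait()
	return counter
}

func main() {
	fmt.Println(countWithChannelLock(1000))
}
```

Because only one goroutine can hold the token at a time, the increments never interleave and the final count equals the number of workers.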

The Redis library used is Redigo. The code is as follows:

...
// Struct definition of the localSpike package
package localSpike

type LocalSpike struct {
    LocalInStock     int64
    LocalSalesVolume int64
}
...
// remoteSpike: hash key definitions and the Redis connection pool
package remoteSpike

// Keys under which remote orders are stored
type RemoteSpikeKeys struct {
    SpikeOrderHashKey  string // key of the flash sale order hash in Redis
    TotalInventoryKey  string // hash field holding the total inventory
    QuantityOfOrderKey string // hash field holding the number of existing orders
}

// NewPool initializes the Redis connection pool
func NewPool() *redis.Pool {
    return &redis.Pool{
        MaxIdle:   10000,
        MaxActive: 12000, // max number of connections
        Dial: func() (redis.Conn, error) {
            c, err := redis.Dial("tcp", ":6379")
            if err != nil {
                panic(err.Error())
            }
            return c, err
        },
    }
}
...
func init() {
    localSpike = localSpike2.LocalSpike{
        LocalInStock:     150,
        LocalSalesVolume: 0,
    }
    remoteSpike = remoteSpike2.RemoteSpikeKeys{
        SpikeOrderHashKey:  "ticket_hash_key",
        TotalInventoryKey:  "ticket_total_nums",
        QuantityOfOrderKey: "ticket_sold_nums",
    }
    redisPool = remoteSpike2.NewPool()
    done = make(chan int, 1)
    done <- 1
}

Local and unified inventory deduction

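The local deduction logic is very simple: when a user request arrives, increase the sales volume, then check whether the sales volume exceeds the local inventory and return a bool:

package localSpike

// LocalDeductionStock deducts from the local inventory and reports whether the sale succeeded
func (spike *LocalSpike) LocalDeductionStock() bool {
    spike.LocalSalesVolume = spike.LocalSalesVolume + 1
    return spike.LocalSalesVolume <= spike.LocalInStock
}

Note that the operation on the shared LocalSalesVolume must be protected by a lock. However, because the local deduction and the unified deduction must together form one atomic operation, the lock is implemented at the top level with a channel, which is covered later.

The unified deduction operates on Redis. Redis executes commands on a single thread, but we need to read data, compute, and write data in several steps, so we bundle the commands into a Lua script to guarantee that the whole operation is atomic: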

package remoteSpike
......
const LuaScript = `
    local ticket_key = KEYS[1]
    local ticket_total_key = ARGV[1]
    local ticket_sold_key = ARGV[2]
    local ticket_total_nums = tonumber(redis.call('HGET', ticket_key, ticket_total_key))
    local ticket_sold_nums = tonumber(redis.call('HGET', ticket_key, ticket_sold_key))
    -- If tickets remain, increase the order count and return the new count
    if (ticket_total_nums > ticket_sold_nums) then
        return redis.call('HINCRBY', ticket_key, ticket_sold_key, 1)
    end
    return 0
`

// RemoteDeductionStock deducts from the unified remote inventory
func (RemoteSpikeKeys *RemoteSpikeKeys) RemoteDeductionStock(conn redis.Conn) bool {
    lua := redis.NewScript(1, LuaScript)
    result, err := redis.Int(lua.Do(conn, RemoteSpikeKeys.SpikeOrderHashKey, RemoteSpikeKeys.TotalInventoryKey, RemoteSpikeKeys.QuantityOfOrderKey))
    if err != nil {
        return false
    }
    return result != 0
}

We use a hash to store the total inventory and the total sales volume. When a user request arrives, we check whether tickets remain, that is, whether the total sales volume is still below the inventory, and return the corresponding bool.

Before starting the service, we need to initialize the inventory information in Redis:

hmset ticket_hash_key "ticket_total_nums" 10000 "ticket_sold_nums" 0

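Responding to the user

We start an HTTP service listening on a port:

package main
...
func main() {
    http.HandleFunc("/buy/ticket", handleReq)
    http.ListenAndServe(":3005", nil)
}

With all the initialization done above, the logic of handleReq is very clear: determine whether the ticket was grabbed successfully and return the result to the user.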

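package main

// handleReq handles a request and writes the result to the log
func handleReq(w http.ResponseWriter, r *http.Request) {
    redisConn := redisPool.Get()
    defer redisConn.Close() // return the connection to the pool
    LogMsg := ""
    <-done // acquire the global lock
    if localSpike.LocalDeductionStock() && remoteSpike.RemoteDeductionStock(redisConn) {
        util.RespJson(w, 1, "抢票成功", nil) // "ticket grabbed successfully"
        LogMsg = LogMsg + "result:1,localSales:" + strconv.FormatInt(localSpike.LocalSalesVolume, 10)
    } else {
        util.RespJson(w, -1, "已售罄", nil) // "sold out"
        LogMsg = LogMsg + "result:0,localSales:" + strconv.FormatInt(localSpike.LocalSalesVolume, 10)
    }
    done <- 1 // release the global lock
    // Write the result of the attempt to the log
    writeLog(LogMsg, "./stat.log")
}

// writeLog appends one line to the log file
func writeLog(msg string, logPath string) {
    fd, err := os.OpenFile(logPath, os.O_RDWR|os.O_CREATE|os.O_APPEND, 0644)
    if err != nil {
        return
    }
    defer fd.Close()
    fd.Write([]byte(msg + "\r\n"))
}

As mentioned earlier, race conditions must be considered when deducting inventory. Here we use a channel to avoid concurrent reads and writes, which keeps requests executing in an orderly sequence. We write the interface's response information to the ./stat.log file to make stress test statistics easier.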

Single-machine stress test

Start the service and test it with the ab stress testing tool:

ab -n 10000 -c 100 http://127.0.0.1:3005/buy/ticket

Below are the results on my low-spec local Mac:

This is ApacheBench, Version 2.3 <$Revision: 1826891 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 127.0.0.1 (be patient)
Completed 1000 requests
Completed 2000 requests
Completed 3000 requests
Completed 4000 requests
Completed 5000 requests
Completed 6000 requests
Completed 7000 requests
Completed 8000 requests
Completed 9000 requests
Completed 10000 requests
Finished 10000 requests

Server Software:
Server Hostname:        127.0.0.1
Server Port:            3005

Document Path:          /buy/ticket
Document Length:        29 bytes

Concurrency Level:      100
Time taken for tests:   2.339 seconds
Complete requests:      10000
Failed requests:        0
Total transferred:      1370000 bytes
HTML transferred:       290000 bytes
Requests per second:    4275.96 [#/sec] (mean)
Time per request:       23.387 [ms] (mean)
Time per request:       0.234 [ms] (mean, across all concurrent requests)
Transfer rate:          572.08 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    8  14.7      6     223
Processing:     2   15  17.6     11     232
Waiting:        1   11  13.5      8     225
Total:          7   23  22.8     18     239

Percentage of the requests served within a certain time (ms)
  50%     18
  66%     24
  75%     26
  80%     28
  90%     33
  95%     39
  98%     45
  99%     54
 100%    239 (longest request)

According to these figures, my single machine can handle 4,000+ requests per second. Production servers normally have multiple cores, so handling 10,000+ requests per second should be no problem.

Checking the log also shows that throughout the run the requests were normal, the traffic was even, and Redis behaved normally:

//stat.log
...
result:1,localSales:145
result:1,localSales:146
result:1,localSales:147
result:1,localSales:148
result:1,localSales:149
result:1,localSales:150
result:0,localSales:151
result:0,localSales:152
result:0,localSales:153
result:0,localSales:154
result:0,localSales:156
...

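Summary and review

Overall, a flash sale system is very complex. Here we have only briefly introduced and simulated some strategies: how to optimize a single machine for high performance, how a cluster avoids a single point of failure, and how to ensure that orders are neither oversold nor undersold.

A complete order system also includes viewing order progress; a task on every server that periodically syncs the remaining tickets and inventory from the unified inventory to show to users; releasing orders that are not paid within their validity period and returning them to inventory; and so on.

We implemented the core logic of high-concurrency ticket grabbing. The design can be called quite clever: it neatly sidesteps IO operations on the database

as well as high-concurrency network IO requests to Redis. Almost all computation is done in memory, while effectively guaranteeing no overselling and no underselling, and tolerating the failure of some machines.

I think two points are especially worth learning from:

①Load balancing: divide and conquer

Through load balancing, traffic is split across different machines; each machine handles its own requests and pushes its own performance to the limit.

The system as a whole can then withstand extremely high concurrency, just like a team at work: when everyone delivers their full value, the team naturally grows a great deal.

②Use concurrency and asynchrony sensibly

Ever since the epoll network model solved the C10K problem, asynchrony has been increasingly accepted by server-side developers. Work that can be done asynchronously should be, and this can produce unexpectedly good results when breaking a feature apart.

This shows in Nginx, Node.js, and Redis: they handle network requests with the epoll model and have shown in practice that a single thread can still be very powerful.

Servers have entered the multi-core era, and Go, a language born for concurrency, takes full advantage of multiple cores. Many tasks that can be processed concurrently can be solved with concurrency; for example, when Go handles HTTP requests, each request runs in its own goroutine.

In short, how to squeeze the CPU sensibly so that it delivers the value it should is a direction we always need to keep exploring and learning.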
