本篇文章的主要内容是关于介绍影响http性能的常见因素,具有一定的参考价值,感兴趣的朋友可以了解一下。

我们这里讨论HTTP性能是建立在一个最简单模型之上就是单台服务器的HTTP性能,当然对于大规模负载均衡集群也适用毕竟这种集群也是由多个HTTTP服务器的个体所组成。另外我们也排除客户端或者服务器本身负载过高或者HTTP协议实现的软件使用不同的IO模型,另外我们也忽略DNS解析过程和web应用程序开发本身的缺陷。

从TCP/IP模型来看,HTTP下层就是TCP层,所以HTTP的性能很大程度上取决于TCP性能,当然如果是HTTPS的就还要加上TLS/SSL层,不过可以肯定的是HTTPS性能肯定比HTTP要差,其通讯过程这里都不多说总之层数越多性能损耗就越严重。

在上述条件下,最常见的影响HTTP性能的包括:

TCP连接建立,也就是三次握手阶段

TCP慢启动

TCP延迟确认

Nagle算法

TIME_WAIT积累与端口耗尽

服务端端口耗尽

服务端HTTP进程打开文件数量达到最大

通常如果网络稳定TCP连接建立不会消耗很多时间而且也都会在合理耗时范围内完成三次握手过程,但是由于HTTP是无状态的属于短连接,一次HTTP会话接受后会断开TCP连接,一个网页通常有很多资源这就意味着会进行很多次HTTP会话,而相对于HTTP会话来说TCP三次握手建立连接就会显得太耗时了,当然可以通过重用现有连接来减少TCP连接建立次数。

TCP拥塞控制手段1,在TCP刚建立好之后的最初传输阶段会限制连接的最大传输速度,如果数据传输成功,后续会逐步提高传输速度,这就TCP慢启动。慢启动限制了某一时刻可以传输的IP分组2数量。那么为什么会有慢启动呢?主要是为了避免网络因为大规模的数据传输而瘫痪,在互联网上数据传输很重要的一个环节就是路由器,而路由器本身的速度并不快加之互联网上的很多流量都可能发送过来要求其进行路由转发,如果在某一时间内到达路由器的数据量远大于其发送的数量那么路由器在本地缓存耗尽的情况下就会丢弃数据包,这种丢弃行为被称作拥塞,一个路由器出现这种状况就会影响很多条链路,严重的会导致大面积瘫痪。所以TCP通信的任何一方需要进行拥塞控制,而慢启动就是拥塞控制的其中一种算法或者叫做机制。

你设想一种情况,我们知道TCP有重传机制,假设网络中的一个路由器因为拥塞而出现大面积丢包情况,作为数据的发送方其TCP协议栈肯定会检测到这种情况,那么它就会启动TCP重传机制,而且该路由器影响的发送方肯定不止你一个,那么大量的发送方TCP协议栈都开始了重传那这就等于在原本拥塞的网络上发送更多的数据包,这就等于火上浇油。

通过上面的描述得出即便是在正常的网络环境中,作为HTTP报文的发送方与每个请求建立TCP连接都会受到慢启动影响,那么又根据HTTP是短连接一次会话结束就会断开,可以想象客户端发起HTTP请求刚获取完web页面上的一个资源,HTTP就断开,有可能还没有经历完TCP慢启动过程这个TCP连接就断开了,那web页面其他的后续资源还要继续建立TCP连接,而每个TCP连接都会有慢启动阶段,这个性能可想而知,所以为了提升性能,我们可以开启HTTP的持久连接也就是后面要说的keepalive。

另外我们知道TCP中有窗口的概念,这个窗口在发送方和接收方都有,窗口的作用一方面保证了发送和接收方在管理分组时变得有序;另外在有序的基础上可以发送多个分组从而提高了吞吐量;还有一点就是这个窗口大小可以调整,其目的是避免发送方发送数据的速度比接收方接收的速度快。窗口虽然解决了通信双发的速率问题,可是网络中会经过其他网络设备,发送方怎么知道路由器的接收能力呢?所以就有了上面介绍的拥塞控制。

首先要知道什么是确认,它的意思就是发送方给接收方发送一个TCP分段,接收方收到以后要回传一个确认表示收到,如果在一定时间内发送方没有收到该确认那么就需要重发该TCP分段。

确认报文通常都比较小,也就是一个IP分组可以承载多个确认报文,所以为了避免过多的发送小报文,那么接收方在回传确认报文的时候会等待看看有没有发给接收方的其他数据,如果有那么就把确认报文和数据一起放在一个TCP分段中发送过去,如果在一定时间内容通常是100-200毫秒没有需要发送的其他数据那么就将该确认报文放在单独的分组中发送。其实这么做的目的也是为了尽可能降低网络负担。

一个通俗的例子就是物流,卡车的载重是一定的,假如是10吨载重,从A城市到B城市,你肯定希望它能尽可能的装满一车货而不是来了一个小包裹你就立刻起身开向B城市。

所以TCP被设计成不是来一个数据包就马上返回ACK确认,它通常都会在缓存中积攒一段时间,如果还有相同方向的数据就捎带把前面的ACK确认回传过去,但是也不能等的时间过长否则对方会认为丢包了从而引发对方的重传。

对于是否使用以及如何使用延迟确认不同操作系统会有不同,比如Linux可以启用也可以关闭,关闭就意味着来一个就确认一个,这也是快速确认模式。

需要注意的是是否启用或者说设置多少毫秒,也要看场景。比如在线游戏场景下肯定是尽快确认,SSH会话可以使用延迟确认。

对于HTTP来说我们可以关闭或者调整TCP延迟确认。

这个算法其实也是为了提高IP分组利用率以及降低网络负担而设计,这里面依然涉及到小报文和全尺寸报文(按以太网的标准MTU1500字节一个的报文计算,小于1500的都算非全尺寸报文),但是无论小报文怎么小也不会小于40个字节,因为IP首部和TCP首部就各占用20个字节。如果你发送一个50个字节小报文,其实这就意味着有效数据太少,就像延迟确认一样小尺寸包在局域网问题不大,主要是影响广域网。

这个算法其实就是如果发送方当前TCP连接中有发出去但还没有收到确认的报文的时候,那么此时如果发送方还有小报文要发送的话就不能发送而是要放到缓冲区等待之前发出报文的确认,收到确认之后,发送方会收集缓存中同方向的小报文组装成一个报文进行发送。其实这也就意味着接收方返回ACK确认的速度越快,发送方发送数据也就越快。

现在我们说说延迟确认和Nagle算法结合将会带来的问题。其实很容易看出,因为有延迟确认,那么接收方则会在一段时间内积攒ACK确认,而发送方在这段时间内收不到ACK那么也就不会继续发送剩下的非全尺寸数据包(数据被分成多个IP分组,发送方要发送的响应数据的分组数量不可能一定是1500的整数倍,大概率会发生数据尾部的一些数据就是小尺寸IP分组),所以你就看出这里的矛盾所在,那么这种问题在TCP传输中会影响传输性能那么HTTP又依赖TCP所以自然也会影响HTTP性能,通常我们会在服务器端禁用该算法,我们可以在操作系统上禁用或者在HTTP程序中设置TCP_NODELAY来禁用该算法。比如在Nginx中你可以使用tcp_nodelay on;来禁用。

这里指的是作为客户端的一方或者说是在TCP连接中主动关闭的一方,虽然服务器也可以主动发起关闭,但是我们这里讨论的是HTTP性能,由于HTTP这种连接的特性,通常都是客户端发起主动关闭,

客户端发起一个HTTP请求(这里说的是对一个特定资源的请求而不是打开一个所谓的主页,一个主页有N多资源所以会导致有N个HTTP请求的发起)这个请求结束后就会断开TCP连接,那么该连接在客户端上的TCP状态会出现一种叫做TIME_WAIT的状态,从这个状态到最终关闭通常会经过2MSL4的时长,我们知道客户端访问服务端的HTTP服务会使用自己本机随机高位端口来连接服务器的80或者443端口来建立HTTP通信(其本质就是TCP通信)这就意味着会消耗客户端上的可用端口数量,虽然客户端断开连接会释放这个随机端口,不过客户端主动断开连接后,TCP状态从TIME_WAIT到真正CLOSED之间的这2MSL时长内,该随机端口不会被使用(如果客户端又发起对相同服务器的HTTP访问),其目的之一是为了防止相同TCP套接字上的脏数据。通过上面的结论我们就知道如果客户端对服务器的HTTP访问过于密集那么就有可能出现端口使用速度高于端口释放速度最终导致因没有可用随机端口而无法建立连接。

上面我们说过通常都是客户端主动关闭连接,

TCP/IP详解 卷1 第二版,P442,最后的一段写到 对于交互式应用程序而言,客户端通常执行主动关闭操作并进入TIME_WAIT状态,服务器通常执行被动关闭操作并且不会直接进入TIME_WAIT状态。

不过如果web服务器并且开启了keep-alive的话,当达到超时时长服务器也会主动关闭。(我这里并不是说TCP/IP详解错了,而是它在那一节主要是针对TCP来说,并没有引入HTTP,而且它说的是通常而不是一定)

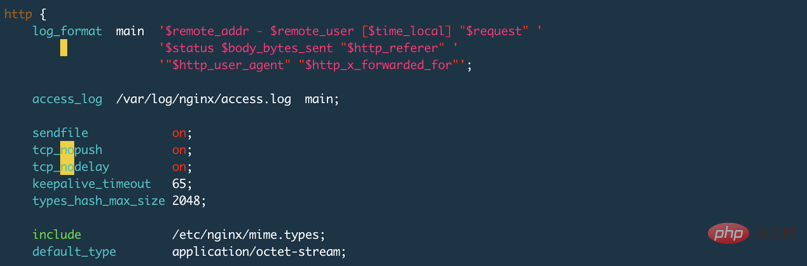

我使用Nginx做测试,并且在配置文件中设置了keepalive_timeout 65s;,Nginx的默认设置是75s,设置为0表示禁用keepalive,如下图:

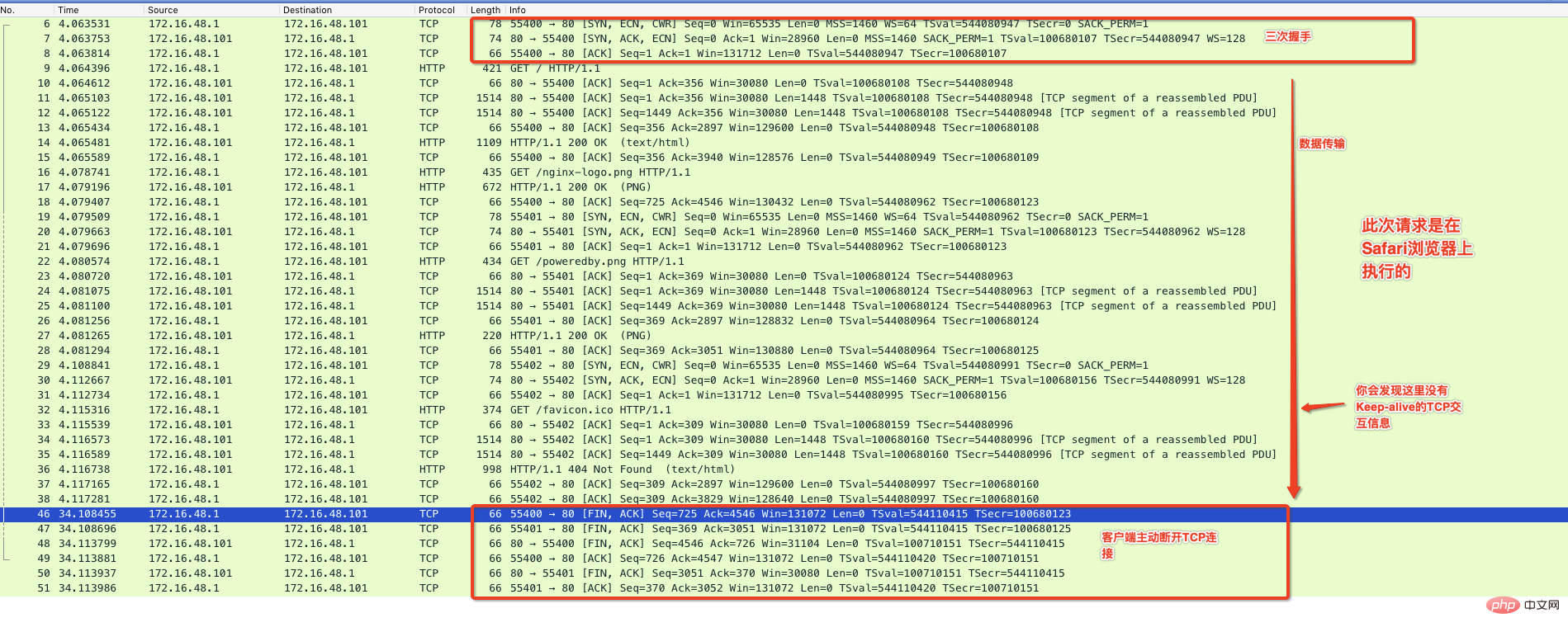

下面我使用Chrom浏览器访问这个Nginx默认提供的主页,并通过抓包程序来监控整个通信过程,如下图:

从上图可以看出来在有效数据传送完毕后,中间出现了Keep-Alive标记的通信,并且在65秒内没有请求后服务器主动断开连接,这种情况你在Nginx的服务器上就会看到TIME_WAIT的状态。

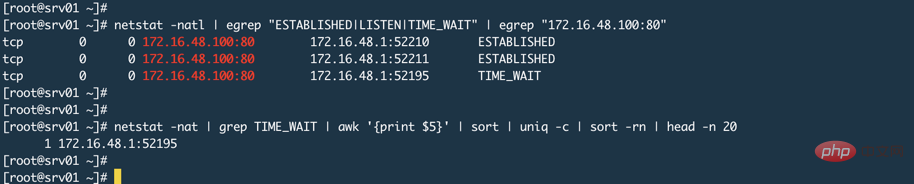

有人说Nginx监听80或者443,客户端都是连接这个端口,服务端怎么会端口耗尽呢?就像下图一样(忽略图中的TIME_WAIT,产生这个的原因上面已经说过了是因为Nginx的keepalive_timeout设置导致的)

其实,这取决于Nginx工作模式,我们使用Nginx通常都是让其工作在代理模式,这就意味着真正的资源或者数据在后端Web应用程序上,比如Tomcat。代理模式的特点是代理服务器代替用户去后端获取数据,那么此时相对于后端服务器来说,Nginx就是一个客户端,这时候Nginx就会使用随机端口来向后端发起请求,而系统可用随机端口范围是一定的,可以使用sysctl net.ipv4.ip_local_port_range命令来查看服务器上的随机端口范围。

通过我们之前介绍的延迟确认、Nagle算法以及代理模式下Nginx充当后端的客户端角色并使用随机端口连接后端,这就意味着服务端的端口耗尽风险是存在的。随机端口释放速度如果比与后端建立连接的速度慢就有可能出现。不过一般不会出现这个情况,至少我们公司的Nginx我没有发现有这种现象产生。因为首先是静态资源都在CDN上;其次后端大部分都是REST接口提供用户认证或者数据库操作,这些操作其实后端如果没有瓶颈的话基本都很快。不过话说回来如果后端真的有瓶颈且扩容或者改架构成本比较高的话,那么当面对大量并发的时候你应该做的是限流防止后端被打死。

我们说过HTTP通信依赖TCP连接,一个TCP连接就是一个套接字,对于类Unix系统来说,打开一个套接字就是打开一个文件,如果有100个请求连接服务端,那么一旦连接建立成功服务端就会打开100个文件,而Linux系统中一个进程可以打开的文件数量是有限的ulimit -f,所以如果这个数值设置的太小那么也会影响HTTP连接。而对以代理模式运行的Nginx或者其他HTTP程序来说,通常一个连接它就要打开2个套接字也就会占用2个文件(命中Nginx本地缓存或者Nginx直接返回数据的除外)。所以对于代理服务器这个进程可打开的文件数量也要设置的大一点。

首先我们要知道keepalive可以设置在2个层面上,且2个层面意义不同。TCP的keepalive是一种探活机制,比如我们常说的心跳信息,表示对方还在线,而这种心跳信息的发送由有时间间隔的,这就意味着彼此的TCP连接要始终保持打开状态;而HTTP中的keep-alive是一种复用TCP连接的机制,避免频繁建立TCP连接。所以一定明白TCP的Keepalive和HTTP的Keep-alive不是一回事。

非持久连接会在每个HTTP事务完成后断开TCP连接,下一个HTTP事务则会再重新建立TCP连接,这显然不是一种高效机制,所以在HTTP/1.1以及HTTP/1.0的增强版本中允许HTTP在事务结束后将TCP连接保持打开状态,以便后续的HTTP事务可以复用这个连接,直到客户端或者服务器主动关闭该连接。持久连接减少了TCP连接建立的次数同时也最大化的规避了TCP慢启动带来的流量限制。

相关教程:HTTP视频教程

再来看一下这张图,图中的keepalive_timeout 65s设置了开启http的keep-alive特性并且设置了超时时长为65秒,其实还有比较重要的选项是keepalive_requests 100;它表示同一个TCP连接最多可以发起多少个HTTP请求,默认是100个。

在HTTP/1.0中keep-alive并不是默认使用的,客户端发送HTTP请求时必须带有Connection: Keep-alive的首部来试图激活keep-alive,如果服务器不支持那么将无法使用,所有请求将以常规形式进行,如果服务器支持那么在响应头中也会包括Connection: Keep-alive的信息。

在HTTP/1.1中默认就使用Keep-alive,除非特别说明,否则所有连接都是持久的。如果要在一个事务结束后关闭连接,那么HTTP的响应头中必须包含Connection: CLose首部,否则该连接会始终保持打开状态,当然也不能总是打开,也必须关闭空闲连接,就像上面Nginx的设置一样最多保持65秒的空闲连接,超过后服务端将会主动断开该连接。

在Linux上没有一个统一的开关去开启或者关闭TCP的Keepalive功能,查看系统keepalive的设置sysctl -a | grep tcp_keepalive,如果你没有修改过,那么在Centos系统上它会显示:

net.ipv4.tcp_keepalive_intvl = 75 # 两次探测直接间隔多少秒 net.ipv4.tcp_keepalive_probes = 9 # 探测频率 net.ipv4.tcp_keepalive_time = 7200 # 表示多长时间进行一次探测,单位秒,这里也就是2小时

按照默认设置,那么上面的整体含义就是2小时探测一次,如果第一次探测失败,那么过75秒再探测一次,如果9次都失败就主动断开连接。

如何开启Nginx上的TCP层面的Keepalive,在Nginx中有一个语句叫做listen它是server段里面用于设置Nginx监听在哪个端口的语句,其实它后面还有其他参数就是用来设置套接字属性的,看下面几种设置:

# 表示开启,TCP的keepalive参数使用系统默认的 listen 80 default_server so_keepalive=on; # 表示显式关闭TCP的keepalive listen 80 default_server so_keepalive=off; # 表示开启,设置30分钟探测一次,探测间隔使用系统默认设置,总共探测10次,这里的设 # 置将会覆盖上面系统默认设置 listen 80 default_server so_keepalive=30m::10;

所以是否要在Nginx上设置这个so_keepalive,取决于特定场景,千万不要把TCP的keepalive和HTTP的keepalive搞混淆,因为Nginx不开启so_keepalive也不影响你的HTTP请求使用keep-alive特性。如果客户端和Nginx直接或者Nginx和后端服务器之间有负载均衡设备的话而且是响应和请求都会经过这个负载均衡设备,那么你就要注意这个so_keepalive了。比如在LVS的直接路由模式下就不受影响,因为响应不经过

LVS,不过要是NAT模式就需要留意,因为LVS保持TCP会话也有一个时长,如果该时长小于后端返回数据的时长那么LVS就会在客户端还没有收到数据的情况下断开这条TCP连接。

TCP拥塞控制有一些算法,其中就包括TCP慢启动、拥塞避免等算法↩

有些地方也叫做IP分片但都是一个意思,至于为什么分片简单来说就是受限于数据链路层,不同的数据链路其MTU不同,以太网的是1500字节,在某些场景会是1492字节;FDDI的MTU又是另外一种大小,单纯考虑IP层那么IP数据包最大是65535字节↩

在《HTTP权威指南》P90页中并没有说的特别清楚,这种情况是相对于客户端来说还是服务端,因为很有可能让人误解,当然并不是说服务端不会出现端口耗尽的情况,所以我这里才增加了2项内容↩

最长不会超过2分钟↩

相关教程:HTTP视频教程

以上就是分析影响http性能的常见因素的详细内容,更多请关注php中文网其它相关文章!

Copyright 2014-2025 //m.sbmmt.com/ All Rights Reserved | php.cn | 湘ICP备2023035733号