The content of this article is to introduce the persistence and master-slave replication mechanism of redis. It has certain reference value. Friends in need can refer to it. I hope it will be helpful to you.

Redis persistence

Redis provides a variety of persistence methods at different levels:

RDB persistence can be Generate a point-in-time snapshot of the data set within a specified time interval

AOF persistently records all write operation commands executed by the server, and re-executes these commands when the server starts. Restore the dataset. All commands in the AOF file are saved in the Redis protocol format, and new commands will be appended to the end of the file. Redis can also rewrite the AOF file in the background so that the size of the AOF file does not exceed the actual size required to save the data set state.

Redis can also use AOF persistence and RDB persistence at the same time. In this case, when Redis restarts, it will give priority to using the AOF file to restore the data set, because the data set saved by the AOF file is usually more complete than the data set saved by the RDB file.

You can even turn off persistence so that the data only exists while the server is running.

RDB(Redis DataBase)

Rdb: Writes the in-memory snapshot of the data set to disk within the specified time interval, that is In jargon, a snapshot is a snapshot. When it is restored, the snapshot file is read directly into the memory.

Redis will create (fork) a separate sub-process for persistence. It will first write the data to a temporary file. When the persistence process is over, this temporary file will be used to replace the last persistence. Returned documents. Throughout the entire process, the main process does not perform any IO operations, which ensures extremely high performance. If large-scale data recovery is required and the integrity of data recovery is not very sensitive, the RDB method is more efficient than the AOF method. of high efficiency. The disadvantage of RDB is that data after the last persistence may be lost.

The function of Fork is to copy a process that is the same as the current process. All the data (variables, environment variables, program counters, etc.) of the new process have the same values as the original process, but it is a brand new process and serves as Subprocesses of the original process

Hidden dangers: If the current process has a large amount of data, then the amount of data after fork*2 will cause high pressure on the server and reduced operating performance.

Rdb saves the dump.rdb file

In testing: execute flushAll command, use shutDown When the process is closed directly, redis will automatically read the dump.rdb file when it is opened for the second time, but when it is restored, it will be all empty. (The reason for this: At the time of shutdown, the redis system will save the empty dump.rdb to replace the original cache file. Therefore, when the redis system is opened for the second time, the empty value file is automatically read)

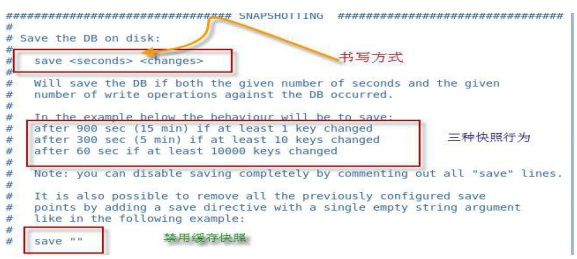

RDB save operation

Rdb is a compressed snapshot of the entire memory. The data structure of RDB can be configured to meet the snapshot triggering conditions. The default is to change 1 within 1 minute. Ten thousand times, or 10 changes in 5 minutes, or once in 15 minutes;

Save disabled: If you want to disable the RDB persistence strategy, just do not set any save instructions, or pass in an empty string parameter to save That's ok too.

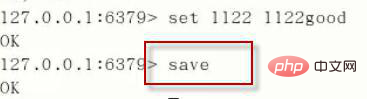

-----> save command: save the operation object immediately

How to trigger RDB snapshot

Save: When saving, just save, ignore other things, and block everything.

Bgsave: redis will perform snapshot operations in the background. During the snapshot operation, it can also respond to client requests. You can obtain the time of the last successful snapshot execution through the lastsave command.

Executing the fluhall command will also generate the dump.rdb file, but it will be empty.

How to restore:

Move the backup file (dump.rdb) to the redis installation directory and start the service

Config get dir command can get the directory

How to stop

Method to dynamically stop RDB saving rules: redis -cli config set save “”

AOF(Append Only File)

Record each write operation in the form of a log, and record all write instructions executed by redis (read operations are not recorded). Only files can be appended but not rewritten. When redis is started, the file will be read to reconstruct the data. In other words, when redis is restarted, the write instructions will be executed from front to back according to the contents of the log file to complete the data recovery work.

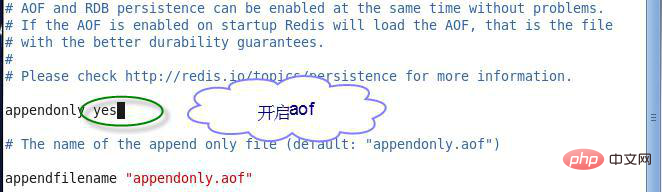

======APPEND ONLY MODE=====

Open aof: appendonly yes (default is no)

Note:

In actual work and production, it often occurs: aof file damage (network transmission or other problems cause aof file damage)

An error occurs when the server starts (but the dump.rdb file is complete), indicating that the aof file is loaded before starting.

Solution: Execute the command redis-check-aof --fix aof file [automatically check and delete fields that are inconsistent with aof syntax]

Aof policy

Appendfsync parameters:

Always In synchronous persistence, every data change will be immediately recorded to disk, which has poor performance but better data integrity.

Everysec: Factory default recommendation, asynchronous operation, recording every second, downtime after one second, data loss

No: never fsync: hand over the data to the operating system for processing. Faster and less secure option.

Rewrite

Concept: AOF uses file appending, and the files will become larger and larger. To avoid this situation, a new Rewriting mechanism, when the size of the aof file exceeds the set threshold, redis will automatically compress the content of the aof file, and the value will retain the minimum instruction set that can restore the data. You can use the command bgrewirteaof.

Principle of rewriting: When the aof file continues to grow and becomes large, a new process will be forked to rewrite the file (that is,

first writes the temporary file and then renames it), traversing the memory of the new process Each record has a set statement. The operation of rewriting the aof file does not read the old aof file. Instead, the entire memory database content is rewritten into a new aof file using commands. This is somewhat similar to snapshots.

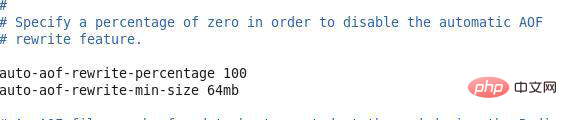

Trigger mechanism: redis will record the size of the last rewritten aof. The default configuration is that when the aof file size is twice the size after the last rewrite and the file is larger than 64M, it is triggered (3G)

no-appendfsync-on-rewrite no: Whether Appendfsync can be used during rewrite. Just use the default no to ensure data security.

auto-aof-rewrite-percentage multiple setting base value

auto-aof-rewrite-min-size Set the baseline value size

AOF advantages

Using AOF persistence will make Redis very Durability: You can set different fsync strategies, such as no fsync, fsync every second, or fsync every time a write command is executed. The default policy of AOF is to fsync once per second. Under this configuration, Redis can still maintain good performance, and even if a failure occurs, only one second of data will be lost at most (fsync will be executed in a background thread, so The main thread can continue to work hard processing command requests).

The AOF file is a log file that only performs append operations (append only log), so writing to the AOF file does not require seeking, even if the log contains incomplete commands for some reasons. (For example, the disk is full when writing, the writing stops midway, etc.), the redis-check-aof tool can also easily fix this problem.

Redis can automatically rewrite the AOF in the background when the AOF file size becomes too large: the rewritten new AOF file contains the minimum set of commands required to restore the current data set. The entire rewriting operation is absolutely safe, because Redis will continue to append commands to the existing AOF file during the process of creating a new AOF file. Even if there is a shutdown during the rewriting process, the existing AOF file will not be lost. . Once the new AOF file is created, Redis will switch from the old AOF file to the new AOF file and start appending to the new AOF file.

The AOF file saves all write operations performed on the database in an orderly manner. These write operations are saved in the format of the Redis protocol, so the content of the AOF file is very easy to read and analyze the file ( parse) is also very easy. Exporting (exporting) AOF files is also very simple: for example, if you accidentally execute the FLUSHALL command, but as long as the AOF file has not been overwritten, then just stop the server, remove the FLUSHALL command at the end of the AOF file, and restart Redis. You can restore the data set to the state before FLUSHALL was executed.

AOF Disadvantages

For the same data set, the size of the AOF file is usually larger than the size of the RDB file.

Depending on the fsync strategy used, AOF may be slower than RDB. Under normal circumstances, fsync performance per second is still very high, and turning off fsync can make AOF as fast as RDB, even under heavy load. However, RDB can provide a more guaranteed maximum latency when handling huge write loads.

AOF has had such a bug in the past: due to individual commands, when the AOF file is reloaded, the data set cannot be restored to the original state when it was saved. (For example, the blocking command BRPOPLPUSH once caused such a bug.)

Tests have been added to the test suite for this situation: they automatically generate random, complex data sets and reload them to ensure everything is working properly. Although this kind of bug is not common in AOF files, in comparison, it is almost impossible for RDB to have this kind of bug.

Back up Redis data

Be sure to back up your database!

Disk failure, node failure, and other problems may cause your data to disappear. Failure to back up is very dangerous.

Redis is very friendly for data backup, because you can copy the RDB file while the server is running: Once the RDB file is created, no modifications will be made. When the server wants to create a new RDB file, it first saves the contents of the file in a temporary file. When the temporary file is written, the program uses rename(2) to atomically replace the original RDB file with the temporary file.

This means that it is absolutely safe to copy RDB files at any time.

Recommendation:

Create a regular task (cron job), back up an RDB file to a folder every hour, and backup it every day Back up an RDB file to another folder.

Make sure that the snapshot backups have corresponding date and time information. Every time you execute the regular task script, use the find command to delete expired snapshots: For example, you can keep every hour in the last 48 hours. Snapshots, you can also keep daily snapshots for the last one or two months.

At least once a day, back up the RDB outside of your data center, or at least outside the physical machine where you are running the Redis server.

Disaster recovery backup

Redis disaster recovery backup is basically to back up the data and transfer these backups to multiple different servers. External data center.

Disaster recovery backup can keep the data in a safe state even if a serious problem occurs in the main data center where Redis runs and generates snapshots.

Some Redis users are entrepreneurs. They don’t have a lot of money to waste, so the following are some practical and cheap disaster recovery backup methods:

Amazon S3, and other services like S3, is a good place to build a disaster backup system. The easiest way is to encrypt and transfer your hourly or daily RDB backups to S3. Encryption of data can be accomplished with the gpg -c command (symmetric encryption mode). Remember to keep your passwords in several different, secure places (for example, you can copy them to the most important people in your organization). Using multiple storage services to save data files at the same time can improve data security.

Transmitting snapshots can be done using SCP (component of SSH). The following is a simple and secure transfer method: Buy a VPS (Virtual Private Server) far away from your data center, install SSH, create a passwordless SSH client key, and add this key to the VPS's authorized_keys file , so that the snapshot backup file can be transferred to this VPS. For the best data security, purchase a VPS from at least two different providers for disaster recovery.

It should be noted that this type of disaster recovery system can easily fail if it is not handled carefully.

At a minimum, after the file transfer is complete, you should check whether the size of the transferred backup file is the same as the size of the original snapshot file. If you are using a VPS, you can also confirm whether the file is transferred completely by comparing the SHA1 checksum of the file.

In addition, you also need an independent alarm system to notify you when the transfer (transfer) responsible for transferring backup files fails.

Redis master-slave replication

Redis supports the simple and easy-to-use master-slave replication function, which allows The slave server becomes an exact replica of the master server.

The following are several important aspects about Redis replication functionality:

Redis uses asynchronous replication. Starting from Redis 2.8, the slave server will report the processing progress of the replication stream to the master server once per second.

A master server can have multiple slave servers.

Not only the master server can have slave servers, but the slave servers can also have their own slave servers. Multiple slave servers can form a graph-like structure.

The replication function does not block the master server: even if one or more slave servers are undergoing initial synchronization, the master server can continue to process command requests.

The replication function will not block the slave server: as long as the corresponding settings are made in the redis.conf file, the server can use the old version of the data set to process command queries even if the slave server is undergoing initial synchronization.

However, during the period when the old version of the data set is deleted from the server and the new version of the data set is loaded, the connection request will be blocked.

You can also configure the slave server to send an error to the client when the connection to the master server is disconnected.

The replication function can be used purely for data redundancy, or it can improve scalability by having multiple slave servers handle read-only command requests: For example, the heavy SORT command can Leave it to the subordinate node to run.

You can use the replication function to exempt the master server from performing persistence operations: Just turn off the persistence function of the master server, and then let the slave server perform the persistence operations.

When turning off the main server persistence, the data security of the replication function.

When configuring the Redis replication function, it is strongly recommended to turn on the persistence function of the main server. Otherwise, the deployed service should avoid being automatically pulled up due to latency and other issues.

Case:

Assume that node A is the main server and persistence is turned off. And node B and node C copy data from node A

Node A crashes, and then restarts node A by automatically pulling up the service. Since the persistence of node A is turned off, restart There will be no data after that

Node B and node C will copy data from node A, but A’s data is empty, so the data copies they save will be deleted.

When persistence on the main server is turned off and the automatic pull-up process is turned on at the same time, it is very dangerous even if Sentinel is used to achieve high availability of Redis. Because the main server may be pulled up so quickly that Sentinel does not detect that the main server has been restarted within the configured heartbeat interval, and then the above data loss process will still be performed.

Data security is extremely important at all times, so the main server should be prohibited from automatically pulling up persistence when it is turned off.

Slave server configuration

Configuring a slave server is very simple, just add the following line to the configuration file:

slaveof 192.168.1.1 6379

Another method is to call the SLAVEOF command, enter the IP and port of the main server, and then the synchronization will start

127.0.0.1:6379> SLAVEOF 192.168.1.1 10086

OK

Read-only slave server

Starting from Redis 2.6, the slave server supports read-only mode, and this mode is the default mode of the slave server.

The read-only mode is controlled by the slave-read-only option in the redis.conf file. This mode can also be turned on or off through the CONFIG SET command.

The read-only slave server will refuse to execute any write commands, so data will not be accidentally written to the slave server due to operational errors.

In addition, executing the command SLAVEOF NO ONE on a slave server will cause the slave server to turn off the replication function and transition from the slave server back to the master server. The original synchronized data set will not be discarded.

Using the feature of "SLAVEOF NO ONE will not discard the synchronized data set", when the main server fails, the slave server can be used as the new main server, thereby achieving uninterrupted operation.

Relevant configuration of the slave server:

If the master server sets a password through the requirepass option, then in order to allow the synchronization operation of the slave server to proceed smoothly , we must also make corresponding authentication settings for the slave server.

For a running server, you can use the client to enter the following command:

config set masterauth

To set this password permanently, you can add it to the configuration file :

masterauth

The master server will only perform write operations when there are at least N slave servers

Starting from Redis 2.8, in order to ensure data security , you can configure the master server to execute the write command only when there are at least N slave servers currently connected.

However, because Redis uses asynchronous replication, the write data sent by the master server may not be received by the slave server. Therefore, the possibility of data loss still exists.

Here's how this feature works:

The slave server pings the master server once per second and reports the status of the replication stream. Handle the situation.

The master server will record the last time each slave server sent PING to it.

Users can specify the maximum network delay min-slaves-max-lag through configuration, and the minimum number of slave servers required to perform write operations min-slaves-to-write.

If there are at least min-slaves-to-write slave servers, and the latency values of these servers are less than min-slaves-max-lag seconds, then the master server will perform the write operation requested by the client. .

On the other hand, if the conditions do not meet the conditions specified by min-slaves-to-write and min-slaves-max-lag, then the write operation will not be executed, and the main server will execute the request The client for the write operation returned an error.

The following are the two options of this feature and their required parameters:

min-slaves-to-writemin-slaves-max-lag

The above is the entire content of this article, I hope it will be helpful to everyone's learning. For more exciting content, you can pay attention to the relevant tutorial columns of the PHP Chinese website! ! !

The above is the detailed content of Introduction to Redis persistence and master-slave replication mechanism. For more information, please follow other related articles on the PHP Chinese website!

Commonly used database software

Commonly used database software What are the in-memory databases?

What are the in-memory databases? Which one has faster reading speed, mongodb or redis?

Which one has faster reading speed, mongodb or redis? How to use redis as a cache server

How to use redis as a cache server How redis solves data consistency

How redis solves data consistency How do mysql and redis ensure double-write consistency?

How do mysql and redis ensure double-write consistency? What data does redis cache generally store?

What data does redis cache generally store? What are the 8 data types of redis

What are the 8 data types of redis