March 7 news: AMD announced today that users can run GPT-based large language models (LLMs) locally to build their own AI chatbots.

AMD says users can run LLMs and AI chatbots locally on devices including Ryzen 7000 and Ryzen 8000 series APUs equipped with AMD’s new XDNA NPU, as well as Radeon RX 7000 series GPUs with built-in AI acceleration cores.

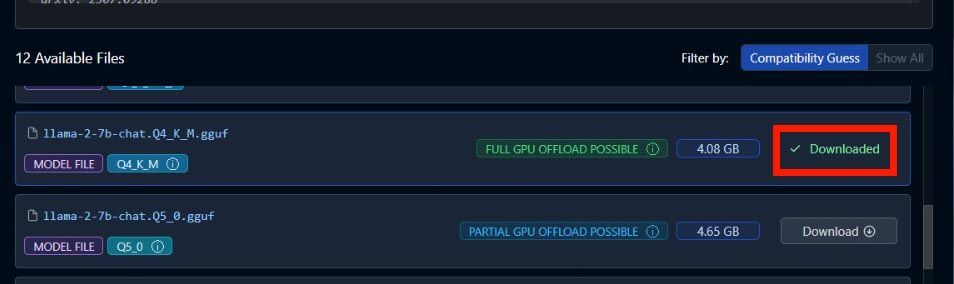

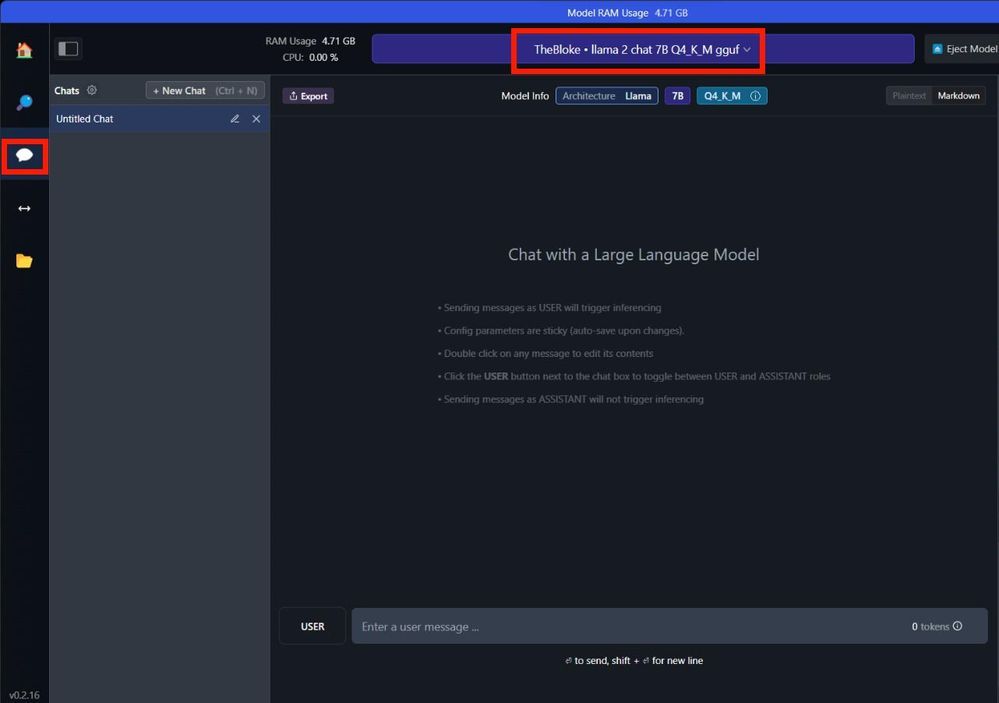

AMD detailed the steps in the announcement. To run Mistral with 7 billion parameters, search for and download "TheBloke/OpenHermes-2.5-Mistral-7B-GGUF"; to run Llama 2 with 7 billion parameters, search for and download "TheBloke/Llama-2-7B-Chat-GGUF".
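As a rough illustration, the two search strings above can be kept in a small lookup table so the right one is picked for each model. This is only a sketch: the keys `mistral-7b` and `llama-2-7b` and the helpers `repo_for` and `repo_url` are hypothetical names, and the assumption that these repositories are hosted on Hugging Face is not stated in the announcement text quoted here.

```python
# Hypothetical helper: names here are illustrative, not part of AMD's announcement.
MODEL_REPOS = {
    "mistral-7b": "TheBloke/OpenHermes-2.5-Mistral-7B-GGUF",
    "llama-2-7b": "TheBloke/Llama-2-7B-Chat-GGUF",
}


def repo_for(model: str) -> str:
    """Return the search string for a model key (case-insensitive)."""
    key = model.strip().lower()
    if key not in MODEL_REPOS:
        raise KeyError(f"unknown model {model!r}; choose from {sorted(MODEL_REPOS)}")
    return MODEL_REPOS[key]


def repo_url(model: str) -> str:
    """Build a page URL for the repository.

    Assumption: the repositories are hosted on Hugging Face under these IDs.
    """
    return f"https://huggingface.co/{repo_for(model)}"


if __name__ == "__main__":
    # Print each model key next to the repository it maps to.
    for name in MODEL_REPOS:
        print(name, "->", repo_url(name))
```

The helper only resolves names; it does not download anything, so the model files still need to be fetched with whatever tool the announcement's steps call for.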
