This article introduces how to develop a deep learning model that detects images generated by artificial intelligence.

Many deep learning methods for detecting AI-generated images rely on how the image was generated or on the characteristics and semantics of the image. These models can usually recognize only specific kinds of AI-generated objects, such as people, faces, or cars.

However, the method proposed in the paper "Rich and Poor Texture Contrast: A Simple yet Effective Approach for AI-generated Image Detection" overcomes these limitations and has broader applicability. We’ll dive into the paper to see how it addresses the problems faced by other methods of detecting AI-generated images.

When we use a model such as ResNet-50 to recognize AI-generated images, the model learns from the semantics of the images. If we train a model to recognize AI-generated car images, using real car images and a variety of AI-generated car images, the model learns only about cars from this data and cannot accurately identify other objects.

Training on data that covers many kinds of objects is possible, but it takes a long time and reaches only about 72% accuracy on unseen data. Accuracy can be improved by training longer and using more data, but we cannot obtain unlimited training data.

In other words, current detection models generalize poorly. To solve this problem, the paper proposes the following method:

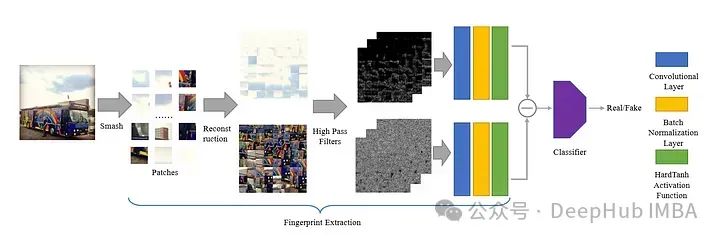

The paper introduces a method that prevents the model from learning AI-generated features from the shapes in an image during training. The authors call this method Smash&Reconstruction.

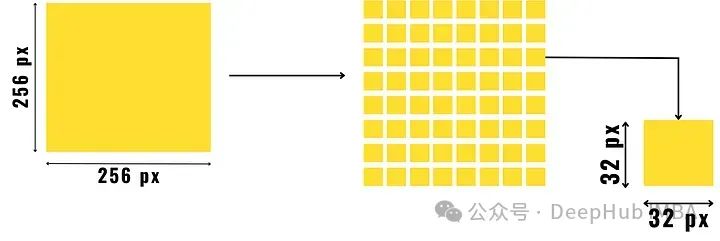

In this method, the image is divided into small patches of a predetermined size, which are then rearranged to generate a new image. This is only a brief overview; additional steps are required before the final input image for the detection model is formed.
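The "smash" step can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the function name `smash`, the non-overlapping grid layout, and the choice to drop incomplete edge patches are all assumptions made for the example.

```python
import numpy as np

def smash(image, patch_size):
    """Split an H x W (x C) image into non-overlapping square patches.

    Assumption: pixels at the right/bottom edge that do not fill a whole
    patch are simply dropped.
    """
    h, w = image.shape[:2]
    rows, cols = h // patch_size, w // patch_size
    patches = [
        image[r * patch_size:(r + 1) * patch_size,
              c * patch_size:(c + 1) * patch_size]
        for r in range(rows)
        for c in range(cols)
    ]
    return np.stack(patches)

# Toy 8x8 grayscale "image" whose pixel values are 0..63.
image = np.arange(8 * 8, dtype=np.float64).reshape(8, 8)
patches = smash(image, patch_size=4)
print(patches.shape)  # (4, 4, 4): four patches of 4x4 pixels
```

The patches are returned in row-major order, so they can later be scored and rearranged independently of where they came from in the image.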

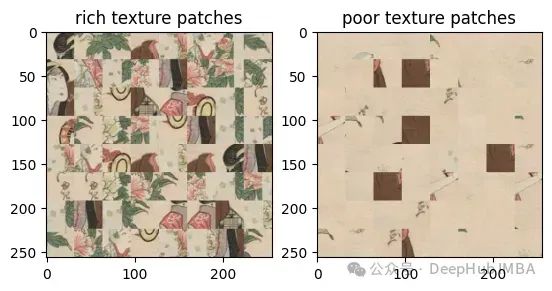

After dividing the image into small patches, we split the patches into two groups: texture-rich patches and texture-poor patches.

A detailed area of an image, such as an object or the boundary between two areas of contrasting color, becomes a texture-rich patch. Texture-rich areas show large variation in pixel values compared with texture-poor areas, which are mostly background such as the sky or still water.

Start by dividing the image into small patches of a predetermined size, as shown in the image above. Then compute the pixel gradient of each patch (that is, take the differences in pixel values along the horizontal, diagonal, and anti-diagonal directions and add them together) and separate the patches into texture-rich and texture-poor groups.
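As a rough sketch of this scoring step, the function below sums absolute differences between neighboring pixels of a grayscale patch. The name `texture_score` is hypothetical, and the vertical term is an assumption: the text above lists only the horizontal, diagonal, and anti-diagonal directions.

```python
import numpy as np

def texture_score(patch):
    """Sum of absolute differences between neighboring pixels of a 2-D patch."""
    p = patch.astype(np.float64)
    horizontal = np.abs(p[:, :-1] - p[:, 1:]).sum()
    vertical = np.abs(p[:-1, :] - p[1:, :]).sum()  # assumption: not listed in the text
    diagonal = np.abs(p[:-1, :-1] - p[1:, 1:]).sum()
    anti_diagonal = np.abs(p[1:, :-1] - p[:-1, 1:]).sum()
    return float(horizontal + vertical + diagonal + anti_diagonal)

flat = np.full((4, 4), 7.0)                    # uniform patch, like clear sky
checker = np.indices((4, 4)).sum(axis=0) % 2   # checkerboard of 0s and 1s
print(texture_score(flat), texture_score(checker))  # 0.0 24.0
```

A uniform patch scores zero, while the checkerboard scores high because every horizontal and vertical neighbor differs, which is the behavior needed to rank patches from texture-poor to texture-rich.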

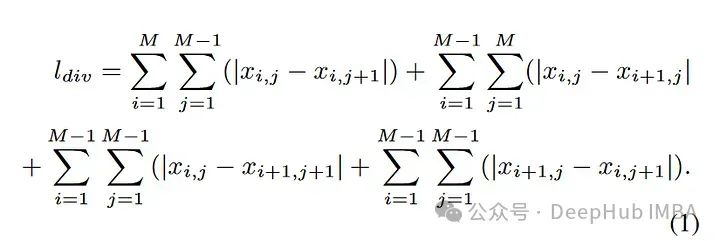

Compared with texture-poor patches, texture-rich patches have higher pixel gradient values. The paper gives a formula for this gradient value, which sums the absolute differences between neighboring pixels along each direction.
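The formula itself did not survive in this article, so the following is a reconstruction from the description above rather than a quotation. It assumes an $M \times M$ patch with pixel value $x_{i,j}$ at row $i$ and column $j$; the symbol names and the vertical (second) term are assumptions, since the text names only the horizontal, diagonal, and anti-diagonal directions.

$$
l_{\text{div}} = \sum_{i=1}^{M}\sum_{j=1}^{M-1} \left| x_{i,j} - x_{i,j+1} \right|
+ \sum_{i=1}^{M-1}\sum_{j=1}^{M} \left| x_{i,j} - x_{i+1,j} \right|
+ \sum_{i=1}^{M-1}\sum_{j=1}^{M-1} \left| x_{i,j} - x_{i+1,j+1} \right|
+ \sum_{i=1}^{M-1}\sum_{j=1}^{M-1} \left| x_{i+1,j} - x_{i,j+1} \right|
$$

The four terms correspond to the horizontal, vertical, diagonal, and anti-diagonal neighbor differences, in that order. A larger $l_{\text{div}}$ means a more richly textured patch.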

The patches are then separated according to this pixel contrast to obtain two composite images, one built from texture-rich patches and one from texture-poor patches. The paper calls this complete process "Smash&Reconstruction".

This allows the model to learn the details of the texture instead of the content of the objects in the image.
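The "reconstruction" step might look like the sketch below, which tiles the highest-scoring patches into one image and the lowest-scoring patches into another. The function name `reconstruct`, the `grid` parameter, and the ordering of patches inside each composite are assumptions made for illustration; the scores are passed in so that any texture measure can be used.

```python
import numpy as np

def reconstruct(patches, scores, grid):
    """Return (rich, poor) composites, each a grid x grid tiling of patches.

    Assumption: the rich image uses the grid*grid highest-scoring patches
    in descending order, the poor image the lowest-scoring in ascending order.
    """
    n = grid * grid
    order = np.argsort(scores)            # ascending texture score
    poor_idx, rich_idx = order[:n], order[::-1][:n]

    def tile(idx):
        rows = [np.hstack(list(patches[idx[r * grid:(r + 1) * grid]]))
                for r in range(grid)]
        return np.vstack(rows)

    return tile(rich_idx), tile(poor_idx)

# Eight toy 2x2 patches; patch k is filled with the value k.
patches = np.stack([np.full((2, 2), k, dtype=np.float64) for k in range(8)])
scores = np.array([5.0, 1.0, 7.0, 3.0, 0.0, 6.0, 2.0, 4.0])
rich, poor = reconstruct(patches, scores, grid=2)
print(rich.shape, poor.shape)  # (4, 4) (4, 4)
```

Because the composites are built only from patch scores, the spatial layout of the original image is discarded, which is what keeps the model from relying on object shapes.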

Most fingerprint-based methods are limited by the image generation technology: these models and algorithms can only detect images produced by a specific method or by similar methods (such as diffusion models, GANs, or other CNN-based image generators).

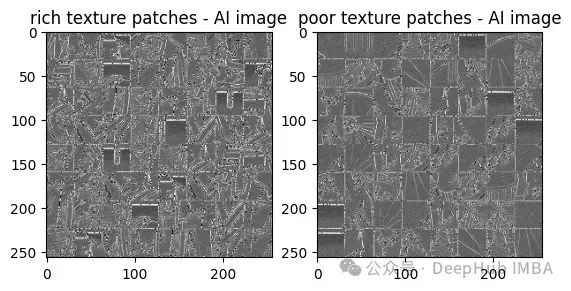

To address exactly this problem, the paper divides the image patches into texture-rich and texture-poor groups. The authors then propose a new way of identifying fingerprints in AI-generated images, which gives the paper its title: measure the contrast between the texture-rich and texture-poor patches of an image after applying 30 high-pass filters.

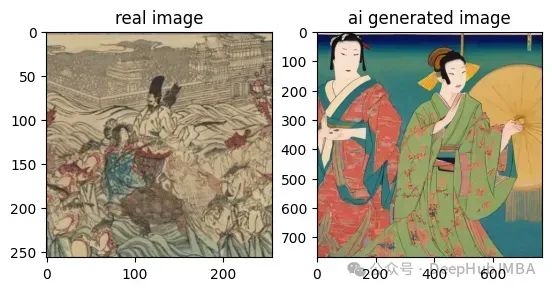

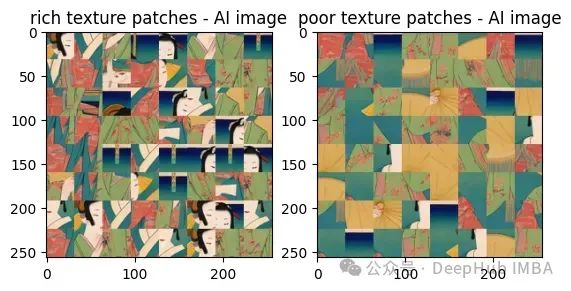

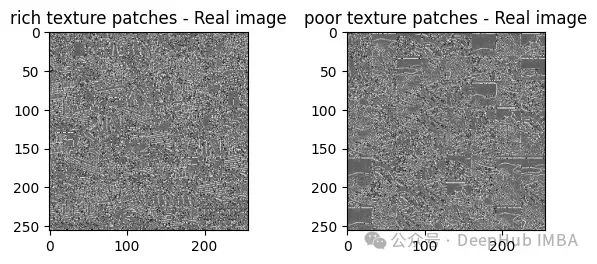

For better understanding, we compare a real image and an AI-generated image side by side.

It is difficult to tell these two images apart with the naked eye, right?

The paper first applies the Smash&Reconstruction process:

Next, the contrast for each image after applying the 30 high-pass filters:

From these results we can see that, compared with the real image, the contrast between the texture-rich and texture-poor patches of the AI-generated image is much higher.

In this way the difference becomes visible to the naked eye, so the contrast result can be fed into a trainable model and its output passed to a classifier. This is the goal of the paper. The model architecture is as follows:

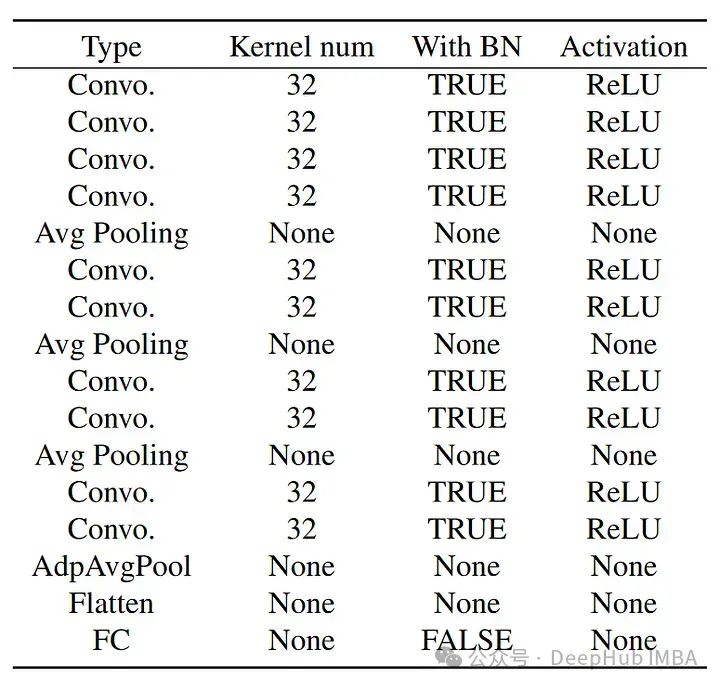

The structure of the classifier is as follows:

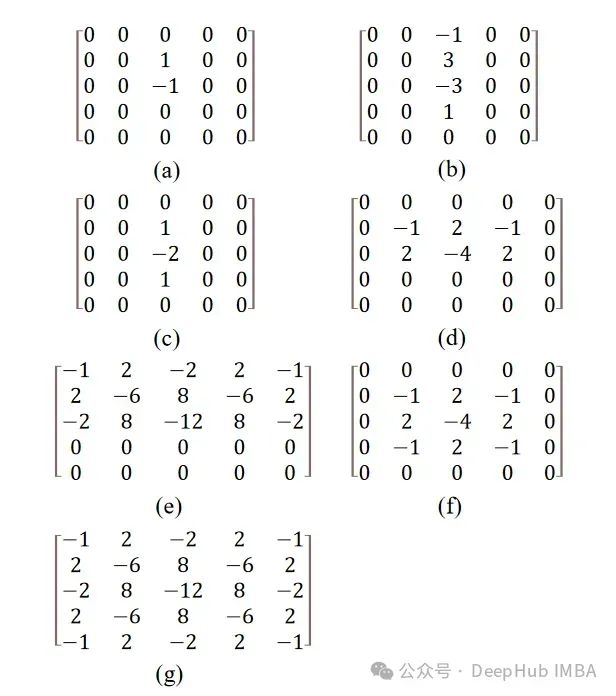

The paper mentions 30 high-pass filters, which were originally introduced for steganalysis.

Note: There are many ways to perform image steganography. Broadly speaking, any technique that hides information in a picture so that it is difficult to discover by ordinary means can be called image steganography. There is a large body of research on steganalysis, and interested readers can consult the relevant literature.

Each filter here is a matrix of values applied to the image by convolution. The filters are high-pass filters, which let only the high-frequency features of the image pass through. High-frequency features typically include edges, fine details, and rapid changes in intensity or color.
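To make the idea of a high-pass filter concrete, here is a small sketch that convolves an image with a 3x3 Laplacian kernel. The Laplacian is a standard high-pass kernel chosen only for illustration; it is not claimed to be one of the paper's 30 filters, and the helper name `convolve2d_valid` is hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def convolve2d_valid(image, kernel):
    """2-D convolution without padding, so the output shrinks by kernel size - 1."""
    flipped = kernel[::-1, ::-1]  # convolution flips the kernel; correlation does not
    windows = sliding_window_view(image, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, flipped)

# Illustrative high-pass kernel (assumption: not taken from the paper).
laplacian = np.array([[0.0, -1.0, 0.0],
                      [-1.0, 4.0, -1.0],
                      [0.0, -1.0, 0.0]])

flat = np.full((5, 6), 10.0)   # no detail anywhere
edge = np.zeros((5, 6))
edge[:, 3:] = 10.0             # vertical step edge between columns 2 and 3

print(convolve2d_valid(flat, laplacian))  # all zeros: flat regions are suppressed
print(convolve2d_valid(edge, laplacian))  # nonzero only next to the edge
```

The flat image produces an all-zero response, while the step edge produces the row `[0, -10, 10, 0]`, showing that only the rapid change in intensity passes through.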

All filters except (f) and (g) are rotated by various angles before being reapplied to the image, giving a total of 30 filters. The rotation of these matrices is done with affine transformations, implemented using SciPy.
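The idea of building a filter bank by rotating a base kernel can be sketched as follows. Note the substitution: this example uses `np.rot90`, which only handles 90-degree steps, instead of the SciPy affine transformations mentioned above, and the first-order difference kernel is an illustrative choice rather than one confirmed to come from the paper.

```python
import numpy as np

# Illustrative first-order difference kernel: right neighbor minus center.
base = np.array([[0.0, 0.0, 0.0],
                 [0.0, -1.0, 1.0],
                 [0.0, 0.0, 0.0]])

# Rotate counterclockwise by 0, 90, 180 and 270 degrees to get four
# orientations of the same high-pass kernel.
bank = [np.rot90(base, k) for k in range(4)]

for kernel in bank:
    print(kernel, end="\n\n")
```

Each rotated kernel still sums to zero, so it remains a high-pass filter, but it responds to intensity changes in a different direction. Rotations by other angles, such as 45 degrees, would need an interpolating or index-remapping transform instead of `np.rot90`.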

The results of the paper reach a validation accuracy of 92%, and it is said that more training would give even better results. This is very interesting research. I also found the training code, which you can study in depth if you are interested:

Paper: https://arxiv.org/abs/2311.12397

Code: https://github.com/hridayK/Detection-of-AI-generated-images
