Original title: SIMPL: A Simple and Efficient Multi-agent Motion Prediction Baseline for Autonomous Driving

Paper link: https://arxiv.org/pdf/2402.02519.pdf

Code link: https://github.com/HKUST-Aerial-Robotics/SIMPL

Author affiliation: Hong Kong University of Science and Technology, DJI

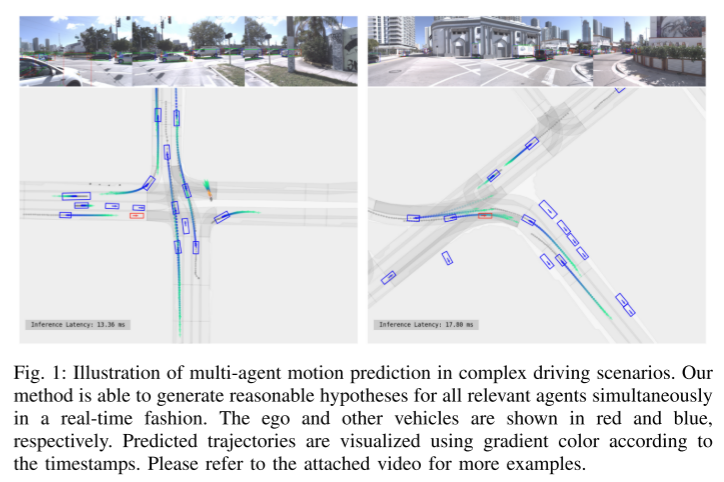

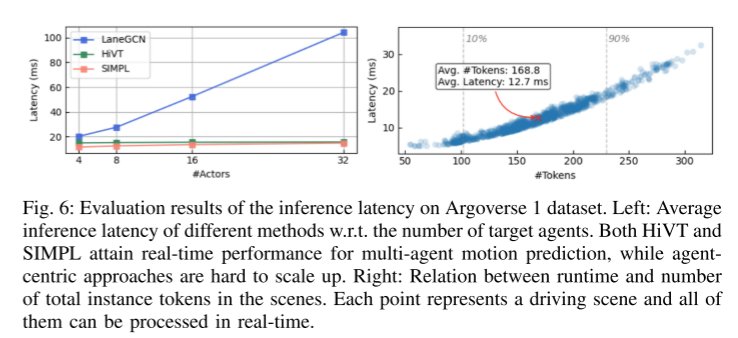

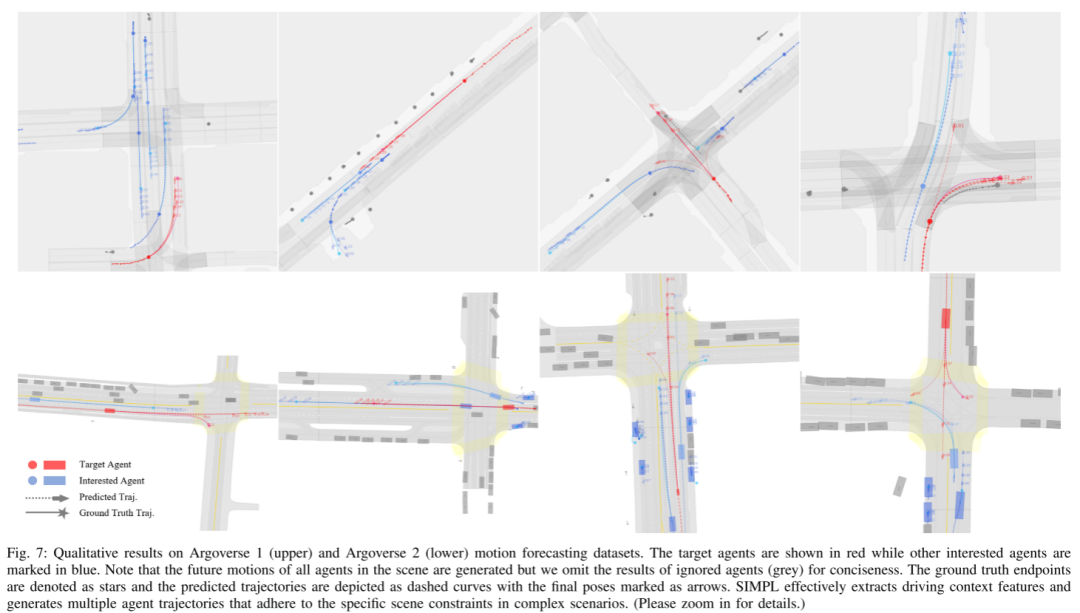

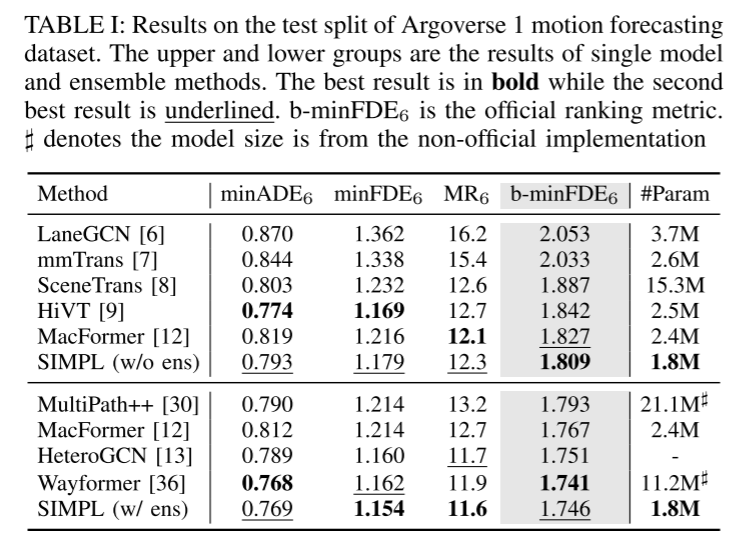

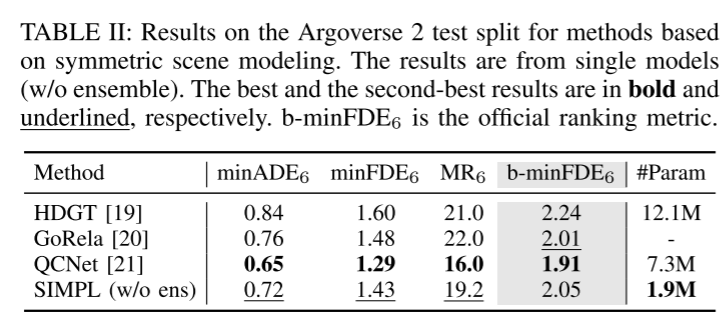

This paper proposes a simple and efficient motion prediction baseline (SIMPL) for autonomous vehicles. Unlike traditional agent-centric methods (which are accurate but require repeated computation) and scene-centric methods (whose accuracy and generality suffer), SIMPL delivers real-time, accurate motion predictions for all relevant traffic participants. To improve both accuracy and inference speed, the paper proposes a compact and efficient global feature fusion module that performs directed message passing in a symmetric manner, enabling the network to predict the future motion of all road users in a single feedforward pass while reducing the accuracy loss caused by viewpoint shifts. Furthermore, the paper investigates Bernstein basis polynomials for continuous trajectory parameterization in the trajectory decoder, allowing states and their higher-order derivatives to be evaluated at any desired time point, which is valuable for downstream planning tasks. As a strong baseline, SIMPL shows highly competitive performance on the Argoverse 1 and 2 motion prediction benchmarks compared with other state-of-the-art methods. Its lightweight design and low inference latency also make SIMPL highly extensible and promising for real-world onboard deployment.

Predicting the movement of surrounding traffic participants is critical for autonomous vehicles, especially for downstream decision-making and planning modules. Accurate prediction of intentions and trajectories improves both safety and ride comfort.

For learning-based motion prediction, one of the most important topics is context representation. Early methods usually represented the surrounding scene as a multi-channel bird's-eye-view image [1]-[4]. In contrast, recent research increasingly adopts vectorized scene representations [5]-[13], in which locations and geometries are annotated using point sets or polylines with geographic coordinates, thereby improving fidelity and expanding the receptive field. However, for both rasterized and vectorized representations, a key question remains: how should we choose the reference frame for all these elements? A straightforward approach is to describe all instances within a shared coordinate system (scene-centric), such as one centered on the autonomous vehicle, and use the coordinates directly as input features. This makes it possible to predict multiple target agents in a single feedforward pass [8, 14]. However, using global coordinates as input (which often vary over a wide range) greatly exacerbates the inherent complexity of the task, resulting in degraded network performance and limited adaptability to new scenarios. To improve accuracy and robustness, a common solution is to normalize the scene context according to the current state of the target agent [5, 7, 10]-[13] (agent-centric). This means that normalization and feature encoding must be repeated for each target agent, which yields better performance at the expense of redundant computation. It is therefore necessary to explore a method that can efficiently encode the features of multiple targets while remaining robust to viewpoint changes.
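The trade-off described above can be made concrete with a small sketch. It is not taken from the SIMPL code base: the function names `to_agent_frame` and `move_scene`, the lane polyline, and the agent poses are all hypothetical, chosen only to illustrate what agent-centric normalization does and why it has to be repeated per agent.

```python
import numpy as np

def to_agent_frame(points, agent_xy, agent_heading):
    """Express global 2D points in one agent's local frame.

    In the local frame the agent sits at the origin and faces +x.
    """
    c, s = np.cos(agent_heading), np.sin(agent_heading)
    # Rows are the agent's x- and y-axes written in global coordinates,
    # so the product below projects each offset onto those axes.
    axes = np.array([[c, s], [-s, c]])
    offsets = np.asarray(points, dtype=float) - np.asarray(agent_xy, dtype=float)
    return offsets @ axes.T

def move_scene(points, angle, shift):
    """Rotate then translate global points (a change of global viewpoint)."""
    c, s = np.cos(angle), np.sin(angle)
    rot = np.array([[c, -s], [s, c]])
    return np.asarray(points, dtype=float) @ rot.T + np.asarray(shift, dtype=float)

# Hypothetical scene: one lane polyline and two target agents (x, y, heading).
lane = np.array([[10.0, 5.0], [12.0, 6.0], [14.0, 7.0]])
agents = [(8.0, 4.0, 0.3), (15.0, 9.0, -2.0)]

# Agent-centric input: the whole context is re-normalized once per agent.
local_views = [to_agent_frame(lane, (x, y), h) for x, y, h in agents]

# The same scene observed from a different global viewpoint.
angle, shift = 1.0, np.array([100.0, -50.0])
lane_moved = move_scene(lane, angle, shift)
agents_moved = [(*move_scene([[x, y]], angle, shift)[0], h + angle)
                for x, y, h in agents]
local_views_moved = [to_agent_frame(lane_moved, (x, y), h)
                     for x, y, h in agents_moved]
```

In this sketch the raw global coordinates (`lane` versus `lane_moved`) differ completely between the two viewpoints, while each agent's local view is unchanged. That is the robustness agent-centric methods gain, and the list comprehension over `agents` is the per-agent repetition they pay for it.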

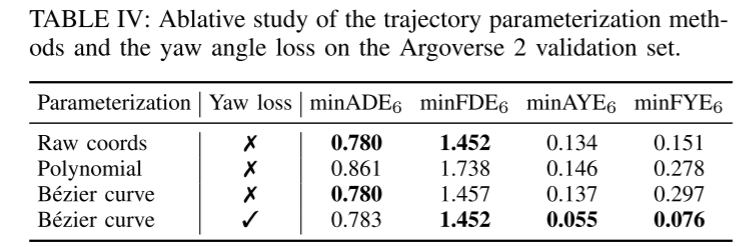

For modules downstream of motion prediction, such as decision-making and motion planning, it is not enough to consider future positions alone; heading, speed, and other higher-order derivatives also matter. For example, the predicted headings of surrounding vehicles play a key role in shaping future space-time occupancy, which is a key factor in ensuring safe and robust motion planning [15, 16]. Furthermore, predicting higher-order quantities independently, without adhering to physical constraints, may lead to inconsistent predictions [17, 18]. For example, a model may output a positional displacement even though the predicted velocity is zero, which confuses the planning module.

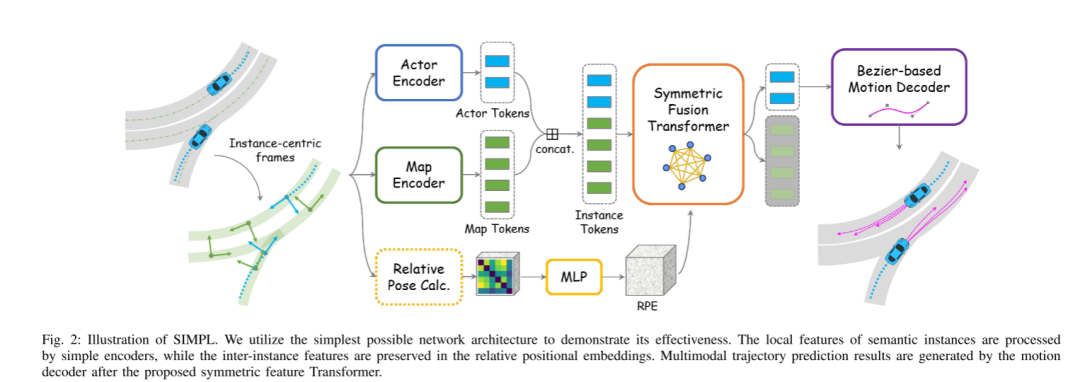

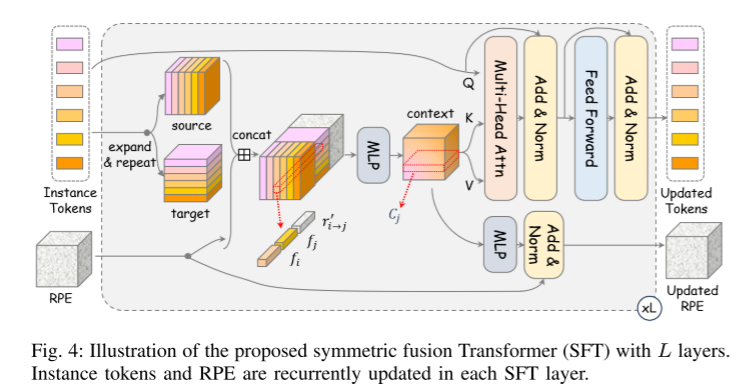

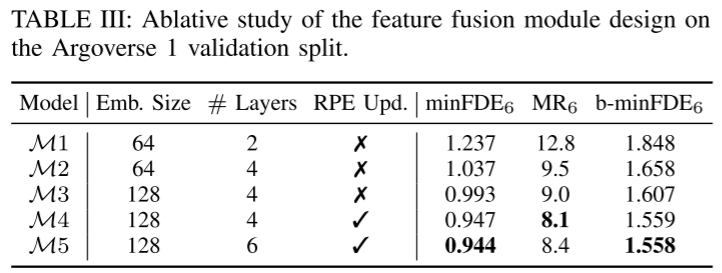

This paper introduces SIMPL (Simple and Efficient Motion Prediction Baseline) to address the key issue of multi-agent trajectory prediction in autonomous driving systems. The method first adopts an instance-centric scene representation and then introduces the symmetric fusion Transformer (SFT), which can effectively predict the trajectories of all agents in a single feedforward pass while maintaining accuracy and robustness through viewpoint invariance. Compared with other methods based on symmetric context fusion, SFT is simpler, more lightweight, and easier to implement, making it suitable for onboard deployment.
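To illustrate why an instance-centric representation with relative poses is viewpoint invariant, here is a minimal sketch. It assumes each instance (agent or lane segment) is summarized by an anchor position and heading, and that a pairwise relative pose is described by the heading difference, the relative azimuth, and the distance, with the angles encoded through sine and cosine. The function name `relative_pose_features` and this exact five-value layout are assumptions made for illustration, not a statement of what the SIMPL repository implements.

```python
import numpy as np

def relative_pose_features(xy, heading):
    """Pairwise relative poses between instance anchors.

    xy:      (N, 2) anchor positions in any shared global frame.
    heading: (N,)   anchor headings in radians, in the same frame.
    Returns an (N, N, 5) array whose entry [i, j] describes instance j
    as seen from instance i:
    [sin(alpha), cos(alpha), sin(beta), cos(beta), distance].
    """
    xy = np.asarray(xy, dtype=float)
    heading = np.asarray(heading, dtype=float)
    delta = xy[None, :, :] - xy[:, None, :]          # delta[i, j] = p_j - p_i
    dist = np.linalg.norm(delta, axis=-1)
    alpha = heading[None, :] - heading[:, None]      # heading difference
    beta = np.arctan2(delta[..., 1], delta[..., 0]) - heading[:, None]
    # The azimuth is undefined for coincident anchors (e.g. i == j);
    # fix it to zero so those entries do not depend on the global frame.
    beta = np.where(dist > 0.0, beta, 0.0)
    return np.stack(
        [np.sin(alpha), np.cos(alpha), np.sin(beta), np.cos(beta), dist],
        axis=-1,
    )

# Hypothetical anchors: three instances with positions and headings.
xy = np.array([[0.0, 0.0], [1.0, 1.0], [3.0, -2.0]])
heading = np.array([0.0, np.pi / 2, -1.0])
feats = relative_pose_features(xy, heading)

# Re-express the same scene in a rotated and shifted global frame.
angle, shift = 0.7, np.array([50.0, -20.0])
c, s = np.cos(angle), np.sin(angle)
rot = np.array([[c, -s], [s, c]])
feats_moved = relative_pose_features(xy @ rot.T + shift, heading + angle)
```

Because every entry depends only on how two instances sit relative to each other, `feats` and `feats_moved` agree up to floating-point error. A fusion module that consumes local instance features together with such pairwise terms therefore never sees global coordinates, which is what allows all agents to be handled in one pass without per-agent re-normalization.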

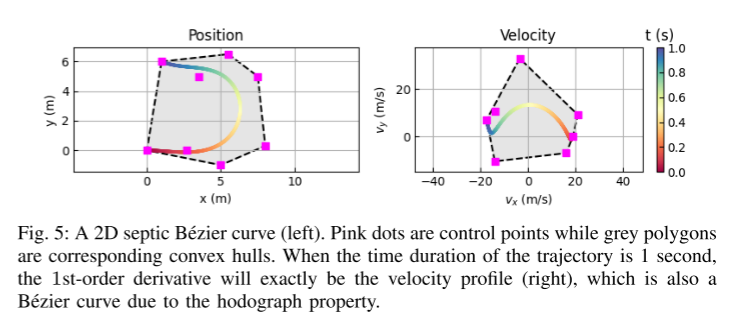

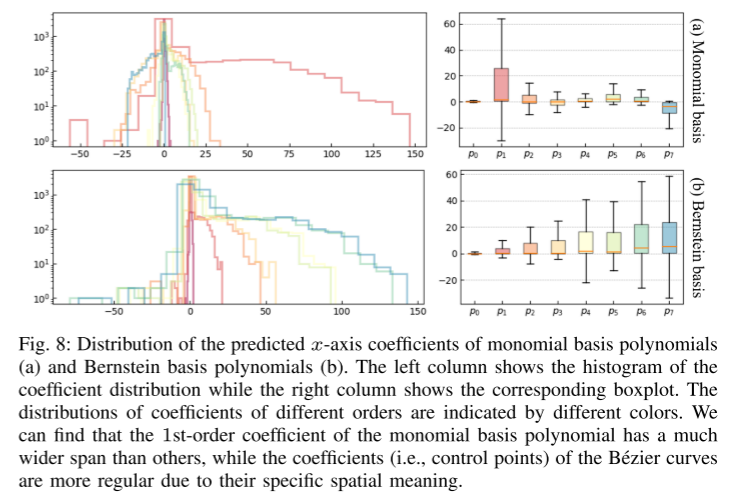

Second, this paper introduces a novel parameterization of the predicted trajectories based on Bernstein basis polynomials (i.e., Bezier curves). This continuous representation ensures smoothness and makes it easy to evaluate the exact state and its higher-order derivatives at any given point in time. The paper's empirical study shows that learning to predict the control points of Bezier curves is more effective and numerically stable than estimating the coefficients of monomial basis polynomials.
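The following sketch shows how a trajectory given by Bezier control points can be evaluated at arbitrary times, and how its velocity follows analytically from the same control points. It uses the standard Bernstein basis and the standard derivative formula for Bezier curves; the function names, the 3-second horizon, and the random control points are illustrative assumptions, not values taken from the paper.

```python
import numpy as np
from math import comb

def bernstein_basis(degree, s):
    """Bernstein basis values B_{k,degree}(s) for s in [0, 1]; shape (len(s), degree + 1)."""
    s = np.atleast_1d(np.asarray(s, dtype=float))
    k = np.arange(degree + 1)
    coeff = np.array([comb(degree, i) for i in k], dtype=float)
    return coeff * s[:, None] ** k * (1.0 - s[:, None]) ** (degree - k)

def bezier_position(ctrl, t, horizon):
    """Position at time(s) t in [0, horizon] for control points ctrl of shape (n + 1, 2)."""
    ctrl = np.asarray(ctrl, dtype=float)
    s = np.atleast_1d(np.asarray(t, dtype=float)) / horizon
    return bernstein_basis(len(ctrl) - 1, s) @ ctrl

def bezier_velocity(ctrl, t, horizon):
    """Time derivative of the curve, itself a Bezier curve of one degree less."""
    ctrl = np.asarray(ctrl, dtype=float)
    degree = len(ctrl) - 1
    # Control points of the derivative curve with respect to s (the hodograph).
    hodograph = degree * np.diff(ctrl, axis=0)
    s = np.atleast_1d(np.asarray(t, dtype=float)) / horizon
    # Chain rule: s = t / horizon, so d/dt = (1 / horizon) * d/ds.
    return bernstein_basis(degree - 1, s) @ hodograph / horizon

# Hypothetical septic (degree-7) curve: 8 control points over a 3 s horizon.
horizon = 3.0
ctrl = np.random.default_rng(0).normal(size=(8, 2)).cumsum(axis=0)

times = np.linspace(0.0, horizon, 31)       # any sampling rate can be queried
positions = bezier_position(ctrl, times, horizon)
velocities = bezier_velocity(ctrl, times, horizon)
```

Since the velocity is derived from the same control points rather than predicted by a separate output head, position and velocity cannot contradict each other: if all control points coincide, the curve stays in place and `bezier_velocity` returns zero everywhere.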

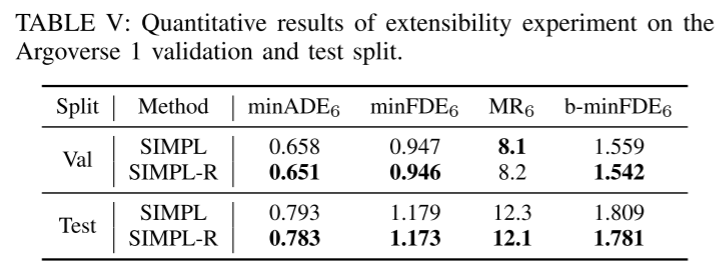

Finally, the proposed components are integrated into a simple and efficient model. The paper evaluates the method on two large-scale motion prediction datasets [22, 23], and the experimental results show that, despite its simplified design, SIMPL remains highly competitive with other state-of-the-art methods. More importantly, SIMPL enables efficient multi-agent trajectory prediction with fewer learnable parameters and lower inference latency without sacrificing quantitative performance, which is promising for real-world onboard deployment. The paper also highlights that, as a strong baseline, SIMPL is highly extensible: its simple architecture facilitates direct integration with the latest advances in motion prediction, providing opportunities to further improve overall performance.

Figure 1: Illustration of multi-agent motion prediction in complex driving scenarios. The approach generates reasonable hypotheses for all relevant agents simultaneously in real time. The ego vehicle and other vehicles are shown in red and blue, respectively. Predicted trajectories are visualized with gradient colors according to their timestamps. Please refer to the attached video for more examples.

Figure 2: SIMPL schematic. The paper uses the simplest possible network architecture to demonstrate the method's effectiveness. Local features of semantic instances are processed by a simple encoder, while inter-instance features are preserved in relative position embeddings. Multimodal trajectory predictions are generated by a motion decoder after the proposed symmetric fusion Transformer.

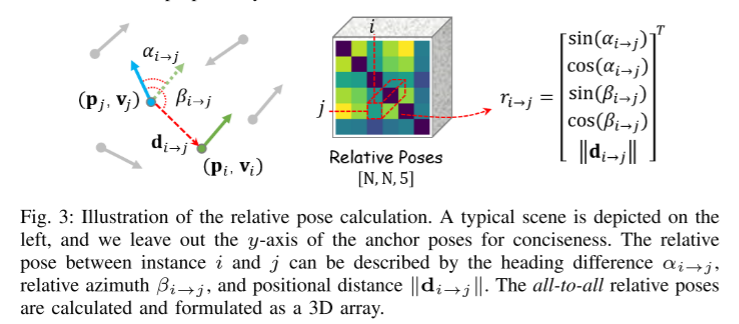

Figure 3: Schematic diagram of relative pose calculation.

Figure 4: Illustration of the proposed L-layer symmetric fusion Transformer (SFT). Instance tokens and relative position embeddings (RPE) are updated recurrently in each SFT layer.

Figure 5: 2D septic Bezier curve (left).
