This robot is named Cassie, and it once set a world record in the 100-meter run. Recently, researchers at the University of California, Berkeley, developed a new deep reinforcement learning algorithm for it, allowing it to master skills such as sharp turns and to withstand various disturbances.

Research on bipedal robots has been under way for decades, but there is still no general framework that enables robust control of diverse locomotion skills. The challenge arises from the complexity of the underactuated dynamics of bipedal robots and the different planning required for each locomotion skill. The key questions the researchers hope to answer are: how can one develop a solution for a high-dimensional, human-sized bipedal robot, and how can it control diverse, agile, and robust legged locomotion skills such as walking, running, and jumping?

A recent study may provide a good solution.

In this work, researchers from Berkeley and other institutions use reinforcement learning (RL) to create controllers for high-dimensional, nonlinear bipedal robots in the real world to address the challenges above. These controllers use the robot's proprioceptive information to adapt to uncertain, time-varying dynamics. They can also adapt to new environments and settings, exploiting the robot's agility to behave robustly in unexpected situations. Furthermore, the framework provides a general recipe for reproducing various bipedal locomotion skills.

- Paper title: Reinforcement Learning for Versatile, Dynamic, and Robust Bipedal Locomotion Control

- Paper link: https://arxiv.org/pdf/2401.16889.pdf

The high dimensionality and nonlinearity of torque-controlled, human-sized bipedal robots may at first appear to be obstacles for the controller. However, these characteristics have the advantage of enabling complex, agile maneuvers through the robot's high-dimensional dynamics.

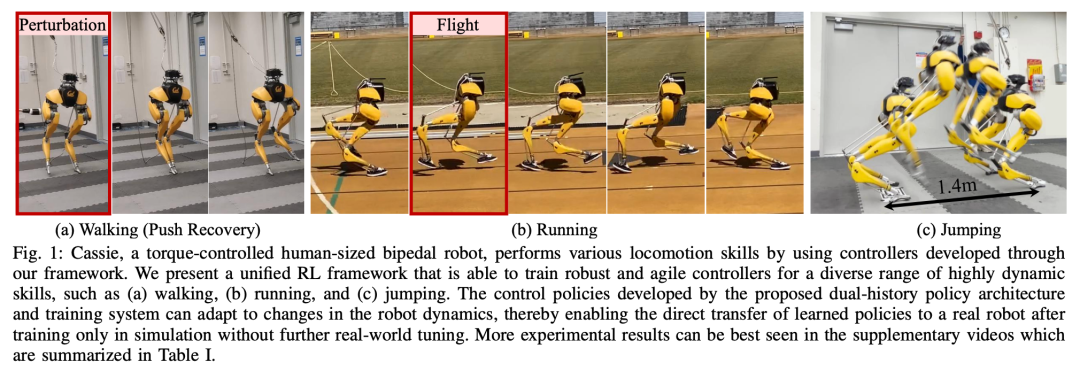

The skills this controller gives the robot are shown in Figure 1, including stable standing, walking, running, and jumping. These skills can also be used to perform a variety of tasks, including walking at different speeds and heights, running at different speeds and in different directions, and jumping to a variety of targets, while remaining robust in real-world deployment. To this end, the researchers use model-free RL, which lets the robot learn by trial and error on the system's full-order dynamics. In addition to the real-world experiments, the paper analyzes in depth the benefits of using RL for legged locomotion control and examines in detail how to structure the learning process to exploit those benefits, such as adaptability and robustness.

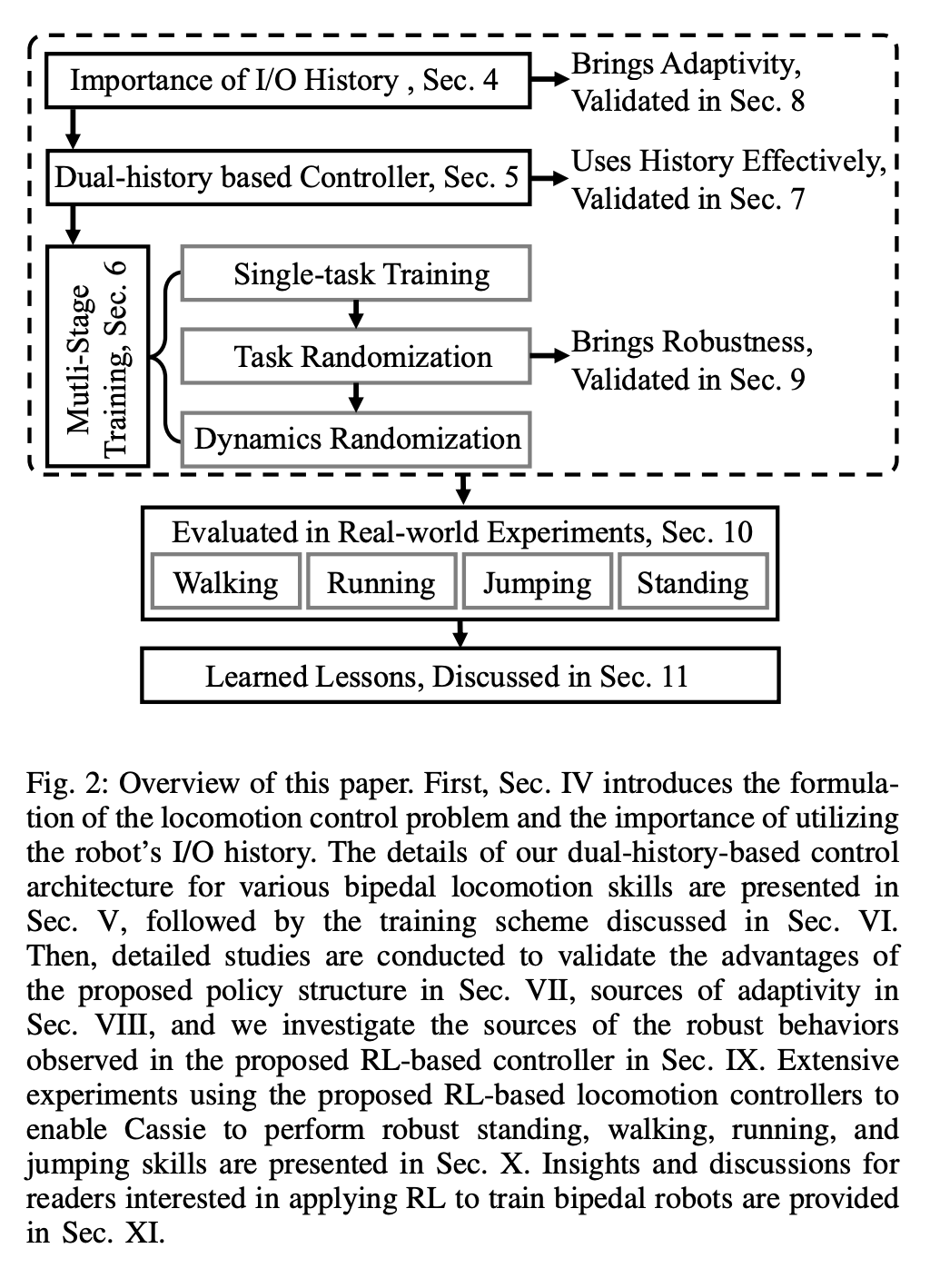

The RL system for general bipedal locomotion control is shown in Figure 2:

Section 4 first introduces the importance of using the robot's I/O history in locomotion control. It shows, from both the control and RL perspectives, that the robot's long-term I/O history enables system identification and state estimation during real-time control.

Section 5 introduces the core of the research: a novel control architecture that uses a dual history of the bipedal robot's I/O. Specifically, the architecture leverages not only the robot's long-term I/O history but also its short-term I/O history.

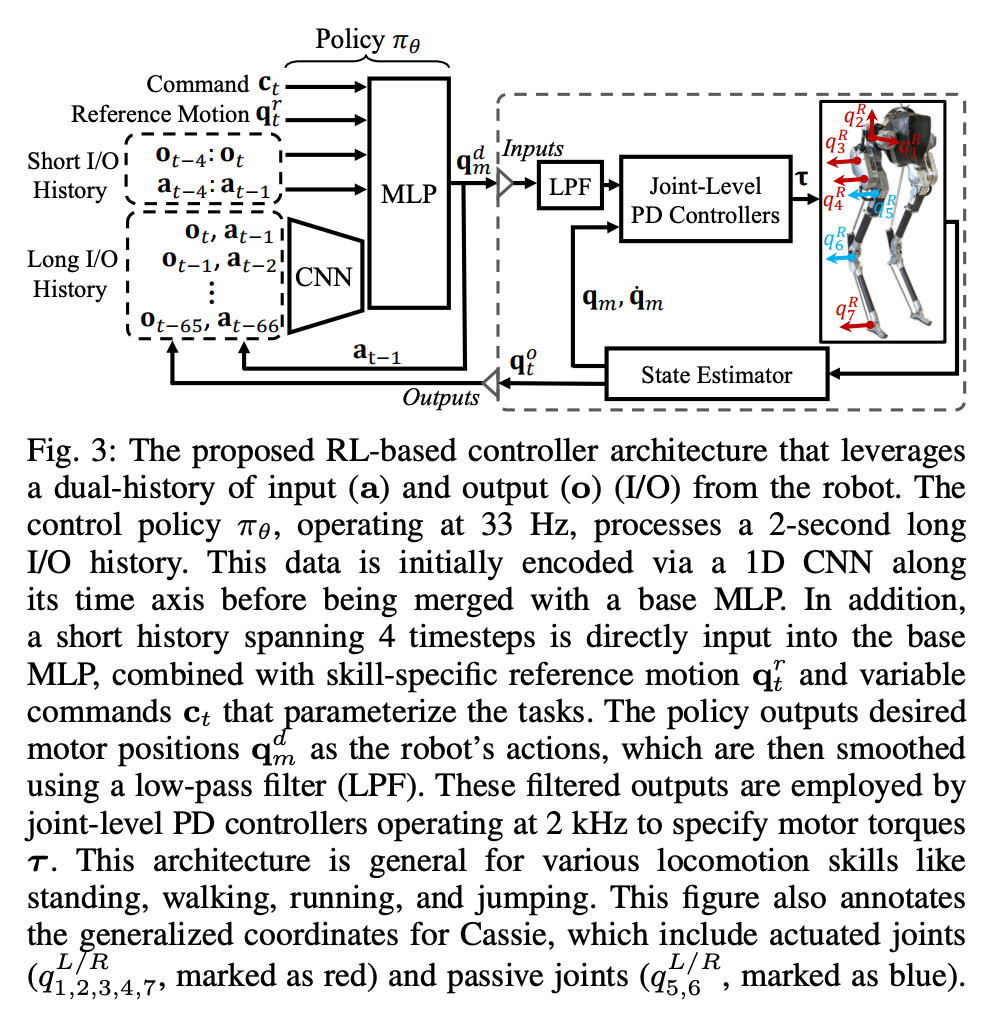

The control framework is shown below:

In this dual-history structure, the long-term history provides adaptability (validated in Section 8), while the short-term history complements the long-term history by enabling better real-time control (validated in Section 7).

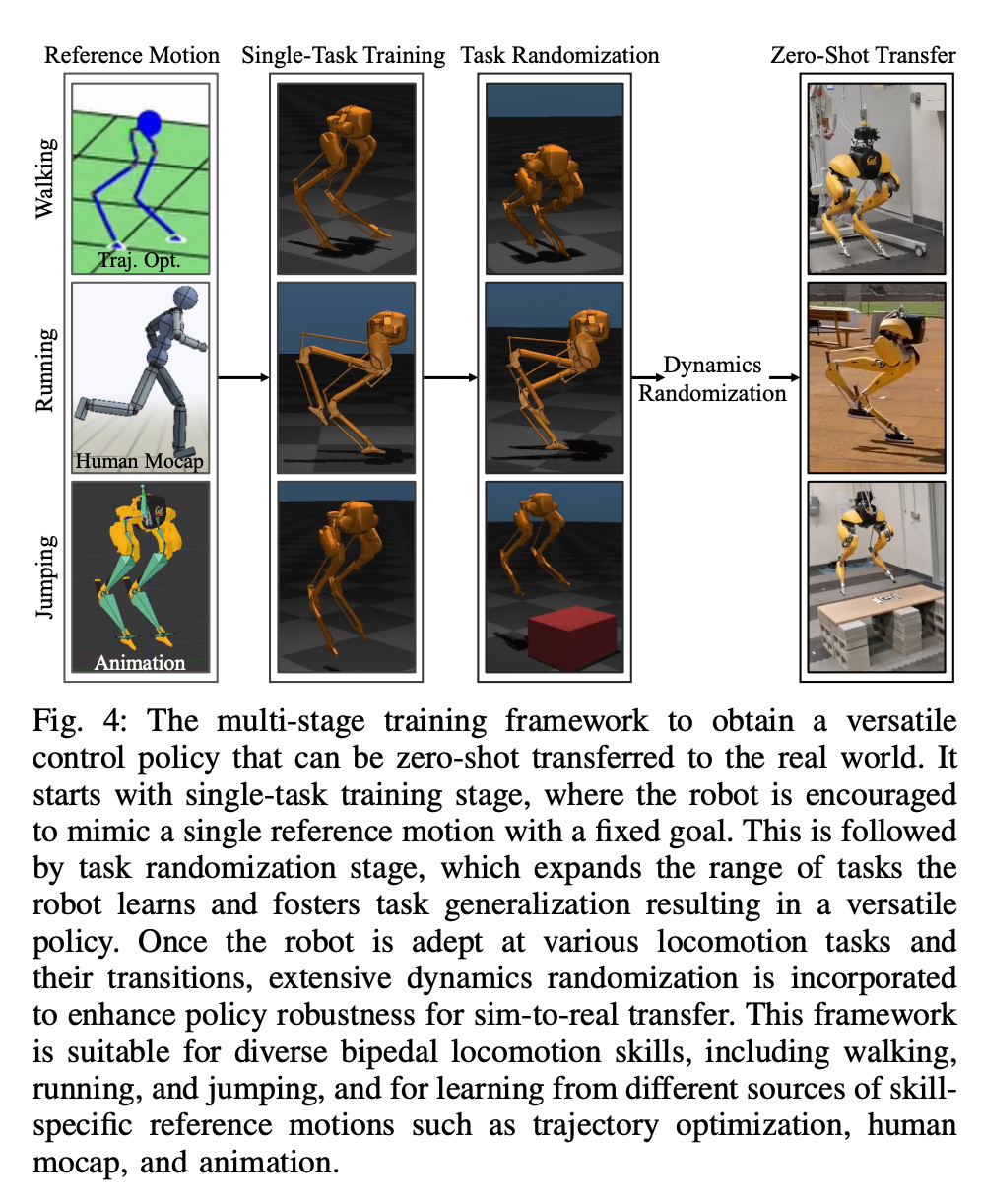
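To make the dual-history idea concrete, here is a minimal sketch of a buffer that keeps past input/output pairs and exposes a long window and a short window over them. It is not the paper's implementation: the class name `DualHistoryBuffer`, the window lengths, and the idea that the long view feeds a temporal encoder while the short view feeds the policy directly are all assumptions made for illustration.

```python
from collections import deque

import numpy as np


class DualHistoryBuffer:
    """Stores past (observation, action) pairs and exposes two views of them."""

    def __init__(self, obs_dim, act_dim, long_len=60, short_len=4):
        # long_len and short_len are illustrative values, not the paper's settings.
        if not 0 < short_len <= long_len:
            raise ValueError("need 0 < short_len <= long_len")
        self.short_len = short_len
        io_dim = obs_dim + act_dim
        # Pre-fill with zeros so both views have a fixed shape from the first step.
        self._frames = deque(
            (np.zeros(io_dim) for _ in range(long_len)), maxlen=long_len
        )

    def push(self, obs, action):
        """Append the newest I/O pair; the oldest one falls out automatically."""
        self._frames.append(np.concatenate([obs, action]))

    def long_history(self):
        """All stored frames, oldest first: shape (long_len, io_dim)."""
        return np.stack(list(self._frames))

    def short_history(self):
        """Only the most recent frames: shape (short_len, io_dim)."""
        return np.stack(list(self._frames)[-self.short_len:])


buffer = DualHistoryBuffer(obs_dim=3, act_dim=2, long_len=5, short_len=2)
for step in range(7):
    buffer.push(obs=np.full(3, step), action=np.full(2, -step))

long_view = buffer.long_history()    # assumed input to a temporal encoder
short_view = buffer.short_history()  # assumed direct input to the policy
print(long_view.shape, short_view.shape)
```

Both views are read from the same underlying queue, so the short history is always the tail of the long one. That matches the article's description of the short-term history as a complement to the long-term history rather than a separate data source.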

Section 6 describes how control policies represented by deep neural networks can be optimized through model-free RL. Because the researchers aimed to develop a controller that can use highly dynamic locomotion skills to accomplish various tasks, training proceeds in multiple stages in simulation. This provides a structured curriculum: it starts with single-task training, in which the robot focuses on one fixed task; continues with task randomization, which diversifies the tasks the robot is trained on; and ends with dynamics randomization, which varies the robot's dynamics parameters.
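The three training stages can be sketched as a small schedule that decides, for each episode, whether the task and the dynamics are fixed or sampled. This is only an illustration of the idea: the stage boundaries, the parameter names (`target_speed`, `mass_scale`, `friction_scale`), and the sampling ranges are hypothetical and not taken from the paper. The sketch also assumes that task randomization stays on during the final stage.

```python
import random
from dataclasses import dataclass


@dataclass
class EpisodeConfig:
    target_speed: float    # commanded forward speed in m/s (the task)
    mass_scale: float      # multiplier on nominal link masses (the dynamics)
    friction_scale: float  # multiplier on nominal ground friction (the dynamics)


def stage_for_iteration(iteration, stage_ends=(1000, 3000)):
    """Map a training iteration to a stage name (boundaries are hypothetical)."""
    if iteration < stage_ends[0]:
        return "single_task"
    if iteration < stage_ends[1]:
        return "task_randomization"
    return "dynamics_randomization"


def sample_episode(stage, rng):
    """Draw one episode configuration for the given stage."""
    # Stage 1: one fixed task on nominal dynamics.
    speed, mass, friction = 1.0, 1.0, 1.0
    # Stage 2 onward: the commanded task is randomized.
    if stage in ("task_randomization", "dynamics_randomization"):
        speed = rng.uniform(-1.0, 1.5)
    # Stage 3: the simulated robot's dynamics parameters are randomized too.
    if stage == "dynamics_randomization":
        mass = rng.uniform(0.8, 1.2)
        friction = rng.uniform(0.5, 1.5)
    return EpisodeConfig(speed, mass, friction)


rng = random.Random(0)
for iteration in (0, 1500, 5000):
    stage = stage_for_iteration(iteration)
    print(iteration, stage, sample_episode(stage, rng))
```

In a real training loop, each sampled `EpisodeConfig` would be used to reset the simulator and set the command before an episode is collected.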

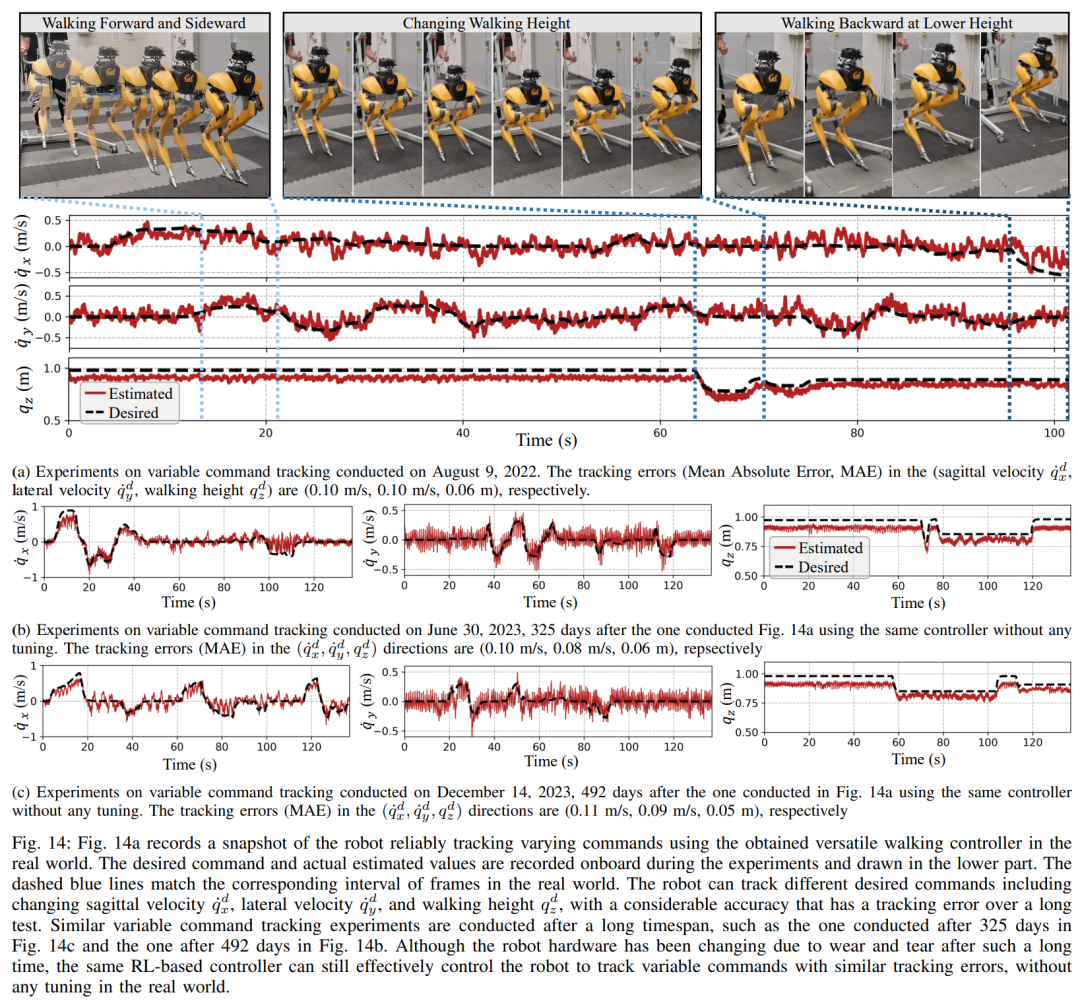

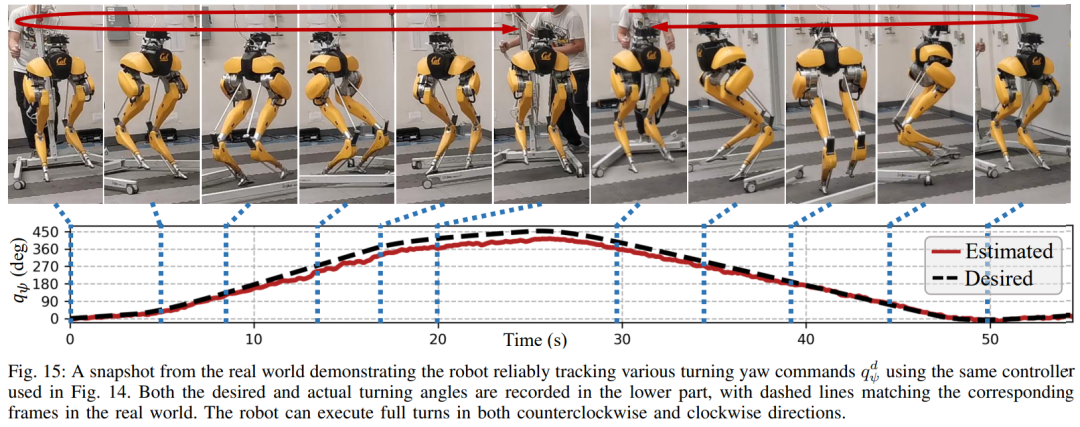

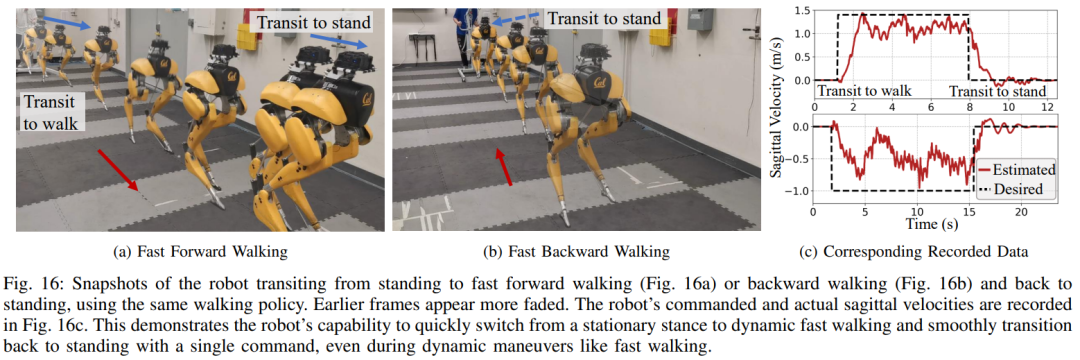

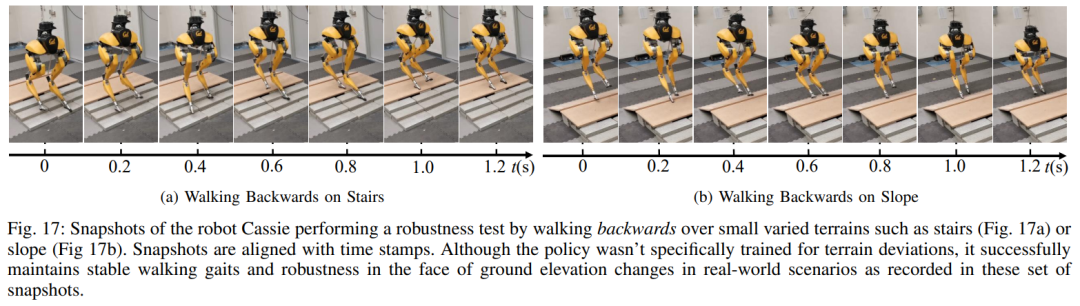

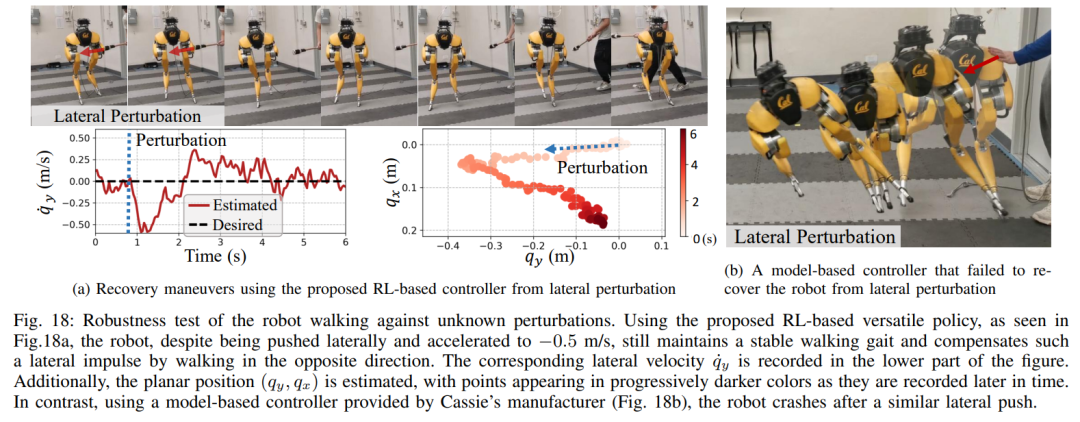

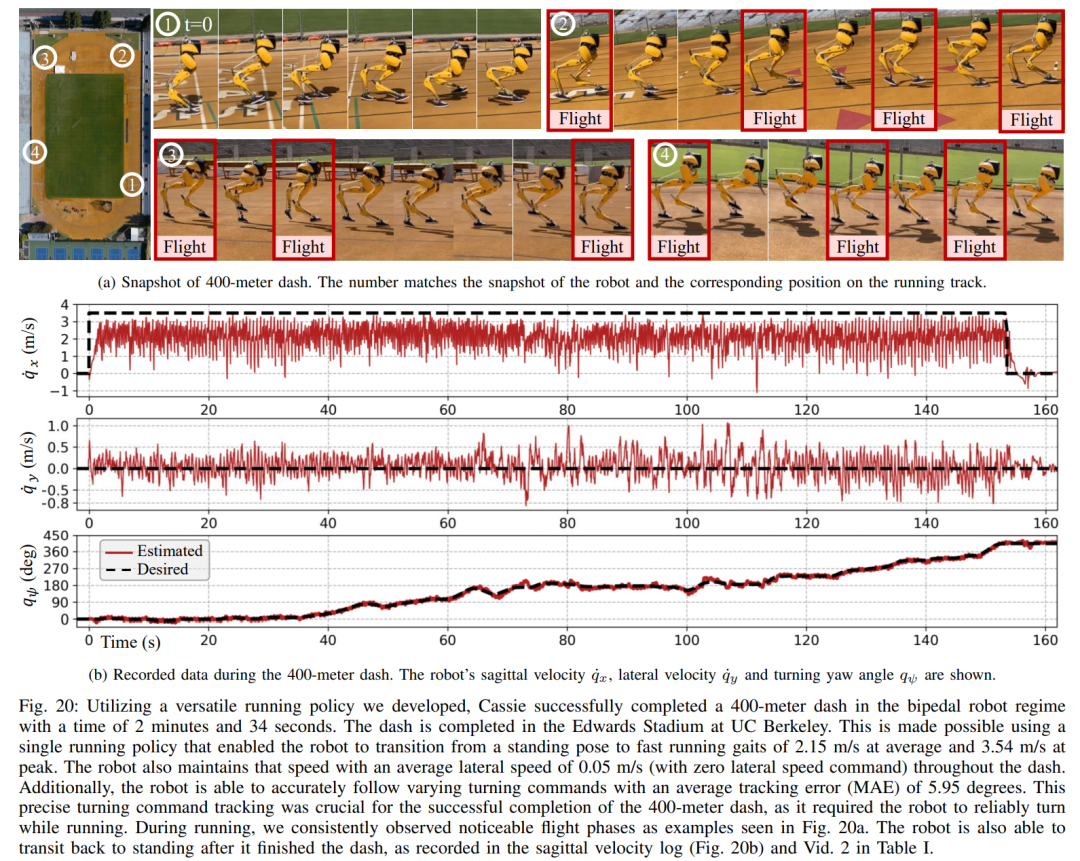

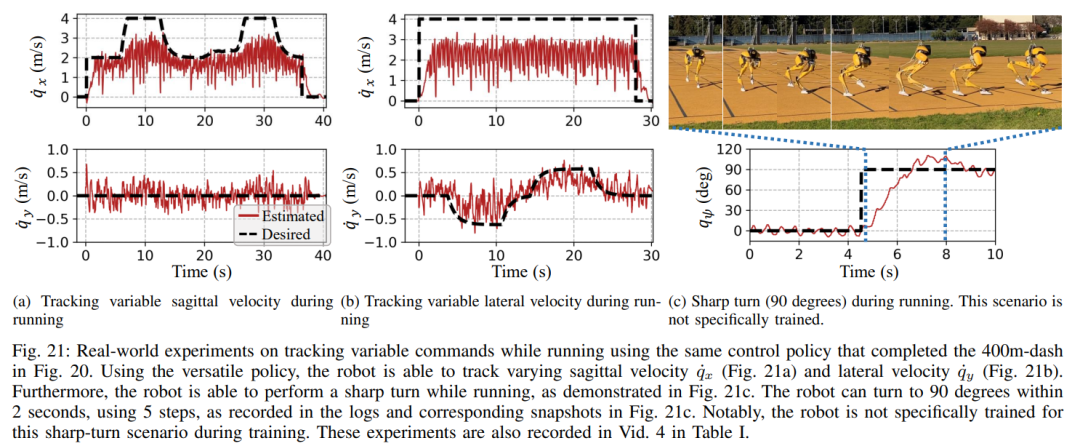

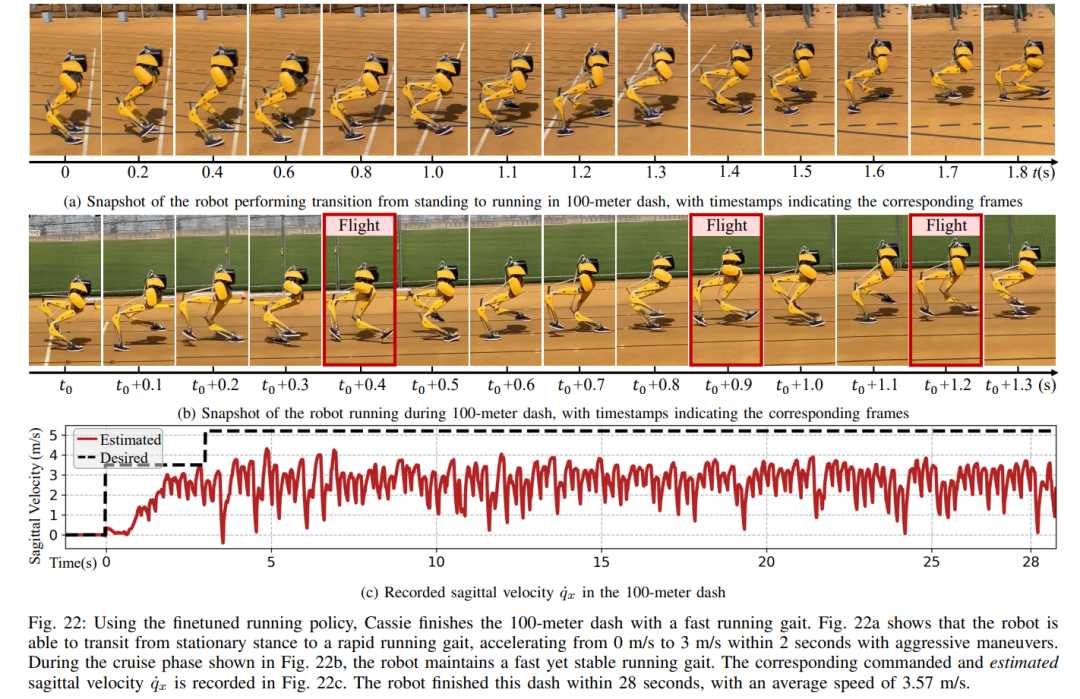

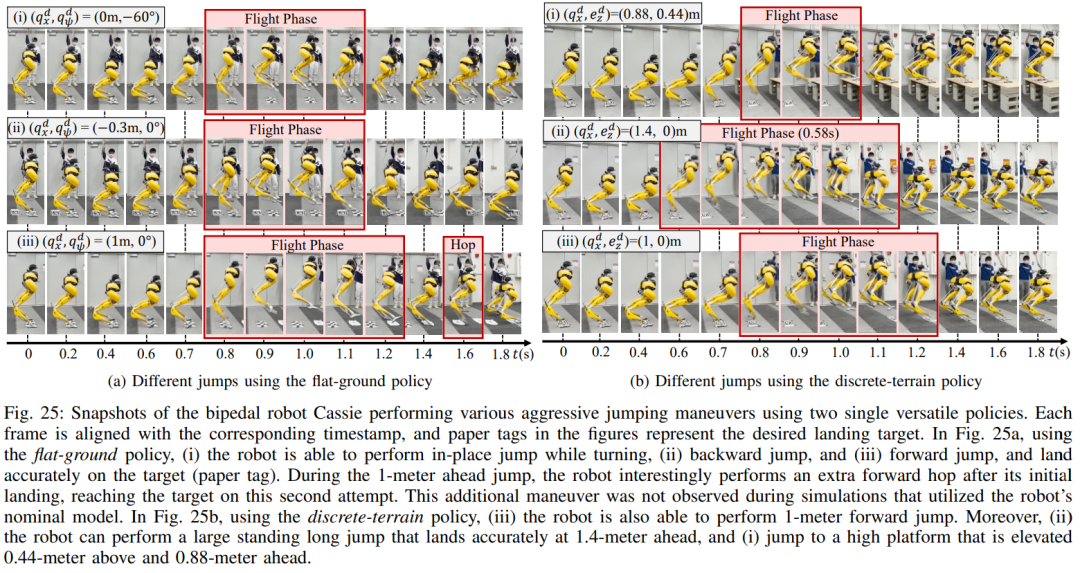

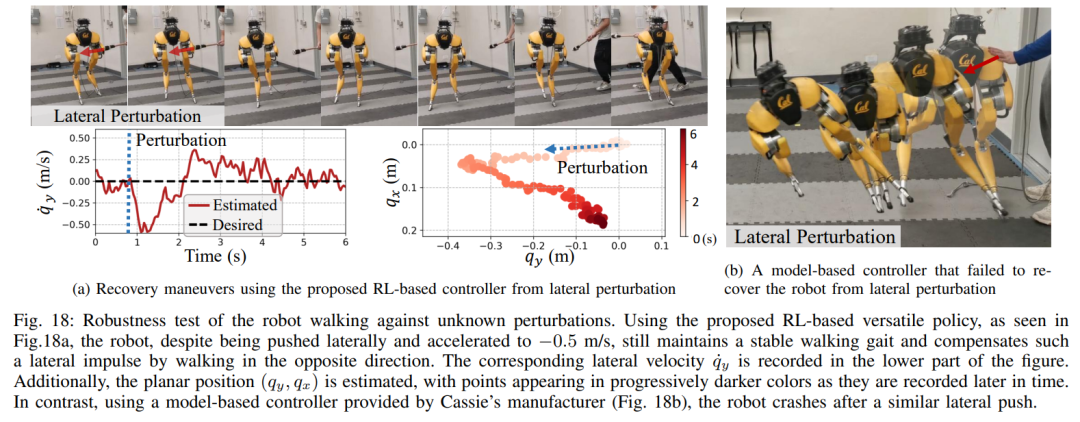

This training strategy yields a versatile control policy that can perform a variety of tasks and transfer zero-shot to the robot hardware. Task randomization also enhances the robustness of the resulting policy by generalizing across different learning tasks. The research shows that this robustness lets the robot behave compliantly under disturbances and is "orthogonal" to the robustness brought by dynamics randomization, which is verified in Section 9.

Using this framework, the researchers obtained versatile policies for the walking, running, and jumping skills of the bipedal robot Cassie. Section 10 evaluates the effectiveness of these control policies in the real world.

The researchers conducted extensive experiments on the robot, testing walking, running, and jumping in the real world. All of the policies controlled the real robot effectively after training in simulation, without further tuning.

As shown in Figure 14a, the walking policy tracks different commands effectively, with a low tracking error throughout the test (tracking error is measured as the mean absolute error, MAE). The policy also performs consistently over long periods: it can still track variable commands after 325 and 492 days, as shown in Figures 14b and 14c, respectively. Despite significant cumulative changes in the robot's dynamics over this period, the same controller as in Figure 14a continues to handle different walking tasks with minimal degradation in tracking error.

As shown in Figure 15, the policy controls the robot reliably, allowing it to accurately track different turning commands, clockwise or counterclockwise.

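The walking results above report tracking error as a mean absolute error (MAE). As a reminder of what that metric computes, here is a minimal sketch; the function name and the sample speed values are made up for illustration and are not data from the paper.

```python
import numpy as np


def mean_absolute_error(commanded, measured):
    """Average absolute gap between commanded and measured values."""
    commanded = np.asarray(commanded, dtype=float)
    measured = np.asarray(measured, dtype=float)
    if commanded.shape != measured.shape:
        raise ValueError("commanded and measured must have the same shape")
    return float(np.mean(np.abs(commanded - measured)))


# Hypothetical forward-speed samples in m/s (not measurements from the paper).
commanded_speed = [1.0, 1.0, 0.5, 0.0]
measured_speed = [0.9, 1.1, 0.5, 0.2]

mae = mean_absolute_error(commanded_speed, measured_speed)
print(f"MAE = {mae:.2f} m/s")
```

Because MAE averages absolute differences, it is expressed in the same unit as the tracked quantity, which makes it easy to compare against the commanded value.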
In addition to moderate walking speeds, the experiments show that the policy can make the robot walk fast both forward and backward, as shown in Figure 16. The robot can go from standing to fast forward walking, reaching an average speed of 1.14 m/s (the tracking command asked for 1.4 m/s). It can also quickly return to a standing posture on command, as shown in Figure 16a, with the data recorded in Figure 16c. On uneven terrain that it was not trained on, the robot can also walk backward effectively on stairs or downhill slopes.

Disturbance rejection: For impulse disturbances, the researchers applied short external perturbations to the robot from all directions while it was walking. As recorded in Figure 18a, a considerable lateral perturbation force was applied to the robot while it walked in place, producing a peak lateral velocity of 0.5 m/s. The robot recovered quickly from the lateral deviation: as Figure 18a shows, it moved in the opposite lateral direction, compensating for the perturbation and restoring a stable walking-in-place gait.

In the continuous disturbance test, a person applied force to the robot's base and dragged it in random directions while it was commanded to walk in place. As shown in Figure 19a, a continuous lateral drag force is exerted on Cassie's base while the robot walks normally. The robot complies with these external forces by following their direction, without losing its balance. This also shows the potential of the RL-based policy proposed in the paper for applications such as safe human-robot interaction with bipedal robots.

With the bipedal running policy, the robot completed a 400-meter dash in 2 minutes 34 seconds and a 100-meter dash in 27.06 seconds, ran on inclines of up to 10°, and more.

400-meter dash: The study first evaluated the general running policy on a 400-meter dash on a standard outdoor track, as shown in Figure 20. Throughout the test, the robot was commanded to run at 3.5 m/s while responding to different turning commands issued by the operator. The robot transitions smoothly from a standing posture to a running gait (Fig. 20a 1). It accelerated to an average estimated running speed of 2.15 m/s, with a peak estimated speed of 3.54 m/s, as shown in Figure 20b. The policy let the robot maintain the desired speed throughout the 400-meter run while accurately following the turning commands. Under the proposed running policy, Cassie completed the 400-meter dash in 2 minutes 34 seconds and was then able to transition back to standing.

The study also ran a sharp-turn test in which the robot was given a step change in the yaw command, directly from 0 degrees to 90 degrees, as recorded in Figure 21c. The robot responds to such a step command and completes a sharp 90-degree turn in 2 seconds and 5 steps.

100-meter dash: As shown in Figure 22, with the proposed running policy the robot completed the 100-meter dash in approximately 28 seconds, with a fastest time of 27.06 seconds.

Jumping: Through experiments, the researchers found it difficult to train the robot to jump onto an elevated platform while turning at the same time. Even so, the proposed jumping policy achieves a variety of bipedal jumps, including a 1.4-meter jump and a jump onto a 0.44-meter elevated platform.

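The dash times quoted above can be turned into average speeds with simple distance-over-time arithmetic. The sketch below does only that; the helper name `average_speed` is made up for illustration. These track averages need not match the onboard estimated speeds reported in the paper's figures.

```python
def average_speed(distance_m, minutes=0, seconds=0.0):
    """Average speed in m/s for a distance covered in the given time."""
    total_seconds = 60 * minutes + seconds
    if total_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return distance_m / total_seconds


# Times as reported in the article.
speed_400 = average_speed(400, minutes=2, seconds=34)  # 400 m in 154 s
speed_100 = average_speed(100, seconds=27.06)          # 100 m in 27.06 s

print(f"400 m dash: {speed_400:.2f} m/s")  # prints 2.60 m/s
print(f"100 m dash: {speed_100:.2f} m/s")  # prints 3.70 m/s
```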
Jumping and turning: As shown in Figure 25a, with a single jumping policy the robot can perform jumps to various given targets, such as jumping in place while rotating 60°, or jumping backward and landing 0.3 meters behind its starting point.

Jumping onto an elevated platform: As shown in Figure 25b, the robot can jump accurately to targets at different locations, such as 1 meter or 1.4 meters ahead. It can also jump to targets at different heights, including onto a platform 0.44 meters high (note that the robot itself is only 1.1 meters tall).

For more information, please refer to the original paper.
