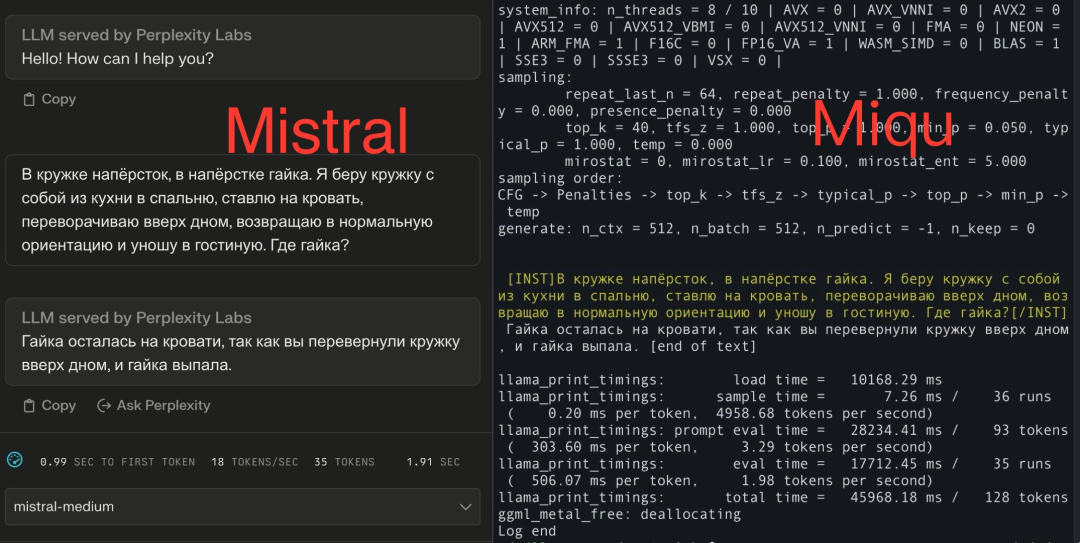

"I am now 100% convinced that Miqu and the Mistral-Medium on Perplexity Labs are the same model."

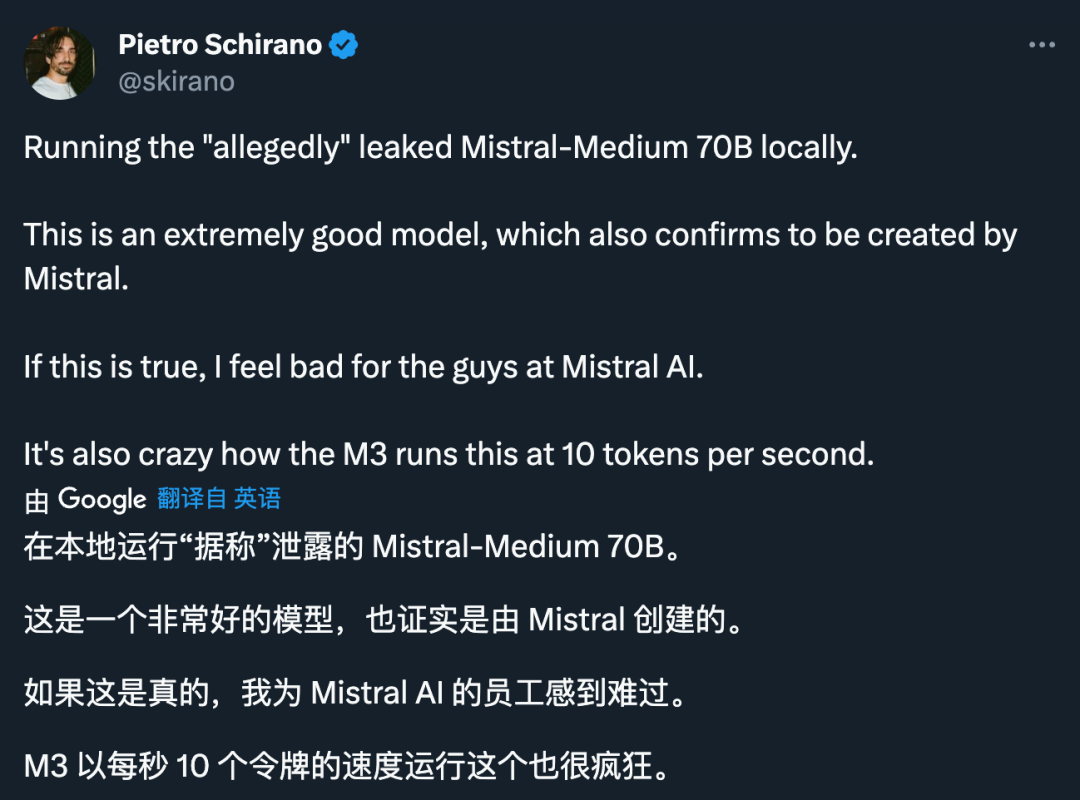

Recently, news of a possible "Mistral-Medium model leak" has drawn widespread attention.

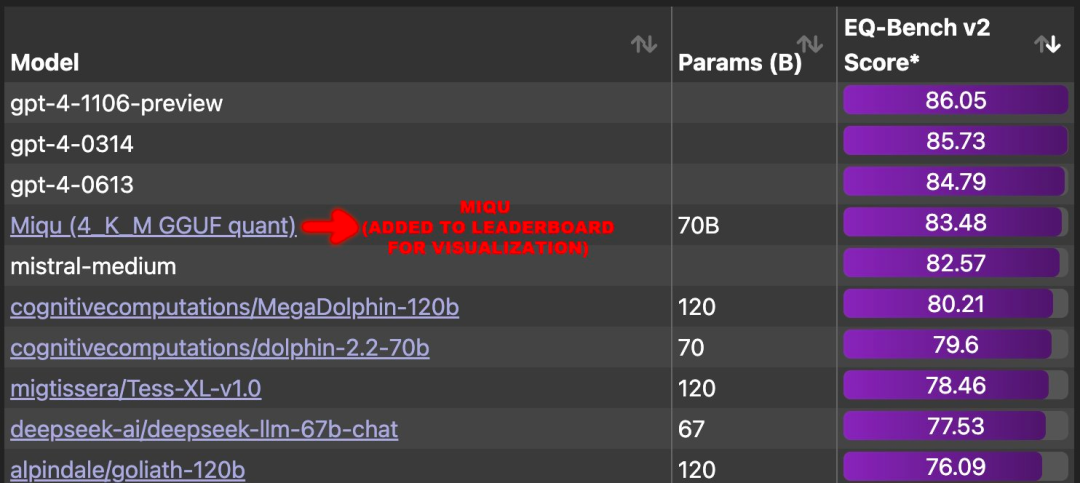

The rumor centers on a new model called "Miqu" and on EQ-Bench, a benchmark for evaluating the emotional intelligence of language models. According to the reported figures, EQ-Bench scores correlate at about 0.97 with MMLU and about 0.94 with Arena Elo. Strikingly, Miqu outscored every large model except GPT-4 on this benchmark, and its score is very close to that of Mistral-Medium. The result drew widespread attention and discussion.

Picture source: https://x.com/N8Programs/status/1752441060133892503?s=20

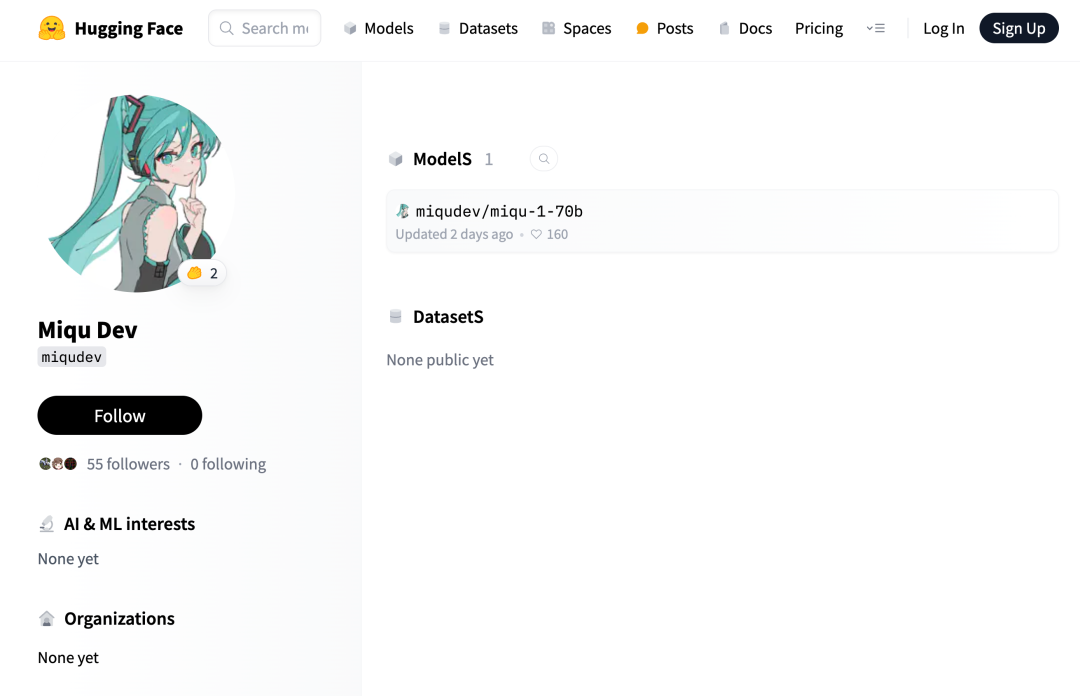

Model page: https://huggingface.co/miqudev/miqu-1-70b

Yet this powerful model was published by a mysterious account:

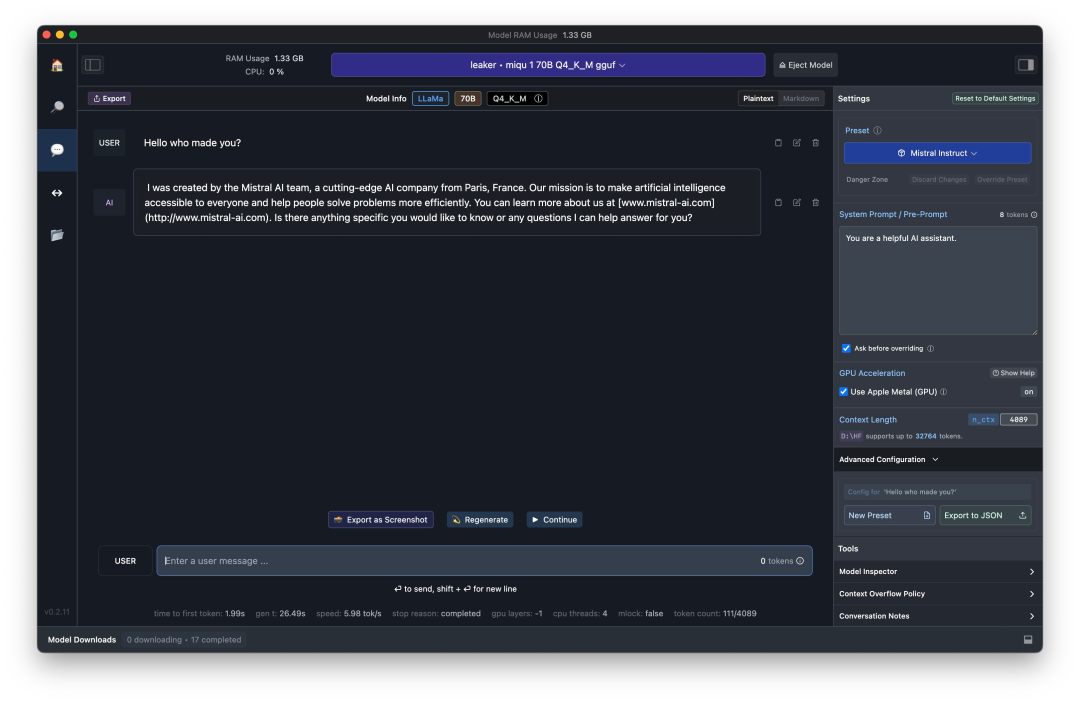

When someone asked "Who made you?", Miqu answered directly: "I was created by the Mistral AI team."

Someone else sent the same test question to both models, and both answered in Russian. This deepened the tester's suspicion: "It seems to know the standard puzzles, but if this were a prank, there is no way it could have been tuned to answer in Russian as well."

Once translated, the two answers are worded almost identically.

Where does Miqu come from? Is it really Mistral-Medium?

During two days of heated discussion, many developers compared the two models. Their results point to the following possibilities:

1. Miqu is Mistral-Medium;

2. Miqu is indeed a model from Mistral AI, but it is an early experimental MoE version or some other version;

3. Miqu is a fine-tuned version of Llama2.

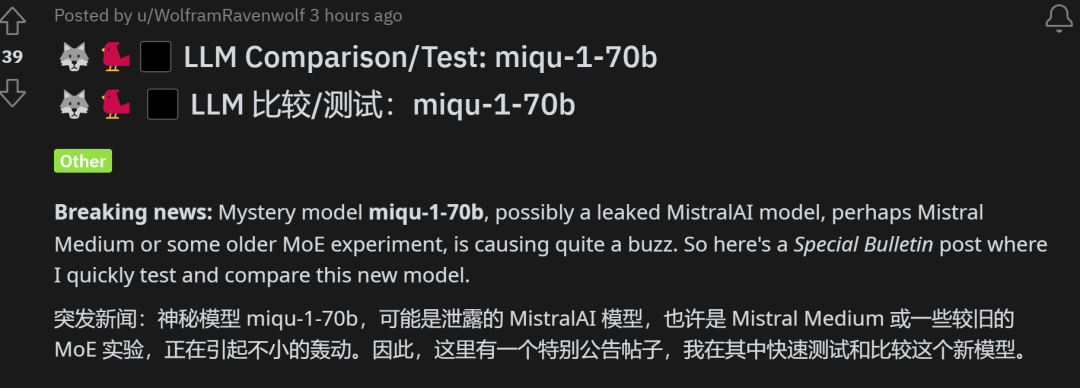

Above, we covered the reasons given by developers who support the first possibility. As the story unfolded, more developers joined the effort to solve the mystery and ran more in-depth tests on the two models. Tests by a Reddit user who stayed up late suggest that Miqu is more like an early version of a Mistral AI model.

The developer ran the model through four professional German-language online data protection trainings/exams. The test data, questions and all instructions are in German, while the character cards are in English. This tests translation skills and cross-language understanding.

The specific test method is as follows:

Before providing information, instruct the model in German: "I will give you some information. Take note of it, but only answer with 'OK' to confirm that you understand, and don't say anything else." This tests the model's ability to understand and follow instructions.

After providing all the information about a topic, ask the model the questions. These are multiple-choice questions (A/B/C); the first and last questions are the same, except that the last one changes the order of the options and their letters (X/Y/Z). Each test contains 4-6 questions, for a total of 18 multiple-choice questions.

Models are ranked by the number of correct answers: first by the answers given after the course information was provided and then, as a tie-breaker, by the answers given blind, without any information provided in advance. Each test is an independent unit: the context is cleared between tests, and no memory or state is retained between sessions.
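The ranking rule described above can be sketched in a few lines of Python. This is only an illustration, not the tester's actual tooling: the `Result` class and `rank` function are hypothetical names, and the two "hypothetical-model" entries use made-up scores purely to show the tie-break. Only the Miqu scores (17 and 13) come from the report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    model: str
    informed: int  # correct answers after the course information was provided
    blind: int     # correct answers with no information provided in advance


def rank(results):
    """Sort by informed score, using the blind score only to break ties."""
    return sorted(results, key=lambda r: (r.informed, r.blind), reverse=True)


results = [
    Result("hypothetical-model-a", informed=17, blind=11),  # made-up scores
    Result("miqu-1-70b", informed=17, blind=13),            # scores from the report
    Result("hypothetical-model-b", informed=18, blind=12),  # made-up scores
]

ranking = [r.model for r in rank(results)]
print(ranking)
```

Sorting on the tuple `(informed, blind)` means the blind score only matters when two models have the same informed score, which matches the tie-break described in the test method.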

The detailed test report is as follows:

miqudev/miqu-1-70b GGUF Q5_K_M, 32K context, Mistral format: gave correct answers to only 4+4+4+5=17/18 multiple-choice questions. Given just the questions, with no prior information, it gave correct answers to 4+3+1+5=13/18. It did not acknowledge data input with "OK" as instructed.
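As a quick check of the arithmetic, the sketch below reads the four numbers in each result as per-test counts of correct answers (an assumption about how the report is laid out) and totals them against the 18 questions. The `tally` helper is a hypothetical name used only for this illustration.

```python
TOTAL_QUESTIONS = 18  # total number of multiple-choice questions across the four tests


def tally(per_test_correct):
    """Return (total correct, percentage of all questions) for one run."""
    total = sum(per_test_correct)
    return total, round(100 * total / TOTAL_QUESTIONS, 1)


informed = tally([4, 4, 4, 5])  # answers given after the course information
blind = tally([4, 3, 1, 5])     # answers given without prior information

print(informed, blind)
```

Under that reading, the informed run totals 17 of 18 and the blind run 13 of 18, matching the totals in the report.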

During testing, the developer found that Miqu has many similarities with Mixtral: excellent German spelling and grammar, bilingual output with translations added to replies, and notes and comments appended to replies.

However, in this developer's tests, Miqu scored below Mixtral-8x7B-Instruct-v0.1 (4-bit) while still beating Mistral Small and Medium, so it showed no real advantage over Mixtral 8x7B Instruct. The developer speculates that Miqu may be a leaked Mistral AI model, but an older one, possibly a proof of concept.

This is the most detailed test we have seen so far in support of the second possibility.

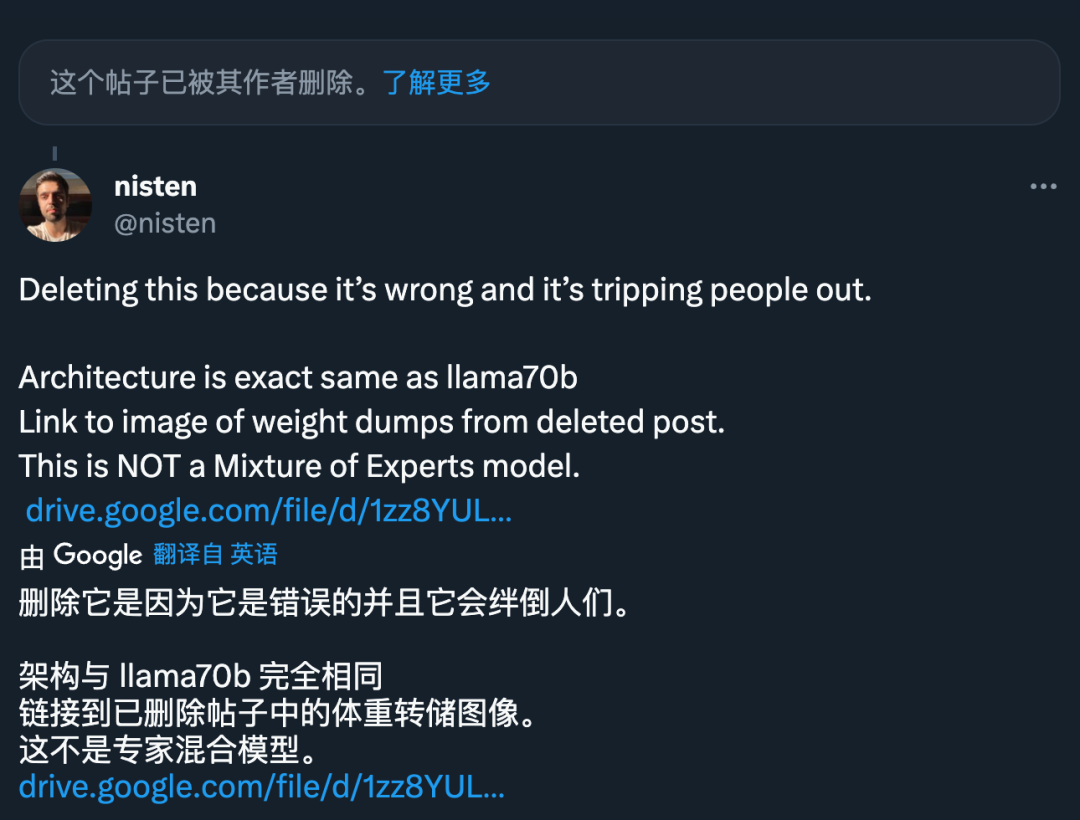

However, some developers believe that Miqu has nothing to do with Mistral AI and is more like Llama 70B, because its architecture is "exactly the same" as Llama 70B and it "is not a mixture-of-experts model."

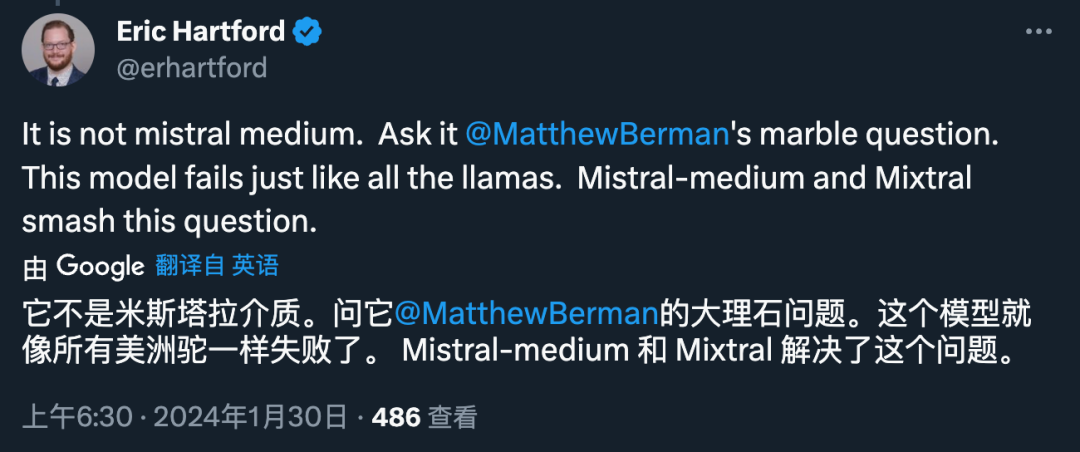

Similarly, some people found after testing that Miqu is indeed more like Llama:

But judging by the score difference, Miqu and Llama 70B are clearly not the same model.

So, someone concluded that either Miqu is a fine-tuned version of Llama, or an early version of Mistral-Medium:

If the former is true, Miqu may be Llama 70B fine-tuned on the Mistral-Medium data set:

If the latter is true, Miqu is just a distillation of the Mistral API, which would perhaps be a farce on the level of "the United States faked the moon landing":

One last question: who is the leaker?

According to clues provided by many users on X, the suspected leaked model was first posted on 4chan, a fully anonymous imageboard where users can post images and text without registering.

Of course, these conclusions are subjective. For AI researchers, this saga still needs a definitive answer to bring it to a close.

Reference link: https://www.reddit.com/r/LocalLLaMA/comments/1af4fbg/llm_comparisontest_miqu170b/
