Adversarial learning is a machine learning technique that improves the robustness of a model by training it on adversarial examples. Adversarial examples are challenging samples deliberately constructed to make the model produce wrong predictions. Training on them helps the trained model adapt to changes in real-world data, thereby improving the stability of its performance.

Attacks on machine learning models can be divided into two categories: white-box attacks and black-box attacks. In a white-box attack, the attacker can access the structure and parameters of the model to carry out the attack. In a black-box attack, the attacker cannot access this information and can typically only observe the model's outputs. Common adversarial attack methods include the fast gradient sign method (FGSM), the basic iterative method (BIM), and the Jacobian-based saliency map attack (JSMA).

Adversarial learning plays an important role in improving model robustness. Training on adversarial examples can help the model generalize better to perturbed or shifted inputs. In addition, generating adversarial examples exposes model weaknesses and provides guidance for improving the model. Adversarial learning is therefore a useful part of model training and optimization.

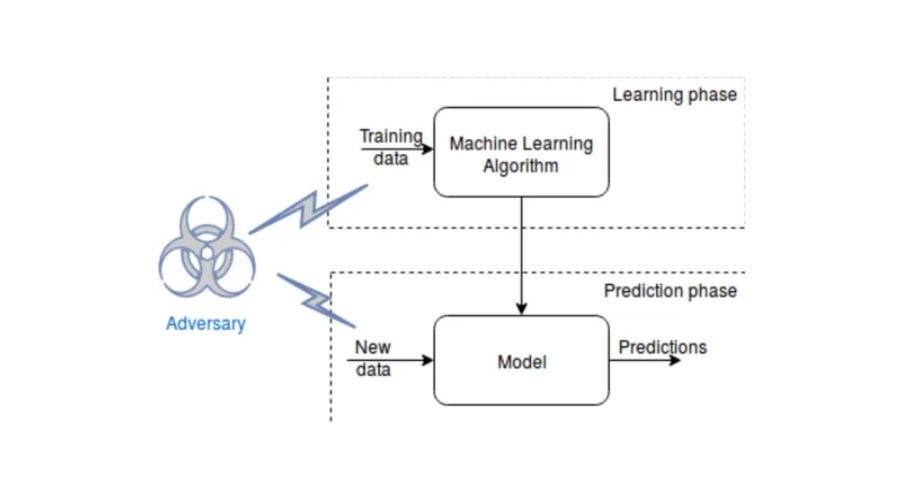

Incorporating adversarial learning into machine learning models requires two steps: generating adversarial examples and incorporating these examples into the training process.

There are many ways to generate adversarial examples, including gradient-based methods, genetic algorithms, and reinforcement learning. Among them, gradient-based methods are the most commonly used. These methods compute the gradient of the loss function with respect to the input and then adjust the input in the direction of that gradient to increase the loss.
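To make the gradient-based idea concrete, here is a minimal sketch of a single FGSM step in plain Python. It assumes a toy logistic-regression model; the weights `w`, bias `b`, input `x`, and step size `epsilon` are illustrative values chosen for this example, not anything taken from a real system.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def loss(w, b, x, y):
    """Binary cross-entropy for one example with label y in {0, 1}."""
    p = predict_proba(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def input_gradient(w, b, x, y):
    """Gradient of the loss with respect to the input x (not the weights)."""
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, epsilon):
    """One FGSM step: shift each feature by epsilon in the direction that raises the loss."""
    grad = input_gradient(w, b, x, y)
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical toy model and a correctly classified input (assumed values).
w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1
x_adv = fgsm(w, b, x, y, epsilon=0.5)

print("clean prediction:", predict_proba(w, b, x))
print("adversarial prediction:", predict_proba(w, b, x_adv))
```

In this toy setting the perturbed input receives a higher loss than the original and falls on the other side of the decision boundary. For a deep network, the input gradient would normally come from an automatic differentiation library rather than a hand-written formula.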

Adversarial examples can be incorporated into the training process through adversarial training or adversarial augmentation. In adversarial training, adversarial examples are generated during training and used to update the model parameters. In adversarial augmentation, adversarial examples are added to the training data, and the model is then trained on the enlarged data set.
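The adversarial training loop can be sketched as follows, again in plain Python. This is one simple variant under stated assumptions: a logistic-regression model, FGSM as the attack, and one gradient step each on the clean and the perturbed input. The function names, the toy data, and the hyperparameters `epsilon`, `lr`, and `epochs` are hypothetical choices for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def score(w, b, x):
    """Linear score; a positive value means the model predicts class 1."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm(w, b, x, y, epsilon):
    """Perturb x by epsilon per feature in the direction that raises the loss."""
    p = sigmoid(score(w, b, x))
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, epsilon=0.2, lr=0.5, epochs=200):
    """Train logistic regression on clean inputs plus freshly crafted FGSM inputs."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            # Craft the adversarial example against the current parameters.
            x_adv = fgsm(w, b, x, y, epsilon)
            # One gradient step on the clean input, then one on the adversarial input.
            for xs in (x, x_adv):
                err = sigmoid(score(w, b, xs)) - y
                w = [wi - lr * err * xi for wi, xi in zip(w, xs)]
                b -= lr * err
    return w, b

# Assumed toy data: two linearly separable clusters.
data = [([1.0, 1.0], 1), ([1.5, 0.5], 1), ([-1.0, -1.0], 0), ([-0.5, -1.5], 0)]
w, b = adversarial_train(data)
```

Because the adversarial examples are regenerated at every step, they track the current model rather than a fixed snapshot of it, which is the main difference from adding a precomputed set of adversarial examples to the data.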

Adversarial data augmentation is a simple and effective method that is widely used in practice to improve model performance. The basic idea is to add adversarial examples to the training data and then train the model on the augmented data. The goal is a model that predicts the correct class labels for both the original and the adversarial examples, which makes it more robust to changes and distortions in the data.
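As a rough illustration of the augmentation step, the sketch below builds an augmented data set by appending one FGSM-perturbed copy of each example, keeping the original label. It assumes a fixed, already trained logistic-regression model; the helper names and the values of `w`, `b`, and `epsilon` are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_example(w, b, x, y, epsilon):
    """FGSM perturbation of x against a fixed logistic-regression model."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]

def augment_with_adversarial(data, w, b, epsilon):
    """Return the original examples followed by one adversarial copy of each."""
    augmented = list(data)
    for x, y in data:
        # The adversarial copy keeps the true label of the original example.
        augmented.append((fgsm_example(w, b, x, y, epsilon), y))
    return augmented

# Assumed model parameters and toy data, for illustration only.
w, b = [1.0, -2.0], 0.1
data = [([0.2, 0.4], 0), ([0.9, 0.1], 1)]
augmented = augment_with_adversarial(data, w, b, epsilon=0.1)

for features, label in augmented:
    print(features, label)
```

The augmented list can then be passed to whatever training routine the model already uses, with no change to the training code itself.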

Adversarial learning has been applied to a variety of machine learning tasks, including computer vision, speech recognition, and natural language processing.

In computer vision, adversarial learning is used to improve the robustness of image classification models. Adversarially training convolutional neural networks (CNNs) can improve their accuracy on unseen data.

In speech recognition, adversarial learning helps improve the robustness of automatic speech recognition (ASR) systems. Adversarial examples in this setting alter the input speech signal in a way that is designed to be imperceptible to humans but causes the ASR system to mistranscribe it. Research shows that adversarial training can improve the robustness of ASR systems to these adversarial examples, thereby improving recognition accuracy and reliability.

In natural language processing, adversarial learning has been used to improve the robustness of sentiment analysis models. Adversarial examples in this field manipulate the input text in a way that leads the model to make incorrect predictions. Adversarial training has been shown to make sentiment analysis models more robust to these types of adversarial examples, resulting in improved accuracy.
