SqueezeNet is a compact convolutional neural network architecture that balances high accuracy against low model complexity, making it well suited to mobile and embedded systems with limited resources.

In 2016, researchers from DeepScale, the University of California, Berkeley, and Stanford University proposed SqueezeNet, a compact and efficient convolutional neural network (CNN). The architecture has since been revised: SqueezeNet v1.1 requires about 2.4 times less computation than v1.0 and has slightly fewer parameters, without sacrificing accuracy on the ImageNet dataset. Some sources also mention a SqueezeNet v2.0 and quote accuracy gains of 1.4% for v1.1 and 1.8% for v2.0, but these figures do not match the official v1.1 release and should be treated with caution.

Because of its compact design, SqueezeNet has clear advantages in scenarios with limited computing resources, such as edge devices and embedded systems, and it offers a practical basis for efficient image classification and object detection.

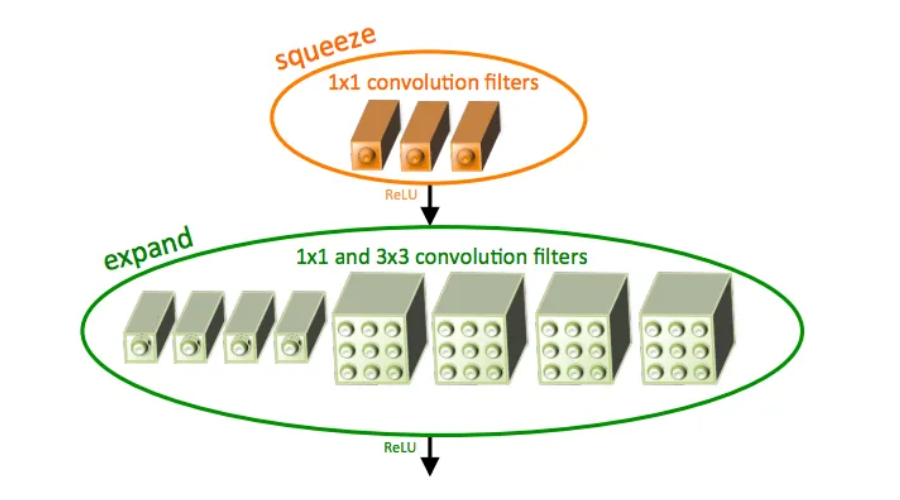

SqueezeNet is built from Fire modules. A Fire module is a small block of convolutional layers in which a squeeze layer of 1x1 filters feeds an expand layer that mixes 1x1 and 3x3 filters. This design sharply reduces the number of parameters while maintaining accuracy, which makes the network well suited to resource-limited devices. It achieves accurate results with only a fraction of the parameters required by many larger CNNs.
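To make the parameter saving concrete, here is a minimal sketch that counts the weights and biases of one Fire module and compares them with a plain 3x3 convolution producing the same number of output channels. The class and function names are illustrative, and the sizes (96 input channels, 16 squeeze filters, 64 + 64 expand filters) are an assumption chosen to resemble the first Fire module of SqueezeNet v1.0.

```python
from dataclasses import dataclass


def conv_params(c_in: int, c_out: int, kernel: int) -> int:
    """Weights plus biases of a standard 2D convolution."""
    return c_in * c_out * kernel * kernel + c_out


@dataclass(frozen=True)
class FireModule:
    """Hypothetical helper that only tracks layer sizes, not actual weights."""
    c_in: int       # channels entering the module
    squeeze: int    # number of 1x1 filters in the squeeze layer
    expand1x1: int  # number of 1x1 filters in the expand layer
    expand3x3: int  # number of 3x3 filters in the expand layer

    @property
    def c_out(self) -> int:
        # The two expand branches are concatenated along the channel axis.
        return self.expand1x1 + self.expand3x3

    def params(self) -> int:
        return (
            conv_params(self.c_in, self.squeeze, 1)
            + conv_params(self.squeeze, self.expand1x1, 1)
            + conv_params(self.squeeze, self.expand3x3, 3)
        )


# Assumed sizes, resembling the first Fire module of SqueezeNet v1.0.
fire = FireModule(c_in=96, squeeze=16, expand1x1=64, expand3x3=64)
plain = conv_params(fire.c_in, fire.c_out, 3)  # one 3x3 conv, 96 -> 128 channels

print(fire.params())                     # 11920
print(plain)                             # 110720
print(round(plain / fire.params(), 1))   # 9.3
```

Under these assumptions the Fire module needs roughly nine times fewer parameters than the plain 3x3 layer, mostly because the 3x3 filters see only 16 channels instead of 96.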

A major advantage of SqueezeNet is the balance it strikes between accuracy and model size. Compared with AlexNet, SqueezeNet has about 50 times fewer parameters at a comparable level of ImageNet accuracy, and with Deep Compression the stored model shrinks to less than 0.5 MB. The saving in floating-point operations (FLOPs) is more modest: SqueezeNet v1.0 needs roughly as much computation per image as AlexNet, and v1.1 needs about 2.4 times less than v1.0. The small memory footprint is what allows the network to run on edge devices with limited resources, such as mobile phones and IoT devices, and it makes SqueezeNet a good fit for deep learning in resource-constrained environments.
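As a rough illustration of what a 50-fold parameter reduction means for storage, the sketch below converts parameter counts into uncompressed 32-bit model sizes. The two parameter counts are rounded, approximate values assumed from commonly reported implementations, not figures taken from this article.

```python
BYTES_PER_FLOAT32 = 4

# Approximate parameter counts (assumptions, rounded).
ALEXNET_PARAMS = 61_100_000
SQUEEZENET_V1_0_PARAMS = 1_250_000


def model_size_mb(n_params: int, bytes_per_param: int = BYTES_PER_FLOAT32) -> float:
    """Uncompressed size in megabytes (1 MB = 10**6 bytes)."""
    return n_params * bytes_per_param / 1e6


alexnet_mb = model_size_mb(ALEXNET_PARAMS)
squeezenet_mb = model_size_mb(SQUEEZENET_V1_0_PARAMS)
ratio = ALEXNET_PARAMS / SQUEEZENET_V1_0_PARAMS

print(f"AlexNet:    {alexnet_mb:.1f} MB")     # AlexNet:    244.4 MB
print(f"SqueezeNet: {squeezenet_mb:.1f} MB")  # SqueezeNet: 5.0 MB
print(f"Ratio:      {ratio:.1f}x")            # Ratio:      48.9x
```

These are sizes before any compression; pruning and quantization can reduce the stored SqueezeNet model further.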

One of SqueezeNet's main ideas is channel squeezing. The squeeze layer of each Fire module uses 1x1 filters to reduce the number of channels that reach the more expensive 3x3 filters. Because the cost of a 3x3 convolution grows with the number of input channels, this lowers both the parameter count and the amount of computation while preserving accuracy. The SqueezeNet authors additionally apply Deep Compression, a separate technique based on pruning and quantization, to shrink the stored model further. Together, these techniques make SqueezeNet a lightweight and efficient neural network model.
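The effect of squeezing channels on computation can be estimated with a simple multiply-accumulate (MAC) count. The following sketch compares a direct 3x3 convolution with a 1x1 squeeze followed by the same 3x3 convolution on fewer channels. The feature-map size (55x55) and channel counts are hypothetical values picked for illustration, and the helper assumes stride 1 with padding that preserves the spatial size.

```python
def conv_macs(height: int, width: int, c_in: int, c_out: int, kernel: int) -> int:
    """Multiply-accumulates of a stride-1 convolution that keeps the spatial size."""
    return height * width * c_in * c_out * kernel * kernel


# Hypothetical layer sizes, for illustration only.
H = W = 55
C_IN, C_OUT, SQUEEZED = 96, 64, 16

# 3x3 filters applied directly to all 96 input channels.
direct = conv_macs(H, W, C_IN, C_OUT, 3)

# 1x1 squeeze down to 16 channels, then 3x3 filters on the squeezed tensor.
squeezed = conv_macs(H, W, C_IN, SQUEEZED, 1) + conv_macs(H, W, SQUEEZED, C_OUT, 3)

print(direct)                       # 167270400
print(squeezed)                     # 32524800
print(round(direct / squeezed, 1))  # 5.1
```

Even after paying for the extra 1x1 layer, the squeezed path in this example needs about five times fewer multiply-accumulates than the direct 3x3 convolution.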

Unlike many traditional CNNs, SqueezeNet does not require a large memory or storage budget, and it can be used as a feature extractor in other machine learning pipelines. Other models can then build on the features learned by SqueezeNet, which helps them perform better on mobile devices.

SqueezeNet is recognized for its architectural innovation and its demonstrated efficiency, and its design ideas have been taken up by other compact CNN architectures.
