The Transformer's position in the field of large models has seemed unshakable. However, as model scale and sequence length grow, the limitations of the traditional Transformer architecture are becoming apparent. The advent of Mamba is quickly changing this situation: its strong performance caused an immediate sensation in the AI community and raises hopes for large-scale model training and long-sequence processing in future research and applications.

Last Thursday, Vision Mamba (Vim) was introduced and demonstrated great potential to become the next-generation backbone for vision foundation models. Just one day later, researchers from the Chinese Academy of Sciences, Huawei, and Pengcheng Laboratory proposed VMamba: a visual Mamba model with a global receptive field and linear complexity. This work marks the "Swin moment" of visual Mamba models.

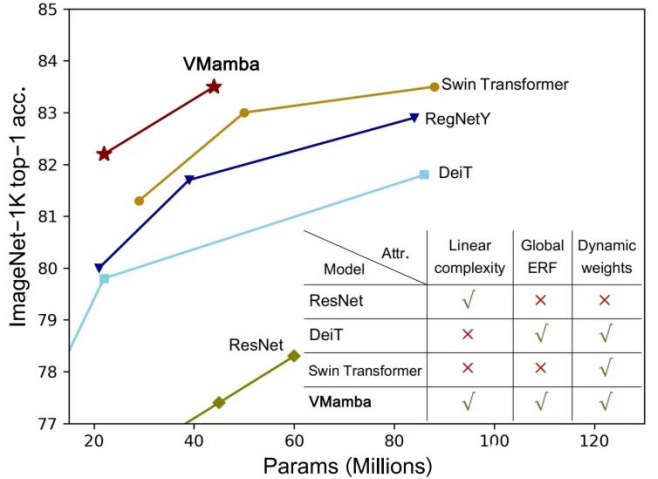

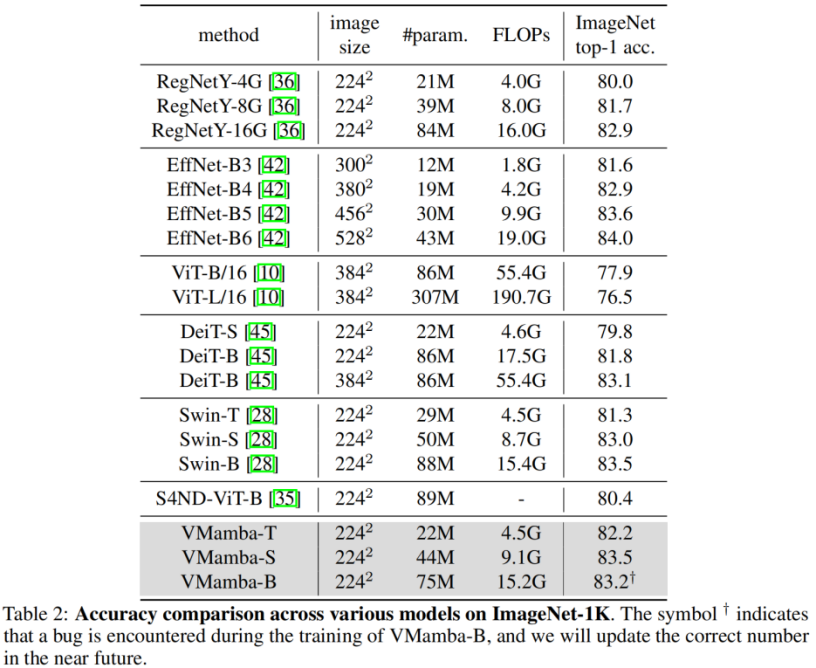

CNNs and Vision Transformers (ViTs) are currently the two most mainstream vision foundation models. CNNs have linear complexity, while ViTs have stronger data-fitting capability at the cost of higher computational complexity. The researchers attribute ViT's strong fitting ability to its global receptive field and dynamic weights. Inspired by the Mamba model, they designed a model that has both of these properties at linear complexity, namely the Visual State Space Model (VMamba). Extensive experiments show that VMamba performs well across a range of visual tasks. As shown in the figure below, VMamba-S achieves 83.5% accuracy on ImageNet-1K, which is 3.2 percentage points higher than Vim-S and 0.5 percentage points higher than Swin-S.

The key to VMamba's success lies in its use of the S6 model, which was originally designed for natural language processing (NLP) tasks. Unlike ViT's attention mechanism, S6 lets each element of a 1D sequence interact only with a summary of the previously scanned information, which reduces quadratic complexity to linear. This interaction makes VMamba more efficient when processing large-scale data and lays the foundation for its results.
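To make the linear-cost idea concrete, here is a minimal sketch of a recurrent scan in plain Python. It is a deliberately simplified illustration, not the actual S6 implementation: the state is a single scalar, and the per-step coefficients `a` and `b` are hypothetical inputs (in a selective model they would be computed from the data). The point is only that each element updates one running state, so a sequence of length L costs L steps rather than L² pairwise comparisons.

```python
from typing import List


def linear_scan(x: List[float], a: List[float], b: List[float]) -> List[float]:
    """Run the recurrence h[t] = a[t] * h[t-1] + b[t] * x[t] in a single pass.

    a and b are hypothetical per-step coefficients supplied by the caller;
    this sketch does not derive them from the input as a selective model would.
    """
    state = 0.0
    outputs = []
    for x_t, a_t, b_t in zip(x, a, b):
        # The state summarizes everything scanned so far, so the current
        # element never has to revisit earlier elements individually.
        state = a_t * state + b_t * x_t
        outputs.append(state)
    return outputs


if __name__ == "__main__":
    seq = [1.0, 2.0, 3.0, 4.0]
    print(linear_scan(seq, a=[0.5] * 4, b=[1.0] * 4))
    # -> [1.0, 2.5, 4.25, 6.125]
```

With `a` fixed at 1 and `b` fixed at 1 the recurrence degenerates to a running sum; smaller values of `a` make the state forget older elements faster.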

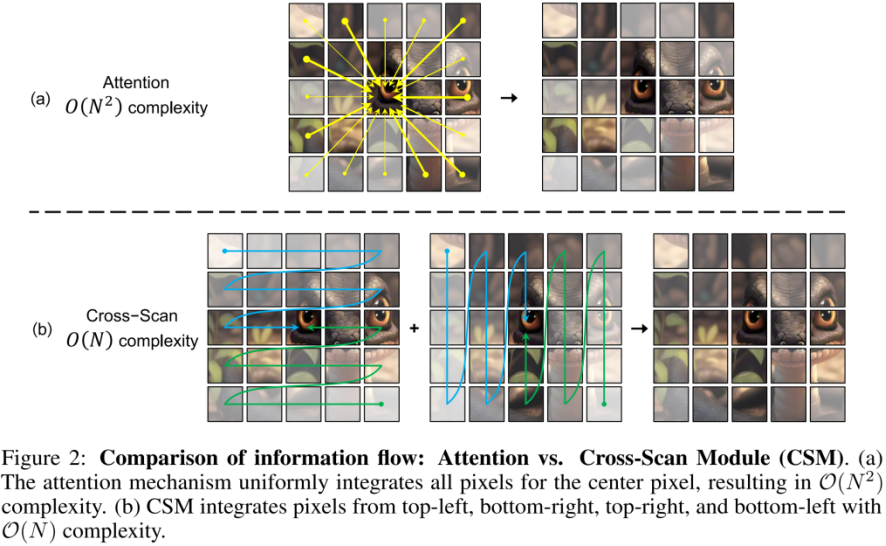

However, since visual signals (such as images) do not have the natural ordering of text sequences, the scanning method in S6 cannot be applied directly to visual signals. For this purpose, the researchers designed the Cross-Scan mechanism. The Cross-Scan Module (CSM) adopts a four-way scanning strategy, that is, it scans from the four corners of the feature map simultaneously (see the figure above). This strategy ensures that each element in the feature map integrates information from all other locations along different directions, forming a global receptive field while keeping computational complexity linear.
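The four-way idea can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions, not the authors' code: the four routes are taken to be row-wise and column-wise traversals, each forward and reversed; `causal_scan` is a fixed-decay stand-in for S6; and the four results are merged by simple summation. All function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def cross_scan(grid: Grid) -> List[List[float]]:
    """Flatten an H x W grid into four 1D sequences, one per scan route."""
    h, w = len(grid), len(grid[0])
    row_major = [grid[i][j] for i in range(h) for j in range(w)]
    col_major = [grid[i][j] for j in range(w) for i in range(h)]
    return [row_major, col_major, row_major[::-1], col_major[::-1]]


def causal_scan(seq: List[float], decay: float = 0.5) -> List[float]:
    """Stand-in for S6: h[t] = decay * h[t-1] + seq[t] (fixed, not selective)."""
    state, out = 0.0, []
    for value in seq:
        state = decay * state + value
        out.append(state)
    return out


def cross_merge(seqs: List[List[float]], h: int, w: int) -> Grid:
    """Map each scanned sequence back to grid positions and sum the four."""
    row_f, col_f, row_b, col_b = seqs
    row_b, col_b = row_b[::-1], col_b[::-1]  # restore forward order
    return [
        [
            row_f[i * w + j] + row_b[i * w + j]
            + col_f[j * h + i] + col_b[j * h + i]
            for j in range(w)
        ]
        for i in range(h)
    ]


def four_way_scan(grid: Grid) -> Grid:
    """Scan along four routes, then merge; cost is linear in H * W."""
    h, w = len(grid), len(grid[0])
    scanned = [causal_scan(seq) for seq in cross_scan(grid)]
    return cross_merge(scanned, h, w)


if __name__ == "__main__":
    # A single nonzero cell influences every output position.
    impulse = [[1.0, 0.0], [0.0, 0.0]]
    print(four_way_scan(impulse))
    # -> [[4.0, 0.75], [0.75, 0.25]]
```

Feeding in a grid that is zero except for one cell yields a nonzero value at every output position, which is the global receptive field described above. Each of the four scans touches every element once, so the total work stays proportional to the number of elements.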

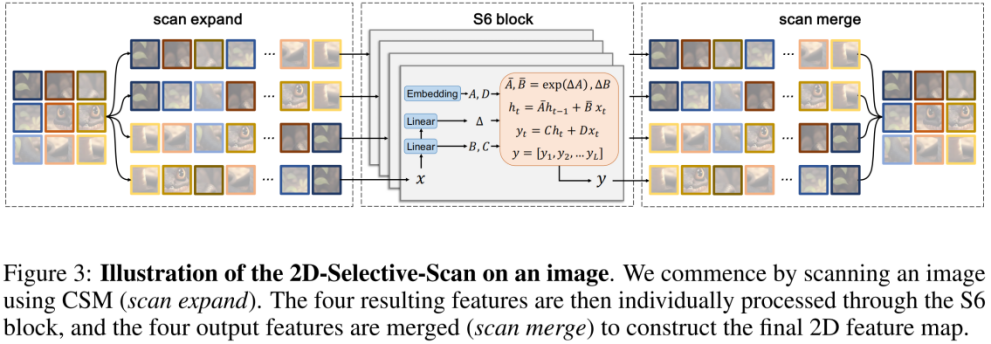

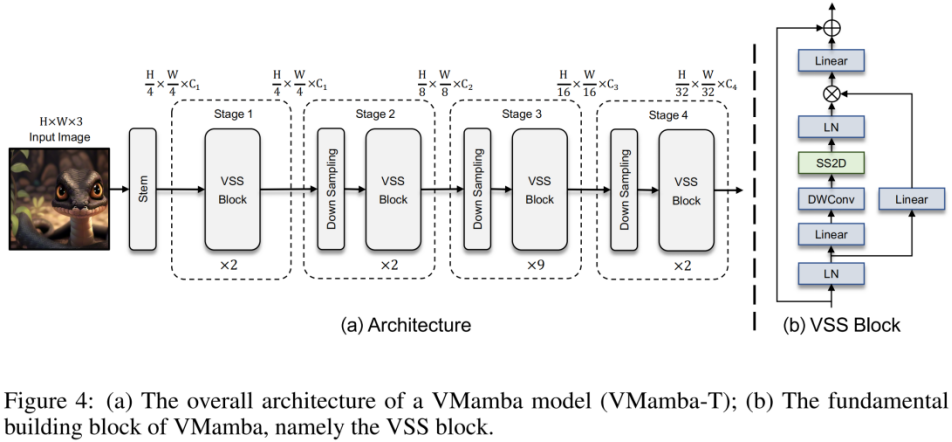

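Based on CSM, the authors designed the 2D-selective-scan (SS2D) module. As shown in the figure above, SS2D consists of three steps: scan expand, which flattens a 2D feature map into 1D sequences along four directions; S6 block, which feeds each of these sequences independently through the S6 operation; and scan merge, which fuses the resulting sequences back into a 2D feature map.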

The figure above shows the VMamba architecture proposed in the paper. The overall framework of VMamba is similar to that of mainstream vision models. The main difference lies in the operator used in the basic module (the VSS block). The VSS block uses the 2D-selective-scan operation introduced above, namely SS2D, which allows VMamba to achieve a global receptive field at linear complexity.

ImageNet classification

Comparing the experimental results under similar parameter counts and FLOPs, VMamba's accuracy is much higher than that of the Vision Mamba (Vim) model, which supports the potential of VMamba.

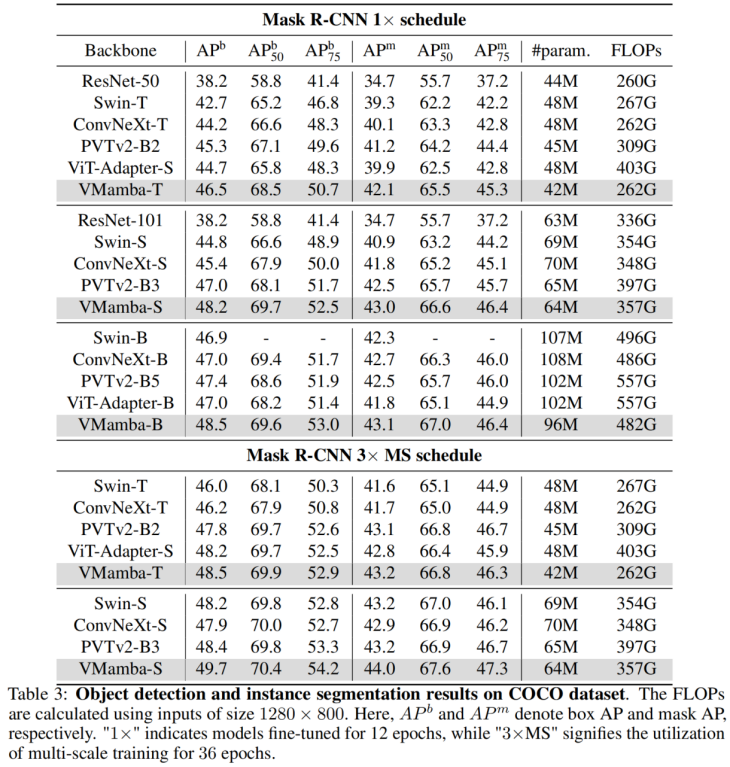

COCO object detection

On the COCO dataset, VMamba also maintains excellent performance: when fine-tuned for 12 epochs, VMamba-T/S/B reach 46.5%/48.2%/48.5% mAP respectively, exceeding Swin-T/S/B by 3.8%/3.6%/1.6% mAP and ConvNeXt-T/S/B by 2.3%/2.8%/1.5% mAP. These results indicate that VMamba works well on downstream visual tasks, demonstrating its potential to replace mainstream vision foundation models.

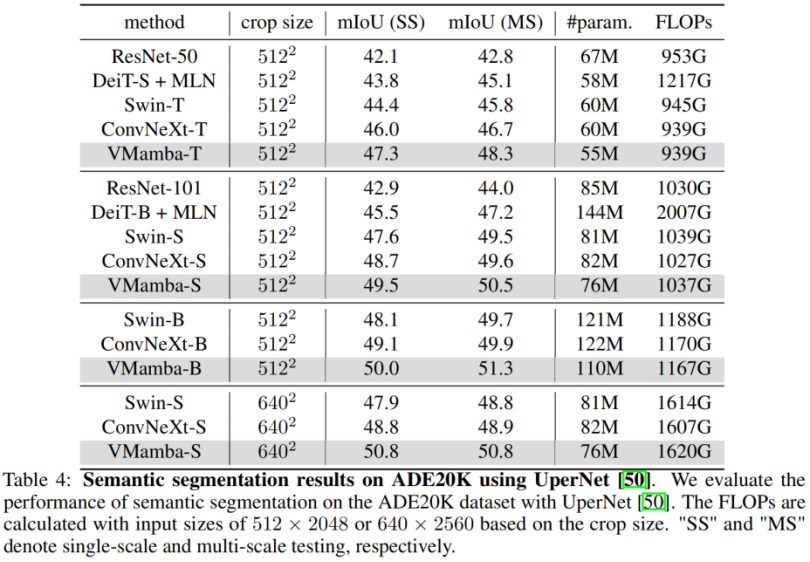

ADE20K Semantic Segmentation

On ADE20K, VMamba also shows excellent performance. The VMamba-T model achieves 47.3% mIoU at 512 × 512 resolution, a score that surpasses all competitors, including ResNet, DeiT, Swin, and ConvNeXt. This advantage is maintained for the VMamba-S/B models.

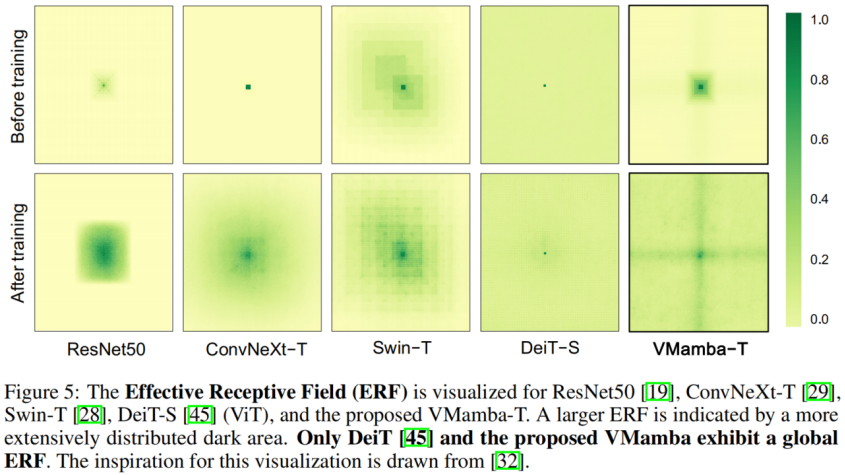

Effective Receptive Field

VMamba has a global effective receptive field; among the other models, only DeiT shares this property. It is worth noting, however, that DeiT pays for it with quadratic complexity, while VMamba has linear complexity.

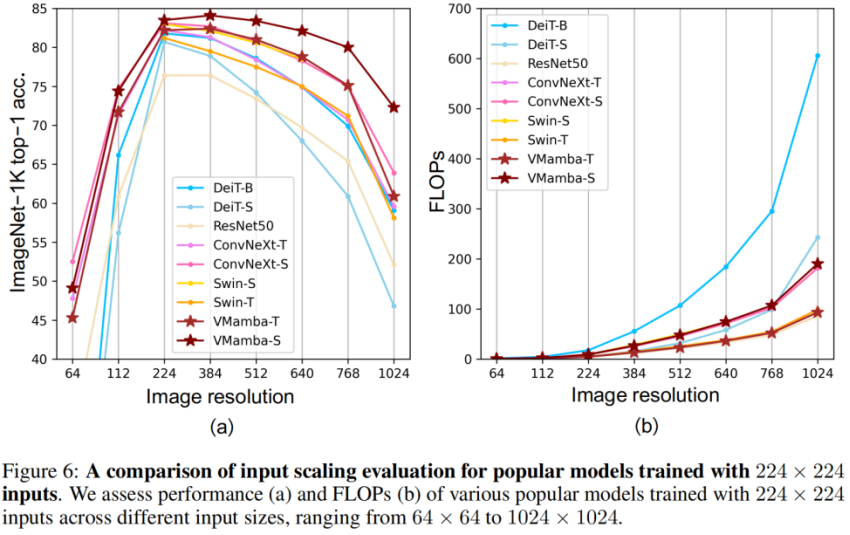

Input scale scaling

Finally, we look forward to more Mamba-based vision models being proposed, offering a third option for vision foundation models alongside CNNs and ViTs.
