This article is reprinted with the authorization of the Autonomous Driving Heart public account. Please contact the source for reprinting.

3D object detection from lidar point clouds is a classic problem. Both academia and industry have proposed many models to improve accuracy, speed and robustness. However, because outdoor environments are complex, object detection on outdoor point clouds still performs relatively poorly, and lidar point clouds are inherently sparse. How can this be addressed in a targeted way? The paper gives its own answer: aggregate information over time.

1. Paper information

2. Introduction

This paper discusses a key challenge in autonomous driving: accurately creating a three-dimensional representation of the surrounding environment. This is important for the reliability and safety of self-driving cars. In particular, autonomous vehicles need to be able to recognize surrounding objects, such as vehicles and pedestrians, and accurately determine their location, size and orientation. Typically, people use deep neural networks to process LiDAR data to accomplish this task.

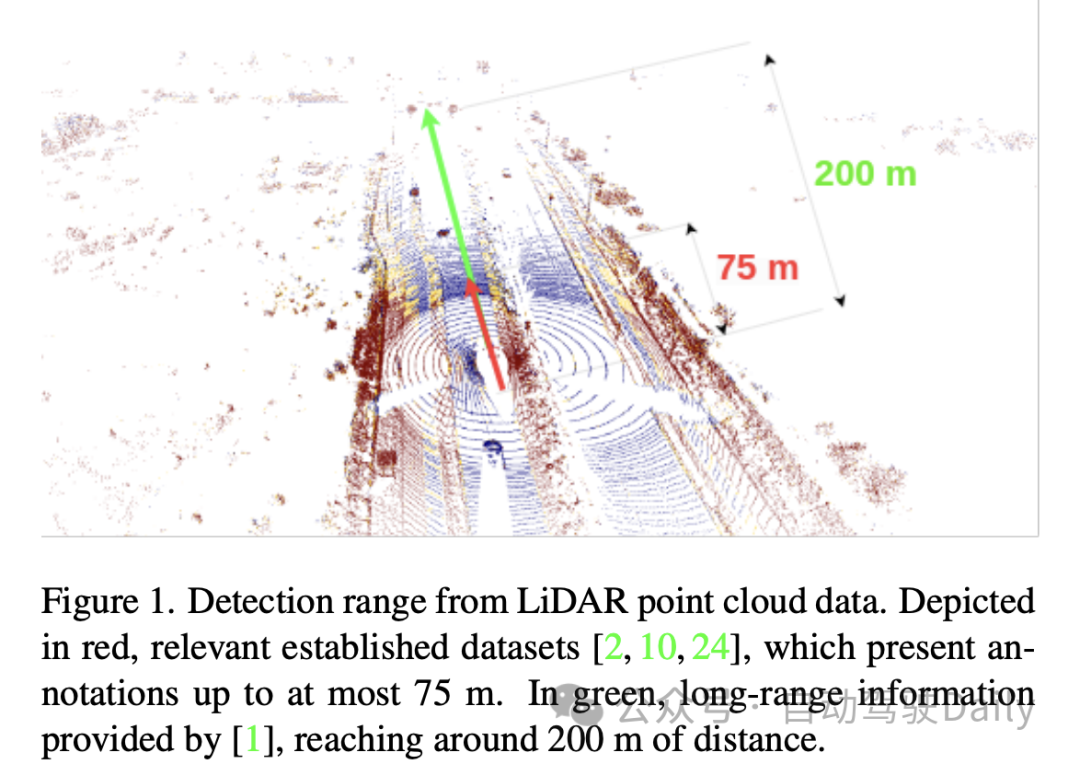

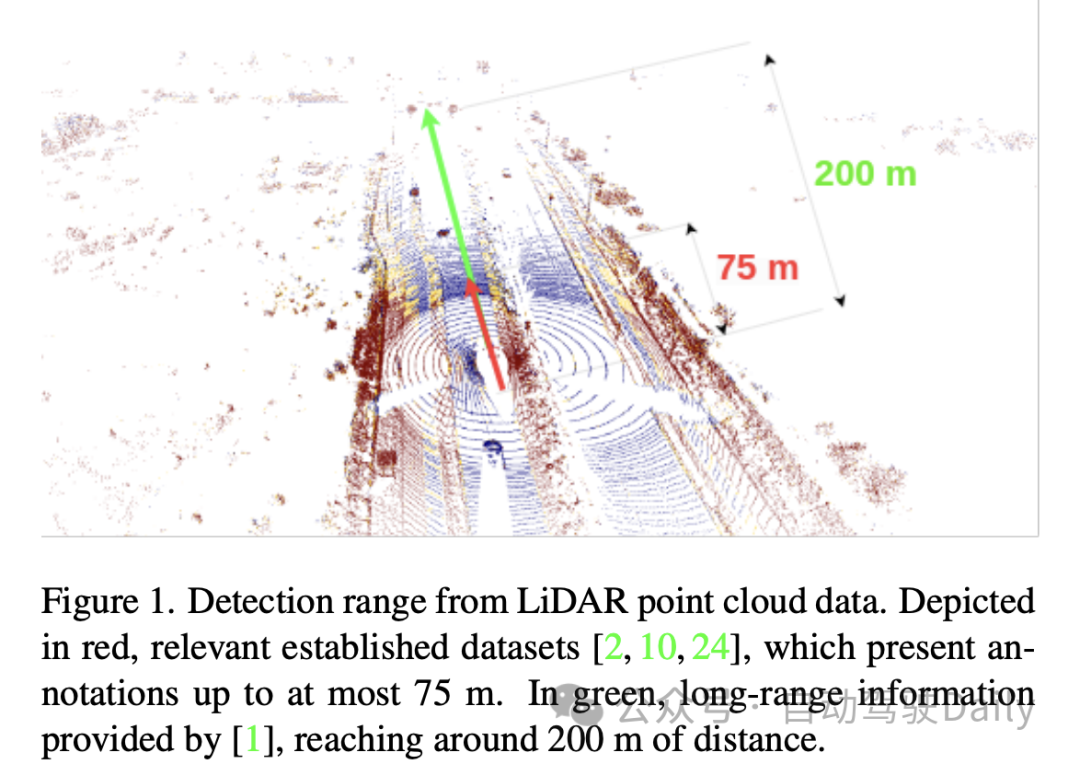

Most existing work focuses on single-frame methods, which use only one sensor scan at a time. These methods perform well on classic benchmarks with objects at distances up to 75 meters. However, lidar point clouds are inherently sparse, especially at long range, so the paper argues that a single scan is not enough for long-range detection (e.g., up to 200 meters). Multi-frame fusion is therefore needed to increase point-cloud density and improve accuracy at long distances. By registering and fusing scans from multiple time steps, a more complete and accurate reconstruction of the scene can be obtained, which makes tasks such as long-range object detection and obstacle avoidance more reliable and robust. The paper's contribution is accordingly a method based on multi-frame fusion.

One way to address this is point-cloud aggregation: successive lidar scans are combined to obtain a denser input. However, this approach is computationally expensive and does not exploit the benefits of aggregating information inside the network. An obvious alternative is a recurrent approach that accumulates information gradually over time. Because a recurrent model updates its state with each new frame, it can process long input sequences efficiently, saving computation while still solving the problem.

The article also mentions other techniques for extending the detection range, such as sparse convolution, attention modules and 3D convolution. However, these methods often ignore compatibility with the target hardware. The hardware used to train and deploy neural networks can differ significantly in supported operations and latency. For example, target hardware such as the NVIDIA Orin DLA often does not support operations like sparse convolution or attention. In addition, layers such as 3D convolution may be infeasible under real-time latency requirements. This makes it necessary to rely on simple operations such as 2D convolution.

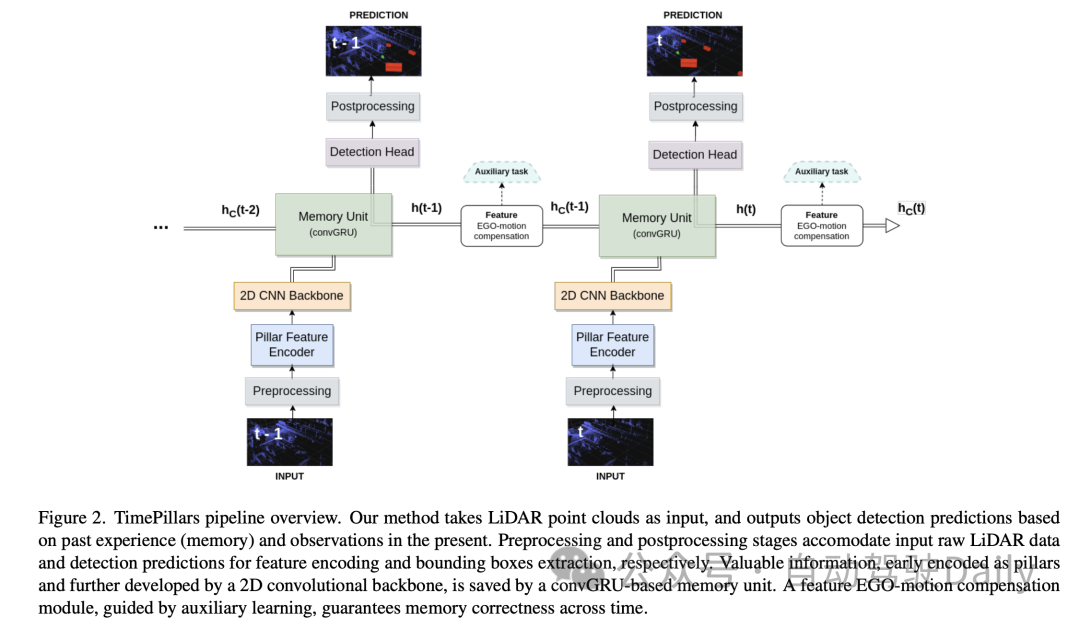

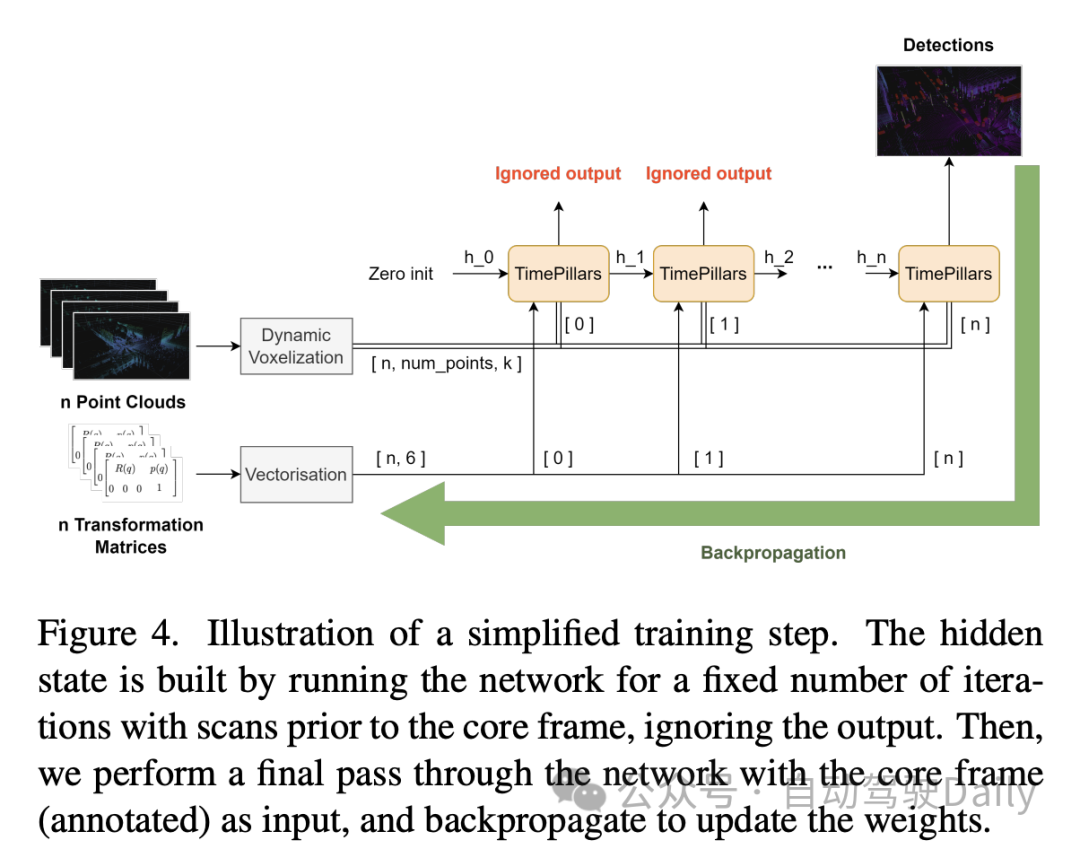

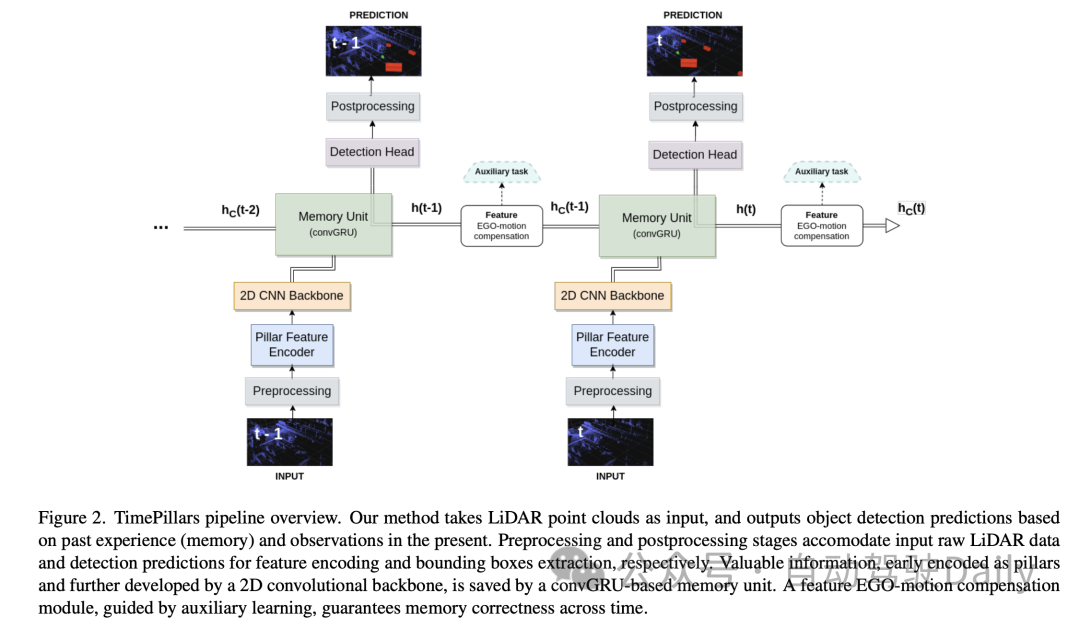

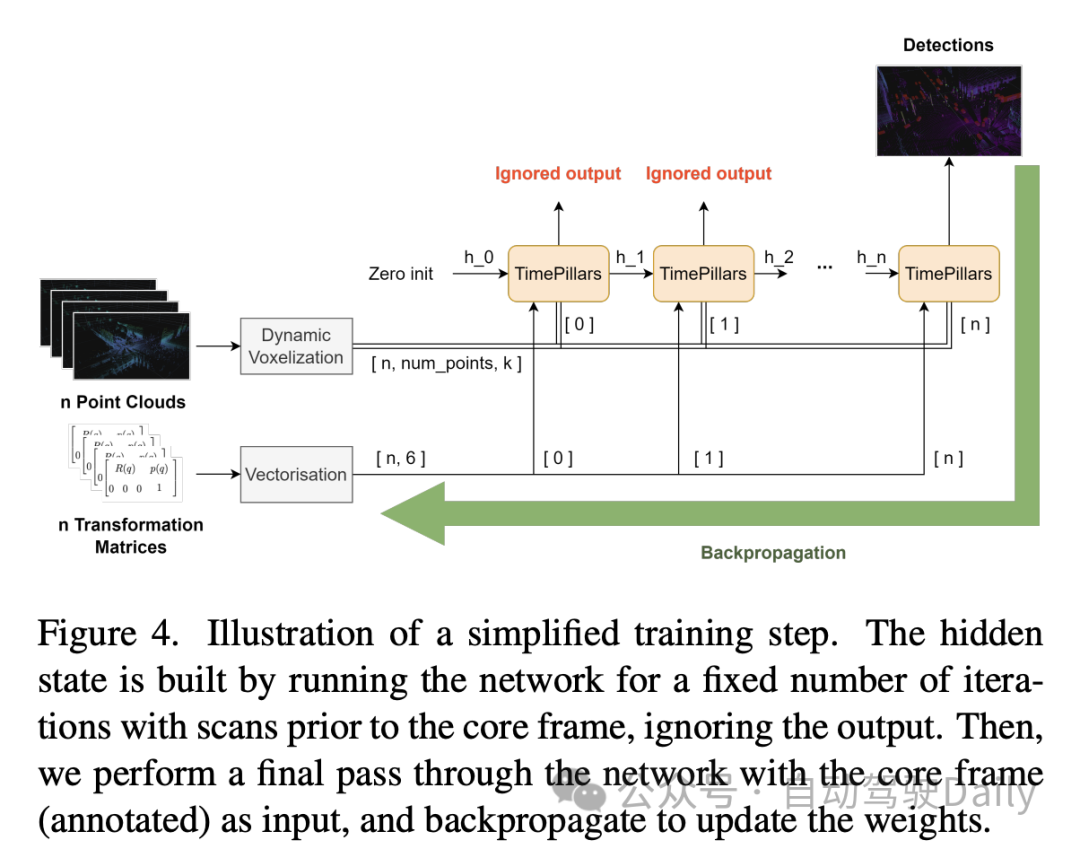

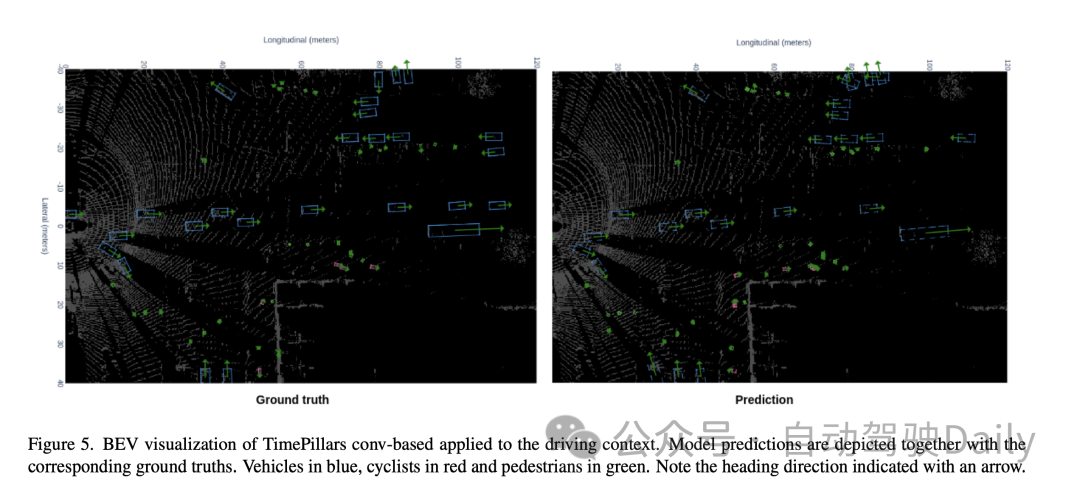

The paper proposes a new temporal recurrent model, TimePillars. It respects the set of operations supported by common target hardware, relies on 2D convolutions, and builds on the pillar (PointPillars) input representation and a convolutional recurrent unit. Ego-motion compensation is applied to the recurrent unit's hidden state with a single convolution and an auxiliary learning task; ablation studies show that using the auxiliary task to enforce correct compensation is appropriate. The paper also investigates where the recurrent module should be placed in the pipeline and shows that placing it between the backbone and the detection head gives the best performance. On the newly released Zenseact Open Dataset (ZOD), TimePillars significantly outperforms single-frame and multi-frame PointPillars baselines, especially for long-range detection (up to 200 meters) of the important cyclist and pedestrian categories. Finally, TimePillars has significantly lower latency than multi-frame PointPillars, which makes it suitable for real-time systems.

In summary, TimePillars addresses 3D lidar object detection with a temporal recurrent model. Compared with the single-frame and multi-frame PointPillars baselines, it performs significantly better at long range while respecting the operations supported by common target hardware. The paper also reports the first 3D lidar object detection benchmark on the new Zenseact Open Dataset. Its limitations are that it uses only lidar data, without other sensor inputs, and that it builds on a single state-of-the-art baseline. Nonetheless, the authors argue that their framework is general, so future improvements to the baseline should carry over into overall performance gains.

3. Method

3.1 Input preprocessing

In the input preprocessing stage, the authors use pillarization to process the input point cloud. Unlike conventional voxelization, it divides the point cloud into vertical columns (pillars) only in the horizontal plane (x and y axes), while each pillar spans a fixed height along the vertical (z) axis. This keeps the network input dimensions consistent and allows efficient processing with 2D convolutions.
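A minimal NumPy sketch of the pillarization step, assuming points are stored as an (N, D) array with x, y, z in the first three columns. The function name `pillarize`, the 2 m by 2 m area and the 0.5 m pillar size are illustrative choices, not values from the paper. The toy output also shows why the resulting grid is sparse.

```python
import numpy as np

def pillarize(points, x_range, y_range, pillar_size):
    """Assign every point to the vertical pillar that contains it.

    points: (N, D) array whose first three columns are x, y, z.
    Only x and y are discretized; z stays continuous, so each pillar spans
    the full height of the scene. Points outside the x/y range are dropped.
    Returns the kept points, their pillar row/col indices and the grid shape.
    """
    x_min, x_max = x_range
    y_min, y_max = y_range
    nx = int(round((x_max - x_min) / pillar_size))
    ny = int(round((y_max - y_min) / pillar_size))
    inside = ((points[:, 0] >= x_min) & (points[:, 0] < x_max)
              & (points[:, 1] >= y_min) & (points[:, 1] < y_max))
    kept = points[inside]
    # Clip guards against floating-point edge cases right at the upper bound.
    col = np.minimum(((kept[:, 0] - x_min) // pillar_size).astype(np.int64), nx - 1)
    row = np.minimum(((kept[:, 1] - y_min) // pillar_size).astype(np.int64), ny - 1)
    return kept, row, col, (ny, nx)

# Toy cloud: three points inside a 2 m x 2 m area, one point outside it.
pts = np.array([[0.1, 0.1, 1.0],
                [0.3, 0.1, -1.0],
                [1.5, 0.2, 0.0],
                [5.0, 0.0, 0.0]])
kept, row, col, grid = pillarize(pts, (0.0, 2.0), (0.0, 2.0), 0.5)
occupied = len(set(zip(row.tolist(), col.tolist())))
print(f"{occupied} of {grid[0] * grid[1]} pillars are non-empty")  # prints "2 of 16 pillars are non-empty"
```

The two points at different heights (z = 1.0 and z = -1.0) land in the same pillar, which is exactly the "fixed height along z" behavior described above.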

However, pillarization produces many empty pillars, so the data is very sparse. To deal with this, the paper uses dynamic voxelization. This technique removes the need for a predefined number of points per pillar, and thus for truncating or padding each pillar. Instead, the point cloud is processed as a whole and matched to a fixed total number of points, here 200,000. This preprocessing minimizes information loss and produces a more stable and consistent data representation.
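The sketch below illustrates the dynamic-voxelization idea under stated assumptions: the whole cloud is brought to a fixed budget (200,000 points in the paper, 5 in the demo) by random subsampling or masked padding, which is our assumption about how the budget is met, and points are then max-pooled into pillars with `np.maximum.at`, so no individual pillar is truncated or padded. The helper names `fixed_budget` and `scatter_max` are hypothetical.

```python
import numpy as np

N_TOTAL = 200_000  # total point budget quoted in the paper (the demo uses 5)

def fixed_budget(n_points, n_total, rng):
    """Choose which points fill a fixed-size input buffer (assumes n_points > 0).

    The budget applies to the whole cloud, not to each pillar. Larger clouds are
    randomly subsampled; smaller ones are padded with copies of index 0 that the
    returned mask marks as invalid.
    """
    if n_points >= n_total:
        gather = rng.choice(n_points, size=n_total, replace=False)
        return gather, np.ones(n_total, dtype=bool)
    pad = np.zeros(n_total - n_points, dtype=np.int64)
    gather = np.concatenate([np.arange(n_points, dtype=np.int64), pad])
    return gather, np.arange(n_total) < n_points

def scatter_max(features, pillar_idx, mask, n_pillars):
    """Max-pool per-point features into pillars without any per-pillar point cap.

    features: (N, C); pillar_idx: (N,) flat pillar index per point; mask: (N,) bool.
    Pillars that receive no valid point stay at zero.
    """
    out = np.full((n_pillars, features.shape[1]), -np.inf)
    np.maximum.at(out, pillar_idx[mask], features[mask])
    out[np.isneginf(out)] = 0.0
    return out

rng = np.random.default_rng(0)
feats = np.array([[1.0, 5.0], [3.0, 2.0], [-1.0, -2.0]])  # 3 points, 2 channels
pillar = np.array([0, 0, 2])                              # two points share pillar 0
gather, mask = fixed_budget(len(feats), 5, rng)           # tiny budget for the demo
bev = scatter_max(feats[gather], pillar[gather], mask, n_pillars=4)
print(bev)
```

Because the aggregation is a scatter over all valid points, a pillar can hold any number of points; only the total size of the input buffer is fixed.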

3.2 Model architecture

The authors then describe the model architecture in detail: a Pillar Feature Encoder, a 2D convolutional neural network (CNN) backbone, a memory unit and a detection head.

- Pillar Feature Encoder: This part maps the preprocessed input tensor to a bird's-eye-view (BEV) pseudo-image. Because dynamic voxelization is used, the simplified PointNet is adapted accordingly. The input is processed by a 1D convolution, batch normalization and a ReLU activation, producing a per-point feature tensor whose last dimension is the number of channels. In the original PointPillars encoder, max pooling over the points of each pillar precedes the final scatter step and forms the latent pillar features. Here the input is encoded as a flat set of points rather than a grid of pillars, so that max pooling is removed and the scatter max layer performs the per-pillar aggregation instead.

- Backbone: The 2D CNN backbone proposed in the original PointPillars paper is reused because of its efficiency. Three downsampling blocks (Conv2D-BN-ReLU) reduce the spatial resolution of the latent space, and three upsampling blocks built on transposed convolutions restore it.

- Memory Unit: The system's memory is modeled as a recurrent neural network (RNN), specifically a convolutional GRU (convGRU), the convolutional version of the Gated Recurrent Unit. A convGRU mitigates the vanishing-gradient problem and preserves the spatial structure of the features while remaining efficient. Compared with alternatives such as the LSTM, the GRU has fewer gates and therefore fewer trainable parameters, which can be seen as a form of memory regularization (it limits the complexity of the hidden state). Merging operations of a similar nature reduces the number of convolutional layers required and makes the unit more efficient.

- Detection Head: A simple modification of SSD (Single Shot MultiBox Detector). The core idea of SSD, a single pass without region proposals, is retained, but anchor boxes are dropped. The head outputs predictions directly for each grid cell; this gives up the ability to detect multiple objects in the same cell, but avoids tedious and often imprecise anchor-box tuning and simplifies inference. Linear layers produce the classification output and the localization regression (position, size and heading angle) separately. Only the size output passes through an activation function (ReLU), which keeps it non-negative. In addition, unlike related work, the paper avoids regressing the angle directly: it predicts the sine and cosine of the object's heading separately and recovers the angle from them.
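To make the memory unit concrete, here is a minimal NumPy convolutional GRU cell using the standard GRU equations with matrix products replaced by convolutions. Reading "merging operations of a similar nature" as computing the update and reset gates with a single convolution is our assumption, as are the 3x3 kernels, channel counts and random weights; the paper's own implementation is not reproduced here.

```python
import numpy as np

def conv2d_same(x, w, b):
    """Zero-padded 'same' 2D convolution (cross-correlation, odd kernel size).

    x: (C_in, H, W); w: (C_out, C_in, k, k); b: (C_out,) -> (C_out, H, W).
    """
    c_out, _, k, _ = w.shape
    p = k // 2
    h, wd = x.shape[1:]
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((c_out, h, wd)) + b[:, None, None]
    for i in range(k):
        for j in range(k):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

class ConvGRUCell:
    """Convolutional GRU cell; every gate is a convolution, so spatial layout is kept."""

    def __init__(self, c_in, c_hidden, k=3, seed=0):
        rng = np.random.default_rng(seed)
        self.c_hidden = c_hidden
        # Merged gate convolution: update gate z and reset gate r in one layer.
        self.w_gates = rng.normal(0.0, 0.1, (2 * c_hidden, c_in + c_hidden, k, k))
        self.b_gates = np.zeros(2 * c_hidden)
        # Separate convolution for the candidate state.
        self.w_cand = rng.normal(0.0, 0.1, (c_hidden, c_in + c_hidden, k, k))
        self.b_cand = np.zeros(c_hidden)

    def __call__(self, x, h):
        gates = sigmoid(conv2d_same(np.concatenate([x, h]), self.w_gates, self.b_gates))
        z, r = gates[:self.c_hidden], gates[self.c_hidden:]
        cand = np.tanh(conv2d_same(np.concatenate([x, r * h]), self.w_cand, self.b_cand))
        return (1.0 - z) * h + z * cand   # blend old memory with the candidate

rng = np.random.default_rng(0)
cell = ConvGRUCell(c_in=4, c_hidden=8)
h = np.zeros((8, 16, 16))                 # empty memory at the first frame
for _ in range(3):                        # three consecutive BEV feature maps
    x = rng.normal(size=(4, 16, 16))
    h = cell(x, h)
print(h.shape)
```

Because every gate is a convolution, the hidden state keeps its (C, H, W) BEV layout from frame to frame, which is what allows the unit to sit between the backbone and the detection head.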
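The size and angle handling of the detection head can be sketched as a decoding step. The (H, W, 8) output layout and the name `decode_head` are hypothetical; only the ReLU on sizes, the one-prediction-per-cell design and the sine/cosine heading parametrization come from the text.

```python
import numpy as np

def decode_head(raw):
    """Decode per-cell regression outputs of an anchor-free BEV head.

    raw: (H, W, 8) with the hypothetical channel layout
    [x, y, z, length, width, height, sin(yaw), cos(yaw)].
    Each cell yields exactly one box, hence at most one object per cell.
    """
    center = raw[..., 0:3]
    size = np.maximum(raw[..., 3:6], 0.0)        # ReLU keeps sizes non-negative
    yaw = np.arctan2(raw[..., 6], raw[..., 7])   # heading from (sin, cos), in [-pi, pi]
    return center, size, yaw

raw = np.zeros((1, 2, 8))
raw[0, 0] = [1.0, 2.0, 0.0, 4.0, -0.5, 1.5, 2.0, 0.0]   # unnormalized (sin, cos) is fine
raw[0, 1, 6:8] = [np.sin(-3.1), np.cos(-3.1)]            # heading close to -pi
center, size, yaw = decode_head(raw)
print(size[0, 0], yaw[0])
```

Regressing the raw angle is awkward because -3.1 rad and 3.1 rad describe almost the same heading yet lie far apart numerically, while their (sine, cosine) pairs are close; `arctan2` also works when the predicted pair is not exactly unit length.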

3.3 Feature Ego-Motion Compensation

In this part, the authors discuss how to handle the hidden-state features output by the convolutional GRU, which are expressed in the coordinate frame of the previous time step. If they were stored as-is and used to compute the next prediction, ego-motion would cause a spatial mismatch between the hidden state and the new input.

Different techniques can be used to perform this transformation. Ideally, already-corrected data would be fed to the network instead of transforming inside it. The paper does not take this route, because it would require resetting the hidden state at every inference step, transforming the previous point clouds, and propagating them through the whole network. That is not only inefficient but also defeats the purpose of using an RNN. In a recurrent setting, compensation therefore has to happen at the feature level. This is more efficient in principle, but it makes the problem harder. Conventional interpolation methods can be used to obtain the features in the transformed coordinate frame.
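For comparison with the learned approach, the conventional interpolation route can be sketched as inverse warping with bilinear sampling. This assumes the relative 2D pose has already been converted to BEV pixel units, that rotation is about the grid origin, and that cells mapping outside the previous grid are filled with zeros; `warp_hidden` and `bilinear_sample` are hypothetical helpers, not code from the paper.

```python
import numpy as np

def bilinear_sample(feat, u, v):
    """Sample feat (C, H, W) at continuous pixel coords (u = col, v = row); zeros outside."""
    c, h, w = feat.shape
    u0 = np.floor(u).astype(int)
    v0 = np.floor(v).astype(int)
    du, dv = u - u0, v - v0
    out = np.zeros((c,) + u.shape)
    for dy, wy in ((0, 1.0 - dv), (1, dv)):
        for dx, wx in ((0, 1.0 - du), (1, du)):
            uu, vv = u0 + dx, v0 + dy
            valid = (uu >= 0) & (uu < w) & (vv >= 0) & (vv < h)
            vals = np.zeros((c,) + u.shape)
            vals[:, valid] = feat[:, vv[valid], uu[valid]]
            out += vals * (wx * wy)
    return out

def warp_hidden(feat, rot, trans):
    """Resample a BEV hidden state into the current frame by inverse warping.

    rot (2x2) and trans (2,) map previous-frame pixel coords (col, row) to
    current-frame coords: p_cur = rot @ p_prev + trans. Each current cell
    looks up p_prev = rot.T @ (p_cur - trans) in the previous feature map.
    """
    _, h, w = feat.shape
    rows, cols = np.mgrid[0:h, 0:w].astype(float)
    p_cur = np.stack([cols.ravel(), rows.ravel()])        # (2, H*W)
    p_prev = rot.T @ (p_cur - trans[:, None])
    return bilinear_sample(feat, p_prev[0].reshape(h, w), p_prev[1].reshape(h, w))

feat = np.zeros((1, 5, 5))
feat[0, 2, 1] = 1.0                                       # one active cell: row 2, col 1
shift_one = warp_hidden(feat, np.eye(2), np.array([1.0, 0.0]))
shift_half = warp_hidden(feat, np.eye(2), np.array([0.5, 0.0]))
print(shift_one[0, 2], shift_half[0, 2])
```

A sub-pixel motion spreads the feature over neighboring cells, which illustrates the smoothing and boundary losses that a fixed interpolation introduces.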

Instead, inspired by the work of Chen et al., the paper performs the transformation with a convolution and an auxiliary task. Because that work gives few implementation details, the paper designs its own solution to the problem.

The paper's approach is to give the network the information it needs to transform the features through an additional convolutional layer. First, the relative transformation matrix between two consecutive frames is computed, i.e. the operation that maps features from one frame to the next. Then only its 2D part is extracted: the 2×2 rotation block and the x and y components of the translation.

This simplification discards the matrix entries that are constant, and working in the 2D (pseudo-image) domain reduces the 16 values of the 4×4 matrix to 6. The resulting matrix is then flattened and expanded to match the shape of the hidden features to be compensated, where the first dimension is the number of frames that need to be transformed. In this form, the six values can be concatenated to every latent pillar along the channel dimension of the hidden features.
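A sketch of how the six pose values could be attached to the hidden state, assuming the relative pose arrives as a (B, 4, 4) homogeneous matrix with z as the vertical axis, so that its top-left 2x2 block holds the in-plane rotation and the first two entries of its last column hold the x and y translation. The name `pose_channels` and the row-major ordering of the six values are illustrative assumptions.

```python
import numpy as np

def pose_channels(rel_pose_4x4, hidden):
    """Concatenate the 2D part of a relative pose to every cell of a hidden state.

    rel_pose_4x4: (B, 4, 4) homogeneous transforms between consecutive frames,
                  where B is the number of frames to transform.
    hidden:       (B, C, H, W) hidden features from the convolutional GRU.
    Returns (B, C + 6, H, W).
    """
    b, _, h, w = hidden.shape
    rot2d = rel_pose_4x4[:, :2, :2]                      # (B, 2, 2) in-plane rotation
    trans2d = rel_pose_4x4[:, :2, 3:4]                   # (B, 2, 1) x/y translation
    six = np.concatenate([rot2d, trans2d], axis=2).reshape(b, 6)   # 16 -> 6 values
    tiled = np.broadcast_to(six[:, :, None, None], (b, 6, h, w))   # one copy per pillar
    return np.concatenate([hidden, tiled], axis=1)

yaw = 0.1
T = np.eye(4)
T[:2, :2] = [[np.cos(yaw), -np.sin(yaw)], [np.sin(yaw), np.cos(yaw)]]
T[:3, 3] = [1.5, -0.2, 0.03]
hidden = np.zeros((1, 4, 3, 3))
out = pose_channels(T[None], hidden)
print(out.shape)
```

The z translation (0.03 in the example) is discarded, and the same six values are repeated at every cell, so a subsequent 2D convolution can read the pose locally at each pillar.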

Finally, the hidden-state features are passed through a 2D convolutional layer that learns to carry out the transformation. Note that applying a convolution does not by itself guarantee that the transformation happens: concatenating the pose channels only gives the network extra information about how it could be done. This is where auxiliary learning helps. During training, an additional objective (the coordinate transformation) is optimized in parallel with the main objective (object detection). The auxiliary task supervises the network through the transformation process to ensure that the compensation is correct. It is used only during training: once the network has learned to transform features correctly, the task is no longer needed, so it is not considered during inference. The next section compares the impact of this choice experimentally.

4. Experiment

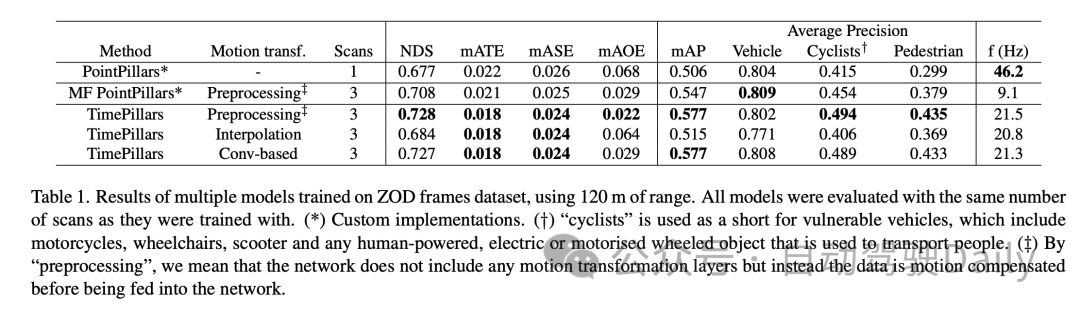

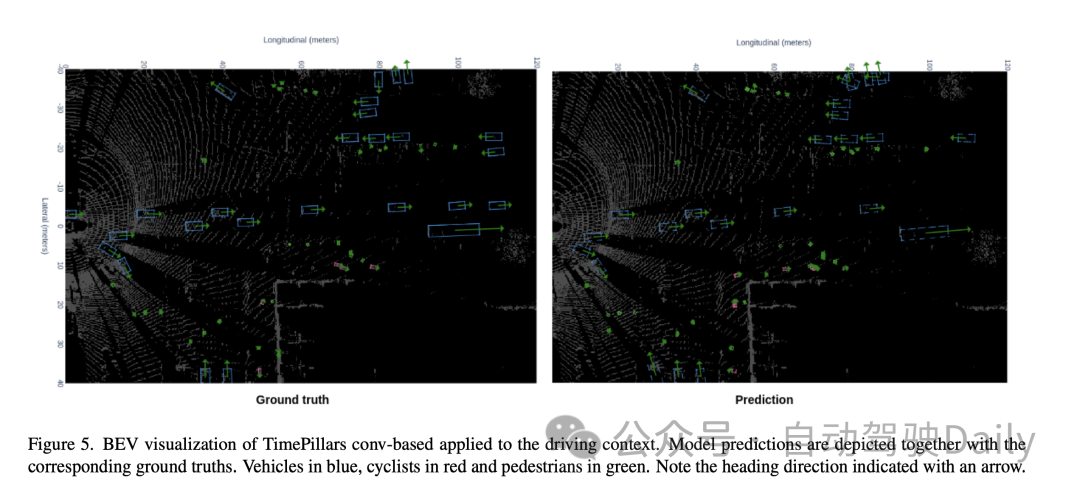

The experimental results show that TimePillars performs well on the Zenseact Open Dataset (ZOD) Frames dataset, especially for ranges up to 120 meters. They also show how TimePillars' performance differs between motion-compensation methods and how it compares with other methods.

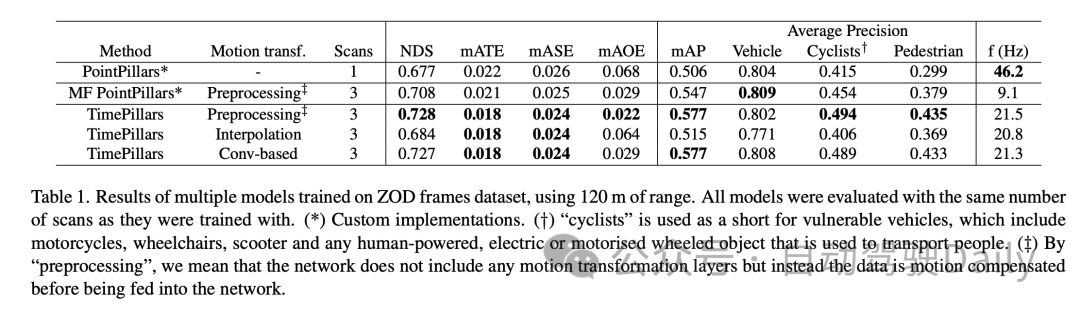

Compared with the baseline PointPillars and multi-frame (MF) PointPillars, TimePillars achieves clear improvements on several key metrics. It obtains a higher nuScenes Detection Score (NDS), which reflects both detection and localization quality. It also achieves lower mean average translation error (mATE), mean average scale error (mASE) and mean average orientation error (mAOE), indicating more precise localization and orientation estimation. The choice of motion-compensation implementation matters: with the convolution-based transformation (Conv-based), TimePillars performs particularly well on NDS, mATE, mASE and mAOE, which supports the effectiveness of this approach to motion compensation. TimePillars with the interpolation method also outperforms the baselines but falls behind the convolution-based variant on some metrics.

The mean average precision (mAP) results show that TimePillars performs well on the vehicle, cyclist and pedestrian categories, and the gains are largest on the harder cyclist and pedestrian classes. In terms of processing frequency (f, in Hz), TimePillars is slower than single-frame PointPillars but faster than multi-frame PointPillars while maintaining high detection performance. This shows that TimePillars can perform long-range detection and motion compensation while still running in real time.

In short, TimePillars shows clear advantages in long-range detection, motion compensation and processing speed, particularly when processing multi-frame data with convolution-based motion compensation. These results highlight its potential for 3D lidar object detection in autonomous vehicles.
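For readers unfamiliar with NDS, the nuScenes definition combines mAP with true-positive errors as NDS = (5·mAP + Σ(1 - min(1, err))) / 10 over five error metrics. The text only lists mATE, mASE and mAOE, so how the ZOD evaluation weights a shorter list of error terms is not stated here; the K-term generalization in this sketch is an assumption.

```python
def nds(map_score, tp_errors):
    """nuScenes Detection Score from mAP and mean true-positive errors.

    tp_errors: e.g. [mATE, mASE, mAOE, ...]. Each error is clipped at 1 and
    turned into a score 1 - min(1, err). With the five nuScenes metrics this
    equals (5 * mAP + sum of scores) / 10. For K metrics we use
    (K * mAP + sum of scores) / (2K), an assumed generalization that keeps
    mAP at half of the total weight.
    """
    k = len(tp_errors)
    tp_score = sum(1.0 - min(1.0, e) for e in tp_errors)
    return (k * map_score + tp_score) / (2 * k)

# Perfect localization with mAP 0.5 gives 0.75; errors of 1 or more contribute nothing.
print(nds(0.5, [0.0] * 5), nds(0.5, [1.2] * 5))
```

Because each error is clipped at 1, lower mATE, mASE and mAOE raise NDS even when mAP is unchanged, which is why the error metrics and NDS move together in the comparison above.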

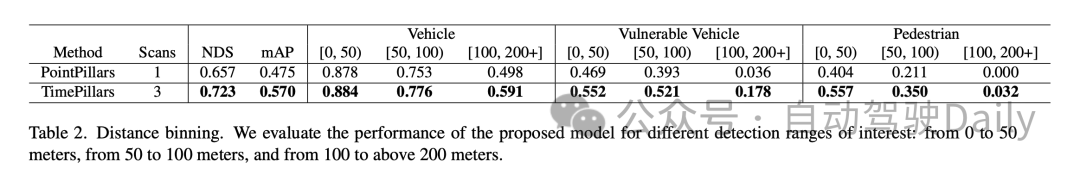

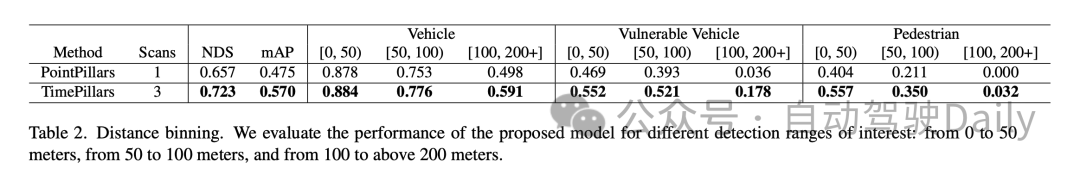

The results above show that TimePillars detects objects well across distance ranges, especially compared with the baseline PointPillars. The results are split into three detection ranges: 0 to 50 meters, 50 to 100 meters and beyond 100 meters.

First, the nuScenes Detection Score (NDS) and mean average precision (mAP) measure overall performance. TimePillars outperforms PointPillars on both, indicating better overall detection and localization. Specifically, TimePillars reaches an NDS of 0.723 versus 0.657 for PointPillars, and an mAP of 0.570 versus 0.475.

TimePillars also performs better within each distance range. For the vehicle category, its detection accuracy in the 0 to 50 m, 50 to 100 m and beyond-100 m ranges is 0.884, 0.776 and 0.591 respectively, higher than PointPillars in every range, so it detects vehicles more accurately both near and far. TimePillars also detects vulnerable vehicles (such as motorcycles, wheelchairs and electric scooters) better. Beyond 100 meters in particular, its detection accuracy is 0.178 versus only 0.036 for PointPillars, a clear advantage at long range. For pedestrians, TimePillars is also better, especially in the 50 to 100 m range, with 0.350 versus 0.211 for PointPillars. Even beyond 100 meters, TimePillars still achieves some detections (0.032), whereas PointPillars scores zero in this range.
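To put the quoted numbers in proportion, this short script computes absolute and relative gains of TimePillars over PointPillars using only the values reported in the text; it adds no new results.

```python
# (PointPillars, TimePillars) pairs exactly as quoted in the text.
reported = {
    "NDS": (0.657, 0.723),
    "mAP": (0.475, 0.570),
    "vulnerable vehicle, >100 m": (0.036, 0.178),
    "pedestrian, 50-100 m": (0.211, 0.350),
}

def gains(baseline, ours):
    """Absolute and relative improvement of `ours` over `baseline`."""
    absolute = ours - baseline
    return absolute, absolute / baseline

for name, (pp, tp) in reported.items():
    a, r = gains(pp, tp)
    print(f"{name}: +{a:.3f} absolute, {r:+.1%} relative")
```

Small absolute gains at long range can be very large relative gains because the baseline values there are close to zero.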

These results highlight TimePillars' stronger object detection across distance ranges. Both at close range and at the more challenging long range, TimePillars provides more accurate and reliable detections, which is critical for the safety and efficiency of autonomous vehicles.

5. Discussion

First, the main advantage of TimePillars is its effectiveness at long-range object detection. By combining dynamic voxelization with a convolutional GRU, the model handles sparse lidar data better, especially for distant objects. This is critical for the safe operation of autonomous vehicles in complex and changing road environments. The model also runs fast enough for real-time applications. In addition, TimePillars uses a convolution-based method for motion compensation, which improves on the traditional interpolation approach. The auxiliary task used during training ensures the transformation is correct, improving accuracy when features are aligned across frames while the ego vehicle moves.

However, the study also has limitations. First, although TimePillars handles distant objects well, this gain comes at some cost in processing speed: the model is still fast enough for real-time use but slower than the single-frame method. In addition, the paper focuses on lidar data and does not consider other sensor inputs such as cameras or radar, which may limit its use in more complex multi-sensor setups.

In summary, TimePillars shows significant advantages in 3D lidar object detection for autonomous vehicles, especially in long-range detection and motion compensation. Despite a slight trade-off in processing speed and the lack of multi-sensor input, it represents an important advance in this field.

6. Conclusion

This work shows that taking past sensor data into account is better than using only current information. Access to earlier observations of the driving environment compensates for the sparsity of lidar point clouds and leads to more accurate predictions. We show that recurrent networks are a suitable way to achieve this. Giving the system memory yields a more robust solution than point-cloud aggregation methods, which build denser data representations through extensive processing. Our proposed method, TimePillars, implements this recurrent formulation. By adding only three convolutional layers to the inference process, we show that basic network building blocks are enough to achieve significant results while meeting existing efficiency and hardware-integration requirements. To the best of our knowledge, this work provides the first benchmark results for 3D object detection on the newly introduced Zenseact Open Dataset. We hope our work contributes to safer and more sustainable roads in the future.

Original link: https://mp.weixin.qq.com/s/94JQcvGXFWfjlDCT77gjlA

