(Nweon November 27, 2023) According to earlier reports, Samsung has drawn up a complete metaverse strategy aimed at building a Samsung-centered XR ecosystem, and is actively recruiting talent. A newly published patent application shows that the company has also considered the ultimate interface: the brain-computer interface.

In the past, brain-computer interface research was mostly associated with Meta, Valve, and various startups, although companies such as Microsoft have also explored it. Now, companies actively expanding into XR are beginning to explore more advanced brain-computer interfaces. In a patent application titled "Information Generation Method and Device", Samsung proposes applying a brain-computer interface to sensory feedback stimulation in XR.

Samsung notes that in current XR technology, sensory simulations are mainly implemented with sensors. Because each type of sensory simulation requires its own sensor, the process is cumbersome. In addition, the available sensors do not cover every sense, so many simulations cannot be implemented at all. As a result, the effects achieved with these sensors are far from reality, and users do not get a realistic experience.

Samsung therefore proposes using a brain-computer interface device to provide realistic sensory simulation. The system obtains the user's position relative to a target object in the virtual environment, determines the sensory information corresponding to the target object based on that position information and the object's attribute information, and finally converts the sensory information into electrical signals that stimulate the user through the brain-computer interface device.
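The three-step flow can be sketched as a small orchestration function. This is a minimal illustration, not Samsung's implementation: the function name, the callables, and the use of plain tuples and dicts for positions and sensory information are all hypothetical, since the patent does not define data formats.

```python
from typing import Any, Callable, Optional

# Hypothetical aliases; the patent does not specify concrete data formats.
Position = tuple[float, float, float]
SensoryInfo = dict[str, Any]


def run_information_generation(
    get_relative_position: Callable[[], Position],
    determine_sensory_info: Callable[[Position, dict], Optional[SensoryInfo]],
    stimulate: Callable[[SensoryInfo], None],
    target_attributes: dict,
) -> Optional[SensoryInfo]:
    """Run one pass of the three operations described in the patent."""
    relative = get_relative_position()                             # step 1: position
    sensory = determine_sensory_info(relative, target_attributes)  # step 2: sensory info
    if sensory is not None:
        stimulate(sensory)                                         # step 3: BCI stimulation
    return sensory
```

In a real system, each callable would wrap the positioning hardware, the lookup table or trained model, and the stimulator driver respectively; keeping them as parameters makes each stage replaceable.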

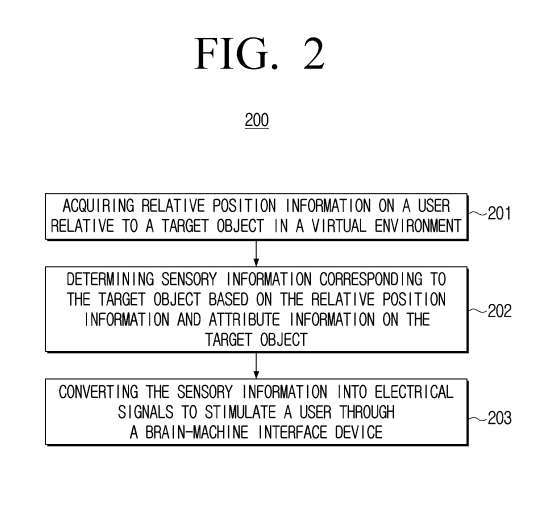

According to an example embodiment, Figure 2 shows a flowchart 200 of the information generation method. The method includes operation 201: obtaining the relative position information of the user with respect to the target object in the virtual environment. In one embodiment, one or more devices that work cooperatively may be referred to as the execution entity. The execution entity can establish a spatial rectangular coordinate system, obtain in real time the user's position in this coordinate system and the position of the target object in the virtual environment, and determine the user's position relative to the target object from these two positions.

The execution entity may periodically obtain the position of the user in the rectangular coordinate system and the position of the target object in the virtual environment. The target object can be any object in the virtual environment, such as a table, a glass of water, or bread.
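A minimal sketch of the relative-position computation, assuming both positions are expressed in the same Cartesian coordinate system and the same units. The function names are hypothetical; the patent only states that relative position is derived from the two positions.

```python
import math

Point = tuple[float, float, float]


def relative_position(user: Point, target: Point) -> tuple[Point, float]:
    """Return the offset vector from the user to the target, plus their Euclidean distance."""
    offset = (target[0] - user[0], target[1] - user[1], target[2] - user[2])
    return offset, math.dist(user, target)


def track_relative_positions(samples):
    """Apply relative_position to each (user_pos, target_pos) pair polled at a fixed interval."""
    return [relative_position(user, target) for user, target in samples]
```

For example, `relative_position((0.0, 0.0, 0.0), (3.0, 4.0, 0.0))` yields the offset `(3.0, 4.0, 0.0)` and a distance of `5.0`.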

In operation 202, sensory information corresponding to the target object is determined based on the relative position information and attribute information of the target object.

According to example embodiments, after obtaining the user's position relative to the target object in the virtual environment, the execution entity may determine the sensory information corresponding to the target object using a correspondence table or a sensory information prediction model. For example, the correspondence table may store predetermined relative position information and target-object attribute information together with the associated sensory information. The prediction model may be obtained in advance through training.
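The correspondence-table approach could look like the sketch below. The table contents, the attribute names, and the idea of discretizing relative position into distance bands are assumptions made for illustration; the patent does not publish the table's layout.

```python
# Hypothetical correspondence table rows: (attribute, max_distance_m, sensory_info).
CORRESPONDENCE_TABLE = [
    ("flower_aroma", 0.3, {"modality": "smell", "intensity": 1.0}),
    ("flower_aroma", 1.0, {"modality": "smell", "intensity": 0.5}),
    ("flower_aroma", 2.0, {"modality": "smell", "intensity": 0.1}),
    ("smooth", 0.01, {"modality": "touch", "texture": "smooth"}),
]


def lookup_sensory_info(distance_m, attributes, table=CORRESPONDENCE_TABLE):
    """For each attribute, return the entry of the tightest distance band containing distance_m."""
    results = []
    for attribute in attributes:
        # Sort this attribute's rows so the smallest matching band wins.
        bands = sorted((row for row in table if row[0] == attribute), key=lambda row: row[1])
        for _, max_distance, info in bands:
            if distance_m <= max_distance:
                results.append(info)
                break
    return results
```

An object out of range of every band simply yields an empty list, so no stimulation would be triggered for it.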

According to example embodiments, the model can be trained on samples that pair relative position information and target-object attribute information with the corresponding sensory information, yielding a model that predicts sensory information.
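The patent does not say what kind of model is used. As a stand-in, the sketch below uses a 1-nearest-neighbour predictor: it memorizes (feature, sensory-information) samples and returns the label of the closest sample. The class name and the choice of features (distance and object temperature) are hypothetical.

```python
import math


class NearestNeighborSensoryModel:
    """Hypothetical stand-in for the patent's 'sensory information prediction model'."""

    def __init__(self):
        self.samples = []

    def fit(self, features, labels):
        # Each feature is a numeric tuple, e.g. (distance_m, temperature_c).
        if len(features) != len(labels):
            raise ValueError("features and labels must have the same length")
        self.samples = list(zip(features, labels))
        return self

    def predict(self, feature):
        if not self.samples:
            raise RuntimeError("model has not been fitted")
        # Return the label of the training sample closest in feature space.
        _, label = min(self.samples, key=lambda sample: math.dist(sample[0], feature))
        return label
```

Because features with different units are compared with a plain Euclidean distance, a practical version would normalize each feature first or use a learned model.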

The sensory information of the target object may include at least one of touch, hearing, smell, or taste. Sensory information can be represented by stimulation parameters, which can be determined from the sensations the user actually experiences with the corresponding object in the real environment.

Interestingly, Samsung suggests that the stimulator could be CereStim, a fully programmable 96-channel neurostimulator from brain-computer interface company Blackrock. The company notes, however, that this device is just one option and that other stimulators can be used.

In an example scenario, the user touches an object at 30 °C while electrode activity is recorded with a NeuroPort neural signal collector. The neural activity recorded by the electrodes is amplified, analog-to-digital (A/D) sampled at 30 kHz, and recorded with the NeuroPort Neural Signal Processor (NSP) system. Stimulation parameters are then written through CereStim's MATLAB API, and the electrode signals are recorded again under different pulse stimulation patterns and current intensities. When the evoked electrode signals become sufficiently close to the originally recorded signals, the corresponding settings are taken as the stimulation parameters for touching a 30 °C object.
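The calibration loop amounts to a search over stimulation settings that minimizes the mismatch between the evoked and the originally recorded signals. The sketch below uses an exhaustive grid search and RMS error; both are assumptions, and `stimulate_and_record` is a hypothetical callable standing in for the stimulator and recording hardware (it is not the CereStim API).

```python
import itertools
import math


def rms_error(a, b):
    """Root-mean-square difference between two equally long signal feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def calibrate(target_signal, amplitudes_ua, frequencies_hz, stimulate_and_record):
    """Try every (amplitude, frequency) pair and keep the one whose evoked signal best matches the target."""
    best_params, best_error = None, math.inf
    for amp, freq in itertools.product(amplitudes_ua, frequencies_hz):
        evoked = stimulate_and_record(amp, freq)  # hypothetical hardware round trip
        error = rms_error(target_signal, evoked)
        if error < best_error:
            best_params, best_error = (amp, freq), error
    return best_params, best_error


def simulated_device(amp, freq):
    """Toy response model used only to exercise the search."""
    return [amp / 2, freq / 10]
```

With the toy device, `calibrate([30.0, 10.0], [20, 40, 60, 80], [50, 100, 150], simulated_device)` selects `(60, 100)` with zero error. Real neural responses are noisy and nonlinear, so a practical search would average repeated trials.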

Stimulation parameters for other touch, hearing, or smell sensations can be obtained in the same way.

In operation 203, the sensory information is converted into electrical signals, and the user is stimulated through the brain-computer interface device.

After determining the sensory information corresponding to the target object, the execution entity can use the brain-computer interface device to convert the sensory information into electrical signals, producing the corresponding sensation by stimulating the relevant regions of the user's cerebral cortex.
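The patent does not describe the waveform. A common choice in cortical microstimulation is a charge-balanced biphasic pulse train, so the sketch below assumes one: each pulse is a negative (cathodic) phase followed by an equal and opposite positive (anodic) phase. The parameter names and the 1 MHz sample rate are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StimulationParams:
    amplitude_ua: float   # current amplitude of each phase, in microamps
    pulse_width_us: int   # width of each phase, in microseconds
    frequency_hz: float   # pulse repetition rate
    duration_ms: int      # total length of the pulse train


def biphasic_pulse_train(p: StimulationParams, sample_rate_hz: int = 1_000_000) -> list[float]:
    """Cathodic-first, charge-balanced biphasic pulse train sampled at sample_rate_hz (values in µA)."""
    total = int(sample_rate_hz * p.duration_ms / 1000)
    period = int(sample_rate_hz / p.frequency_hz)
    phase = int(sample_rate_hz * p.pulse_width_us / 1_000_000)
    if 2 * phase > period:
        raise ValueError("pulse is longer than the repetition period")
    samples = [0.0] * total
    for start in range(0, total, period):
        for i in range(2 * phase):
            if start + i >= total:
                break
            # First phase negative, second phase positive, so each pulse carries zero net charge.
            samples[start + i] = -p.amplitude_ua if i < phase else p.amplitude_ua
    return samples
```

A 50 ms train at 100 Hz with 200 µs phases contains five pulses, and the samples sum to zero, which is the charge-balance property.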

According to another example embodiment, determining the sensory information corresponding to the target object based on its relative position information and attribute information may include determining olfactory information corresponding to the target object based on the user's facial orientation and the distance between the user's head and the target object, and using that olfactory information as the sensory information corresponding to the target object.

According to another example embodiment, in response to determining that the target object has an odor attribute, the method determines olfactory information corresponding to the target object according to the user's facial orientation and the distance between the user's head and the target object, and uses that olfactory information as the sensory information corresponding to the target object.

Odor attributes can include fruit aroma, flower aroma, food aroma, and similar attribute information.
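A minimal sketch of the odor-attribute check followed by an orientation- and distance-dependent intensity. The linear distance falloff, the cosine weighting by facing angle, the 2 m range, and the 90° field of view are all illustrative assumptions; the patent leaves this mapping to a correspondence table.

```python
import math
from typing import Optional


def olfactory_info(attributes: dict, facing_angle_deg: float, head_distance_m: float,
                   max_range_m: float = 2.0, field_of_view_deg: float = 90.0) -> Optional[dict]:
    """Return olfactory information for an object with an odor attribute, or None."""
    odor = attributes.get("odor")  # e.g. "flower aroma", "fruit aroma"
    if odor is None:
        return None  # no odor attribute, so no olfactory stimulation
    if head_distance_m > max_range_m or abs(facing_angle_deg) > field_of_view_deg:
        return None  # too far away or facing away
    distance_factor = 1.0 - head_distance_m / max_range_m
    facing_factor = math.cos(math.radians(facing_angle_deg))
    return {"modality": "smell", "odor": odor,
            "intensity": round(distance_factor * facing_factor, 3)}
```

Facing a flower head-on from 1 m gives an intensity of 0.5 under these assumptions; turning away or stepping back reduces it.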

Similarly, in one embodiment, when the sensory information is determined from the relative position information and attribute information of the target object and the target object is confirmed to have a sound attribute, auditory information corresponding to the target object can be determined based on the user's facial orientation and the distance between the user's head and the target object, and then used as the sensory information corresponding to the target object.
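For the auditory case, one plausible mapping from facing direction and distance to a sensation is per-ear loudness. The sketch below assumes a signed azimuth (positive means the source is to the user's right), an inverse-distance gain, and constant-power stereo panning; none of these choices come from the patent.

```python
import math
from typing import Optional


def auditory_info(attributes: dict, azimuth_deg: float, head_distance_m: float,
                  reference_m: float = 1.0) -> Optional[dict]:
    """Return per-ear gains for an object with a sound attribute, or None."""
    sound = attributes.get("sound")
    if sound is None:
        return None  # no sound attribute, so no auditory stimulation
    if head_distance_m <= 0:
        raise ValueError("distance must be positive")
    gain = min(1.0, reference_m / head_distance_m)  # inverse-distance law, capped near the head
    clamped = max(-90.0, min(90.0, azimuth_deg))    # azimuths beyond +/-90 degrees are clamped
    pan = (clamped + 90.0) / 180.0                  # 0 = hard left, 1 = hard right
    return {
        "modality": "hearing",
        "sound": sound,
        "left_gain": gain * math.cos(pan * math.pi / 2),
        "right_gain": gain * math.sin(pan * math.pi / 2),
    }
```

Constant-power panning keeps the sum of squared gains equal to the distance gain squared, so perceived loudness stays steady as the user turns their head.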

The execution entity can use wearable positioning devices on the left and right sides of the user's head to obtain the coordinates of each side of the head, determine the line connecting these two coordinates, and then determine the user's facial orientation from the angle between this line and the direction of the plane in which the target object's coordinates lie.

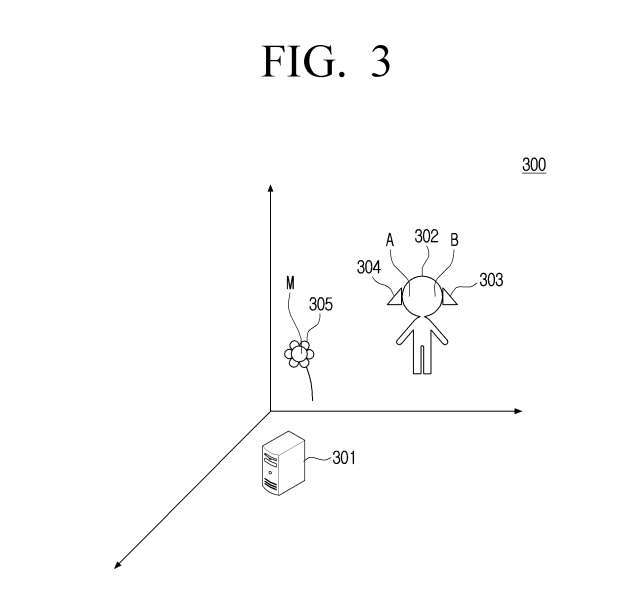

As shown in Figure 3, the execution entity 301 can establish a spatial rectangular coordinate system with the UWB base station as the origin, and use the first wearable positioning device 303 on the left side of the user's head and the second wearable positioning device 304 on the right side to obtain the coordinates A and B of the user's head. According to example embodiments, the wearable positioning devices 303 and 304 may be UWB chips, and the UWB base station can be implemented by the execution entity 301. Coordinate A corresponds to chip 303, that is, the left side of the user's head, and coordinate B corresponds to chip 304, that is, the right side of the user's head.

At the same time, the execution entity obtains the coordinate M of the target object 305 and takes the angle between line AB and the direction of the plane in which M lies as the user's facial orientation. Here, the target object 305 is a virtual flower. The execution entity can look up the olfactory information corresponding to the target object in a predetermined correspondence table according to the user's facial orientation and the distance between the user's head and the target object, and use it as the sensory information corresponding to the target object. The correspondence table records the user's facial orientation, the distance between the user's head and the target object, and the associated olfactory information.

In this embodiment, the coordinates of the left and right sides of the user's head are obtained from wearable positioning devices on each side of the head, and the user's facial orientation is determined from the angle between the line connecting these two coordinates and the direction of the plane in which the target object's coordinates lie. According to the patent, this improves the accuracy of determining the user's facial orientation.
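The patent's wording about "the angle between line AB and the plane where M is located" is open to interpretation. One reading, sketched below, treats the face direction as perpendicular to the ear-to-ear line AB in the horizontal plane and measures the angle between it and the direction from the head's midpoint to M. The axis convention (right-handed, x to the right, y forward, z up) and the function names are assumptions.

```python
import math

Point = tuple[float, float, float]


def facing_angle_deg(left: Point, right: Point, target: Point) -> float:
    """Unsigned horizontal angle between the face direction and the head-to-target direction."""
    ex, ey = right[0] - left[0], right[1] - left[1]  # ear-to-ear vector A -> B
    fx, fy = -ey, ex                                 # rotate +90 degrees: forward when z is up
    mx, my = (left[0] + right[0]) / 2, (left[1] + right[1]) / 2
    tx, ty = target[0] - mx, target[1] - my          # head midpoint -> target
    norm = math.hypot(fx, fy) * math.hypot(tx, ty)
    if norm == 0:
        raise ValueError("degenerate geometry")
    cos_angle = max(-1.0, min(1.0, (fx * tx + fy * ty) / norm))  # clamp for acos
    return math.degrees(math.acos(cos_angle))


def head_distance(left: Point, right: Point, target: Point) -> float:
    """Distance from the midpoint between A and B to the target M."""
    mid = tuple((a + b) / 2 for a, b in zip(left, right))
    return math.dist(mid, target)
```

With A = (-0.1, 0, 1.6) and B = (0.1, 0, 1.6), a flower at (0, 2, 1.6) is straight ahead (0°), one at (2, 0, 1.6) is at 90°, and one at (0, -2, 1.6) is behind the user (180°). Both outputs could then be used as keys into the correspondence table.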

Based on the relative position of the user's head with respect to the target object, the execution entity can detect whether the target object is located at a predetermined position relative to the user's head. In response to determining that it is and that the target object has an edible attribute, the execution entity determines the taste information corresponding to that edible attribute as the sensory information corresponding to the target object.

Edible attributes can include attribute information such as banana flavor, apple flavor, and so on.
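A minimal sketch of the taste check. It assumes the "predetermined position" is a point near the mouth (`mouth_pos`) and that "located at" means within a hypothetical 5 cm radius of it. It also assumes the edible attribute is stored under a `flavor` key. None of these specifics come from the patent.

```python
import math
from typing import Optional

Point = tuple[float, float, float]


def taste_info(target_pos: Point, mouth_pos: Point, attributes: dict,
               threshold_m: float = 0.05) -> Optional[dict]:
    """Return taste information if an edible target is within threshold_m of the mouth."""
    flavor = attributes.get("flavor")  # e.g. "banana", "apple"
    if flavor is None:
        return None  # not edible, so no taste stimulation
    if math.dist(target_pos, mouth_pos) > threshold_m:
        return None  # not at the predetermined position yet
    return {"modality": "taste", "flavor": flavor}
```

Whether the user touched the object beforehand does not enter this check, which matches the patent's note that a touch action may or may not precede it.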

Note that before the target object is determined to be at the predetermined position relative to the user's head, the user may or may not have touched the target object within a predetermined time range.

In one embodiment, whether the user has touched the virtual object can be determined by checking whether the distance between the user's hand and the target object meets a predetermined threshold.

In this embodiment, when the target object is at the predetermined position relative to the user's head and has an edible attribute, the taste information corresponding to that attribute is determined as the sensory information of the target object. The sensory information is then converted into electrical signals that stimulate the user through the brain-computer interface device, helping the user experience the edible properties of objects in the virtual environment and making interactions more realistic.

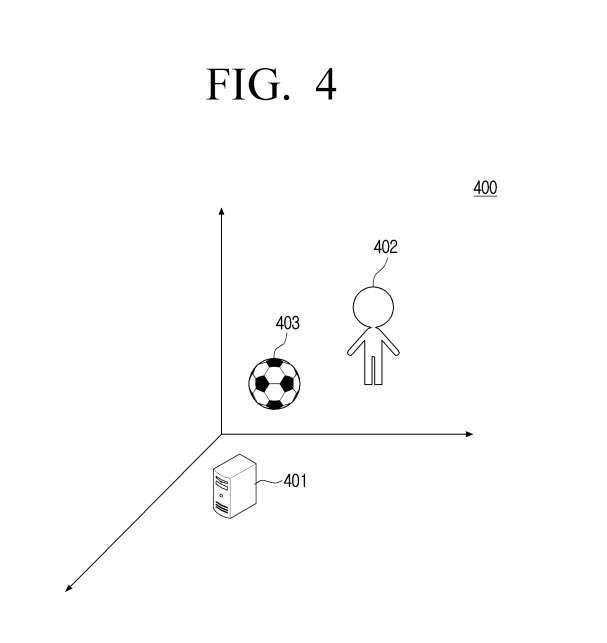

Figure 4 is a schematic diagram of an application scenario of the information generation method.

In the application scenario shown in Figure 4, the execution entity 401 can establish a spatial rectangular coordinate system with the UWB base station as the origin. Using wearable positioning devices on one or more body parts of the user 402, it can obtain the user's position in this coordinate system.

For example, wearable positioning devices can be placed on the user's hands and head. The UWB base station can be implemented by the execution entity 401. According to an example embodiment, the execution entity may obtain the position of a target object 403, such as a virtual football, and determine the user's position relative to the target object from the user's position and the target object's position.

Next, the sensory information corresponding to the target object is determined from the correspondence table, which records predetermined relative position information, attribute information of the target object (such as smoothness and softness), and the corresponding sensory information. This sensory information is then converted into electrical signals, and the brain-computer interface device stimulates the user 402.

In summary, the information generation method may include obtaining the relative position information of the user with respect to the target object in the virtual environment, determining the sensory information corresponding to the target object from the relative position information and the object's attribute information, and converting that sensory information into electrical signals that stimulate the user through a brain-computer interface device. This helps users experience the properties of objects in the virtual environment and makes interactions more realistic.

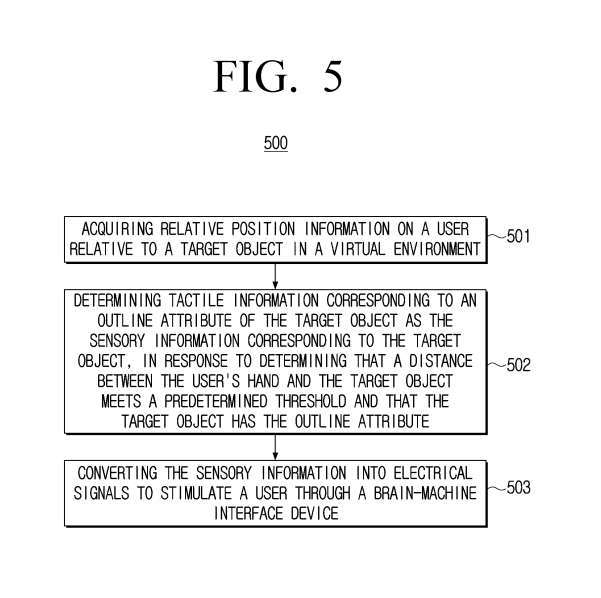

Figure 5 shows a flowchart 500 of the information generation method.

In operation 501, the relative position information of the user with respect to the target object in the virtual environment is obtained.

In operation 502, in response to determining that the distance between the user's hand and the target object satisfies a preset threshold and that the target object has a contour attribute, the tactile information corresponding to the target object's contour attribute is determined as the sensory information corresponding to the target object.

Here, the relative position information may include the position of the user's hand relative to the target object, so the execution entity can detect the distance between the user's hand and the target object and then apply the threshold and contour-attribute check of operation 502.

Contour attributes can include shape, material, texture, smoothness, temperature, mass, and other attribute information.

The predetermined threshold can be set based on experience, actual needs, and the specific application scenario. For example, it may require the distance between the fingers and/or palm and the target object to be at most 1 cm, or at most 0.5 cm; the present disclosure does not limit it.

For example, the system establishes a spatial rectangular coordinate system with the UWB base station as the origin, uses a data glove worn on the user's hand to obtain the hand's position, and at the same time obtains the position of the target object. Here the target object is a water cup, and the preset threshold is a distance of at most 1 cm between the user's fingers and/or palm and the cup.

In response to determining that the distance between the user's fingers and/or palm and the water cup satisfies the threshold, and taking into account the cup's contour attributes, the tactile information corresponding to those contour attributes is determined as the sensory information of the water cup.
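The water-cup example can be sketched as a distance test between the data-glove sample points and a simple geometric model of the cup. Modeling the cup as an upright solid cylinder and storing its contour attribute under a `contour` key are assumptions made for illustration. The 1 cm threshold comes from the example above.

```python
import math


def distance_to_cylinder(point, base_center, radius, height):
    """Distance from a point to a solid upright cylinder (0 if the point is inside)."""
    px, py, pz = point
    cx, cy, cz = base_center
    radial = max(0.0, math.hypot(px - cx, py - cy) - radius)  # outside the side wall
    vertical = max(0.0, cz - pz, pz - (cz + height))          # below the base or above the rim
    return math.hypot(radial, vertical)


def tactile_info(hand_points, cup, attributes, threshold_m=0.01):
    """hand_points: finger/palm positions from a data glove; cup: kwargs for distance_to_cylinder."""
    contour = attributes.get("contour")
    if contour is None:
        return None  # no contour attribute, so no tactile stimulation
    nearest = min(distance_to_cylinder(p, **cup) for p in hand_points)
    if nearest > threshold_m:
        return None  # hand not close enough to count as touching
    return {"modality": "touch", "contour": contour, "distance_m": nearest}
```

A fingertip 5 mm from the side of a cup with a 4 cm radius passes the 1 cm test, whereas a hand 20 cm away does not.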

In operation 503, the sensory information is converted into electrical signals through the brain-computer interface device to stimulate the user.

Compared with the example embodiment of Figure 2, the method of flowchart 500 in Figure 5 determines the tactile information corresponding to the target object's contour attribute as its sensory information, in response to determining that the distance between the user's hand and the target object satisfies the predetermined threshold and that the target object has a contour attribute.

This sensory information is converted into electrical signals that stimulate the user through the brain-computer interface device, helping the user experience the contour properties of objects in the virtual environment and making interactions more realistic.

Extended reading: Samsung Patent | Information generation method and device

The Samsung patent application titled "Information generation method and device" was originally submitted in July 2023 and was recently published by the US Patent and Trademark Office.

Normally, a U.S. patent application is published 18 months after its filing date or earliest priority date, or earlier at the applicant's request. Note that publication does not mean the patent has been granted. After publication, the U.S. Patent and Trademark Office still needs to complete substantive examination, which may take 1 to 3 years.
