Machine learning models are becoming more sophisticated and accurate, but their opacity remains a significant challenge. Understanding why a model makes a specific prediction is critical to building trust and ensuring it behaves as expected. In this article, we will introduce LIME and use it to explain various common models.

LIME (Local Interpretable Model-agnostic Explanations) is a powerful Python library that helps explain the behavior of machine learning classifiers (or models). Its main purpose is to provide interpretable, human-readable explanations for individual predictions, especially for complex machine learning models. By giving a detailed understanding of how these models operate, LIME encourages trust in machine learning systems.

As machine learning models grow more complex, understanding their inner workings becomes harder. LIME addresses this by creating local explanations for specific instances, making it easier for users to understand and trust machine learning models.

Key Features of LIME:

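LIME works by approximating a complex ML model with a simpler, interpretable model fitted locally around a specific instance. The LIME workflow can be divided into the following steps:

1. Select the instance whose prediction you want to explain.
2. Generate perturbed samples around that instance.
3. Obtain the complex model's predictions for the perturbed samples.
4. Weight each sample by its proximity to the original instance.
5. Fit a simple interpretable model (by default, a weighted linear model) to the weighted samples.
6. Use the interpretable model's coefficients as the explanation of the prediction.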
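To make the workflow concrete, here is a minimal from-scratch sketch in Python using NumPy and scikit-learn: perturb the instance, query the model, weight the samples by proximity, and fit a weighted linear surrogate. It is a simplified illustration, not the `lime` library's implementation. The helper name `lime_sketch` is hypothetical, it samples Gaussian noise around the instance and skips LIME's feature discretization, and the exponential kernel and Ridge surrogate are assumptions chosen for simplicity, so its numbers will not match the library's output.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import Ridge


def lime_sketch(instance, predict_proba, X_train, label,
                num_samples=5000, kernel_width=None, seed=None):
    """Hypothetical, simplified LIME for one tabular instance (not the lime library)."""
    rng = np.random.default_rng(seed)
    n_features = instance.shape[0]
    scale = X_train.std(axis=0)
    scale[scale == 0] = 1.0  # avoid dividing by zero for constant features

    # Perturb the chosen instance with per-feature Gaussian noise
    samples = instance + rng.normal(size=(num_samples, n_features)) * scale
    samples[0] = instance  # keep the original instance in the neighborhood

    # Ask the black-box model for the probability of `label` on every sample
    targets = predict_proba(samples)[:, label]

    # Weight samples by proximity to the instance with an exponential kernel
    standardized = (samples - instance) / scale
    distances = np.linalg.norm(standardized, axis=1)
    if kernel_width is None:
        kernel_width = 0.75 * np.sqrt(n_features)
    weights = np.sqrt(np.exp(-(distances ** 2) / kernel_width ** 2))

    # Fit a weighted linear surrogate in standardized coordinates
    surrogate = Ridge(alpha=1.0)
    surrogate.fit(standardized, targets, sample_weight=weights)

    # The surrogate's coefficients are the local explanation
    return surrogate.coef_


data = load_iris()
model = RandomForestClassifier(random_state=0).fit(data.data, data.target)
coefs = lime_sketch(data.data[0], model.predict_proba, data.data, label=0, seed=42)
for name, weight in sorted(zip(data.feature_names, coefs), key=lambda p: -abs(p[1])):
    print(f"{name}: {weight:+.3f}")
```

Each coefficient estimates how strongly a standardized feature pushes the chosen class probability up or down in the neighborhood of this one instance; it says nothing about the model's behavior far from it.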

Before you start using LIME, you need to install it. LIME can be installed by using the pip command:

```shell
pip install lime
```

To use LIME with a classification model, you create an explainer object and then use it to generate an explanation for a specific instance. Here is a simple example using the LIME library and a classification model:

```python
# Classification - LIME
import lime
import lime.lime_tabular
from sklearn import datasets
from sklearn.ensemble import RandomForestClassifier

# Load the dataset and train a classifier
data = datasets.load_iris()
classifier = RandomForestClassifier()
classifier.fit(data.data, data.target)

# Create a LIME explainer object
explainer = lime.lime_tabular.LimeTabularExplainer(
    data.data,
    mode="classification",
    training_labels=data.target,
    feature_names=data.feature_names,
    class_names=data.target_names,
    discretize_continuous=True,
)

# Select an instance to be explained (you can choose any index)
instance = data.data[0]

# Generate an explanation for the instance (Iris has only 4 features)
explanation = explainer.explain_instance(instance, classifier.predict_proba, num_features=4)

# Display the explanation (renders inside a Jupyter notebook)
explanation.show_in_notebook()
```

Explaining a regression model with LIME works the same way: create an explainer object (with mode="regression") and generate an explanation for a specific instance. The following example uses the LIME library and a regression model:

```python
# Regression - LIME
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression
from lime.lime_tabular import LimeTabularExplainer

# Generate a custom regression dataset
np.random.seed(42)
X = np.random.rand(100, 5)  # 100 samples, 5 features
y = 2 * X[:, 0] + 3 * X[:, 1] + 1 * X[:, 2] + np.random.randn(100)  # linear relationship with noise

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train a simple linear regression model
model = LinearRegression()
model.fit(X_train, y_train)

# Initialize a LimeTabularExplainer
explainer = LimeTabularExplainer(training_data=X_train, mode="regression")

# Select a sample instance for explanation
sample_instance = X_test[0]

# Explain the prediction for the sample instance
explanation = explainer.explain_instance(sample_instance, model.predict)

# Display the explanation (renders inside a Jupyter notebook)
explanation.show_in_notebook()
```

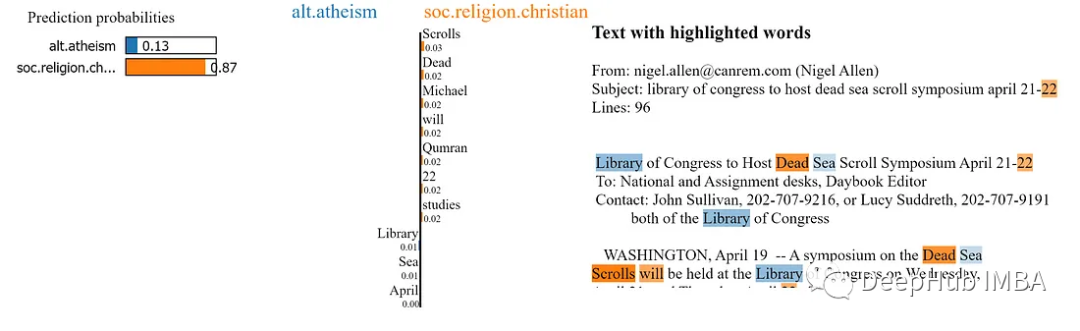

LIME can also explain predictions made by text models. To do so, create a LIME text explainer (LimeTextExplainer) and generate an explanation for a specific instance. The following example uses the LIME library and a text classification model:

```python
# Text model - LIME
import lime
import lime.lime_text
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.datasets import fetch_20newsgroups

# Load a sample dataset (20 Newsgroups) for text classification
categories = ['alt.atheism', 'soc.religion.christian']
newsgroups_train = fetch_20newsgroups(subset='train', categories=categories)

# Create a simple text classification model (Multinomial Naive Bayes)
tfidf_vectorizer = TfidfVectorizer()
X_train = tfidf_vectorizer.fit_transform(newsgroups_train.data)
y_train = newsgroups_train.target
classifier = MultinomialNB()
classifier.fit(X_train, y_train)

# Define a LIME explainer for text data
explainer = lime.lime_text.LimeTextExplainer(class_names=newsgroups_train.target_names)

# Choose a text instance to explain
text_instance = newsgroups_train.data[0]

# Prediction function: a list of raw strings in, class probabilities out
def predict_fn(texts):
    return classifier.predict_proba(tfidf_vectorizer.transform(texts))

# Explain the model's prediction for the chosen text instance
explanation = explainer.explain_instance(text_instance, predict_fn)

# Display the explanation (renders inside a Jupyter notebook)
explanation.show_in_notebook()
```

LIME can also explain the predictions of image models. Create a LIME image explainer (LimeImageExplainer) and generate an explanation for a specific instance:

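```python
import numpy as np
import lime.lime_image
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier

# Load the dataset and train an image classifier on flattened 8x8 images
data = load_digits()
classifier = RandomForestClassifier()
classifier.fit(data.images.reshape((len(data.images), -1)), data.target)

# LIME converts the grayscale image to RGB and passes batches of shape
# (n, 8, 8, 3); map them back to the flat grayscale input the model expects
def predict_fn(images):
    return classifier.predict_proba(images[..., 0].reshape((len(images), -1)))

# Treat each pixel as a segment (the default segmentation suits larger photos)
def segment_pixels(image):
    return np.arange(image.shape[0] * image.shape[1]).reshape(image.shape[:2])

# Create a LIME image explainer object
explainer = lime.lime_image.LimeImageExplainer()

# Select an instance to be explained
instance = data.images[0]

# Generate an explanation; hidden pixels are set to 0 (the background value)
explanation = explainer.explain_instance(
    instance, predict_fn, top_labels=5, hide_color=0, segmentation_fn=segment_pixels
)
```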

Once LIME has generated an explanation, you can inspect it visually to understand how much each feature contributed to the prediction. For tabular and text data, the explanation object offers the show_in_notebook (for Jupyter), as_pyplot_figure, and as_list methods. Image explanations work differently: get_image_and_mask returns the image together with a mask of the superpixels that contributed most to a given label, which you can then plot, for example with matplotlib.

By understanding the contribution of each feature, we can gain deeper insight into the model’s decision-making process and identify potential biases or problem areas.

LIME offers several options for improving the quality of its explanations, including:

Adjust the number of perturbed samples: Increasing the number of perturbed samples (the num_samples argument) can improve the stability and accuracy of the explanation.

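Choose the interpretable model: The choice of local surrogate model affects the quality of the explanation. In the lime library, the surrogate is passed through the model_regressor argument of explain_instance (Ridge regression by default) and must expose coef_, so in practice this means choosing among linear models such as linear or ridge regression.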

Feature Selection: Customizing the number of features used in the explanation can help focus on the most important contributions to predictions.

Although LIME is a powerful tool for interpreting machine learning models, it also has some limitations:

Local interpretation: LIME focuses on local interpretation, which may not capture the overall behavior of the model.

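Costly: Each explanation requires the model to score thousands of perturbed samples (num_samples defaults to 5000 for tabular and text data), so explaining many instances, or explaining slow models, can be time-consuming.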

If LIME doesn’t suit your needs, there are other ways to interpret machine learning models, such as SHAP (SHapley Additive exPlanations) and Anchors.

LIME is a valuable tool for explaining what a machine learning classifier (or model) is doing. By providing a practical way to understand complex machine learning models, LIME enables users to trust and improve their systems.

By providing interpretable explanations for individual predictions, LIME can help build trust in machine learning models. This kind of trust is crucial in many industries, especially when ML models are used to make important decisions. By better understanding how their models work, users can rely on machine learning systems with confidence and make data-driven decisions.
