With just a small tweak, the context window a large model supports can be extended from 16,000 tokens to 1 million?!

And this is on LLaMA 2, a model with only 7 billion parameters.

Keep in mind that even the popular Claude 2 and GPT-4 support context lengths of only 100,000 and 32,000 tokens, respectively. Beyond that range, large models start to talk nonsense and fail to remember earlier content.

Now, a new study from Fudan University and Shanghai AI Laboratory has not only found a way to extend the context window for a whole family of large models, but also uncovered the rule behind it.

According to this rule, adjusting just one hyperparameter is enough to steadily improve a large model's extrapolation performance while preserving output quality.

Extrapolation refers to how output quality changes when the input length exceeds the text length seen in pre-training. If extrapolation ability is poor, the model starts to "talk nonsense" as soon as the input exceeds that length.

So what exactly improves the extrapolation ability of large models, and how does it work?

The method is tied to the position encoding module in the Transformer architecture.

On its own, the attention mechanism cannot distinguish tokens at different positions. To it, "I eat apples" and "apples eat me" look the same. Position encoding therefore has to be added so that the model can pick up word order and truly understand what a sentence means.

Current Transformer position encoding methods include absolute position encoding (merging position information into the input), relative position encoding (writing position information into the attention score calculation), and rotary position encoding. The most popular of these is rotary position encoding, known as RoPE.
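To make the word-order blindness concrete, here is a minimal sketch in pure Python. The two-dimensional embeddings and the helper names `attention_output` and `encode` are made up for illustration and do not come from the paper.

```python
import math

def attention_output(query, keys, values):
    """Single-query scaled dot-product attention over lists of vectors."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    top = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    return [sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))]

# Toy embeddings with no position information (illustrative values only).
EMB = {"I": [1.0, 0.0], "eat": [0.0, 1.0], "apples": [1.0, 1.0]}

def encode(sentence):
    """Attention output for the token 'eat' attending over the whole sentence."""
    vectors = [EMB[token] for token in sentence.split()]
    return attention_output(EMB["eat"], vectors, vectors)

out_a = encode("I eat apples")
out_b = encode("apples eat I")
print(out_a)
print(out_b)
```

Reordering the same tokens only reorders the terms of the weighted sum, so the two outputs agree up to floating-point rounding. Without position encoding, the model has nothing to tell the two sentences apart.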

RoPE achieves the effect of relative position encoding by means of absolute position encoding, yet compared with relative position encoding it does more to unlock the extrapolation potential of large models. How to further bring out the extrapolation ability of RoPE-based models has become a focus of much recent research. These studies fall into two main schools: limiting attention and adjusting the rotation angle.
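The "relative effect through absolute encoding" idea can be sketched for a single pair of feature dimensions, using a complex number to stand in for the 2-D pair. The values of `q`, `k`, and `theta` below are arbitrary, and the function names are hypothetical; real RoPE applies the same rotation to every pair of dimensions with a different angle per pair.

```python
import cmath

def rope_rotate(x, position, theta):
    """Rotate a 2-D feature pair (stored as a complex number) by position * theta."""
    return x * cmath.exp(1j * position * theta)

def score(q, k, m, n, theta):
    """Dot product of the query rotated at position m and the key rotated at position n."""
    q_rot = rope_rotate(q, m, theta)
    k_rot = rope_rotate(k, n, theta)
    # Re(a * conj(b)) equals the ordinary dot product of the two 2-D vectors.
    return (q_rot * k_rot.conjugate()).real

q = complex(0.3, -1.2)  # arbitrary illustrative query pair
k = complex(0.8, 0.5)   # arbitrary illustrative key pair
theta = 0.01            # arbitrary rotation angle for this pair

near = score(q, k, m=5, n=2, theta=theta)       # offset m - n = 3
far = score(q, k, m=105, n=102, theta=theta)    # same offset, shifted by 100
other = score(q, k, m=5, n=0, theta=theta)      # different offset
print(near, far, other)
```

Each vector is rotated using only its own absolute position, but the attention score depends only on the offset `m - n`, which is why shifting both positions by 100 leaves the score unchanged.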

Representative work on limiting attention includes ALiBi, xPos, and BCA. StreamingLLM, recently proposed by MIT, lets large models handle unbounded input length (without enlarging the context window) and belongs to this line of research.

More work has gone into adjusting the rotation angle. Typical examples include linear interpolation, Giraffe, Code LLaMA, and LLaMA2 Long.

32,000 tokens.

This hyperparameter is exactly the "switch" found by Code LLaMA, LLaMA2 Long, and others: the rotation angle base (base).

Fine-tuning with an adjusted base is enough to improve the extrapolation performance of large models. But both Code LLaMA and LLaMA2 Long were fine-tuned only at one specific base and one continued-training length. Is there a pattern that guarantees a steady improvement in extrapolation for all large models that use RoPE position encoding?

Master this rule, and a 1-million-token context comes easily

Researchers from Fudan University and Shanghai AI Laboratory ran experiments on this question. They first analyzed several parameters that affect RoPE extrapolation and proposed a concept called the critical dimension. Based on this concept, they then derived a set of scaling laws of RoPE-based extrapolation.

Applying this rule ensures that any large model based on RoPE position encoding can improve its extrapolation ability.

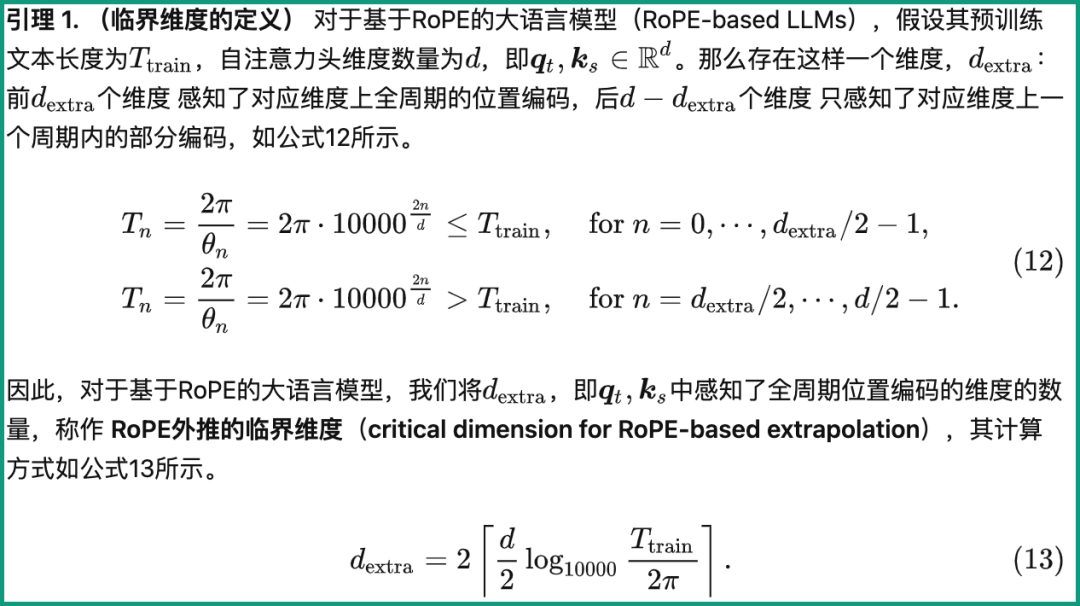

Let's first look at what the critical dimension is. By definition, it depends on the pre-training text length T_train, the per-head dimension d of self-attention, and other parameters. It is calculated as follows:

Here, 10000 is the "initial value" of the hyperparameter, the rotation angle base.
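As a rough sketch, assuming the paper defines the critical dimension as d_extra = 2 * ceil((d / 2) * log_10000(T_train / 2π)), the calculation takes only a few lines. The function name `critical_dimension` is hypothetical, and the inputs (head dimension 128, pre-training length 4096) are the usual LLaMA 2 settings; check the formula against the paper before relying on it.

```python
import math

def critical_dimension(head_dim, train_len, base=10000.0):
    """Assumed form: d_extra = 2 * ceil(d/2 * log_base(T_train / (2*pi)))."""
    ratio = math.log(train_len / (2 * math.pi), base)
    return 2 * math.ceil(head_dim / 2 * ratio)

# LLaMA 2 settings: 128 dimensions per attention head, 4096-token pre-training length.
d_extra = critical_dimension(head_dim=128, train_len=4096)
print(d_extra)
```

Under that assumed formula, these inputs give a critical dimension of 92 out of 128.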

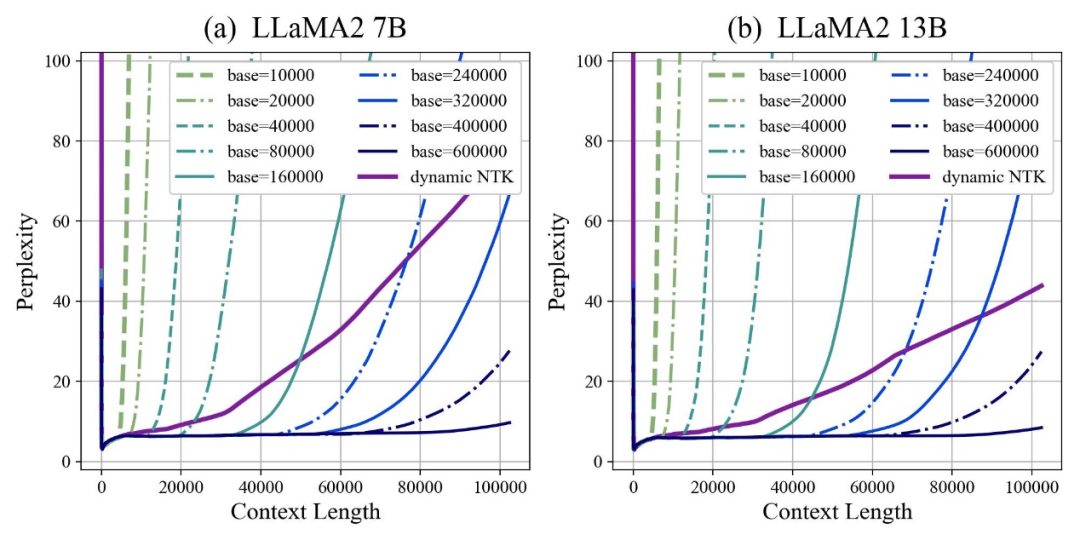

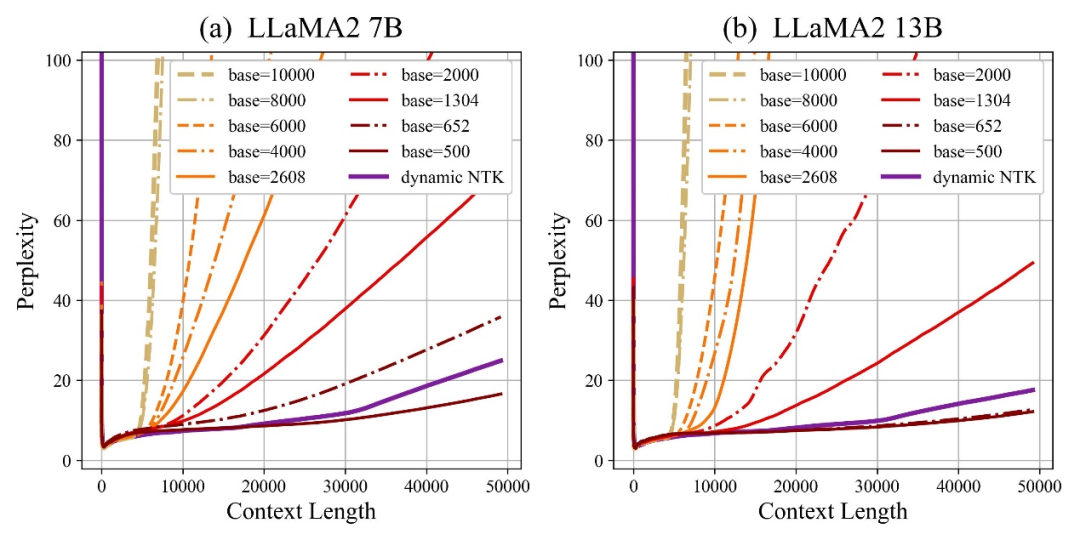

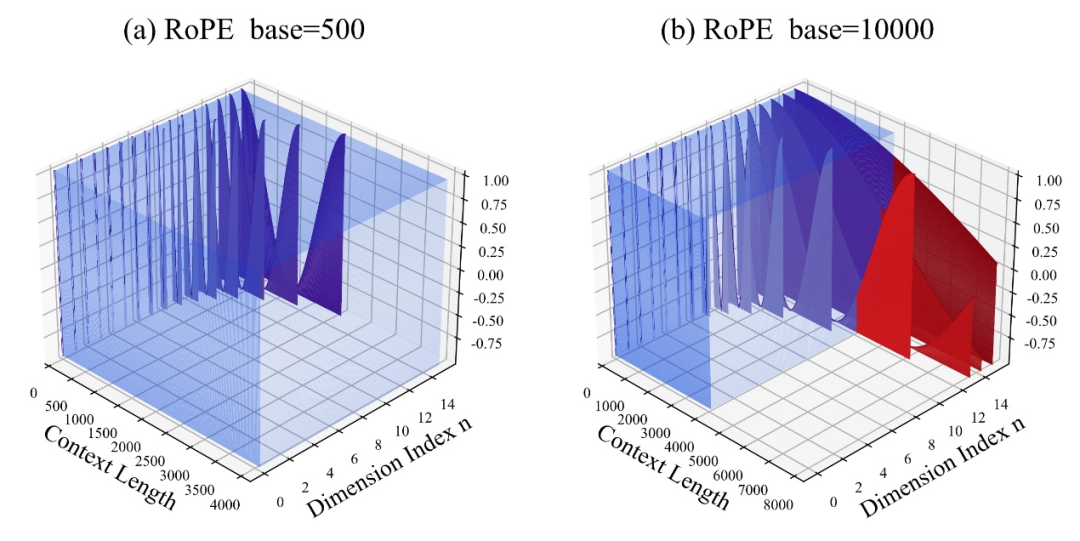

The authors found that whether the base is enlarged or reduced, the extrapolation ability of a RoPE-based large model ends up enhanced. By contrast, extrapolation is at its worst when the rotation angle base is 10000.

The paper argues that a smaller rotation angle base lets more dimensions perceive position information, while a larger base can represent longer positional ranges.

Given that, when the continued-training corpora have different lengths, how far should the rotation angle base be reduced or enlarged to maximize the model's extrapolation ability?
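One way to picture this trade-off is to look at the period of each rotary dimension, assuming the standard RoPE angle θ_n = base^(-2n/d) for the n-th pair. The helper names below are hypothetical, and "perceiving position information" is read here as "completing at least one full period within the training length", which is an interpretation rather than the paper's wording.

```python
import math

def wavelengths(head_dim, base):
    """Period in tokens of each rotary pair: 2*pi * base**(2n/d)."""
    return [2 * math.pi * base ** (2 * n / head_dim) for n in range(head_dim // 2)]

def dims_with_full_period(head_dim, base, train_len):
    """Number of dimensions whose period fits inside the training length."""
    pairs = sum(1 for w in wavelengths(head_dim, base) if w <= train_len)
    return 2 * pairs  # each rotary pair covers two dimensions

HEAD_DIM, TRAIN_LEN = 128, 4096  # LLaMA 2 settings
for base in (500, 10000, 1000000):
    covered = dims_with_full_period(HEAD_DIM, base, TRAIN_LEN)
    longest = max(wavelengths(HEAD_DIM, base))
    print(f"base={base:>8}: {covered:3d} dims see a full period, "
          f"longest period is about {longest:,.0f} tokens")
```

With these settings, shrinking the base to 500 lets all 128 dimensions complete a full period during training, against 92 at base 10000. Enlarging the base to 1,000,000 does the opposite but stretches the longest period, so far-apart positions stay distinguishable.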

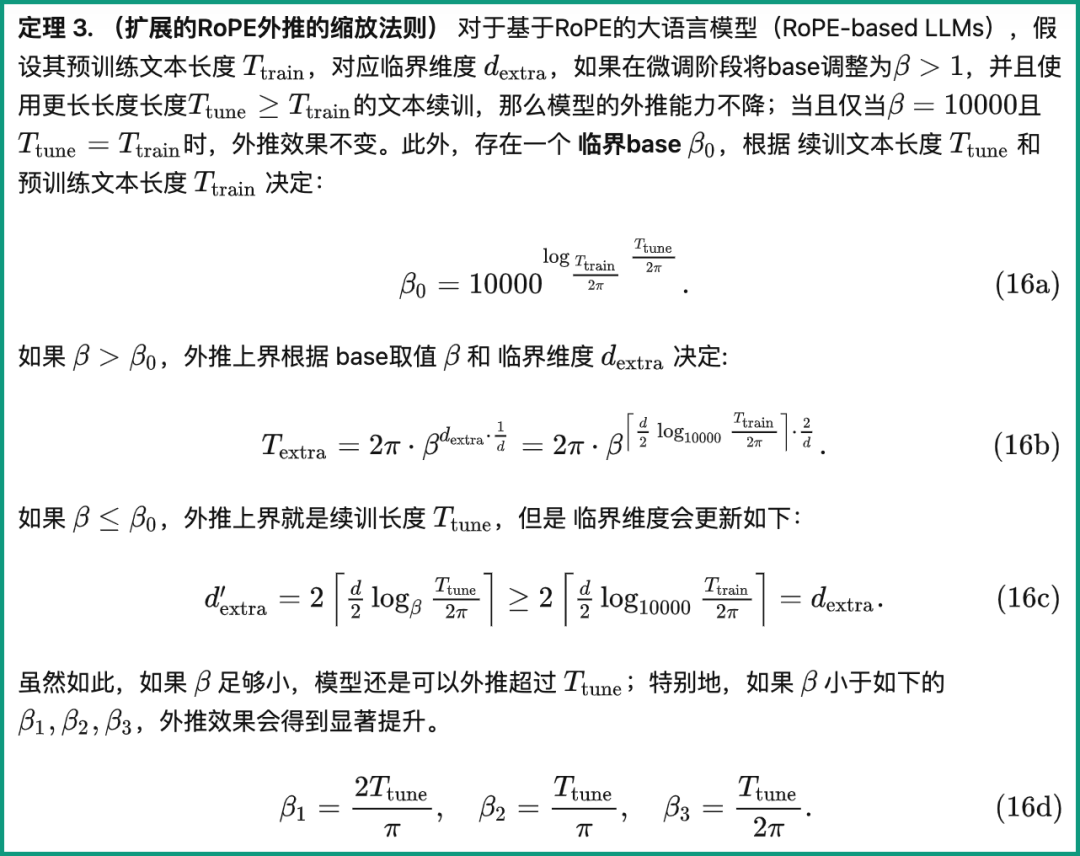

The paper gives an extended scaling law for RoPE-based extrapolation, which relates the critical dimension, the continued-training text length, and the pre-training text length of the model:

With this rule, the extrapolation performance of a large model can be computed directly from its pre-training and continued-training text lengths. In other words, the context length the model supports can be predicted.

Conversely, the rule also makes it quick to work out how best to adjust the rotation angle base to improve extrapolation performance.
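Both directions can be sketched in code for the enlarged-base case only. The sketch assumes the paper's rule takes this form: a critical base β0 = 10000^(log_{T_train/2π}(T_tune/2π)); above it, the supported length is T_extra = 2π · β^(d_extra/d); at or below it, the supported length is simply the continued-training length T_tune. All function names are hypothetical, and the formulas should be checked against the paper.

```python
import math

ORIGINAL_BASE = 10000.0

def critical_dimension(head_dim, train_len):
    """Assumed form: d_extra = 2 * ceil(d/2 * log_10000(T_train / (2*pi)))."""
    return 2 * math.ceil(head_dim / 2 * math.log(train_len / (2 * math.pi), ORIGINAL_BASE))

def critical_base(train_len, tune_len):
    """Assumed form: beta_0 = 10000 ** log_{T_train/2pi}(T_tune/2pi)."""
    exponent = math.log(tune_len / (2 * math.pi), train_len / (2 * math.pi))
    return ORIGINAL_BASE ** exponent

def predicted_context(base, head_dim, train_len, tune_len):
    """Predicted supported context length for an enlarged base (base > 10000)."""
    if base <= ORIGINAL_BASE:
        raise ValueError("this sketch only covers bases larger than 10000")
    if base <= critical_base(train_len, tune_len):
        return float(tune_len)
    d_extra = critical_dimension(head_dim, train_len)
    return 2 * math.pi * base ** (d_extra / head_dim)

def base_for_target(target_len, head_dim, train_len):
    """Invert the assumed rule: the base whose predicted length equals target_len."""
    d_extra = critical_dimension(head_dim, train_len)
    return (target_len / (2 * math.pi)) ** (head_dim / d_extra)

# LLaMA 2 settings with 16K continued training (illustrative inputs).
beta_0 = critical_base(train_len=4096, tune_len=16384)
reach = predicted_context(1_000_000, head_dim=128, train_len=4096, tune_len=16384)
needed = base_for_target(1_000_000, head_dim=128, train_len=4096)
print(f"critical base: {beta_0:,.0f}")
print(f"predicted context at base 1e6: {reach:,.0f} tokens")
print(f"base needed for a 1M-token context: {needed:,.0f}")
```

The reduced-base case (base below 10000) follows a different rule in the paper and is deliberately left out of this sketch.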

The authors tested this across a series of tasks and found that inputs of 100,000, 500,000, or even 1 million tokens can now be extrapolated without any additional attention restrictions.

Meanwhile, existing work on enhancing extrapolation, including Code LLaMA and LLaMA2 Long, confirms that the rule is reasonable and effective.

So by "adjusting one parameter" according to this rule, you can easily extend the context window of a RoPE-based large model and strengthen its extrapolation ability.

Liu Xiaoran, the paper's first author, said the team is still working to improve downstream task performance by improving the continued-training corpus. Once that is done, the code and models will be open-sourced. Stay tuned.

Paper address:

https://arxiv.org/abs/2310.05209

GitHub repository:

https://github.com/OpenLMLab/scaling-rope

Paper analysis blog:

https://zhuanlan.zhihu.com/p/660073229
