By comparing fine-tuned GPT-3.5 and Llama 2 on different tasks, we can see when to choose GPT-3.5 and when to choose Llama 2 or other open-source models.

Fine-tuning GPT-3.5 is clearly expensive. This article tests experimentally whether a manually fine-tuned open-source model can approach the performance of GPT-3.5 at a fraction of the cost. Interestingly, the experiments suggest that it can.

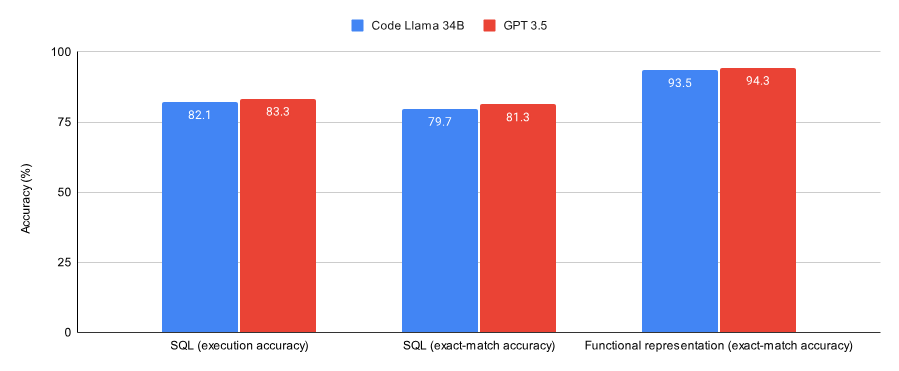

Comparing the results on an SQL task and a functional representation task, the article found that GPT-3.5 performs slightly better than Code Llama 34B fine-tuned with LoRA on both datasets (a subset of the Spider dataset and the ViGGO functional representation dataset).

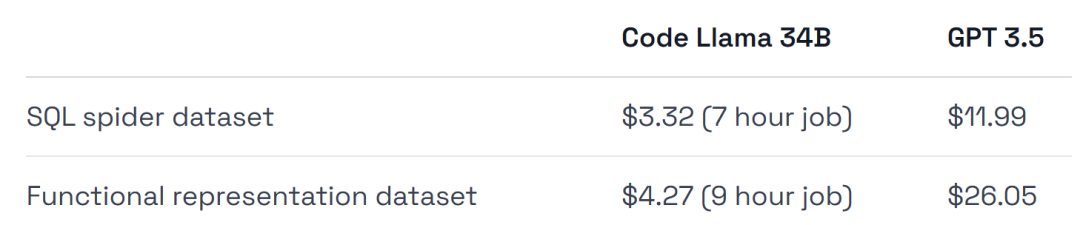

However, fine-tuning GPT-3.5 costs 4-6 times as much to train, and it is also more expensive to deploy.

One conclusion of this experiment is that fine-tuning GPT-3.5 is well suited to initial validation work, but after that, a model like Llama 2 may be the better choice. In brief:

If you want to validate that fine-tuning is the right way to solve a specific task or dataset, or you want a fully managed environment, fine-tune GPT-3.5.

If you want to save money, get the most performance out of your dataset, have more flexibility in training and deployment infrastructure, and keep certain data private, fine-tune an open-source model such as Llama 2.

Next, let's look at how the experiments were carried out.

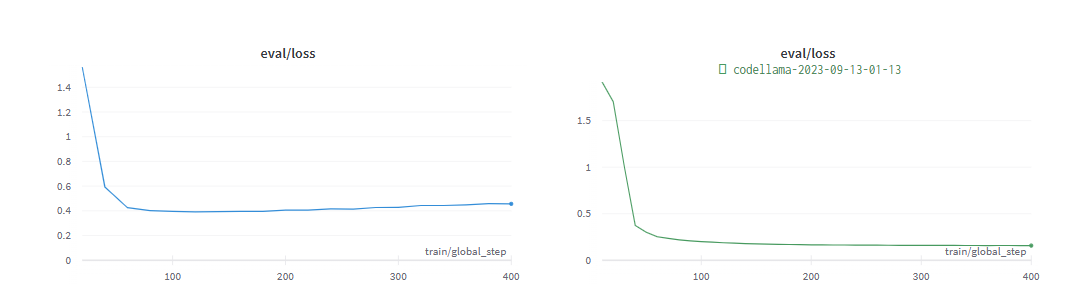

The figure above shows the performance of Code Llama 34B and GPT-3.5, each trained to convergence, on the SQL task and the functional representation task. GPT-3.5 achieves slightly better accuracy on both tasks.
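This summary does not say exactly how accuracy was computed. As a hedged illustration only, here is a simple exact-match metric that assumes a prediction counts as correct when it equals the reference string after whitespace normalization; the function names are hypothetical and not taken from the article's code.

```python
def normalize(text: str) -> str:
    """Collapse runs of whitespace and strip leading/trailing spaces."""
    return " ".join(text.split())


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions identical to their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


# Hypothetical model outputs and gold answers
preds = ["verify_attribute(name[Little Big Adventure], rating[average])", "inform(name[X])"]
golds = ["verify_attribute(name[Little Big Adventure],  rating[average])", "inform(name[Y])"]
print(exact_match_accuracy(preds, golds))  # 0.5
```

Exact match is strict: a semantically equivalent answer written differently is scored as wrong, which is why task-specific metrics (such as executing SQL queries) are often preferred.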

In terms of hardware, the experiment used an A40 GPU, which rents for approximately US$0.475 per hour.
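To make the cost figures concrete, the sketch below turns an hourly GPU rate into a training cost and applies the 4-6x ratio reported earlier. It assumes the US$0.475 figure is a per-hour rental price; the 10-hour run length is purely hypothetical, not a number from the article.

```python
A40_HOURLY_RATE_USD = 0.475  # assumed per-hour A40 rental price quoted above


def training_cost_usd(hours: float, num_gpus: int = 1,
                      hourly_rate: float = A40_HOURLY_RATE_USD) -> float:
    """Rental cost of a fine-tuning run: wall-clock hours x GPUs x hourly rate."""
    if hours < 0 or num_gpus < 1:
        raise ValueError("hours must be non-negative and num_gpus at least 1")
    return hours * num_gpus * hourly_rate


# Hypothetical run length, not a figure from the article
llama_cost = training_cost_usd(hours=10)
# Apply the reported 4-6x training-cost ratio for GPT-3.5
low, high = 4 * llama_cost, 6 * llama_cost
print(f"Llama: ${llama_cost:.2f}; GPT-3.5 equivalent: ${low:.2f}-${high:.2f}")
# Llama: $4.75; GPT-3.5 equivalent: $19.00-$28.50
```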

In addition, the experiment used two datasets that are well suited to fine-tuning: a subset of the Spider dataset and the ViGGO functional representation dataset.

To make a fair comparison with the GPT-3.5 model, the Llama experiments used minimal hyperparameter tuning.

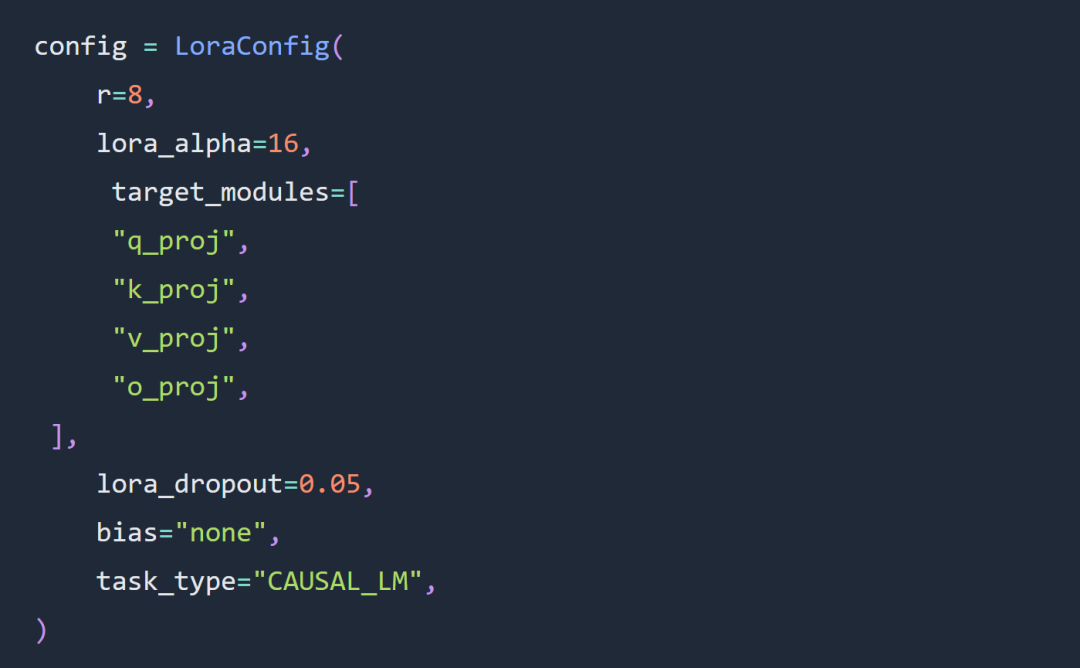

The two key choices in this article's experiments were to use Code Llama 34B and to fine-tune with LoRA instead of full-parameter fine-tuning.
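One reason LoRA is cheaper than full-parameter fine-tuning is the number of trainable weights. A LoRA adapter on a d_out x d_in weight matrix trains two small matrices, B (d_out x r) and A (r x d_in), instead of the full matrix. The sketch below compares the counts for a single square projection; the 8192 hidden size and rank 8 are illustrative assumptions, not values reported by the article.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for one LoRA adapter: B is d_out x r, A is r x d_in."""
    return rank * (d_out + d_in)


d = 8192  # assumed hidden size, for illustration only
full = full_finetune_params(d, d)
lora = lora_params(d, d, rank=8)  # rank 8 is a hypothetical choice
print(full, lora, f"{lora / full:.3%}")  # 67108864 131072 0.195%
```

The saving grows with the matrix size, because the LoRA count scales linearly in d while the full count scales quadratically.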

The experiment largely followed common rules of thumb for configuring LoRA hyperparameters. The LoRA configuration was as follows:

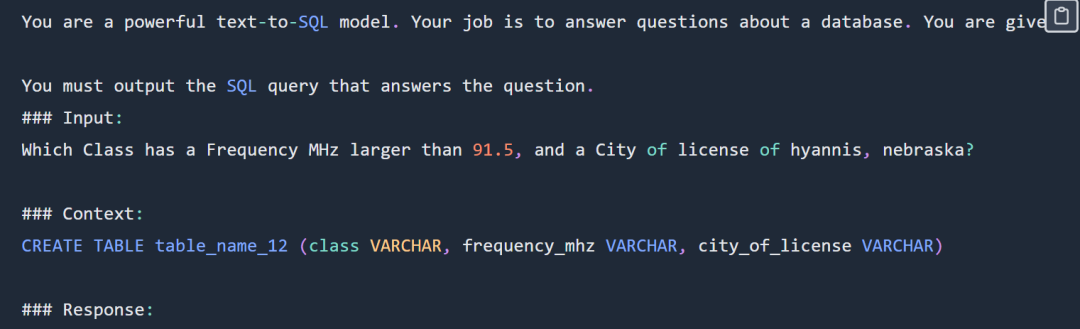
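The actual configuration values are in the image above. Independently of those values, the following is a generic, dependency-free sketch of what a LoRA adapter computes: the frozen weight W is combined with a low-rank update scaled by alpha / r, giving h = W x + (alpha / r) B A x. The class name and the rank and alpha used here are hypothetical and are not the article's settings.

```python
import random


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight: list[list[float]], rank: int, alpha: float, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight            # pretrained weights, kept frozen
        self.scaling = alpha / rank     # LoRA scaling factor alpha / r
        # A starts small and random; B starts at zero, so the adapter is initially a no-op
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * u for b, u in zip(base, update)]


W = [[1.0, 2.0], [3.0, 4.0]]
layer = LoRALinear(W, rank=1, alpha=16)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0], identical to W x before training
```

Only A and B would be updated during fine-tuning; because B starts at zero, the adapted model initially behaves exactly like the base model.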

SQL prompt examples are as follows:

Only part of the SQL prompt is shown here; see the original blog for the full prompt.

department : Department_ID [ INT ] primary_key Name [ TEXT ] Creation [ TEXT ] Ranking [ INT ] Budget_in_Billions [ INT ] Num_Employees [ INT ] head : head_ID [ INT ] primary_key name [ TEXT ] born_state [ TEXT ] age [ INT ] management : department_ID [ INT ] primary_key management.department_ID = department.Department_ID head_ID [ INT ] management.head_ID = head.head_ID temporary_acting [ TEXT ]

CREATE TABLE table_name_12 (class VARCHAR, frequency_mhz VARCHAR, city_of_license VARCHAR)
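A prompt like this pairs a natural-language question with schema context such as the `CREATE TABLE` statement above. The sketch below shows one way to assemble such a prompt; the instruction wording, section markers, and the sample question are hypothetical and do not reproduce the original blog's full template.

```python
def build_sql_prompt(question: str, context: str) -> str:
    """Assemble a text-to-SQL prompt from a question and schema context (hypothetical template)."""
    return (
        "You are a text-to-SQL model. Given a question and the relevant table "
        "definitions, output the SQL query that answers the question.\n\n"
        f"### Input:\n{question}\n\n"
        f"### Context:\n{context}\n\n"
        "### Response:\n"
    )


schema = "CREATE TABLE table_name_12 (class VARCHAR, frequency_mhz VARCHAR, city_of_license VARCHAR)"
# Hypothetical question for illustration
prompt = build_sql_prompt("Which class has a frequency of 91.9 FM?", schema)
print(prompt)
```

During fine-tuning, the gold SQL query would be appended after the final marker as the target; at inference time the model completes the text from that point.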

The code and data for the SQL task: https://github.com/samlhuillier/spider-sql-finetune
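For SQL, a common way to judge a generated query is to execute it and compare its result with the gold query's result, rather than comparing query text. The sketch below does this with Python's built-in `sqlite3` on an in-memory table modeled on the `head` table from the schema above; the rows and queries are hypothetical, and this is not necessarily the metric used in the repository.

```python
import sqlite3
from collections import Counter


def execution_match(db: sqlite3.Connection, predicted_sql: str, gold_sql: str) -> bool:
    """True if both queries return the same multiset of rows; a query that fails counts as wrong."""
    try:
        predicted_rows = db.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False
    gold_rows = db.execute(gold_sql).fetchall()
    # Compare as multisets so row order does not matter
    return Counter(predicted_rows) == Counter(gold_rows)


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE head (head_ID INT PRIMARY KEY, name TEXT, born_state TEXT, age INT)")
db.executemany(
    "INSERT INTO head VALUES (?, ?, ?, ?)",
    [(1, "Ann", "Texas", 60), (2, "Bo", "Ohio", 45), (3, "Cy", "Texas", 52)],
)
gold = "SELECT name FROM head WHERE born_state = 'Texas'"
pred = "SELECT name FROM head WHERE age > 50"
print(execution_match(db, pred, gold))  # True
```

The example also shows a limitation: the predicted query is semantically different but happens to return the same rows on this data, so execution-based scoring is only as reliable as the test database.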

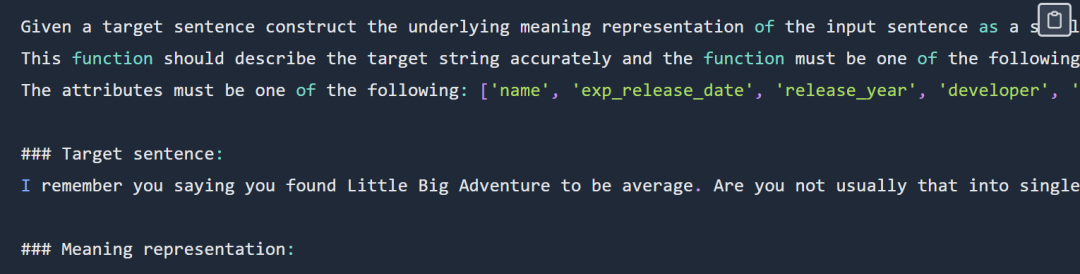

For the functional representation prompt example, the output is as follows:

verify_attribute(name[Little Big Adventure], rating[average], has_multiplayer[no], platforms[PlayStation])
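Outputs in this format are a function name followed by `slot[value]` pairs. A minimal parsing sketch, assuming every output follows that shape, makes it possible to compare predictions slot by slot instead of as raw strings; the function names are hypothetical.

```python
import re

# function_name( ... ) wrapping the whole output
MR_PATTERN = re.compile(r"^\s*(\w+)\((.*)\)\s*$")
# slot[value]; values may contain commas but not closing brackets
SLOT_PATTERN = re.compile(r"(\w+)\[([^\]]*)\]")


def parse_meaning_representation(mr: str) -> tuple[str, dict[str, str]]:
    """Split a function-style meaning representation into its name and slot/value dict."""
    match = MR_PATTERN.match(mr)
    if match is None:
        raise ValueError(f"not a function-style meaning representation: {mr!r}")
    function_name, body = match.groups()
    return function_name, dict(SLOT_PATTERN.findall(body))


def same_meaning(predicted: str, reference: str) -> bool:
    """Order-insensitive comparison: same function name and same slot/value pairs."""
    return parse_meaning_representation(predicted) == parse_meaning_representation(reference)


name, slots = parse_meaning_representation(
    "verify_attribute(name[Little Big Adventure], rating[average], "
    "has_multiplayer[no], platforms[PlayStation])"
)
print(name)   # verify_attribute
print(slots)  # {'name': 'Little Big Adventure', 'rating': 'average', 'has_multiplayer': 'no', 'platforms': 'PlayStation'}
```

Because dictionary equality ignores ordering, `same_meaning` accepts outputs that list the same slots in a different order, which a plain string comparison would reject.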

Original link:

https://ragntune.com/blog/gpt3.5-vs-llama2-finetuning
