Financial Associated Press, September 19 (Editor: Xiaoxiang) Since generative artificial intelligence took off this year, data security has been a constant source of controversy. According to new research from a cybersecurity company, Microsoft's artificial intelligence research team accidentally exposed a large amount of private data through the software development platform GitHub a few months ago, including more than 30,000 internal Microsoft Teams messages.

A team at cloud security company Wiz discovered that Microsoft's research team exposed the data when it published open-source training data on GitHub in June. The data was hosted in the cloud and was exposed through a misconfigured link.

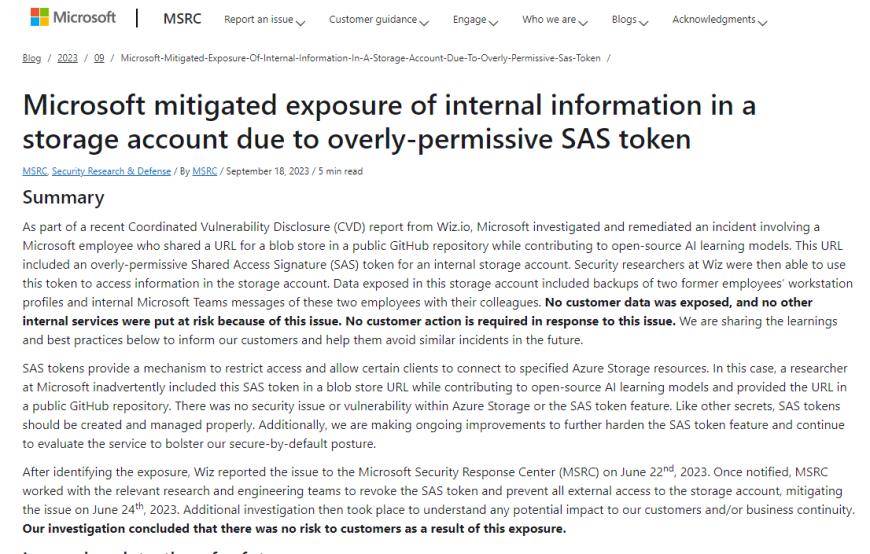

According to a blog post by Wiz, Microsoft's AI research team originally published the open-source training data on GitHub. However, the SAS (shared access signature) token they used was misconfigured: it granted access to the entire storage account rather than only to the intended files, and it gave full control instead of read-only permissions. This meant that anyone with the link could not only view but also delete and overwrite existing files.
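
To make the misconfiguration concrete: an Azure SAS token is a set of URL query parameters, and two of its documented fields map directly onto the problems Wiz describes. `ss`/`srt` appear only on an account-level SAS (scope: the whole storage account), and `sp` lists the granted permissions one letter at a time. Below is a minimal Python sketch of a pre-publication check, assuming the token is available as a query string. `audit_sas_token` is a hypothetical helper, not part of any Azure SDK, and it does not verify signatures, expiry, or network restrictions.

```python
from urllib.parse import parse_qs

# Hypothetical pre-publication check for Azure SAS tokens (illustrative only).
# `sp` letters that only allow reading: r = read, l = list.
# Any other letter (a, c, w, d, ...) permits some kind of modification.
READ_ONLY_PERMISSIONS = {"r", "l"}


def audit_sas_token(token: str) -> list[str]:
    """Return warnings about a SAS token's scope and permissions.

    Only inspects the query parameters; it does not verify the signature,
    expiry, or network restrictions.
    """
    params = parse_qs(token.lstrip("?"))
    findings = []

    # `ss` (signed services) and `srt` (signed resource types) appear only on
    # an account SAS, which covers the whole storage account rather than a
    # single container or blob.
    if "ss" in params or "srt" in params:
        findings.append(
            "account-level SAS: grants access to the entire storage account"
        )

    # `sp` lists the granted permissions, one letter each.
    permissions = set(params.get("sp", [""])[0])
    extra = sorted(permissions - READ_ONLY_PERMISSIONS)
    if extra:
        findings.append("non-read-only permissions: " + "".join(extra))

    return findings


# Usage with made-up tokens (the signatures are placeholders).
overbroad = "?sv=2021-08-06&ss=b&srt=sco&sp=racwdl&se=2030-01-01T00:00:00Z&sig=REDACTED"
scoped = "?sv=2021-08-06&sr=c&sp=rl&se=2030-01-01T00:00:00Z&sig=REDACTED"
print(audit_sas_token(overbroad))  # flags both the account scope and "acdw"
print(audit_sas_token(scoped))  # prints []
```

The check deliberately treats every permission letter other than `r` and `l` as write-capable, so unfamiliar letters are flagged rather than silently allowed.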

According to Wiz, the 38TB of leaked data included disk backups of two Microsoft employees' personal computers. These backups contained passwords and keys for Microsoft services, as well as more than 30,000 internal Microsoft Teams messages from 359 Microsoft employees.

Wiz researchers noted that open data sharing is an important part of AI training, but that large-scale data sharing can also expose companies to significant risk if it is not handled correctly.

Wiz CTO and co-founder Ami Luttwak said that Wiz reported the issue to Microsoft in June, and Microsoft quickly removed the exposed data. The Wiz research team found the exposed data while scanning the Internet for misconfigured storage.

In response, a Microsoft spokesperson commented afterwards, "We have confirmed that no customer data has been exposed and no other internal services have been compromised."

In a blog post published on Monday, Microsoft said it had investigated and remediated an incident in which a Microsoft employee shared a URL to an open-source AI learning model in a public GitHub repository. According to Microsoft, the exposed data in the storage account included backups of two former employees' workstation configuration files, as well as internal Microsoft Teams messages between those two former employees and their colleagues.

Source: Financial Associated Press
