Today we are going to introduce the paper "ReAct: Synergizing Reasoning and Acting in Language Models", a collaboration between researchers from Google Research and Princeton University. The paper explores the potential of combining reasoning and acting in language models. The reasoning abilities of large language models (LLMs), such as chain-of-thought prompting, and their acting abilities, such as action plan generation, have mostly been studied separately; ReAct combines the two in a single framework, which is why I think this paper is very important. The ReAct approach lets agents use a variety of tools, such as web search and SQL databases, which makes its reasoning and acting capabilities highly extensible.
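To make the idea of tool use concrete, here is a minimal Python sketch of how an agent might expose tools to a model: each tool is a function that takes the text argument of an action and returns text that becomes the next observation. The tool names (`SQL`, `Search`), the `dispatch` helper, and the in-memory `papers` table are hypothetical illustrations, not part of the paper or of any library, and the `Search` tool is a stub with no real web access.

```python
import sqlite3
from typing import Callable, Dict

# Hypothetical tool type: text argument in, text observation out.
ToolFn = Callable[[str], str]


def make_sql_tool(conn: sqlite3.Connection) -> ToolFn:
    """Wrap a SQLite connection as a text-in/text-out tool."""
    def run_sql(query: str) -> str:
        try:
            rows = conn.execute(query).fetchall()
        except sqlite3.Error as exc:
            # Errors are returned as observations so the model can react to them.
            return f"SQL error: {exc}"
        return repr(rows)
    return run_sql


def build_tools() -> Dict[str, ToolFn]:
    # Toy in-memory database used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE papers (title TEXT, year INTEGER)")
    conn.execute("INSERT INTO papers VALUES ('ReAct', 2022)")
    return {
        "SQL": make_sql_tool(conn),
        # Stub for a web search tool; a real agent would call a search API here.
        "Search": lambda q: f"(no web access in this sketch; query was {q!r})",
    }


def dispatch(tools: Dict[str, ToolFn], name: str, arg: str) -> str:
    """Route an action name and argument to the matching tool."""
    if name not in tools:
        return f"Unknown tool: {name}. Available: {', '.join(sorted(tools))}"
    return tools[name](arg)


if __name__ == "__main__":
    tools = build_tools()
    print(dispatch(tools, "SQL", "SELECT year FROM papers WHERE title = 'ReAct'"))
```

Returning errors as text rather than raising keeps the agent loop alive: the model sees the failure as an observation and can decide on its next step.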

Human intelligence is characterized by the seamless combination of task-oriented actions and reasoning about next steps. This ability allows us to learn new tasks quickly, make reliable decisions, and adapt to unforeseen circumstances. The goal of ReAct is to replicate this synergy in language models, enabling them to generate reasoning steps and task-specific actions in an interleaved manner.

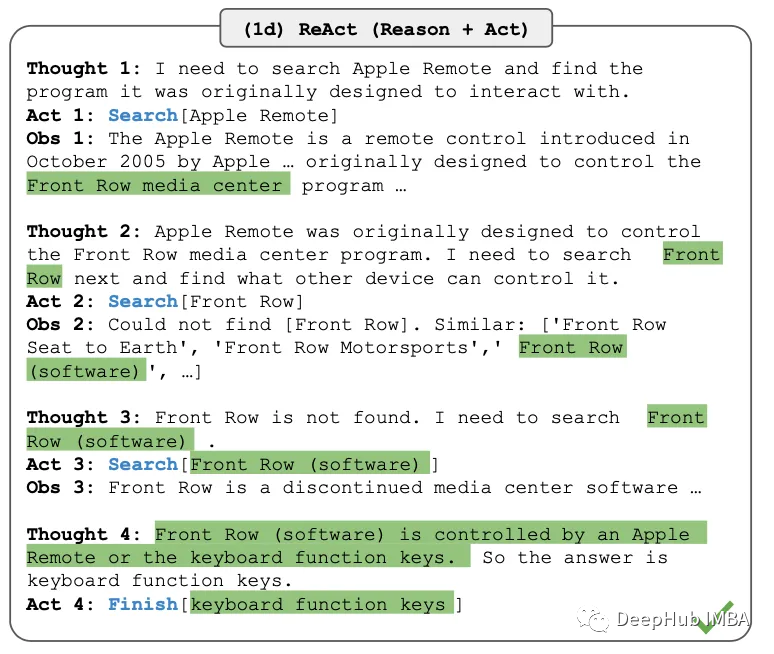

ReAct prompts large language models to generate verbal reasoning traces and actions for a given task. These prompts consist of a small number of in-context examples that guide the model's generation of thoughts and actions; an example is shown in the figure. The examples guide the agent through a cyclical process: generate a thought, take an action, and then observe the result of that action. By interleaving reasoning traces and actions, ReAct allows the model to reason dynamically: it can create and adjust high-level plans while also interacting with the external environment to gather additional information.
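The thought, action, observation cycle can be sketched as a short loop. This is a minimal Python illustration under stated assumptions, not the paper's implementation: the model is replaced by a hypothetical `scripted_llm` that replays two canned turns, the `search` tool is a toy dictionary standing in for an external source such as a Wikipedia API, and the `Action: Name[argument]` format with a `Finish` action is loosely modeled on the paper's question-answering examples.

```python
import re
from typing import Callable, Dict

# Hypothetical stand-in for an LLM: it ignores the prompt and replays canned
# "Thought/Action" turns so the loop runs without a model or API key.
SCRIPT = [
    "Thought: I need to find the capital of France.\nAction: Search[France]",
    "Thought: The observation says the capital is Paris.\nAction: Finish[Paris]",
]

# Matches lines such as "Action: Search[France]" (assumed action format).
ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)


def scripted_llm(prompt: str, step: int) -> str:
    return SCRIPT[step]


def search(entity: str) -> str:
    # Toy knowledge base standing in for an external tool.
    kb = {"France": "France is a country in Europe. Its capital is Paris."}
    return kb.get(entity, f"Could not find {entity}.")


def react(question: str,
          llm: Callable[[str, int], str],
          tools: Dict[str, Callable[[str], str]],
          max_steps: int = 5) -> str:
    prompt = f"Question: {question}\n"
    for step in range(max_steps):
        output = llm(prompt, step)          # 1. model emits a thought and an action
        prompt += output + "\n"
        match = ACTION_RE.search(output)
        if match is None:
            prompt += "Observation: Invalid action format.\n"
            continue
        name, arg = match.group(1), match.group(2)
        if name == "Finish":                # 2a. model has settled on an answer
            return arg
        tool = tools.get(name)              # 2b. otherwise execute the action
        observation = tool(arg) if tool else f"Unknown action {name}."
        prompt += f"Observation: {observation}\n"  # 3. feed the result back
    return "No answer within the step budget."


if __name__ == "__main__":
    print(react("What is the capital of France?", scripted_llm, {"Search": search}))
```

With a real model, `llm` would typically send `prompt` (prefixed with the few-shot examples) to the LLM and stop generation before the next `Observation:` line, so that the environment rather than the model supplies the observations.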

Applications and Results

Researchers applied ReAct to a variety of language reasoning and decision-making tasks, including question answering (HotpotQA), fact verification (FEVER), text-based games (ALFWorld), and web page navigation (WebShop). The results are strong: ReAct outperformed the baselines on several of these tasks and produced task-solving trajectories that were more interpretable and trustworthy.

The interleaved structure of reasoning, action, and observation steps improves the reliability and credibility of ReAct, but it also limits the flexibility of its reasoning steps, resulting in a higher reasoning error rate than chain-of-thought (CoT) prompting on some tasks.

The Importance of Reasoning and Action

The researchers also conducted ablation experiments to understand the importance of reasoning and acting in different tasks. They found that ReAct's combination of internal reasoning and external actions generally outperformed baselines that used reasoning or acting alone. This highlights the value of integrating the two processes for more effective decision-making.
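The ablation compares prompting variants that differ in which parts of a trajectory the model sees and produces. As a rough sketch, the hypothetical `render` function below formats one trajectory in three styles: ReAct (thoughts, actions, and observations), act-only (no thoughts), and reasoning-only in the spirit of chain-of-thought (thoughts only, no interaction with the environment). The `Step` type and the line labels are assumptions for illustration. In a real reasoning-only baseline the model reasons from its internal knowledge, so its thoughts would differ from ones written alongside observations.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Step:
    thought: str
    action: Optional[str] = None       # e.g. "Search[France]"; None for pure reasoning
    observation: Optional[str] = None  # environment feedback, if any


def render(steps: List[Step], mode: str) -> str:
    """Format one trajectory for a prompting variant.

    mode: "react" (thoughts + actions + observations),
          "act"   (actions + observations only),
          "cot"   (thoughts only, no environment interaction).
    """
    if mode not in {"react", "act", "cot"}:
        raise ValueError(f"unknown mode: {mode}")
    lines = []
    for i, s in enumerate(steps, start=1):
        if mode in ("react", "cot"):
            lines.append(f"Thought {i}: {s.thought}")
        if mode in ("react", "act") and s.action is not None:
            lines.append(f"Action {i}: {s.action}")
            if s.observation is not None:
                lines.append(f"Observation {i}: {s.observation}")
    return "\n".join(lines)


if __name__ == "__main__":
    demo = [
        Step("I should search for France.", "Search[France]", "Its capital is Paris."),
        Step("So the answer is Paris.", "Finish[Paris]"),
    ]
    for mode in ("react", "act", "cot"):
        print(f"--- {mode} ---\n{render(demo, mode)}")
```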

Although ReAct has achieved good results, there is still room for improvement. The researchers suggest scaling up ReAct by training it on more tasks and combining it with complementary paradigms such as reinforcement learning. In addition, the model could be fine-tuned on more human-annotated data to further improve performance.

ReAct is a big step towards smarter, more general AI systems, and it also underpins some very useful agent features in the LangChain library. By combining reasoning and acting in language models, ReAct has demonstrated performance improvements across a range of tasks while also enhancing interpretability and trustworthiness. As artificial intelligence continues to evolve, the integration of reasoning and acting will play a key role in creating more capable and adaptive AI systems.

The above is the detailed content of Achieving smarter AI: ReAct technology that integrates reasoning and behavior in language models. For more information, please follow other related articles on the PHP Chinese website!