Use a diffusion model to generate textures for 3D objects: you can do it with a single sentence!

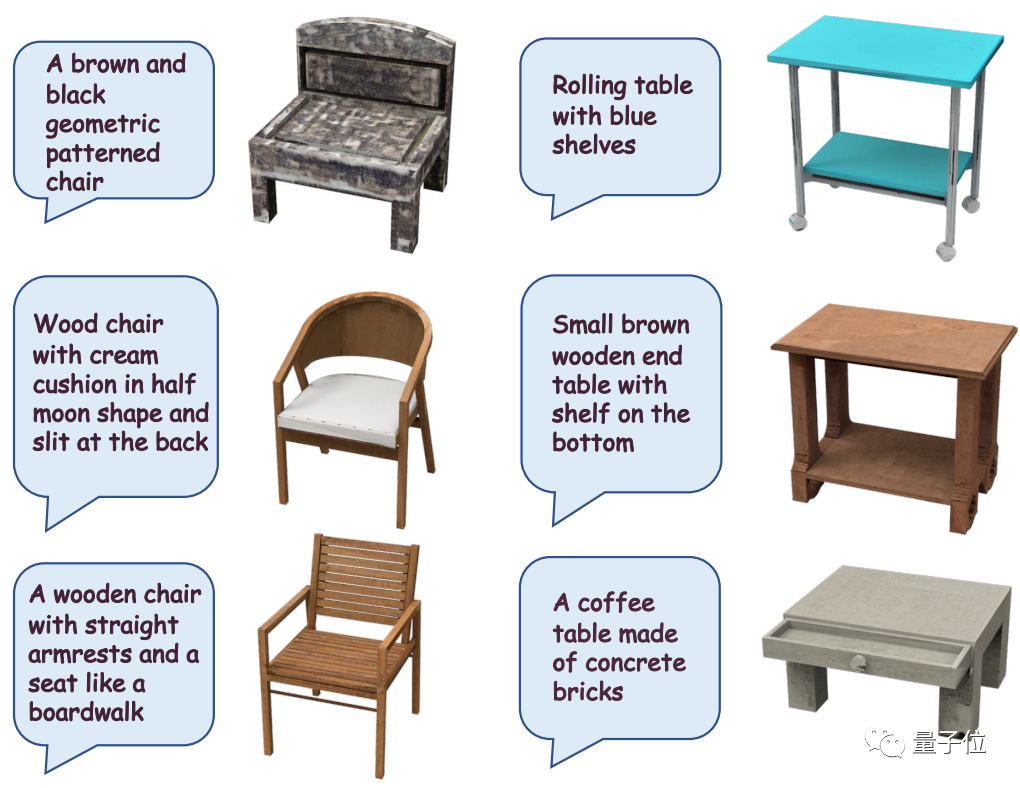

Type "a chair with a brown and black geometric pattern", and the diffusion model immediately gives the chair a vintage-looking texture with a period feel.

Even from a screenshot in which you can't tell what the tabletop looks like, the AI can imaginatively add a detailed wood texture to it right away.

Keep in mind that adding texture to a 3D object is not as simple as "changing the color".

When designing materials, you need to consider multiple parameters such as roughness, reflection, transparency, vortex, and bloom. To get good results, you need not only knowledge of materials, lighting, and rendering, but also repeated rounds of test renders and revisions. If the material changes, you may have to start the design over.

(Figure: the effect of missing textures in a game scene)

However, textures designed by earlier AI methods did not look good, so texture design has remained time-consuming and labor-intensive, which also drives up costs.

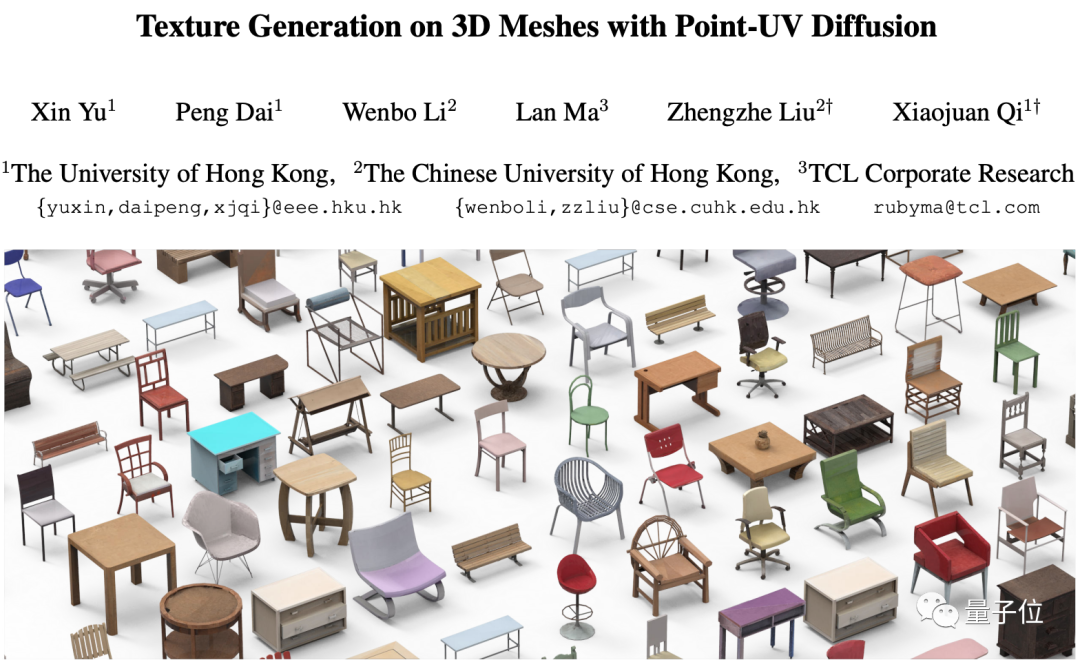

Researchers from The University of Hong Kong, The Chinese University of Hong Kong, and TCL recently developed a new AI method for designing textures for 3D objects. It not only faithfully preserves the object's original shape, but also produces more realistic textures that fit the object's surface closely.

This work was accepted as an oral paper at ICCV 2023.

So how does it solve this problem? Let's take a look.

Previous AI-based 3D texture generation suffered from two main types of problems.

First, the generated textures are unrealistic and have limited detail.

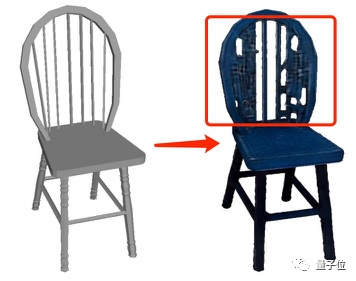

Second, the generation process applies special processing to the 3D object's geometry, so the generated texture cannot fit the original object perfectly and strange shapes "pop out".

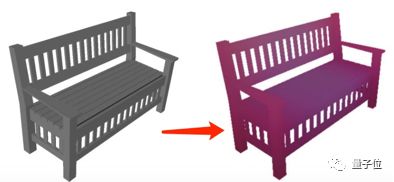

Therefore, to keep the structure of the 3D object stable while generating detailed, realistic textures, the researchers designed a framework called Point-UV diffusion.

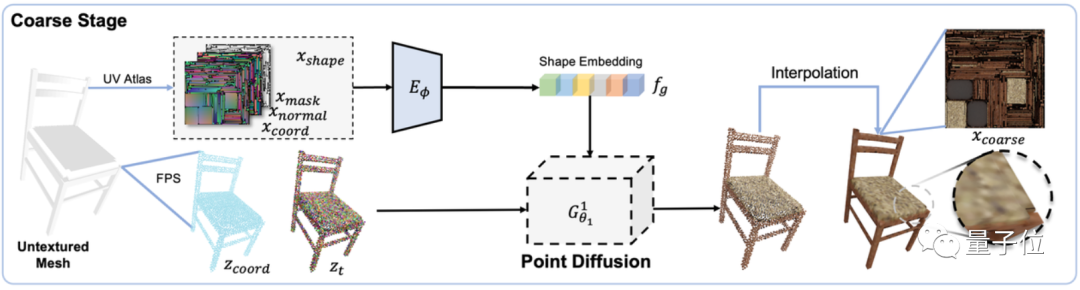

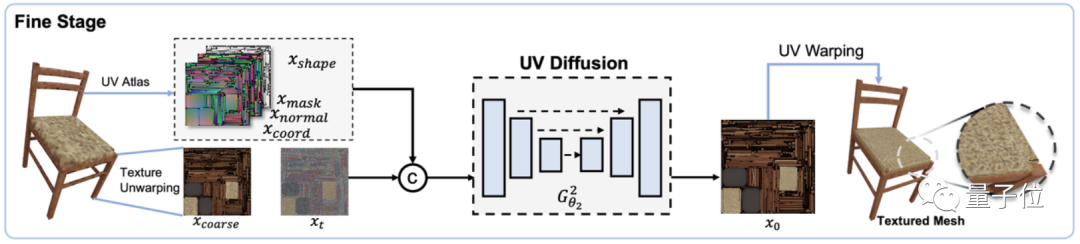

The framework consists of two modules, "rough design" and "finishing". Both are based on diffusion models, but each module uses a different kind of diffusion model.

In the "rough design" module, the authors train a 3D diffusion model that takes shape features (surface normals, coordinates, and masks) as input conditions and predicts the color of each point on the object's shape, producing a coarse texture.
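To make the "rough design" step more concrete, here is a minimal sketch of how a point-wise diffusion input could be assembled. It assumes the standard DDPM forward process with a linear noise schedule, and it treats the mask as a per-point 0/1 flag because this article does not say what the mask encodes. The function names (`linear_alpha_bars`, `noise_point_colors`, `build_point_inputs`) are hypothetical and not taken from the authors' code; the denoising network itself is omitted.

```python
import math
import random
from typing import List, Sequence


def linear_alpha_bars(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Cumulative products alpha_bar_t for a linear beta schedule (an assumed, common DDPM choice)."""
    alpha_bars: List[float] = []
    prod = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def noise_point_colors(colors: Sequence[Sequence[float]], alpha_bar: float, rng: random.Random) -> List[List[float]]:
    """Forward process x_t = sqrt(a) * x_0 + sqrt(1 - a) * eps, applied to each point's RGB color."""
    a, s = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [[a * c + s * rng.gauss(0.0, 1.0) for c in rgb] for rgb in colors]


def build_point_inputs(noisy_colors, coords, normals, masks) -> List[List[float]]:
    """Per-point input: noisy RGB (3) + xyz (3) + normal (3) + mask flag (1) = 10 values."""
    return [list(col) + list(p) + list(n) + [float(m)]
            for col, p, n, m in zip(noisy_colors, coords, normals, masks)]


# Usage: two toy points on a flat, upward-facing surface.
rng = random.Random(0)
alpha_bars = linear_alpha_bars(1000)
colors = [[0.6, 0.4, 0.2], [0.1, 0.1, 0.1]]
coords = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
normals = [[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]
masks = [1, 1]
x_t = noise_point_colors(colors, alpha_bars[500], rng)
inputs = build_point_inputs(x_t, coords, normals, masks)
```

A denoiser trained on inputs like these would learn to recover the clean per-point colors from the noisy ones while "seeing" the geometry, which is the intuition behind conditioning on shape features.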

In the "finishing" module, a 2D diffusion model takes the previously generated coarse texture image and the object's shape as input conditions and generates a finer texture.
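One common way to feed several image-like conditions to a 2D diffusion model is to stack them along the channel axis. The sketch below assumes that approach and assumes the object's shape is supplied as UV-space position and normal maps; this article does not say exactly how Point-UV diffusion encodes these conditions, and `fine_stage_input` and `constant_map` are hypothetical helpers.

```python
from typing import List

Image = List[List[List[float]]]  # H x W x C as nested lists


def concat_channels(*images: Image) -> Image:
    """Stack several H x W x C maps along the channel axis."""
    if not images:
        raise ValueError("need at least one map")
    h, w = len(images[0]), len(images[0][0])
    for img in images:
        if len(img) != h or any(len(row) != w for row in img):
            raise ValueError("all maps must share the same UV resolution")
    return [[[v for img in images for v in img[y][x]] for x in range(w)] for y in range(h)]


def fine_stage_input(noisy_texture: Image, coarse_texture: Image,
                     position_map: Image, normal_map: Image) -> Image:
    """Hypothetical per-texel input: noisy fine texture plus coarse texture and geometry maps."""
    return concat_channels(noisy_texture, coarse_texture, position_map, normal_map)


def constant_map(h: int, w: int, value: List[float]) -> Image:
    """Toy map in which every texel holds the same channel values."""
    return [[list(value) for _ in range(w)] for _ in range(h)]


# Usage: a 2 x 2 UV map with 3 + 3 + 3 + 3 = 12 channels per texel.
x = fine_stage_input(constant_map(2, 2, [0.0] * 3), constant_map(2, 2, [0.5] * 3),
                     constant_map(2, 2, [1.0, 2.0, 3.0]), constant_map(2, 2, [0.0, 0.0, 1.0]))
```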

This design was adopted because previous methods that generate high-resolution point clouds are usually too computationally expensive.

The two-stage approach saves computation and lets each diffusion model play to its own strengths. Compared with previous methods, it also preserves the original structure of the 3D object and produces more refined textures.
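The division of labor between the two stages can be sketched as a small pipeline. This is only an illustration of the data flow described above, with hypothetical names (`TwoStageTexturer`, `points_to_uv`) and toy lambdas standing in for the trained models; in particular, how coarse point colors are baked into a UV image is an assumption here.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Vector = List[float]
Image = List[List[List[float]]]


@dataclass
class TwoStageTexturer:
    """Hypothetical wiring of the coarse (3D point) and fine (2D UV) stages."""
    coarse_stage: Callable[[Sequence[Vector], Vector], List[Vector]]
    points_to_uv: Callable[[List[Vector]], Image]
    fine_stage: Callable[[Image, Image, Vector], Image]

    def __call__(self, shape_features: Sequence[Vector], geometry_uv: Image, condition: Vector) -> Image:
        # Stage 1: predict one color per sampled surface point (a modest number of points).
        point_colors = self.coarse_stage(shape_features, condition)
        # Bake the coarse point colors into a UV-space image.
        coarse_uv = self.points_to_uv(point_colors)
        # Stage 2: refine at texture resolution, conditioned on the coarse map and the geometry.
        return self.fine_stage(coarse_uv, geometry_uv, condition)


# Toy stand-ins so the wiring can be exercised without any trained model.
texturer = TwoStageTexturer(
    coarse_stage=lambda feats, cond: [[0.5, 0.5, 0.5] for _ in feats],
    points_to_uv=lambda colors: [colors],  # a 1 x N x 3 "image"
    fine_stage=lambda uv, geo, cond: [[[min(1.0, c + cond[0]) for c in px] for px in row] for row in uv],
)
texture = texturer([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]], [[[0.0]]], [0.25])
```

Keeping the stages as separate callables mirrors the idea that the 3D model only has to handle a sparse point set, while the cheaper 2D model handles the high-resolution detail.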

The ability to control the result with text or image input comes from CLIP.

For the input, the authors first use a pre-trained CLIP model to extract an embedding vector from the text or image, feed it into an MLP, and finally inject the resulting condition into both the "rough design" and "finishing" networks. In this way, the generated texture can be controlled through text or images.
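The conditioning path can be illustrated with a tiny pure-Python MLP. This sketch assumes a 512-dimensional embedding (the size produced by CLIP ViT-B/32), invented hidden and output sizes, random untrained weights, and simple concatenation as the way the condition is injected; the paper may use a different injection mechanism. Random numbers stand in for a real CLIP output, and `ConditionMLP` and `attach_condition` are hypothetical names.

```python
import math
import random
from typing import List, Sequence


class ConditionMLP:
    """Two-layer MLP mapping a CLIP-style embedding to a condition vector (all sizes assumed)."""

    def __init__(self, in_dim: int, hidden_dim: int, out_dim: int, seed: int = 0):
        rng = random.Random(seed)
        s1, s2 = 1.0 / math.sqrt(in_dim), 1.0 / math.sqrt(hidden_dim)
        # Small random weights; a real model would learn these together with the diffusion networks.
        self.w1 = [[rng.uniform(-s1, s1) for _ in range(in_dim)] for _ in range(hidden_dim)]
        self.b1 = [0.0] * hidden_dim
        self.w2 = [[rng.uniform(-s2, s2) for _ in range(hidden_dim)] for _ in range(out_dim)]
        self.b2 = [0.0] * out_dim

    @staticmethod
    def _linear(w: List[List[float]], b: List[float], x: Sequence[float]) -> List[float]:
        return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]

    def __call__(self, embedding: Sequence[float]) -> List[float]:
        if len(embedding) != len(self.w1[0]):
            raise ValueError("embedding size does not match in_dim")
        hidden = [max(0.0, h) for h in self._linear(self.w1, self.b1, embedding)]  # ReLU
        return self._linear(self.w2, self.b2, hidden)


def attach_condition(per_item_inputs: Sequence[Sequence[float]], condition: Sequence[float]) -> List[List[float]]:
    """Append one global condition vector to every item (a point in stage 1, a texel in stage 2)."""
    return [list(item) + list(condition) for item in per_item_inputs]


# Stand-in for a CLIP embedding: 512 random numbers, not a real CLIP output.
rng = random.Random(42)
clip_embedding = [rng.gauss(0.0, 1.0) for _ in range(512)]
mlp = ConditionMLP(in_dim=512, hidden_dim=256, out_dim=64)
condition = mlp(clip_embedding)
point_inputs = attach_condition([[0.1] * 10, [0.2] * 10], condition)
```

Because the same condition vector feeds both stages, a single prompt steers the coarse colors and the fine detail consistently.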

So how well does the model work?

Let's first look at some textures generated by Point-UV diffusion.

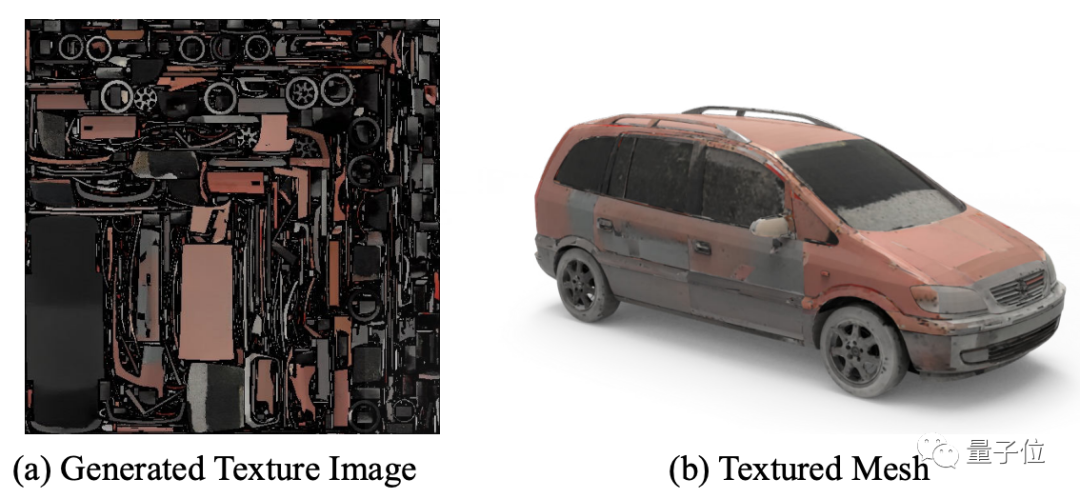

As the renderings show, besides tables and chairs, Point-UV diffusion can also generate textures for objects such as cars, covering a wider variety of objects.

It can generate textures not only from text, but also from an image of the corresponding object.

The authors also compared the textures generated by Point-UV diffusion with those produced by previous methods.

The comparison shows that, relative to other texture generation models such as Texture Fields, Texturify, and PVD-Tex, Point-UV diffusion produces better results in both structure and level of detail.

The authors also note that on the same hardware, Point-UV diffusion takes only 30 seconds, whereas Text2Mesh takes 10 minutes.
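Put as a ratio, the reported timings correspond to roughly a 20-fold speedup (this simply restates the two numbers above; no other benchmark is implied):

$$
\text{speedup} = \frac{10\ \text{min}}{30\ \text{s}} = \frac{600\ \text{s}}{30\ \text{s}} = 20
$$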

However, the authors also point out some current limitations of Point-UV diffusion. For example, when the UV map contains too many "fragmented" parts, it still cannot produce a seamless texture. In addition, the method relies on 3D data for training, and 3D data currently cannot match 2D data in quality or quantity, so the results cannot yet reach the level of detail of 2D image generation.
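One rough way to gauge how "fragmented" a UV map is, is to count its UV islands (separate charts), since every island boundary is a seam where a texture can show discontinuities. The sketch below is not part of Point-UV diffusion; it assumes faces index a separate UV-vertex array (as the `vt` indices in OBJ `f` statements do) and joins faces that share a UV-vertex index using union-find. `count_uv_islands` is a hypothetical helper.

```python
from typing import Dict, Sequence, Tuple


def count_uv_islands(uv_faces: Sequence[Tuple[int, int, int]]) -> int:
    """Count connected UV charts: triangles are connected when they share a UV-vertex index."""
    parent: Dict[int, int] = {}

    def find(v: int) -> int:
        parent.setdefault(v, v)
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving keeps the trees shallow
            v = parent[v]
        return v

    def union(a: int, b: int) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[ra] = rb

    for a, b, c in uv_faces:
        union(a, b)
        union(b, c)
    return len({find(v) for face in uv_faces for v in face})


# Usage: two triangles sharing a UV edge form one island; a third, disconnected triangle adds another.
islands = count_uv_islands([(0, 1, 2), (1, 2, 3), (4, 5, 6)])
```

A mesh whose UV layout breaks into many small islands has many seams, which is the situation the authors describe as still problematic.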

If you're interested in this research, you can read the paper at the link below.

Paper: https://cvmi-lab.github.io/Point-UV-Diffusion/paper/point_uv_diffusion.pdf

Project (still under construction): https://github.com/CVMI-Lab/Point-UV-Diffusion
