You're working for a startup. Suddenly, that hard year of coding is paying off: with success comes more growth, and more demand for your web app to scale.

In this tutorial, I want to humbly use one of our recent "success stories" around our open source WebGL game framework, Babylon.js, and its website. We've been excited to see so many web game developers try it out. But to keep up with demand, we knew we needed a new web hosting solution.

While this tutorial focuses on Microsoft Azure, many of the concepts apply to whichever solution you prefer. We'll also look at the optimizations we've put in place to limit, as much as possible, the output bandwidth from our servers to your browser.

Babylon.js is a personal project we've been working on for over a year now. Since it's a personal project (i.e. our time and money), we hosted the website, textures and 3D scenes on a relatively cheap hosting solution using a small dedicated Windows/IIS machine. The project started in France but quickly attracted a number of 3D and web specialists around the world, as well as some game studios. We were happy with the community's feedback, and the traffic was manageable!

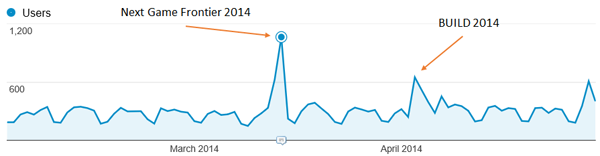

For instance, between February 2014 and April 2014, we averaged more than 7,000 users per month and more than 16,000 page views per month. Some of the events we spoke at generated some interesting peaks:

But the experience on the website was still good enough. Scene loading wasn't amazingly fast, but users weren't complaining that much.

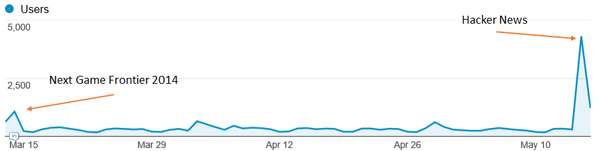

Recently, however, a cool guy decided to share our work on Hacker News. We were really happy to hear such news! But look at what happened to the website's connections:

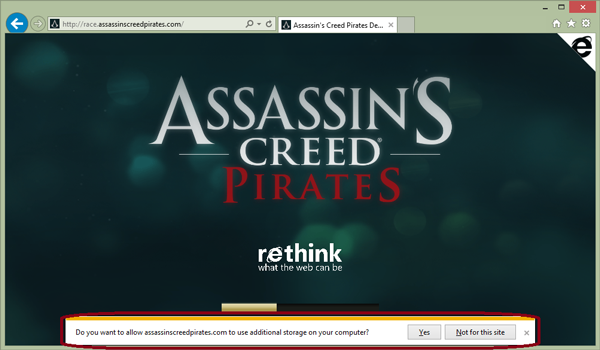

Game over for our little server! It slowly stopped working, and the experience for our users was terrible. The IIS server was spending its time serving large static assets and images, and the CPU usage was too high. As we were about to launch the Assassin's Creed: Pirates WebGL experience running on Babylon.js, it was time to switch to more scalable, professional hosting with a cloud solution.

But before reviewing our hosting options, let's briefly talk about the specifics of our engine and website.

Acknowledgments: I would like to give special thanks to Benjamin Talmard, one of our French Azure Technical Evangelists, who helped us move to Azure.

As we'd like to spend most of our time writing code and features for our engine, we don't want to waste time on plumbing. That's why we immediately decided to choose a PaaS approach over an IaaS one.

Besides that, we love the Visual Studio integration with Azure. I can do almost everything from my favorite IDE. Even though Babylon.js is hosted on GitHub, we use Visual Studio 2013, TypeScript and Visual Studio Online to write our engine. As a note for your own projects, you can get Visual Studio Community and an Azure trial for free.

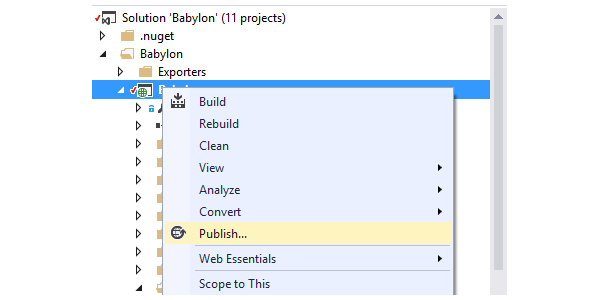

Moving to Azure took me about five minutes:

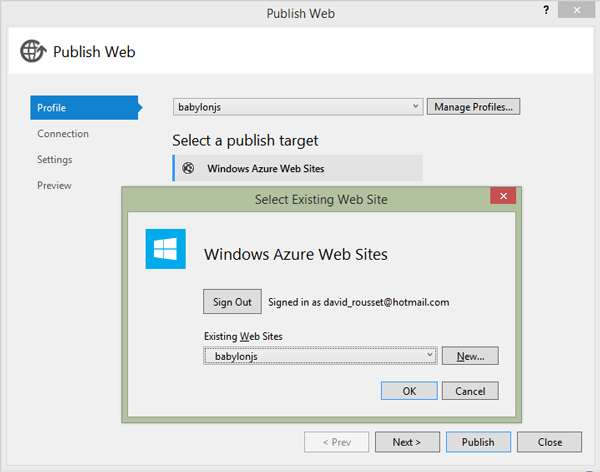

Now comes the great thing about the tooling. As I was logged into Visual Studio with the Microsoft account tied to my Azure subscription, the wizard let me simply select the website I wanted to deploy to.

No need to worry about complex authentication, connection strings, or anything else.

"Next, Next, Next and Publish" and, a few minutes later, once all our assets and files had finished uploading, the website was up and running!

On the configuration side, we wanted to benefit from the cool autoscale service. It would have been a great help in our earlier Hacker News scenario.

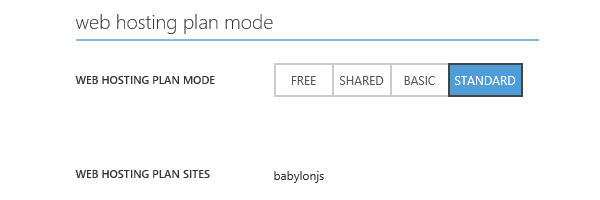

First, your instance has to be configured in Standard mode in the Scale tab.

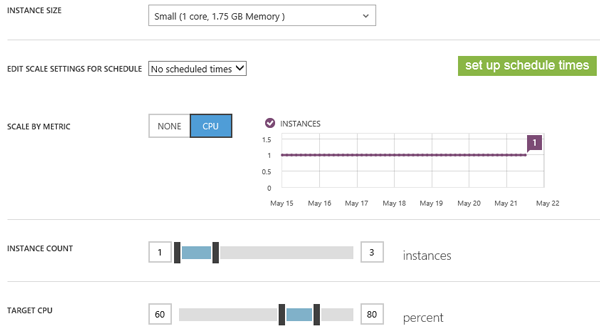

Then you can choose how many instances you want to scale up to automatically, under which CPU conditions, and at which scheduled times.

In our case, we decided to use up to three small instances (1 core, 1.75 GB memory) and to spawn a new instance automatically if CPU utilization goes over 80%. We remove an instance if the CPU drops below 60%. The autoscaling mechanism is always on in our case: we haven't set specific scheduled times.

The idea is really to pay only for what you need during specific time frames and loads. I love the concept. With this Azure service, we would have been able to handle our previous peaks without doing anything!

You also get a quick view of the autoscaling history via the purple chart. In our case, since we moved to Azure, we have never gone over one instance so far. And we'll see below how to minimize the risk of triggering autoscaling.
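To make the scaling rule above concrete, here is a minimal TypeScript sketch of the decision it describes. It is only a model for illustration, not an Azure API: the `ScaleRule` shape, the `nextInstanceCount` function, and the minimum of one instance are assumptions, while the thresholds (80% and 60%) and the cap of three instances come from the configuration described above.

```typescript
// Hypothetical model of the autoscale rule; names are illustrative only.
interface ScaleRule {
  minInstances: number;     // assumption: never scale below one instance
  maxInstances: number;     // "up to three small instances"
  scaleOutAboveCpu: number; // percent CPU that triggers a new instance
  scaleInBelowCpu: number;  // percent CPU below which an instance is removed
}

const rule: ScaleRule = {
  minInstances: 1,
  maxInstances: 3,
  scaleOutAboveCpu: 80,
  scaleInBelowCpu: 60,
};

// Returns the instance count after evaluating one CPU measurement.
function nextInstanceCount(current: number, cpuPercent: number, r: ScaleRule): number {
  if (cpuPercent > r.scaleOutAboveCpu) {
    return Math.min(current + 1, r.maxInstances);
  }
  if (cpuPercent < r.scaleInBelowCpu) {
    return Math.max(current - 1, r.minInstances);
  }
  // Between the two thresholds nothing changes, which avoids flapping.
  return current;
}
```

The gap between the two thresholds is the interesting design choice: a load hovering around 70% neither adds nor removes an instance.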

To conclude on the website configuration, we wanted to enable automatic gzip compression on our specific 3D engine resources (.babylon and .babylonmeshdata files). This was critical for us, as it can cut the bandwidth by up to a factor of three and, hence, the price.

The website is running on IIS. To configure IIS, you need to edit the web.config file. We use the following configuration in our case:

```xml
<system.webServer>
  <staticContent>
    <!-- Declare MIME types for the engine's custom file extensions -->
    <mimeMap fileExtension=".dds" mimeType="application/dds" />
    <mimeMap fileExtension=".fx" mimeType="application/fx" />
    <mimeMap fileExtension=".babylon" mimeType="application/babylon" />
    <mimeMap fileExtension=".babylonmeshdata" mimeType="application/babylonmeshdata" />
    <mimeMap fileExtension=".cache" mimeType="text/cache-manifest" />
    <mimeMap fileExtension=".mp4" mimeType="video/mp4" />
  </staticContent>
  <httpCompression>
    <!-- Compress only the listed types; everything else is left as-is -->
    <dynamicTypes>
      <clear />
      <add enabled="true" mimeType="text/*"/>
      <add enabled="true" mimeType="message/*"/>
      <add enabled="true" mimeType="application/x-javascript"/>
      <add enabled="true" mimeType="application/javascript"/>
      <add enabled="true" mimeType="application/json"/>
      <add enabled="true" mimeType="application/atom+xml"/>
      <add enabled="true" mimeType="application/atom+xml;charset=utf-8"/>
      <add enabled="true" mimeType="application/babylonmeshdata" />
      <add enabled="true" mimeType="application/babylon"/>
      <add enabled="false" mimeType="*/*"/>
    </dynamicTypes>
    <staticTypes>
      <clear />
      <add enabled="true" mimeType="text/*"/>
      <add enabled="true" mimeType="message/*"/>
      <add enabled="true" mimeType="application/javascript"/>
      <add enabled="true" mimeType="application/atom+xml"/>
      <add enabled="true" mimeType="application/xaml+xml"/>
      <add enabled="true" mimeType="application/json"/>
      <add enabled="true" mimeType="application/babylonmeshdata" />
      <add enabled="true" mimeType="application/babylon"/>
      <add enabled="false" mimeType="*/*"/>
    </staticTypes>
  </httpCompression>
</system.webServer>
```

This solution works great, and we even noticed that the time to load our scenes went down compared to our previous host. I guess that's thanks to the better infrastructure and network used by Azure data centers.

However, I had been thinking about moving to Azure for a while, and my first idea was not to have website instances serve my large assets. From the beginning, I was more interested in storing my assets in blob storage, which is better designed for that. It would also open up a possible CDN scenario.

The main reason for using blob storage in our case is to avoid loading the CPU of our website instances with serving assets. If everything is served from blob storage except a few HTML, JavaScript and CSS files, our website instances will rarely need to autoscale.

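But this raises two problems to solve: the assets are now served from another domain, so CORS has to be enabled on the blob storage, and blob storage doesn't support automatic gzip compression.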

CORS on blob storage has been supported for a few months now. This article, "Windows Azure Storage: Introducing CORS", explains how to use the Azure APIs to configure CORS. On my side, I didn't want to write a small application to do that. I found one already written on the web: Cynapta Azure CORS Helper – Free Tool to Manage CORS Rules for Windows Azure Blob Storage.

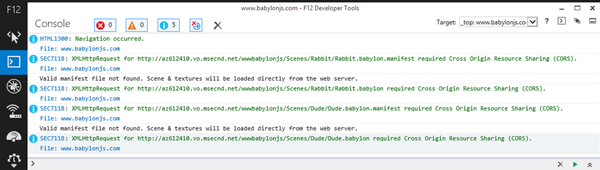

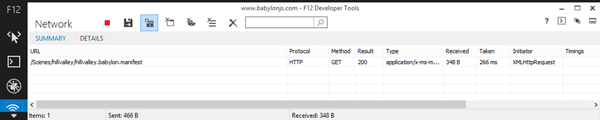

I then just enabled support for GET and the proper headers on my container. To check that everything works as expected, simply open the F12 developer bar and check the console logs:

As you can see, the green log lines mean that everything is working fine.

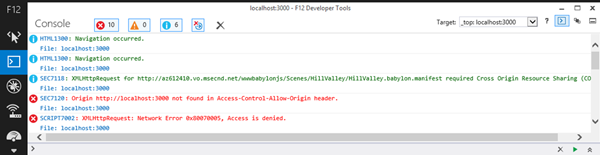

Here is a sample case where it will fail. If you try to load our scenes from our blob storage directly from your localhost machine (or any other domain), you'll get these errors in the logs:

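In conclusion, if you see that your calling domain is not found in the "Access-Control-Allow-Origin" header, followed by an "Access is denied" error, it's because you haven't set your CORS rules properly. It is very important to keep control of your CORS rules; otherwise, anyone can use your assets, and thus your bandwidth, costing you money without you knowing!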
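As a rough illustration of what such a rule decides, here is a small TypeScript sketch. It only models the check; the real evaluation is done by the storage service and the browser. The `CorsRule` shape, the `isRequestAllowed` function, and the `http://www.babylonjs.com` origin value are assumptions made for the example.

```typescript
// Hypothetical, simplified shape of a CORS rule on a blob container.
interface CorsRule {
  allowedOrigins: string[]; // "*" would allow every origin
  allowedMethods: string[]; // HTTP verbs, upper case
}

// Returns true when a request from `origin` using `method` matches the rule.
function isRequestAllowed(rule: CorsRule, origin: string, method: string): boolean {
  const originOk =
    rule.allowedOrigins.indexOf("*") !== -1 ||
    rule.allowedOrigins.indexOf(origin) !== -1;
  const methodOk = rule.allowedMethods.indexOf(method.toUpperCase()) !== -1;
  return originOk && methodOk;
}

// Assumed rule: only the website's own origin may GET the assets.
const sceneRule: CorsRule = {
  allowedOrigins: ["http://www.babylonjs.com"],
  allowedMethods: ["GET"],
};
```

With this rule, a page served from localhost is rejected, which matches the failure case shown above. Replacing the origin list with `"*"` would let any site pull the assets, which is exactly the bandwidth risk to avoid.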

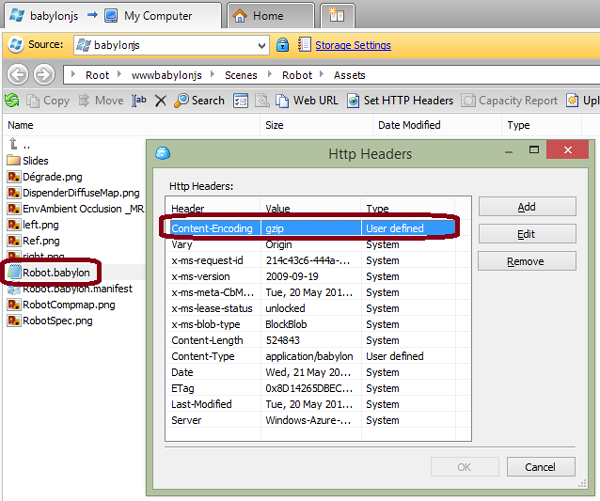

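As I told you before, Azure Blob Storage doesn't support automatic gzip compression. The same seems to be true of competing solutions such as S3. You have two options to work around this: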

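1. Compress the files yourself before uploading them, then set the content-encoding header to gzip on each blob. This solution works, but only for browsers that support gzip (is there still a browser that doesn't?).
2. Upload two versions of each file, one as .extension and another as .extension.gzip, for instance. Then set up a handler on the IIS side that catches the HTTP request from the client, checks whether the accept-encoding header includes gzip, and serves the appropriate file. You'll find more details on the code to implement in this article: Serving GZip Compressed Content from the Azure CDN.

In our case, I don't know of any browser that supports WebGL but not gzip compression. So if the browser doesn't support gzip, there's no real point in going further, as this probably means WebGL isn't supported either.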
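For readers who need the second option anyway, the selection logic of such a handler can be sketched as follows. This is a TypeScript illustration of the idea, not the IIS handler from the linked article: the `pickVariant` and `acceptsGzip` names and the `Variant` shape are assumptions, and the parsing is deliberately simplified (it ignores the `*` wildcard).

```typescript
// Hypothetical result: which stored file to serve and with which header.
interface Variant {
  path: string;
  contentEncoding: string | null; // null means "send no Content-Encoding"
}

// True when the Accept-Encoding value lists gzip with a non-zero quality.
function acceptsGzip(acceptEncoding: string | null): boolean {
  if (acceptEncoding === null) {
    return false;
  }
  return acceptEncoding.split(",").some(function (part) {
    const pieces = part.trim().toLowerCase().split(";");
    if (pieces[0].trim() !== "gzip") {
      return false;
    }
    // An explicit q=0 means the client refuses this coding.
    for (let i = 1; i < pieces.length; i++) {
      const kv = pieces[i].trim().split("=");
      if (kv.length === 2 && kv[0].trim() === "q" && parseFloat(kv[1]) === 0) {
        return false;
      }
    }
    return true;
  });
}

// Chooses between "name.ext" and "name.ext.gzip" for one request.
function pickVariant(path: string, acceptEncoding: string | null): Variant {
  if (acceptsGzip(acceptEncoding)) {
    return { path: path + ".gzip", contentEncoding: "gzip" };
  }
  return { path: path, contentEncoding: null };
}
```

The cost of this option is storing every asset twice and keeping a server in the request path, which is part of why the simpler first option can be preferable.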

I therefore chose the first solution. As we don't have many scenes, and we don't produce a new one every day, I'm currently using this manual process:

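1. I compress the .babylon files on my machine using gzip encoding, with the "compression level" set to "fastest". The other compression levels seemed to cause problems in my tests.
2. I upload the files to blob storage and set the content-encoding header to gzip.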

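I know what you're thinking. Am I going to do that for all my files?!? No, you could build a tool or a post-build script to automate it. For instance, here is a little command-line tool I've built: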

```csharp
// Requires the legacy WindowsAzure.Storage NuGet package.
using System;
using System.IO;
using System.IO.Compression;
using System.Linq;
using System.Text;
using Microsoft.WindowsAzure.Storage;
using Microsoft.WindowsAzure.Storage.Auth;
using Microsoft.WindowsAzure.Storage.Blob;

class Program
{
    static void Main(string[] args)
    {
        string accountName = "yoda";
        string containerName = "wwwbabylonjs";
        string accountKey = "yourmagickey";

        if (args.Length < 2)
        {
            Console.WriteLine("Usage: UploadAndGzipFilesToAzureBlobStorage <blobDirectory> <filespec>...");
            return;
        }

        // First argument must be the target directory inside the Azure blob container
        string directory = args[0];

        try
        {
            StorageCredentials creds = new StorageCredentials(accountName, accountKey);
            CloudStorageAccount account = new CloudStorageAccount(creds, useHttps: true);
            CloudBlobClient client = account.CreateCloudBlobClient();
            CloudBlobContainer blobContainer = client.GetContainerReference(containerName);
            blobContainer.CreateIfNotExists();

            var sceneDirectory = blobContainer.GetDirectoryReference(directory);

            // Remaining arguments are file specs; wildcards are allowed (e.g. *.babylon*)
            foreach (string filespec in args.Skip(1))
            {
                string specdir = Path.GetDirectoryName(filespec);
                string specpart = Path.GetFileName(filespec);

                if (string.IsNullOrEmpty(specdir))
                {
                    specdir = Environment.CurrentDirectory;
                }

                foreach (string file in Directory.GetFiles(specdir, specpart))
                {
                    // GetFiles already returns paths that include specdir,
                    // so combining them again would break relative paths
                    string path = Path.GetFullPath(file);
                    string sceneName = Path.GetFileName(path);

                    Console.WriteLine("Working on " + sceneName + "...");

                    CloudBlockBlob blob = sceneDirectory.GetBlockBlobReference(sceneName);
                    // These headers are what lets the browser decompress transparently
                    blob.Properties.ContentEncoding = "gzip";
                    blob.Properties.ContentType = "application/babylon";

                    string sceneTextContent = File.ReadAllText(path);
                    byte[] bytes = Encoding.UTF8.GetBytes(sceneTextContent);

                    using (MemoryStream ms = new MemoryStream())
                    {
                        // leaveOpen: true keeps ms usable once the gzip stream is closed
                        using (GZipStream gzip = new GZipStream(ms, CompressionMode.Compress, true))
                        {
                            gzip.Write(bytes, 0, bytes.Length);
                        }

                        ms.Position = 0;
                        Console.WriteLine("Gzip done.");
                        blob.UploadFromStream(ms);
                        Console.WriteLine("Uploading in " + accountName + "/" + containerName + "/" + directory + " done.");
                    }
                }
            }
        }
        catch (Exception ex)
        {
            Console.WriteLine(ex);
        }
    }
}
```

To use it, I can run the following to push a scene made of multiple files (our incremental scenes contain several .babylonmeshdata files):

`UploadAndGzipFilesToAzureBlobStorage Scenes/Espilit C:\Boulot\Babylon\Scenes\Espilit\*.babylon*`

Or simply, to push a single file:

`UploadAndGzipFilesToAzureBlobStorage Scenes/Espilit C:\Boulot\Babylon\Scenes\Espilit\Espilit.babylon`

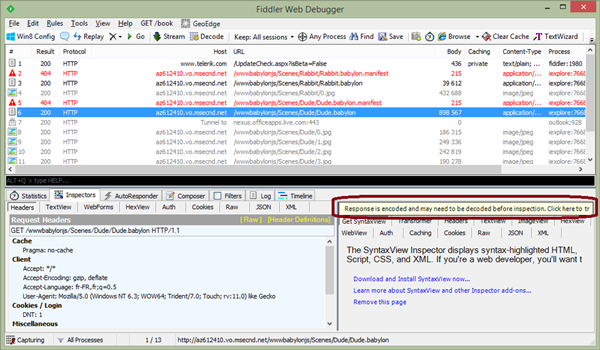

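To check that gzip works as expected with this solution, I use Fiddler. Load your content from your client machine and check in the network traces that the returned content really is compressed and can be decompressed: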

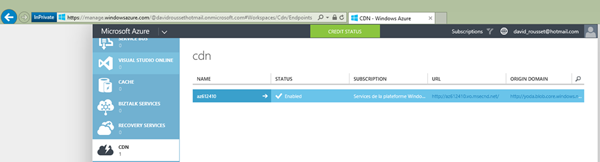

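Once you've completed the two previous steps, you just need to click a single button in the Azure administration page to enable the CDN and map it to your blob storage: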

It's that simple! In my case, I just need to change the following URL: http://yoda.blob.core.windows.net/wwwbabylonjs/Scenes to http://az612410.vo.msecnd.net/wwwbabylonjs/Scenes. Note that you can map this CDN domain to your own domain name if you want to.
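If asset URLs are built in code, the switch can be kept in one place. The following TypeScript sketch shows the idea; the `toCdnUrl` helper is hypothetical (it is not part of Babylon.js), while the two host names are the ones given above.

```typescript
// Base URLs taken from the article: blob storage endpoint and its CDN mapping.
const BLOB_BASE = "http://yoda.blob.core.windows.net/";
const CDN_BASE = "http://az612410.vo.msecnd.net/";

// Rewrites a blob storage URL to the matching CDN URL.
// URLs that do not point at the blob endpoint are returned unchanged.
function toCdnUrl(url: string): string {
  if (url.indexOf(BLOB_BASE) === 0) {
    return CDN_BASE + url.slice(BLOB_BASE.length);
  }
  return url;
}
```

Only the host changes; the container name and the path below it stay identical, so nothing has to be re-uploaded.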

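Thanks to that, we can serve you our 3D assets very quickly, as you'll be served from one of the node locations listed here: Azure Content Delivery Network (CDN) Node Locations.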

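Our website is currently hosted in the North Europe Azure data center. But if you're coming from Seattle, you'll hit that server only to download our basic index.html, index.js and index.css files and a few screenshots. All the 3D assets will be served from the Seattle node right near you!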

Note: all our demos use the fully optimized experience (blob storage with gzip, CDN and DB caching).

Optimizing loading times and controlling output bandwidth costs isn't just a server-side matter. You can also build some logic on the client side to optimize things. Fortunately, we've done that since v1.4 of the Babylon.js engine. I've explained in detail how I implemented support for IndexedDB in this article: Using IndexedDB to handle your 3D WebGL assets: sharing feedback and tips of Babylon.JS. You'll find how to activate it in Babylon.js on our wiki: Caching the resources in IndexedDB.

Basically, you just have to create a .babylon.manifest file matching the name of the .babylon scene, then set what you'd like to cache (textures and/or the JSON scene). That's it.
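As an illustration, the TypeScript sketch below builds the content of such a manifest for the Espilit scene. The three field names follow the manifest format as I recall it from the Babylon.js documentation, so treat them as an assumption and check them against the wiki page; the `SceneManifest` type and the `manifestNameFor` helper are hypothetical.

```typescript
// Assumed manifest fields; verify against the Babylon.js wiki before use.
interface SceneManifest {
  version: number;                // bump it to force clients to reload the scene
  enableSceneOffline: boolean;    // cache the JSON scene
  enableTexturesOffline: boolean; // cache the textures
}

// The manifest sits next to the scene file and reuses its full name.
function manifestNameFor(sceneFile: string): string {
  return sceneFile + ".manifest";
}

const espilitManifest: SceneManifest = {
  version: 1,
  enableSceneOffline: true,
  enableTexturesOffline: true,
};

// JSON text to save as the file named by manifestNameFor("Espilit.babylon").
const manifestJson: string = JSON.stringify(espilitManifest, null, 2);
```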

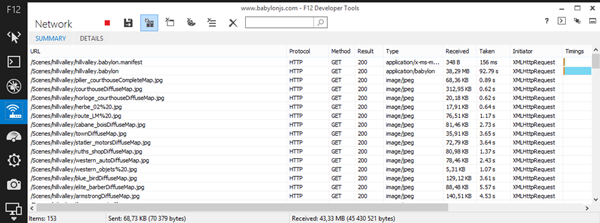

For instance, check what happens with the Hill Valley demo scene. The first time you load it, here are the requests sent:

153 items and 43.33 MB received. But if you agree to let babylonjs.com "use additional storage on your computer", here is what you'll see the second time you load the same scene:

1 item and 348 bytes! We only check whether the manifest file has changed. If not, we load everything from the DB, and we save more than 43 MB of bandwidth.
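The decision made on that second load can be sketched as below. This is a simplified TypeScript model of the behavior described above, not the engine's actual code: the `chooseSource` function and the idea of comparing a stored version number with the one in the freshly downloaded manifest are assumptions for illustration.

```typescript
type LoadSource = "network" | "indexeddb";

// cachedVersion: version stored locally with the cached scene, or null if
// nothing has been cached yet. manifestVersion: version read from the small
// manifest file, which is the only request sent on a repeat visit.
function chooseSource(cachedVersion: number | null, manifestVersion: number): LoadSource {
  if (cachedVersion === null) {
    return "network"; // first visit: download everything
  }
  // Unchanged manifest: serve from the local DB. Changed: download again.
  return cachedVersion === manifestVersion ? "indexeddb" : "network";
}
```

This is why the repeat visit costs a single small request: the manifest is the only thing that must always come from the server.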

For instance, this approach is used in the Assassin's Creed Pirates games:

Let's think about that.

This article is part of the web development technology series from Microsoft. We're excited to share Microsoft Edge and the new EdgeHTML rendering engine with you. Get free virtual machines or test remotely on your Mac, iOS, Android or Windows device at http://dev.modern.ie/.

