Today I will share with you some common knowledge points about memory management in Go.

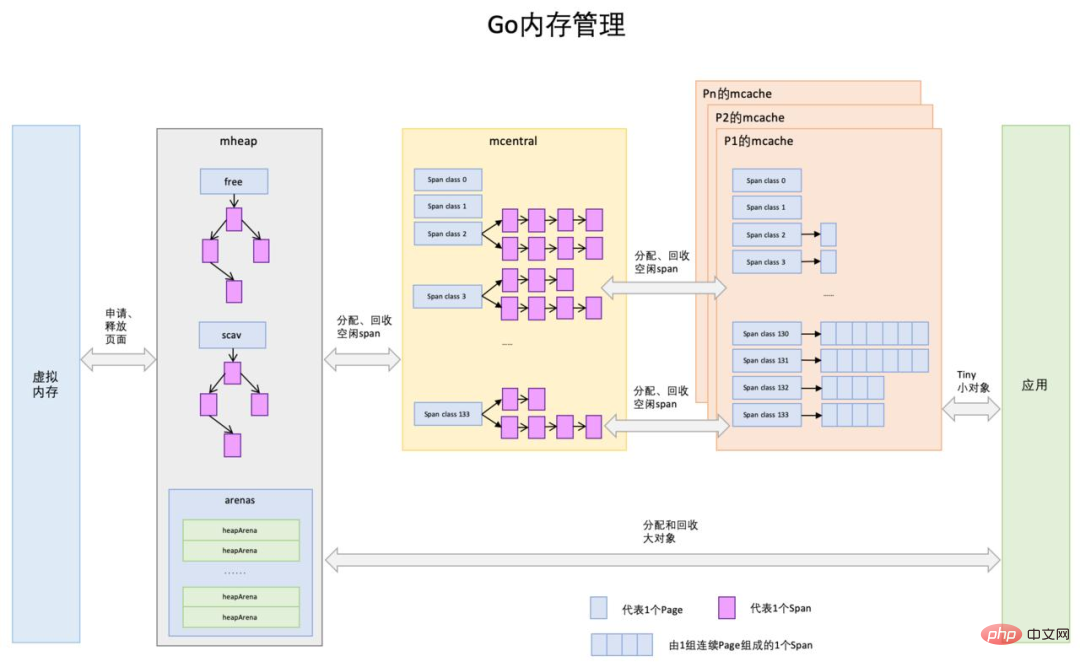

The process of allocating memory in Go is mainly composed of three The levels managed by large components from top to bottom are:

When the program starts, Go will first apply for a large piece of memory from the operating system, and It is left to the global management of themheapstructure.

How to manage it specifically? mheap will divide this large piece of memory into small memory blocks of different specifications, which we call mspan. Depending on the size of the specification, there are about 70 types of mspan. The division can be said to be very fine, enough to meet the needs of various object memories. distribution.

So these mspan specifications, large and small, are mixed together, which must be difficult to manage, right?

So there is the next-level component mcentral

Starting a Go program will initialize a lot of mcentral, each mcentral only Responsible for managing mspan of a specific specification.

Equivalent to mcentral, which implements refined management of mspan based on mheap.

But mcentral is globally visible in the Go program, so every time the coroutine comes to mcentral to apply for memory, it needs to be locked.

It can be expected that if each coroutine comes to mcentral to apply for memory, the overhead of frequent locking and releasing will be very large.

Therefore, a secondary proxy of mcentral is needed to buffer this pressure

In a Go program, each threadMwill be bound to a processorP, at a single granularity Only onegoroutinecan be run during multi-processing, and eachPwill be bound to a local cache calledmcache.

When memory allocation is required, the currently runninggoroutinewill look for availablemspanfrommcache. There is no need to lock when allocating memory from the localmcache. This allocation strategy is more efficient.

The number of mspans in mcache is not always sufficient. When the supply exceeds demand, mcache will apply for more mspans from mcentral again, the same , if the number of mspan of mcentral is not enough, mcentral will also apply for mspan from its superior mheap. To be more extreme, what should we do if the mspan in mheap cannot satisfy the program's memory request?

There is no other way, mheap can only shamelessly apply to the big brother of the operating system.

The above supply process is only applicable to scenarios where the memory block is less than 64KB. The reason is that Go cannot use the local cache of the worker threadmcacheand the global central cachemcentralto manage more than 64KB memory allocation, so for those memory applications exceeding 64KB, the corresponding number of memory pages (each page size is 8KB) will be allocated directly from the heap (mheap) to the program.

According to different memory management (allocation and recycling) methods, memory can be divided intoheap memoryandstack memory.

So what’s the difference between them?

Heap memory: The memory allocator and garbage collector are responsible for recycling

Stack memory: Automatically allocated and released by the compiler

When a program is running, there may be multiple stack memories, but there will definitely be only one heap memory.

Each stack memory is independently occupied by a thread or coroutine, so allocating memory from the stack does not require locking, and the stack memory will be automatically recycled after the function ends, and the performance is higher than that of heap memory.

And what about heap memory? Since multiple threads or coroutines may apply for memory from the heap at the same time, applying for memory in the heap requires locking to avoid conflicts, and the heap memory requires the intervention of GC (garbage collection) after the function ends. If A large number of GC operations will seriously degrade program performance.

It can be seen that in order to improve the performance of the program, the memory in the heap should be minimized Allocate up, which can reduce the pressure on GC.

In determining whether a variable is allocated memory on the heap or on the stack, although predecessors have summarized some rules, it is up to the programmer to always pay attention to this issue when coding. The requirements for programmers are quite high.

Fortunately, the Go compiler also opens up the function of escape analysis. Using escape analysis, you can directly detect all the variables allocated on the heap by your programmer (this phenomenon is called escape).

The method is to execute the following command

go build -gcflags '-m -l' demo.go # 或者再加个 -m 查看更详细信息 go build -gcflags '-m -m -l' demo.go

If you escape the analysis tool, you can actually manually determine which variables are allocated on the heap.

So what are these rules?

After summary, there are mainly four situations as follows

According to the scope of use of variables

Determine based on the variable type

Based on the occupied size of the variable

Based on Is the length of the variable determined?

Next we analyze and verify one by one

When When you compile, the compiler will do escape analysis. When it is found that a variable is only used within a function, it can allocate memory for it on the stack.

For example, in the example below

func foo() int { v := 1024 return v } func main() { m := foo() fmt.Println(m) }

we can view the results of escape analysis throughgo build -gcflags '-m -l' demo.go, where-mprints escape analysis information, while-ldisables inline optimization.

From the analysis results, we did not see any escape instructions about the v variable, indicating that it did not escape and was allocated on the stack.

$ go build -gcflags '-m -l' demo.go # command-line-arguments ./demo.go:12:13: ... argument does not escape ./demo.go:12:13: m escapes to heap

If the variable needs to be used outside the scope of the function, and if it is still allocated on the stack, then when the function returns, the memory space pointed to by the variable will be recycled, and the program will inevitably report an error. Therefore, such variables can only be allocated on the heap.

For example, in the example below,returns a pointer

func foo() *int { v := 1024 return &v } func main() { m := foo() fmt.Println(*m) // 1024 }

You can see from the results of escape analysis thatmoved to heap: v, v Variables are memory allocated from the heap, which is obviously different from the above scenario.

$ go build -gcflags '-m -l' demo.go # command-line-arguments ./demo.go:6:2: moved to heap: v ./demo.go:12:13: ... argument does not escape ./demo.go:12:14: *m escapes to heap

除了返回指针之外,还有其他的几种情况也可归为一类:

第一种情况:返回任意引用型的变量:Slice 和 Map

func foo() []int { a := []int{1,2,3} return a } func main() { b := foo() fmt.Println(b) }

逃逸分析结果

$ go build -gcflags '-m -l' demo.go # command-line-arguments ./demo.go:6:12: []int literal escapes to heap ./demo.go:12:13: ... argument does not escape ./demo.go:12:13: b escapes to heap

第二种情况:在闭包函数中使用外部变量

func Increase() func() int { n := 0 return func() int { n++ return n } } func main() { in := Increase() fmt.Println(in()) // 1 fmt.Println(in()) // 2 }

逃逸分析结果

$ go build -gcflags '-m -l' demo.go # command-line-arguments ./demo.go:6:2: moved to heap: n ./demo.go:7:9: func literal escapes to heap ./demo.go:15:13: ... argument does not escape ./demo.go:15:16: in() escapes to heap

在上边例子中,也许你发现了,所有编译输出的最后一行中都是m escapes to heap。

奇怪了,为什么 m 会逃逸到堆上?

其实就是因为我们调用了fmt.Println()函数,它的定义如下

func Println(a ...interface{}) (n int, err error) { return Fprintln(os.Stdout, a...) }

可见其接收的参数类型是interface{},对于这种编译期不能确定其参数的具体类型,编译器会将其分配于堆上。

最开始的时候,就介绍到,以 64KB 为分界线,我们将内存块分为 小内存块 和 大内存块。

小内存块走常规的 mspan 供应链申请,而大内存块则需要直接向 mheap,在堆区申请。

以下的例子来说明

func foo() { nums1 := make([]int, 8191) // < 64KB for i := 0; i < 8191; i++ { nums1[i] = i } } func bar() { nums2 := make([]int, 8192) // = 64KB for i := 0; i < 8192; i++ { nums2[i] = i } }

给-gcflags多加个-m可以看到更详细的逃逸分析的结果

$ go build -gcflags '-m -l' demo.go # command-line-arguments ./demo.go:5:15: make([]int, 8191) does not escape ./demo.go:12:15: make([]int, 8192) escapes to heap

那为什么是 64 KB 呢?

我只能说是试出来的 (8191刚好不逃逸,8192刚好逃逸),网上有很多文章千篇一律的说和ulimit -a中的stack size有关,但经过了解这个值表示的是系统栈的最大限制是 8192 KB,刚好是 8M。

$ ulimit -a -t: cpu time (seconds) unlimited -f: file size (blocks) unlimited -d: data seg size (kbytes) unlimited -s: stack size (kbytes) 8192

我个人实在无法理解这个 8192 (8M) 和 64 KB 是如何对应上的,如果有朋友知道,还请指教一下。

由于逃逸分析是在编译期就运行的,而不是在运行时运行的。因此避免有一些不定长的变量可能会很大,而在栈上分配内存失败,Go 会选择把这些变量统一在堆上申请内存,这是一种可以理解的保险的做法。

func foo() { length := 10 arr := make([]int, 0 ,length) // 由于容量是变量,因此不确定,因此在堆上申请 } func bar() { arr := make([]int, 0 ,10) // 由于容量是常量,因此是确定的,因此在栈上申请 }

The above is the detailed content of An article explains the memory allocation in Go. For more information, please follow other related articles on the PHP Chinese website!