According to a July 13 report, the foreign outlet Semianalysis recently revealed details of GPT-4, the large model OpenAI released in March this year. The disclosed information covers the model's architecture, training and inference infrastructure, parameter count, training dataset, token count, cost, and its Mixture of Experts (MoE) design.

▲ Image source: Semianalysis

According to the report, GPT-4 has about 1.8 trillion parameters across 120 layers, whereas GPT-3 has only about 175 billion. To keep costs reasonable, OpenAI built the model as a Mixture of Experts (MoE).

IT Home note: Mixture of Experts is a type of neural network architecture. The system trains multiple separate models (experts), each on part of the data, and then combines their outputs into a single result for the task.

▲ Image source: Semianalysis

GPT-4 reportedly uses 16 expert models (mixture of experts), each with about 111 billion parameters, and each forward pass is routed through two of them.
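The routing described above (16 experts, two used per forward pass) can be sketched as a top-2 gate. This is only an illustrative toy: the article does not say how GPT-4's gate is computed, so the softmax gate, the renormalized weights, the random gate scores, and the scalar "experts" below are all assumptions made for the example.

```python
import math
import random

NUM_EXPERTS = 16   # expert count reported in the article
TOP_K = 2          # experts used per forward pass, per the article


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=TOP_K):
    """Return [(expert_index, weight)] for the k highest-scoring experts.

    Weights are softmax probabilities renormalized over the chosen experts
    (an assumed scheme, not one confirmed by the article).
    """
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_forward(x, experts, gate_scores):
    """Combine the outputs of the selected experts as a weighted sum."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores))


# Toy experts: expert i simply scales its input by (i + 1). Purely illustrative.
experts = [lambda x, s=i + 1: s * x for i in range(NUM_EXPERTS)]

rng = random.Random(0)
# Random numbers stand in for the scores a learned gate would produce.
gate_scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
selected = route_top_k(gate_scores)
output = moe_forward(1.0, experts, gate_scores)
print("experts used:", [i for i, _ in selected])
print("combined output:", output)
```

Only the two selected experts are evaluated for a given input, which is why a model of this kind can hold far more parameters than it uses on any single forward pass.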

In addition, the model has about 55 billion shared attention parameters and was trained on a dataset of 13 trillion tokens. These tokens are not all unique: tokens repeated across training epochs are counted each time.
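As a rough consistency check, the reported figures can be added up. The sketch below takes 111 billion (1110 x 100 million) parameters per expert and assumes the experts and the shared attention parameters are the only components. The article does not itemize anything else, such as embeddings, so the totals are approximate.

```python
# Figures as reported in the article.
NUM_EXPERTS = 16
PARAMS_PER_EXPERT = 111 * 10**9        # about 111 billion per expert
SHARED_ATTENTION_PARAMS = 55 * 10**9   # about 55 billion shared
EXPERTS_PER_FORWARD_PASS = 2

# All parameters stored in the model.
total_params = NUM_EXPERTS * PARAMS_PER_EXPERT + SHARED_ATTENTION_PARAMS

# Parameters actually used on one forward pass (two experts plus shared attention).
active_params = EXPERTS_PER_FORWARD_PASS * PARAMS_PER_EXPERT + SHARED_ATTENTION_PARAMS

print(f"total:  {total_params / 1e12:.3f} trillion")   # total:  1.831 trillion
print(f"active: {active_params / 1e9:.0f} billion")    # active: 277 billion
print(f"active share: {active_params / total_params:.1%}")
```

The total of about 1.83 trillion matches the reported 1.8 trillion, while under these assumptions each forward pass touches only about 277 billion parameters.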

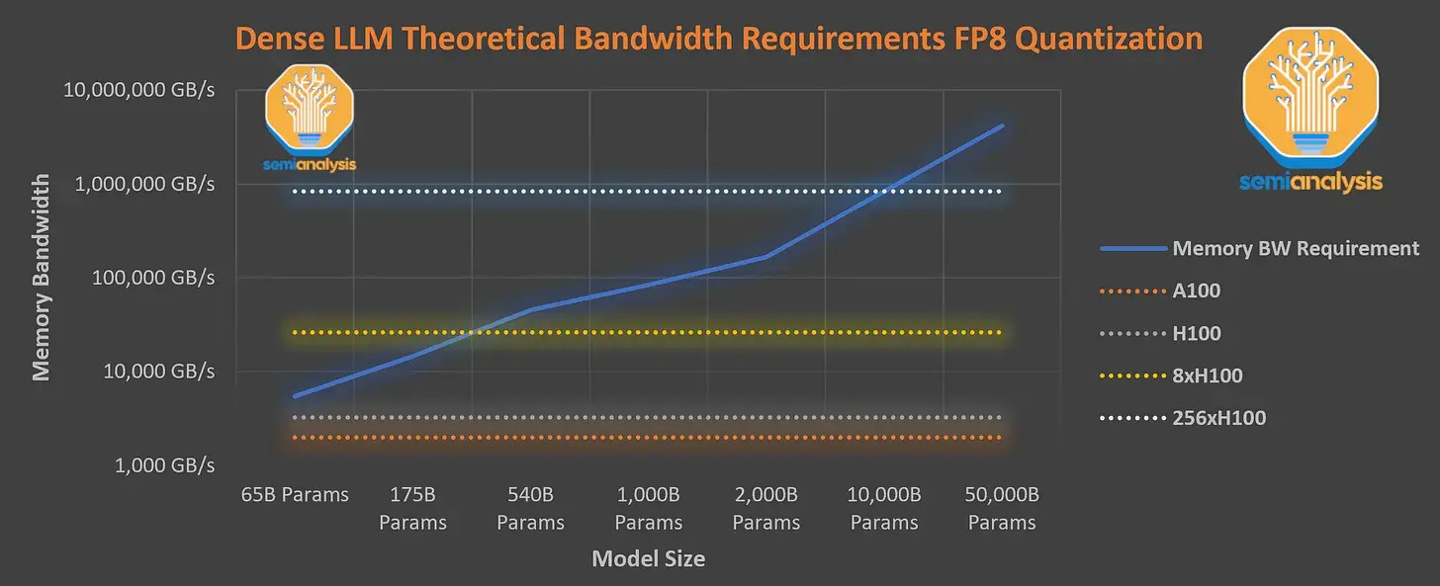

GPT-4 was pre-trained with a context length of 8k; the 32k version was produced by fine-tuning the 8k model. Training was expensive. According to the report, even 8x H100 cannot serve a dense model of the required size at 33.33 tokens per second, which makes inference extremely costly. At US$1 per hour for a physical H100 machine, a single training run would cost as much as US$63 million (approximately 451 million yuan).

OpenAI therefore chose to train the model on A100 GPUs in the cloud, which took slightly longer but brought the final training cost down to about US$21.5 million (approximately 154 million yuan).
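Taking the article's cost figures at face value, a few derived numbers follow directly. The sketch below uses only the amounts quoted above. The implied machine-hours and exchange rate are back-calculated for illustration and are not figures from the report.

```python
# Figures quoted in the article.
H100_ESTIMATE_USD = 63_000_000     # one training run at US$1 per machine-hour
H100_RATE_USD_PER_HOUR = 1
A100_FINAL_COST_USD = 21_500_000   # reported final cost on cloud A100s
H100_ESTIMATE_YUAN = 451_000_000
A100_FINAL_COST_YUAN = 154_000_000

# Machine-hours implied by the US$63 million estimate at US$1 per hour.
implied_machine_hours = H100_ESTIMATE_USD / H100_RATE_USD_PER_HOUR

# Relative saving of the final cost versus the higher estimate.
saving = 1 - A100_FINAL_COST_USD / H100_ESTIMATE_USD

# Exchange rates implied by the two yuan conversions (should roughly agree).
rate_from_estimate = H100_ESTIMATE_YUAN / H100_ESTIMATE_USD
rate_from_final = A100_FINAL_COST_YUAN / A100_FINAL_COST_USD

print(f"implied machine-hours: {implied_machine_hours:,.0f}")
print(f"saving: {saving:.1%}")
print(f"implied yuan per dollar: {rate_from_estimate:.2f} and {rate_from_final:.2f}")
```

Both conversions imply roughly 7.16 yuan per dollar, so the two yuan figures are consistent with each other, and the final cost is about two thirds lower than the higher estimate.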
