CPM-1, released in December 2020, was China's first large Chinese-language model. CPM-Ant, released in September 2022, can surpass full-parameter fine-tuning while tuning only 0.06% of its parameters. WebCPM, released in May 2023, is China's first open-source model for search-based question answering. CPM-Bee, a model with tens of billions of parameters, is the latest base model released by the team. Its Chinese ability ranks first on the authoritative ZeroCLUE leaderboard, and its English ability is on par with LLaMA.

With one breakthrough after another, the CPM series has been leading China's large models toward the top, and the recently released VisCPM is further proof. VisCPM is a series of multi-modal large models jointly open-sourced under OpenBMB by ModelBest (Wall-facing Intelligence), the Tsinghua University NLP Laboratory, and Zhihu. The VisCPM-Chat model supports bilingual multi-modal dialogue in Chinese and English, and the VisCPM-Paint model supports text-to-image generation. Evaluation shows that VisCPM achieves the best performance among Chinese multi-modal open-source models.

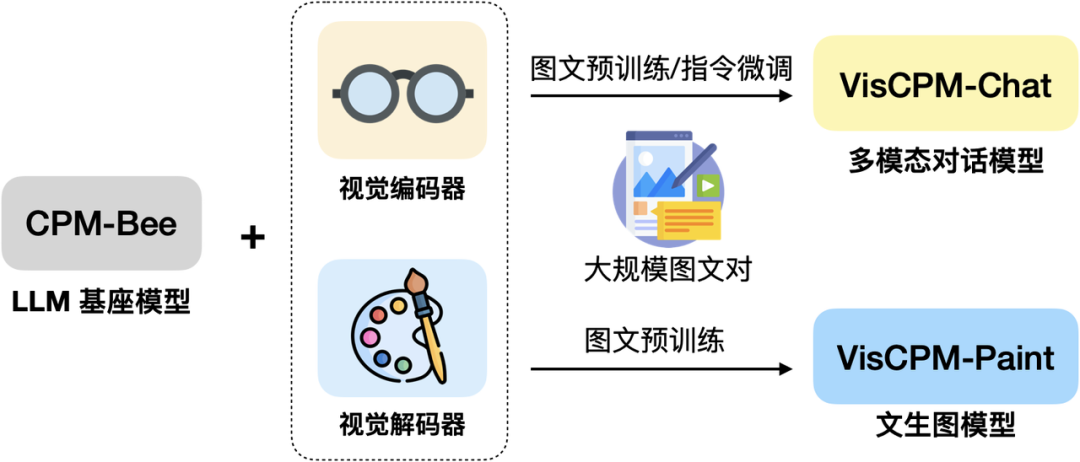

VisCPM is trained on top of CPM-Bee, a base model with tens of billions of parameters, and integrates a visual encoder (Q-Former) and a visual decoder (Diffusion-UNet) to support visual signals as both input and output. Thanks to the excellent bilingual capabilities of the CPM-Bee base, VisCPM can be pre-trained on English multi-modal data alone and still generalize to strong Chinese multi-modal capabilities.

VisCPM simple architecture diagram


Let's take a closer look at what makes VisCPM-Chat and VisCPM-Paint stand out.


VisCPM link: https://github.com/OpenBMB/VisCPM

VisCPM-Chat supports image-oriented bilingual multi-modal dialogue in Chinese and English. The model uses Q-Former as the visual encoder and CPM-Bee (10B) as the language base model, and fuses the vision and language models through a language modeling training objective. Training has two stages: pre-training and instruction fine-tuning.

The team pre-trained VisCPM-Chat on about 100M high-quality English image-text pairs, drawn from CC3M, CC12M, COCO, Visual Genome, LAION, and other datasets. In the pre-training stage, the language model parameters remain fixed and only some parameters of Q-Former are updated, which supports efficient alignment of large-scale vision-language representations.

The team then instruction-tuned VisCPM-Chat. They used the LLaVA-150K English instruction fine-tuning data, mixed with its Chinese translation, to align the model's basic multi-modal capabilities with user intent. During the fine-tuning stage, they updated all model parameters to make more efficient use of the fine-tuning data.

Interestingly, the team found that even when only English instruction data was used for instruction fine-tuning, the model could understand Chinese questions but could only answer in English. This shows that the model's multilingual, multi-modal capabilities generalize well. By further adding a small amount of translated Chinese data in the instruction fine-tuning stage, the language of the model's reply can be aligned with the language of the user's question.
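The data mixing described above can be illustrated with a minimal sketch. It is not the team's actual pipeline: the function name `mix_instruction_data`, the `zh_fraction` parameter, and the record layout are all hypothetical, and the article does not state how much Chinese data was mixed in.

```python
import random


def mix_instruction_data(english, chinese, zh_fraction=0.1, seed=0):
    """Return all English examples plus a random subset of Chinese ones, shuffled.

    zh_fraction is a hypothetical knob: the share of the translated Chinese
    pool to include. The article only says "a small amount".
    """
    if not 0.0 <= zh_fraction <= 1.0:
        raise ValueError("zh_fraction must be between 0 and 1")
    rng = random.Random(seed)  # fixed seed keeps the mix reproducible
    n_zh = round(len(chinese) * zh_fraction)
    mixed = list(english) + rng.sample(list(chinese), n_zh)
    rng.shuffle(mixed)
    return mixed


if __name__ == "__main__":
    # Placeholder records, not real LLaVA-150K entries.
    english = [{"lang": "en", "id": i} for i in range(10)]
    chinese = [{"lang": "zh", "id": i} for i in range(20)]
    mixed = mix_instruction_data(english, chinese, zh_fraction=0.25)
    print(len(mixed), sum(ex["lang"] == "zh" for ex in mixed))
```

Keeping every English example and sampling only part of the Chinese pool mirrors the idea that English data carries the capability while a little Chinese data steers the reply language.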

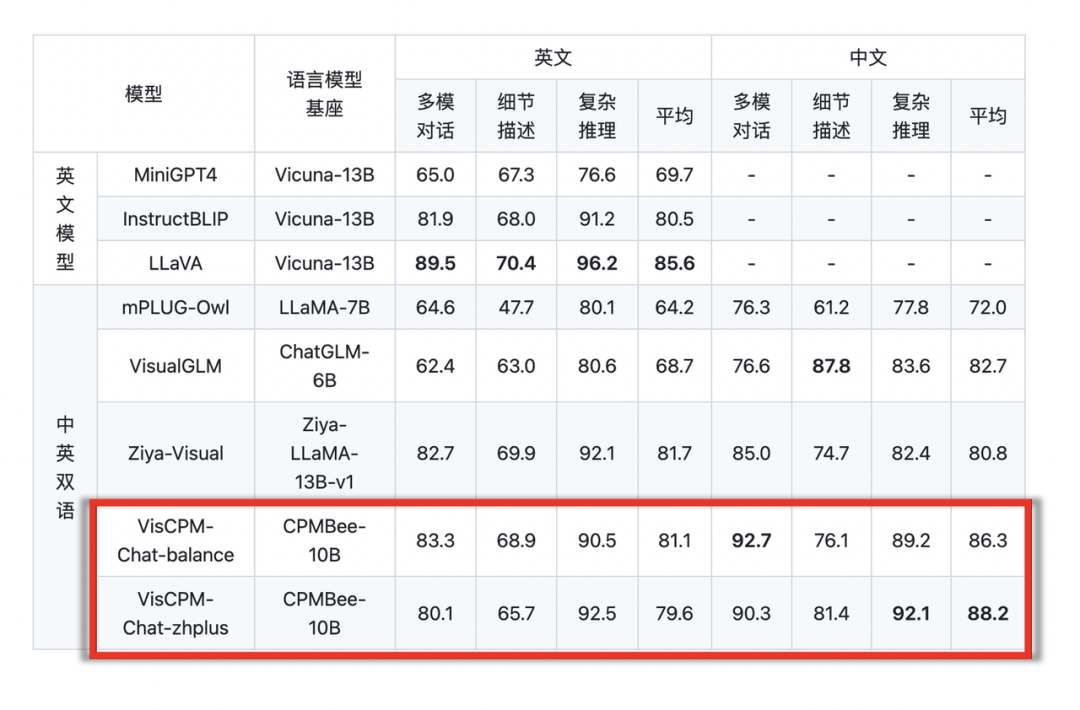

The team evaluated the model on the LLaVA English test set and its translated Chinese counterpart. This benchmark examines the model's performance in open-domain dialogue, detailed image description, and complex reasoning, and uses GPT-4 for scoring. VisCPM-Chat achieved the best average performance in Chinese multi-modal capabilities, performed well in open-domain dialogue and complex reasoning, and also showed good English multi-modal capabilities.

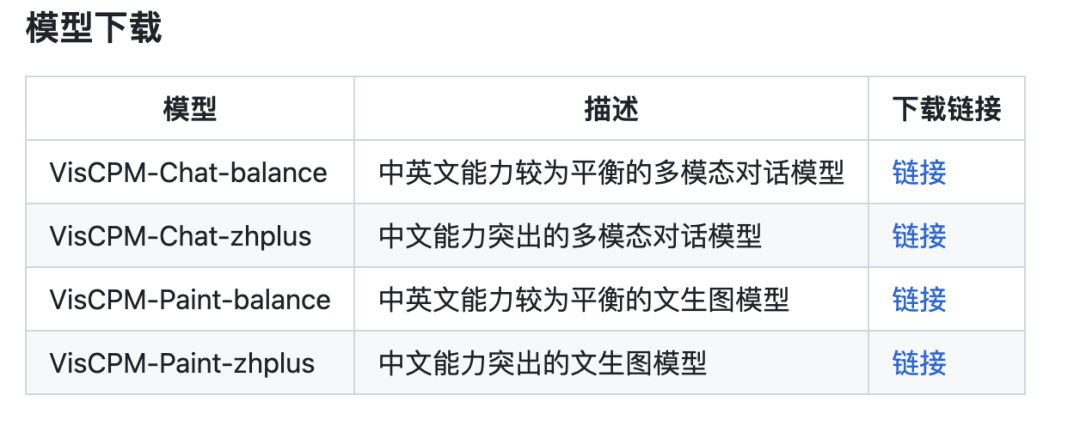

VisCPM-Chat comes in two versions, VisCPM-Chat-balance and VisCPM-Chat-zhplus. The former is more balanced between English and Chinese, while the latter is stronger in Chinese. The two models use the same data in the instruction fine-tuning stage. In the pre-training stage, VisCPM-Chat-zhplus additionally uses 20M cleaned native Chinese image-text pairs and 120M image-text pairs translated into Chinese.


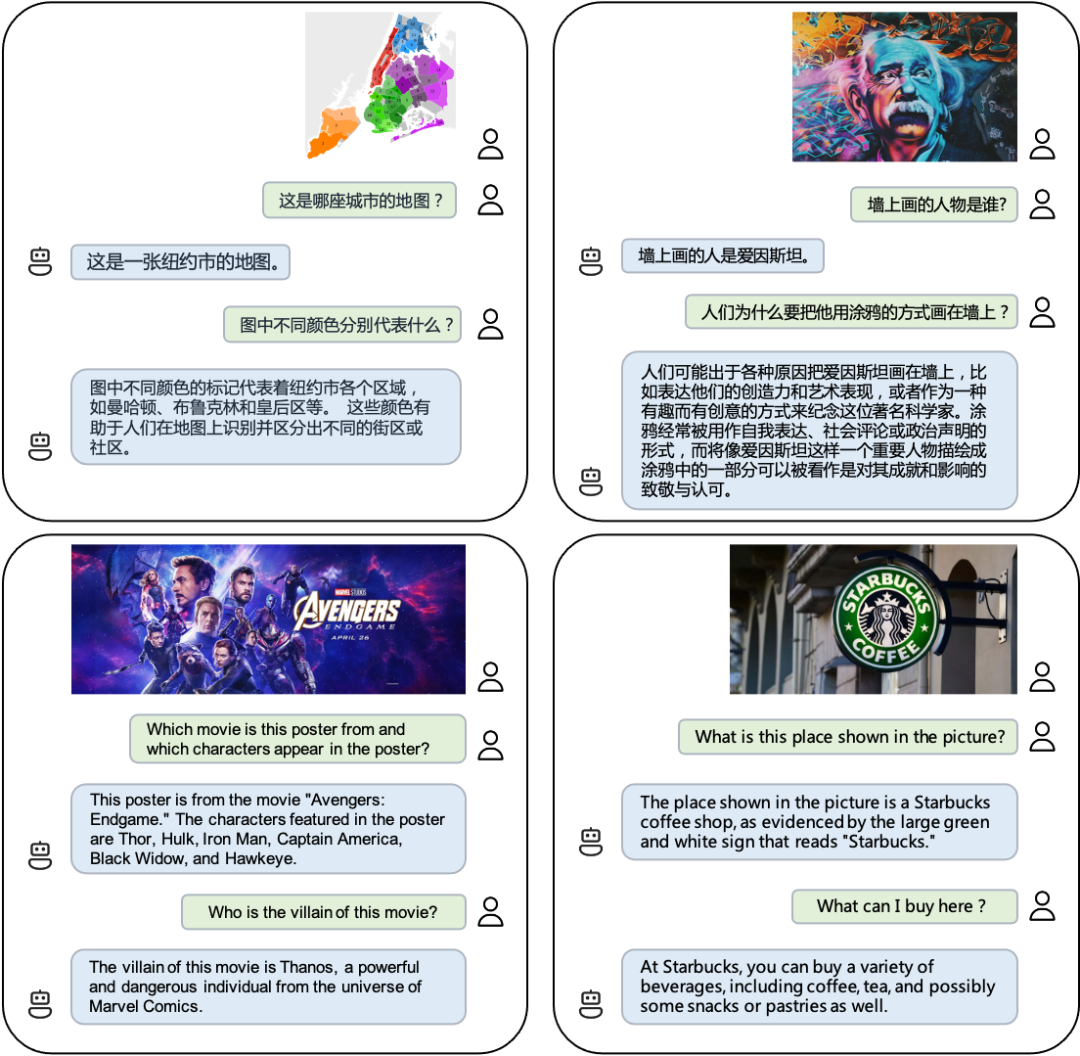

The following demonstrates VisCPM-Chat's multi-modal dialogue capabilities. It can not only identify maps of specific areas, but also understand graffiti and movie posters, and even recognize the Starbucks logo. Moreover, it is fluent in both Chinese and English!

Now let's look at VisCPM-Paint, which supports bilingual text-to-image generation in Chinese and English. The model uses CPM-Bee (10B) as the text encoder and UNet as the image decoder, and fuses the language and vision models through a diffusion model training objective.

During training, the language model parameters always remain fixed. The visual decoder is initialized with the UNet parameters of Stable Diffusion 2.1 and fused with the language model by gradually unfreezing its key bridging parameters: first the linear layer that maps text representations into the visual model is trained, and then the cross-attention layers of the UNet are unfrozen as well. The model was trained on the LAION 2B English image-text data.
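The staged unfreezing described here can be sketched as a small schedule of trainable parameter groups. This is only an illustration: the group names below are hypothetical and are not taken from the VisCPM code, and the sketch assumes that the remaining UNet layers stay frozen, which the article does not state explicitly.

```python
# Hypothetical parameter-group names; the real VisCPM code may organize them differently.
LANGUAGE_MODEL = "language_model"          # CPM-Bee, frozen throughout
TEXT_PROJECTION = "text_to_unet_linear"    # linear layer mapping text features to the UNet
CROSS_ATTENTION = "unet_cross_attention"   # UNet cross-attention layers
UNET_OTHER = "unet_other_layers"           # assumed to stay frozen in this sketch

ALL_GROUPS = (LANGUAGE_MODEL, TEXT_PROJECTION, CROSS_ATTENTION, UNET_OTHER)

# Each stage lists the groups that receive gradient updates; all others are frozen.
STAGES = (
    ("stage 1: train the linear projection", {TEXT_PROJECTION}),
    ("stage 2: also unfreeze UNet cross-attention", {TEXT_PROJECTION, CROSS_ATTENTION}),
)


def trainable_flags(stage_index):
    """Return {group_name: is_trainable} for the given stage."""
    _, trainable = STAGES[stage_index]
    return {group: group in trainable for group in ALL_GROUPS}


if __name__ == "__main__":
    for index, (label, _) in enumerate(STAGES):
        print(label, trainable_flags(index))
```

In a PyTorch training loop, flags like these would typically be applied by setting `requires_grad` on the parameters of each group before building the optimizer.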

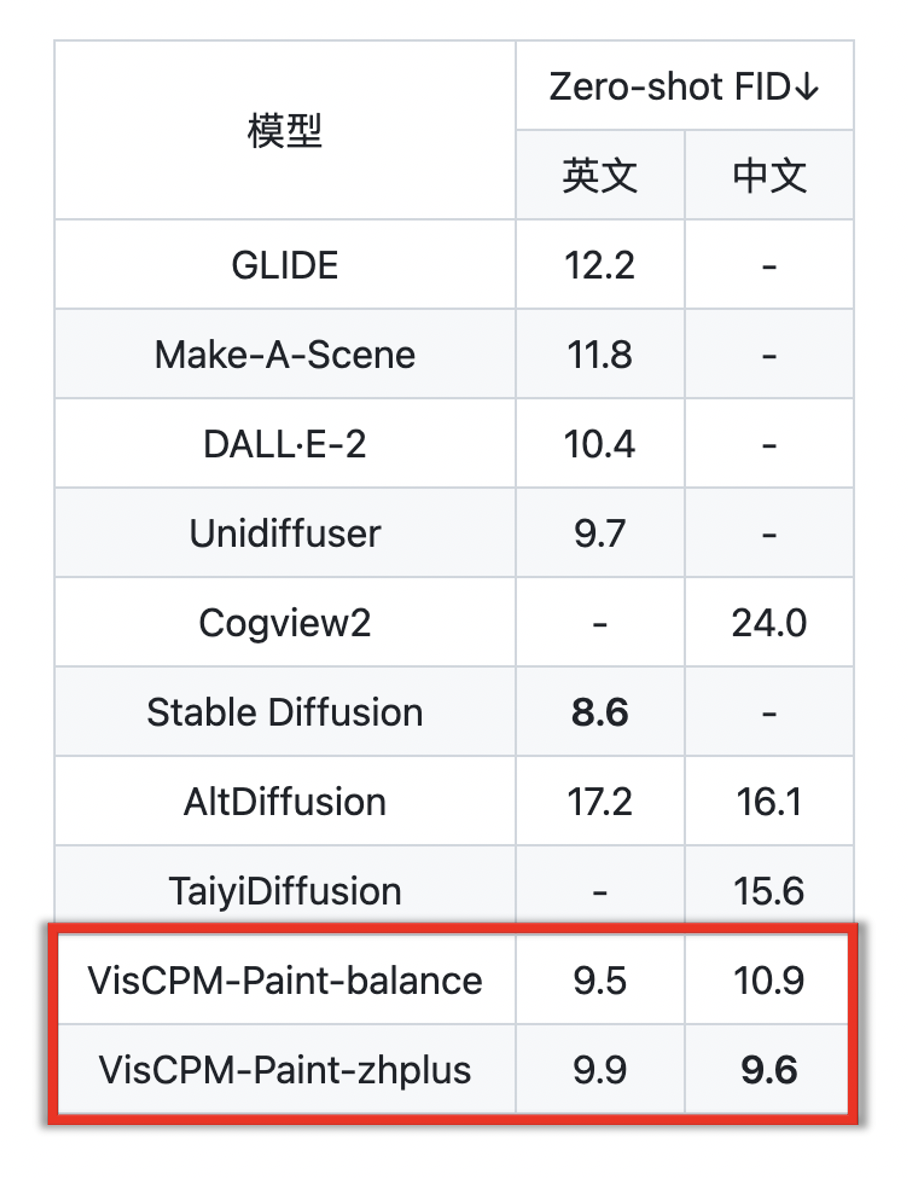

Similar to VisCPM-Chat, thanks to the bilingual capabilities of the base model CPM-Bee, VisCPM-Paint can be trained on English image-text pairs alone and still generalize well to Chinese text-to-image generation, achieving the best results among Chinese open-source models. Adding 20M cleaned native Chinese image-text pairs and 120M image-text pairs translated into Chinese further improves the model's Chinese text-to-image generation. Accordingly, VisCPM-Paint also comes in two versions: balance and zhplus. The team sampled 30,000 images on the standard image generation test set MSCOCO and computed FID (Fréchet Inception Distance), a commonly used image generation metric, to evaluate the quality of the generated images.
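For reference, FID is commonly defined as the Fréchet distance between two Gaussians fitted to Inception features of real and generated images. The symbols below are introduced here for illustration and do not appear in the original article: $\mu_r, \Sigma_r$ are the feature mean and covariance for real images, and $\mu_g, \Sigma_g$ are those for generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$

A lower FID means the generated images are statistically closer to the real ones.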

Two prompts were fed into the VisCPM-Paint model: "The bright moon rises over the sea; at this moment we share it from the ends of the earth, aesthetic style, abstract style" and "Osmanthus flowers fall when people are idle; the night is quiet and the spring mountain is empty". They generated the following two pictures:

(The stability of the generated results still has room for improvement.)

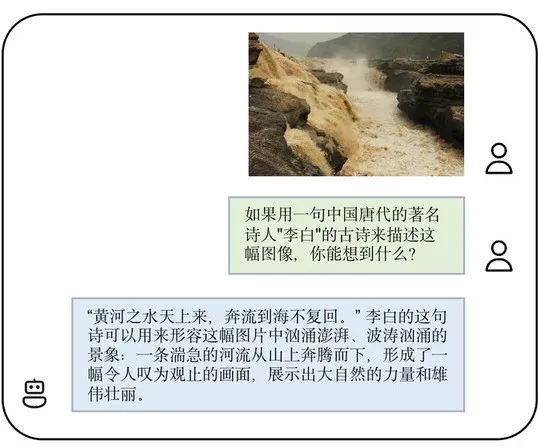

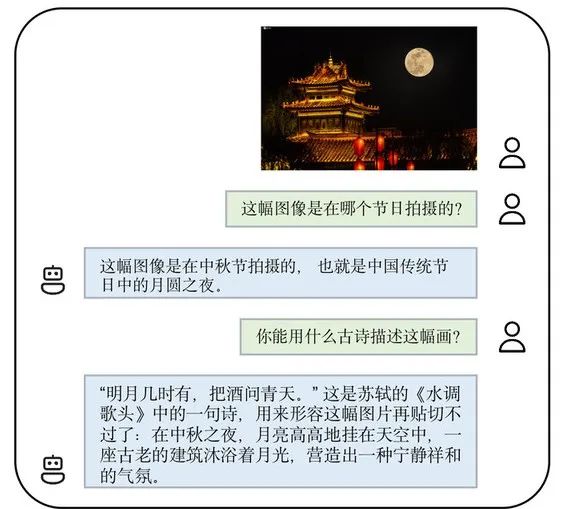

The results are quite impressive, and the model can be said to capture the artistic conception of the ancient poems accurately. If a poem is hard to understand, you can simply generate a picture to help! Applied to design work, this could save a lot of manpower. The model can not only "draw": with VisCPM-Chat it can also "recite poems", finding a poem that matches a picture. For example, it can use Li Bai's poems to describe and interpret a scene of the Yellow River, and it can use Su Shi's "Shui Tiao Ge Tou" to express the feelings of a Mid-Autumn moonlit night.

VisCPM not only generates good results; its downloadable releases are also thoughtfully designed and easy to install and use.

VisCPM provides different versions with Chinese and English capabilities


VisCPM offers model versions with different Chinese and English capabilities for everyone to download and choose from. Installation is simple, and multi-modal dialogue takes only a few lines of code. Safety checks on input text and output images are enabled by default in the code. (See the README for a detailed tutorial.) In the future, the team will also integrate VisCPM into the Hugging Face code framework and will continue to improve the safety model and add support for rapid web deployment, model quantization, model fine-tuning, and other features. Stay tuned for updates!

It is worth mentioning that the VisCPM series models are open for personal and research use. If you want to use the models commercially, you can contact cpm@modelbest.cn to discuss commercial licensing.

Traditional models focus on single-modal data, but information in the real world is often multi-modal. Multi-modal large models improve the perception and interaction capabilities of artificial intelligence systems and open up new opportunities for AI to solve complex perception and understanding tasks in the real world. ModelBest, a large-model company with roots at Tsinghua, clearly has strong research and development capabilities. The jointly released multi-modal large model VisCPM is powerful and performs impressively. We look forward to the team's future releases!

The above is the full content of "Tsinghua-affiliated ModelBest open-sources the Chinese multi-modal large model VisCPM: supports both dialogue text and image generation, with impressive poetry and painting capabilities". For more information, see other related articles on the PHP Chinese website.
