Some time ago, Meta released the Segment Anything Model (SAM), which can generate masks for any object in any image or video, prompting some computer vision (CV) researchers to exclaim that "CV no longer exists." A wave of follow-up work ensued in the CV field, combining segmentation with capabilities such as object detection and image generation, but most of that research focused on static images.

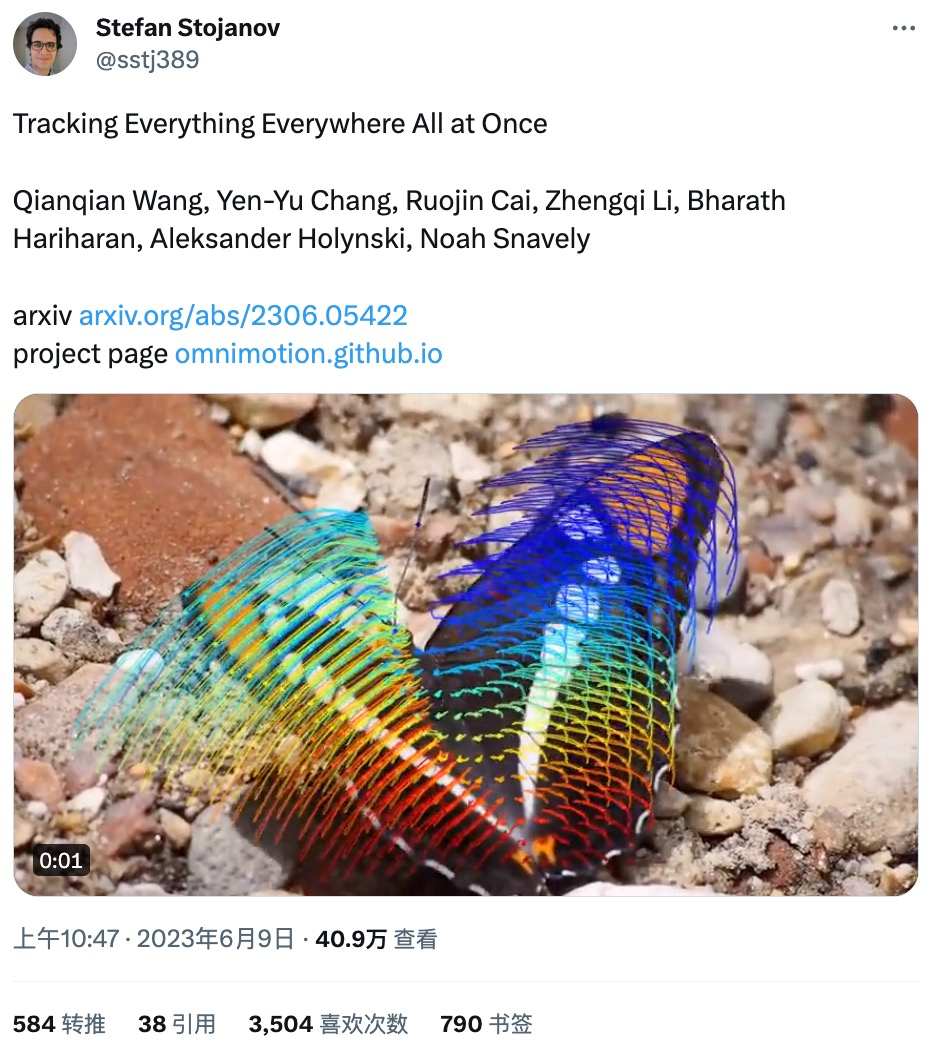

Now, a new study called "Tracking Everything" proposes a new method for motion estimation in dynamic videos that can accurately and completely track the movement of objects.

The research was carried out jointly by researchers from Cornell University, Google Research, and UC Berkeley. They proposed OmniMotion, a complete and globally consistent motion representation, together with a new test-time optimization method that estimates accurate and complete motion for every pixel in a video.

The method can follow a motion trajectory even when the object is occluded, for example when a running dog passes behind a tree:

In computer vision, two motion estimation approaches are commonly used: sparse feature tracking and dense optical flow. Each has its own shortcoming. Sparse feature tracking does not model the motion of every pixel, while dense optical flow cannot capture motion trajectories over long time spans.

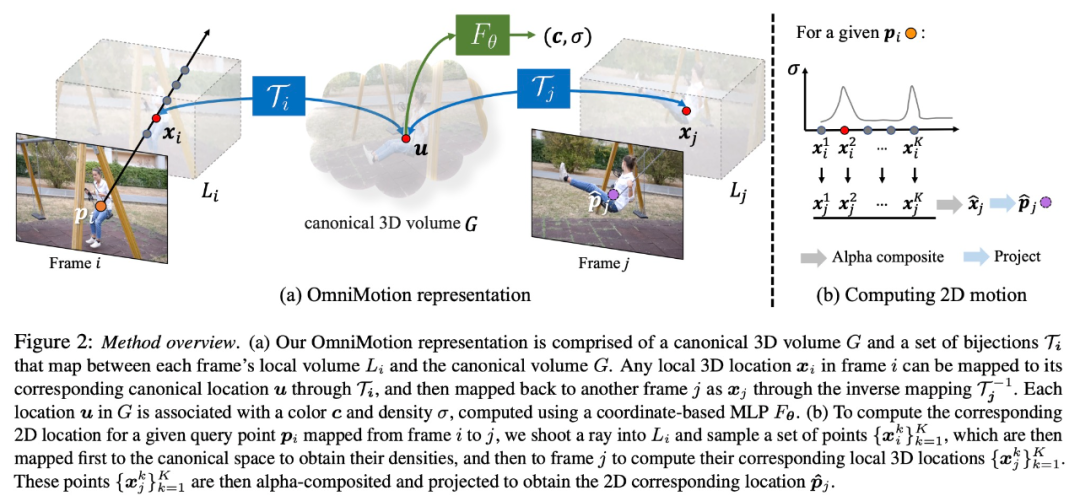

The OmniMotion proposed in this research uses a quasi-3D canonical volume to characterize the video and tracks each pixel through a bijection between local space and canonical space. This representation enables global consistency, enables motion tracking even when objects are occluded, and models any combination of camera and object motion. This study experimentally demonstrates that the proposed method significantly outperforms existing SOTA methods.

The method takes as input a collection of frames together with noisy pairwise motion estimates (e.g., optical flow fields) and uses them to build a complete, globally consistent motion representation of the entire video. Once optimized, the representation can be queried with any pixel in any frame to produce smooth, accurate motion trajectories across the whole video. Notably, the method can identify when points in a frame are occluded and can even track points through occlusions.

## OmniMotion representation

Traditional motion estimation methods, such as pairwise optical flow, lose track of objects when they are occluded. To provide accurate and consistent motion trajectories even under occlusion, this study proposes a global motion representation called OmniMotion.

This research attempts to accurately track real-world motion without explicit dynamic 3D reconstruction. OmniMotion represents the scene in the video as a canonical 3D volume, which is mapped to a local volume in each frame through a local-canonical bijection. These bijections are parameterized as neural networks and capture camera and scene motion without separating the two. Under this view, the video can be treated as a rendering of the per-frame local volumes from a fixed, static camera.
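To make the local-canonical mapping concrete, here is a minimal sketch of tracking through a shared canonical space. Everything in it is an assumption made for illustration: `ToyBijection`, its three parameters, and the hand-written coupling functions are hypothetical stand-ins for the learned neural networks and are not the authors' implementation. The only idea it tries to show is that, if each frame has an invertible map to canonical space, a point in frame i can be carried to frame j by going to canonical space and back.

```python
import math


class ToyBijection:
    """Hypothetical per-frame invertible map between local and canonical 3D space."""

    def __init__(self, a, b, c):
        # Toy parameters standing in for learned network weights (assumption).
        self.a, self.b, self.c = a, b, c

    def _scale_shift(self, x, y):
        # Scale and shift applied to z, computed from (x, y) only.
        scale = math.exp(math.tanh(self.a * x + self.b * y))  # always positive
        shift = self.c * (x - y)
        return scale, shift

    def _offset(self, z):
        # Offsets applied to (x, y), computed from the transformed z only.
        return self.a * math.sin(z), self.b * math.cos(z)

    def to_canonical(self, p):
        x, y, z = p
        scale, shift = self._scale_shift(x, y)
        z = z * scale + shift
        dx, dy = self._offset(z)
        return (x + dx, y + dy, z)

    def to_local(self, u):
        # Exact inverse of to_canonical: undo the two steps in reverse order.
        x, y, z = u
        dx, dy = self._offset(z)
        x, y = x - dx, y - dy
        scale, shift = self._scale_shift(x, y)
        return (x, y, (z - shift) / scale)


def map_between_frames(p, frame_i, frame_j):
    """Carry a 3D point from frame i's local space to frame j's via canonical space."""
    return frame_j.to_local(frame_i.to_canonical(p))


frame_i = ToyBijection(0.3, -0.2, 0.5)
frame_j = ToyBijection(-0.1, 0.4, 0.2)
p_i = (0.25, -0.5, 1.0)
p_j = map_between_frames(p_i, frame_i, frame_j)
p_back = map_between_frames(p_j, frame_j, frame_i)  # recovers p_i up to rounding
```

Because every frame maps into the same canonical space, mapping i to j and then j back to i returns the starting point, which is one way to read the "globally consistent" property described above.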

Because OmniMotion does not explicitly separate camera motion from scene motion, the resulting representation is not a physically accurate 3D scene reconstruction. The study therefore calls it a quasi-3D representation.

OmniMotion retains information about all scene points that project to each pixel, as well as their relative depth order, which allows points in a frame to be tracked even while they are temporarily occluded.
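The article does not spell out how the depth ordering is used. One plausible reading, assumed here, is the front-to-back alpha compositing familiar from volume rendering: samples along a pixel's ray are weighted so that nearer opaque samples hide farther ones, while the hidden samples remain stored in the representation. The function names and sample values below are hypothetical and only illustrate that weighting.

```python
def composite_weights(alphas):
    """Front-to-back weights: w_k = alpha_k * prod over l < k of (1 - alpha_l)."""
    weights = []
    transmittance = 1.0  # fraction of the ray not yet blocked by nearer samples
    for alpha in alphas:
        weights.append(alpha * transmittance)
        transmittance *= 1.0 - alpha
    return weights


def composite_points(points, alphas):
    """Blend per-sample 3D points using the compositing weights."""
    weights = composite_weights(alphas)
    return tuple(sum(w * p[d] for w, p in zip(weights, points)) for d in range(3))


# Samples ordered near to far along one pixel's ray (made-up values):
# empty space, a visible surface, and a second surface hidden behind it.
alphas = [0.0, 1.0, 1.0]
points = [(0.0, 0.0, 1.0), (1.0, 2.0, 2.0), (5.0, 5.0, 3.0)]

weights = composite_weights(alphas)
visible_point = composite_points(points, alphas)
```

In this toy ray, all of the weight falls on the second sample, so the farther surface contributes nothing to the rendered pixel. It is still present as a sample, which is what would let a tracker keep a position for it while it is hidden.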

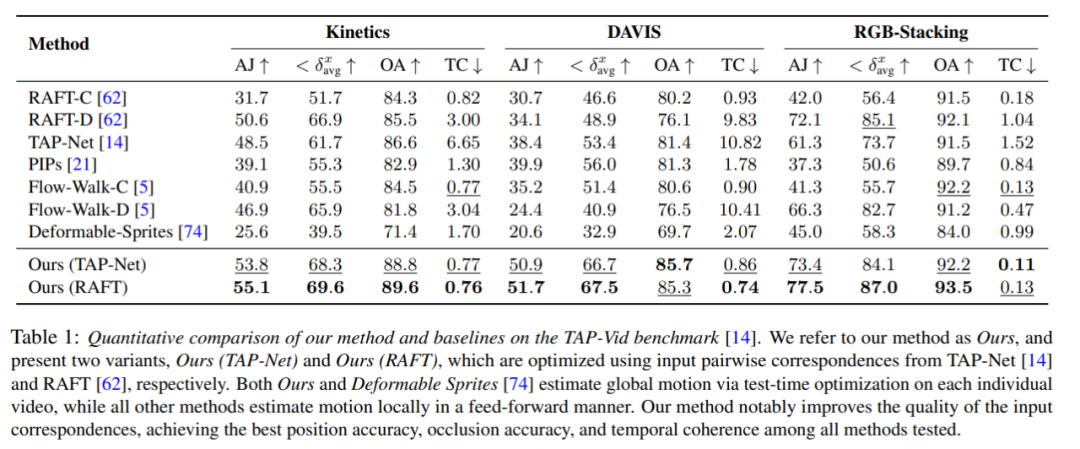

## Quantitative comparison

The researchers evaluated the proposed method against baseline methods on the TAP-Vid benchmark; the results are shown in Table 1. Across the different datasets, their method consistently achieves the best position accuracy, occlusion accuracy, and temporal coherence. It also works well with the different pairwise correspondence inputs from RAFT and TAP-Net, and improves consistently over both of these baselines.
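As a rough guide to what the first two metrics measure, here is a simplified sketch. The exact definitions used in the paper are not given in this article, so the pixel thresholds, the averaging scheme, and the function names below are assumptions, and temporal coherence is left out.

```python
import math


def position_accuracy(pred, gt, visible, thresholds=(1, 2, 4, 8, 16)):
    """Average, over pixel thresholds, of the fraction of visible points
    whose predicted position lies within that distance of the ground truth."""
    errors = [math.dist(p, g) for p, g, v in zip(pred, gt, visible) if v]
    if not errors:
        return 0.0
    per_threshold = [sum(e < t for e in errors) / len(errors) for t in thresholds]
    return sum(per_threshold) / len(thresholds)


def occlusion_accuracy(pred_visible, gt_visible):
    """Fraction of points whose visible/occluded label is predicted correctly."""
    correct = sum(p == g for p, g in zip(pred_visible, gt_visible))
    return correct / len(gt_visible)


# Made-up predictions for three query points; the third is occluded in the ground truth.
pred = [(10.0, 10.0), (20.0, 23.0), (0.0, 0.0)]
gt = [(10.0, 10.5), (20.0, 20.0), (50.0, 50.0)]
gt_visible = [True, True, False]
pred_visible = [True, True, True]

pos_acc = position_accuracy(pred, gt, gt_visible)
occ_acc = occlusion_accuracy(pred_visible, gt_visible)
```

Position error is scored only on points that are visible in the ground truth, so the occluded third point affects the occlusion score but not the position score.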

## Qualitative comparison

As shown in Figure 3, the researchers qualitatively compared their method with the baseline methods. The new method identifies and tracks points well through (long) occlusion events, provides plausible positions for points while they are occluded, and handles large camera motion parallax.

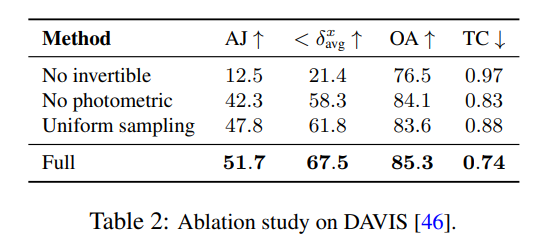

## Ablation experiments and analysis

The researchers used ablation experiments to verify the effectiveness of their design decisions; the results are shown in Table 2.

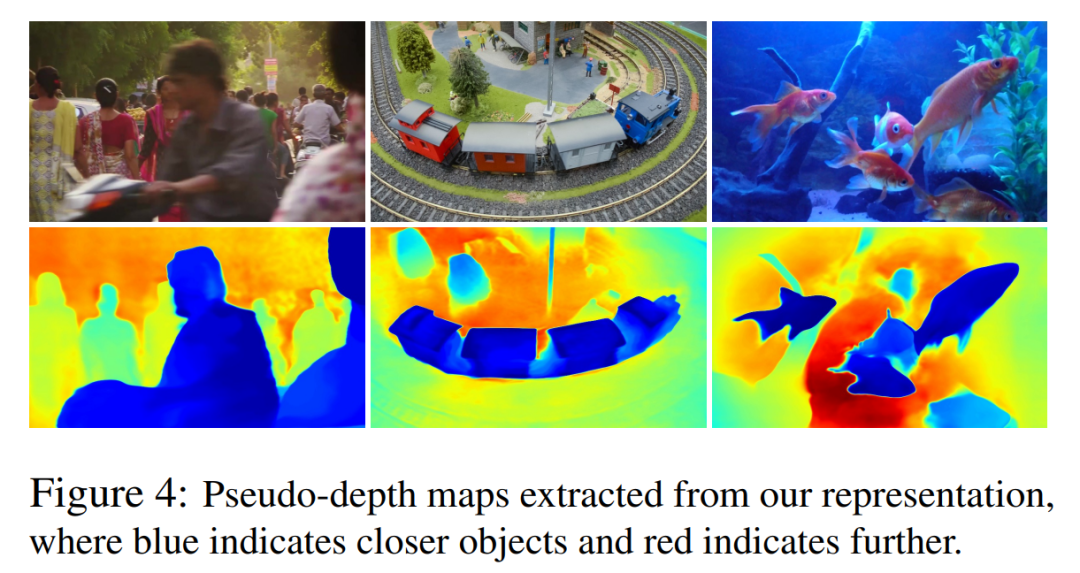

In Figure 4, they show pseudo-depth maps generated by their model to demonstrate the learned depth ordering.

Note that these maps do not correspond to physical depth. They do show, however, that the new method can determine the relative order of different surfaces using only photometric and optical flow signals, which is critical for tracking through occlusions. More ablation experiments and analysis can be found in the supplementary material.
