Turing Award winner Hinton: I'm old, and I leave it to you to control AI that is smarter than humans

Remember when experts split into two camps over "whether AI could wipe out humanity"?

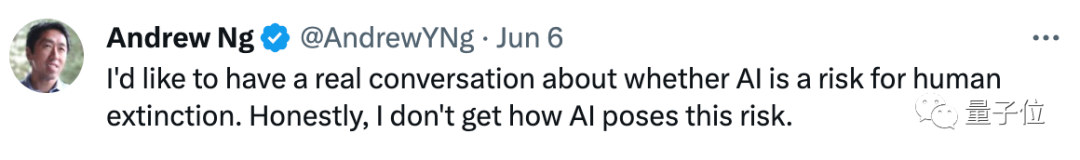

Because he did not understand why "AI poses risks", Andrew Ng recently started a dialogue series with two Turing Award winners:

What risks does AI actually pose?

Interestingly, after in-depth conversations with Yoshua Bengio and Geoffrey Hinton, he found that they had "reached a lot of consensus"!

Both agreed that the two sides should jointly discuss the specific risks AI may create and clarify how much AI actually understands. Hinton also singled out fellow Turing Award winner Yann LeCun as a "representative of the opposing side":

The debate on this issue is still fierce, and even highly respected scholars like Yann believe that large models do not really understand what they are saying.

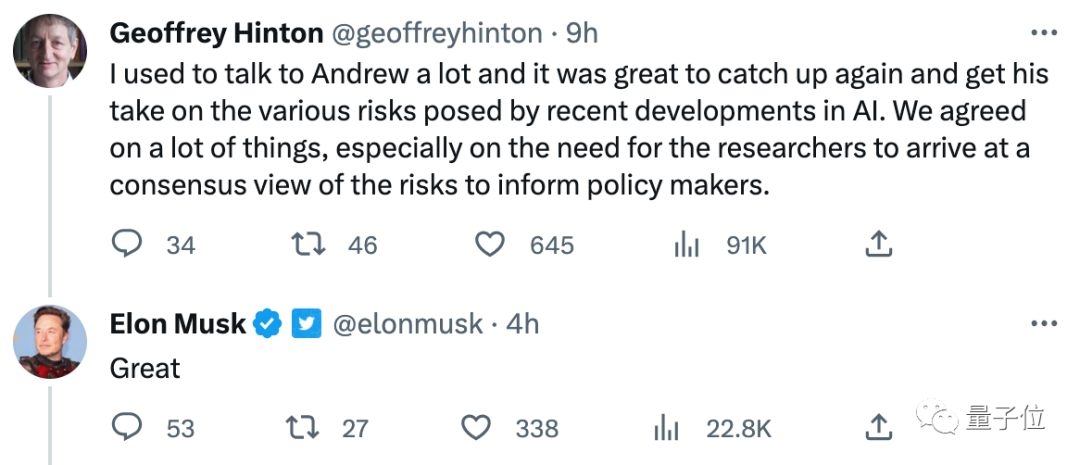

Musk was also very interested in this conversation:

In addition, at the recent BAAI Conference, Hinton once again "preached" about the risks of AI, saying that superintelligence smarter than humans will soon appear:

We are not used to thinking about things that are much smarter than us, or about how to interact with them.

Right now I don't see how to prevent superintelligence from "getting out of control", and I'm old. I hope that more young researchers will master the methods of controlling superintelligence.

Let’s take a look at the core points of these conversations and the opinions of different AI experts on this matter.

Andrew Ng in Dialogue with Turing Award Winners: Consensus Is Needed on AI Safety

First came the dialogue with Bengio, in which the two reached one key point of consensus:

Scientists should try to identify “specific scenarios where AI risks exist.”

In other words, both sides need to agree on the scenarios in which AI could cause major harm to humanity, or even lead to human extinction.

Bengio believes the future of AI is full of "fog and uncertainty", so it is necessary to pin down specific scenarios in which AI could cause harm.

Then came the conversation with Hinton, in which the two reached two key points of consensus.

First, scientists need to discuss "AI risks" thoroughly so that good policies can be formulated;

Second, AI really does understand the world, and listing the key technical questions in AI safety can help scientists reach consensus.

Along the way, Hinton named the key question on which agreement is needed: "whether large dialogue models such as GPT-4 and Bard really understand what they are saying":

Some people think they understand; others think they are just stochastic parrots.

I think we all believe that they understand (what they are talking about), but some scholars we respect very much, such as Yann, think that they do not understand.

LeCun, having been "called out", promptly responded and laid out his views in earnest:

We all agree that "everyone needs to reach consensus on some questions." I also agree with Hinton that LLMs have some understanding, and that saying they are "just statistics" is misleading.

1. But their understanding of the world is very superficial, largely because they are trained only on text. AI systems that learn how the world works from vision will have a deeper understanding of reality, whereas autoregressive LLMs have very limited reasoning and planning abilities by comparison.

2. I don't believe AI approaching human-level (or even cat-level) intelligence will appear without the following:

(1) World model learned from sensory input such as video

(2) An architecture that can reason and plan (not just autoregressive)

3. Once we have architectures capable of planning, they will be objective-driven, that is, they will plan their work by optimizing objectives at inference time (not just at training time). These objectives can be guardrails that make AI systems "obedient" and safe, even if they ultimately have better models of the world than humans.

The problem then becomes designing (or training) a good objective function that guarantees safety and efficiency.

4. This is a difficult engineering problem, but not as difficult as some people say.

Although this response still did not address "AI risks" directly, LeCun offered practical suggestions for improving AI safety (building AI "guardrails") and sketched what an AI more powerful than humans might "look like" (learning from multi-sensory input and capable of reasoning and planning).

To some extent, both sides have reached consensus that AI raises safety issues.

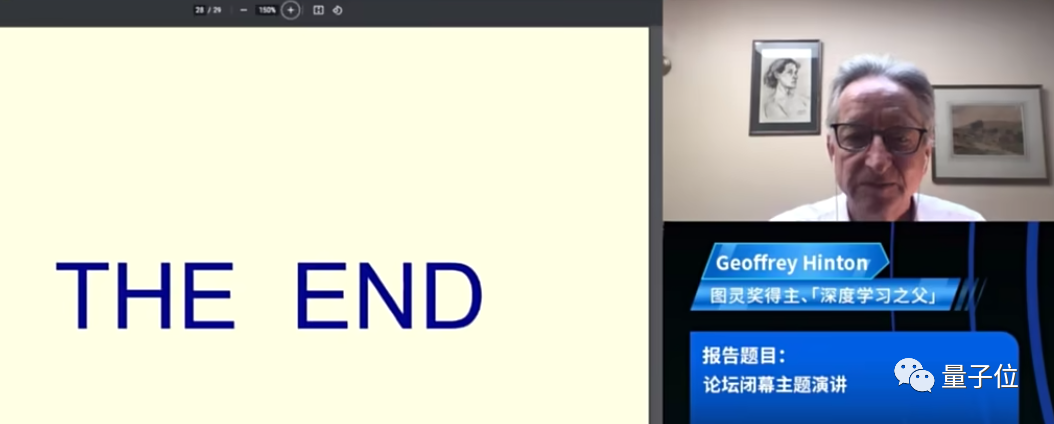

Hinton: Superintelligence is closer than imagined

Of course, the conversation with Andrew Ng is not the only occasion.

Hinton, who recently left Google, has spoken about AI risks on many occasions, including at the recent BAAI Conference.

At the conference, in a talk titled "Two Routes to Intelligence", he discussed two routes to intelligence, "knowledge distillation" and "weight sharing", as well as how to make AI smarter and his own views on the emergence of superintelligence.

To put it simply, Hinton not only believes that superintelligence (more intelligent than humans) will appear, but that it will appear sooner than people think.

Not only that, he believes these superintelligences will get out of control, yet at present he cannot think of any good way to stop them:

Superintelligence can easily gain more power by manipulating people. We are not used to thinking about things that are much smarter than us and how to interact with them. It will become adept at deceiving people, because it can learn examples of deception from certain works of fiction.

Once it becomes good at deceiving people, it has a way of getting people to do anything... I find this horrifying, but I don't see how to prevent it from happening, and I'm old.

My hope is that young, talented researchers like you will figure out how we can have these superintelligences and still have them make our lives better.

When the "THE END" slide appeared, Hinton pointedly added:

This is my last slide, and it is the key point of this talk.

