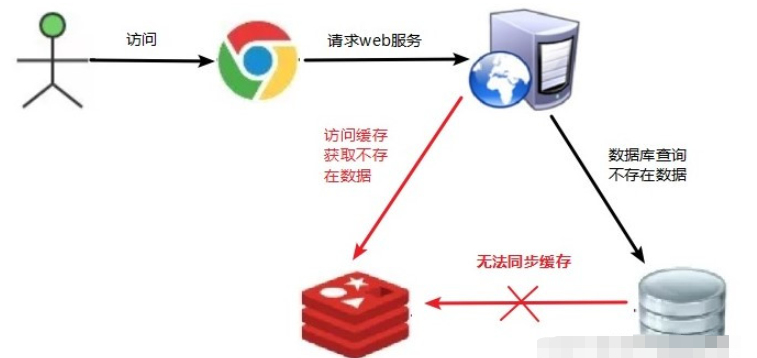

Cache penetration happens when the client/browser requests a key that does not exist: the key is not in Redis, and there is no corresponding record in the database either. Because such a request can never be served from the cache, every request for this key goes through to the data source.

For example, if someone requests user information with a user ID that does not exist, that ID is found neither in Redis nor in the database. A large number of such requests may overwhelm the data source.

In other words, the requested data can never be in the cache and can never be found in the database. The cache is only written passively, after a miss, and for fault-tolerance reasons data that cannot be found is not cached in Redis. As a result, every request for non-existent data goes to the database, which defeats the purpose of caching. It can be addressed in the following ways:

(1) Cache empty results: if a query returns no data (for whatever reason), still cache the empty result (null), but give it a very short expiration time, no longer than five minutes.
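A minimal Python sketch of caching empty results. It assumes an in-memory `TTLCache` class as a stand-in for Redis (with a real client this corresponds to `GET` and `SET key value EX seconds`); `get_user`, `NULL_MARKER`, and the TTL values are hypothetical names and numbers chosen for illustration.

```python
import time

NULL_MARKER = "__NULL__"   # hypothetical sentinel meaning "the database has no such row"
NORMAL_TTL = 3600          # assumed TTL for real data, in seconds
NULL_TTL = 60              # short TTL for empty results (the text caps it at 5 minutes)


class TTLCache:
    """In-memory stand-in for Redis GET and SET key value EX seconds."""

    def __init__(self, clock=time.monotonic):
        self._data = {}
        self._clock = clock

    def set(self, key, value, ttl):
        self._data[key] = (value, self._clock() + ttl)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]            # expired: behave as if Redis evicted it
            return None
        return value


def get_user(user_id, cache, load_from_db):
    key = f"user:{user_id}"
    cached = cache.get(key)
    if cached == NULL_MARKER:
        return None                        # known to be missing: skip the database
    if cached is not None:
        return cached
    row = load_from_db(user_id)            # genuine miss: ask the data source once
    if row is None:
        cache.set(key, NULL_MARKER, NULL_TTL)
    else:
        cache.set(key, row, NORMAL_TTL)
    return row
```

Repeated lookups of a missing ID hit the database only once per `NULL_TTL` window. The short TTL matters because the row may be created later; a long-lived null entry would hide it.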

(2) Set up an accessible list (whitelist): use the Redis bitmaps type to define which IDs may be accessed, using each ID as an offset into the bitmap. On every request, check the requested ID against the bitmap; if its bit is not set, intercept the request and deny access.
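In Redis this maps onto the `SETBIT key offset value` and `GETBIT key offset` commands. The Python sketch below emulates one bitmap key with a `bytearray` so it runs without a server; it assumes user IDs are non-negative integers, and `Bitmap` and `is_allowed` are hypothetical names.

```python
class Bitmap:
    """In-memory stand-in for one Redis bitmap key (SETBIT / GETBIT semantics)."""

    def __init__(self):
        self._bytes = bytearray()

    def setbit(self, offset, value):
        byte_index, bit_index = divmod(offset, 8)
        if byte_index >= len(self._bytes):
            # Grow with zero bytes, as Redis does when SETBIT writes past the end.
            self._bytes.extend(bytes(byte_index + 1 - len(self._bytes)))
        mask = 0x80 >> bit_index            # bit 0 is the most significant bit, as in Redis
        if value:
            self._bytes[byte_index] |= mask
        else:
            self._bytes[byte_index] &= ~mask & 0xFF

    def getbit(self, offset):
        byte_index, bit_index = divmod(offset, 8)
        if byte_index >= len(self._bytes):
            return 0                        # bits beyond the end read as 0
        return 1 if self._bytes[byte_index] & (0x80 >> bit_index) else 0


def is_allowed(whitelist, user_id):
    """Reject the request before touching cache or database if the ID's bit is unset."""
    return whitelist.getbit(user_id) == 1
```

One bit per ID keeps the list compact: covering IDs up to 100 million takes about 12.5 MB.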

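(3) Use a Bloom filter: load all valid keys into a Bloom filter and check each request against it. A Bloom filter can say with certainty that a key is absent (it may occasionally report an absent key as present), so requests for keys it rules out never reach Redis or the database.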
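A pure-Python sketch of the idea, assuming the filter lives in the application process; in a Redis deployment the same role can be played by the RedisBloom module. The sizing formulas are the standard ones for a target false-positive rate, and positions are derived by double hashing over one SHA-256 digest. `BloomFilter` and its parameters are illustrative, not a specific library's API.

```python
import hashlib
import math


class BloomFilter:
    """Minimal Bloom filter: may report false positives, never false negatives."""

    def __init__(self, expected_items, false_positive_rate=0.01):
        # Standard sizing: m = -n ln p / (ln 2)^2 bits, k = (m / n) ln 2 hash functions.
        n = max(1, expected_items)
        self.size = max(8, int(-n * math.log(false_positive_rate) / (math.log(2) ** 2)))
        self.num_hashes = max(1, round(self.size / n * math.log(2)))
        self.bits = bytearray((self.size + 7) // 8)

    def _positions(self, item):
        # Double hashing: derive k bit positions from two 64-bit slices of one digest.
        digest = hashlib.sha256(str(item).encode()).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        return [(h1 + i * h2) % self.size for i in range(self.num_hashes)]

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))
```

At startup every existing key is added; a request whose key fails `might_contain` can be answered as "not found" immediately. Unlike the bitmap whitelist, this works for string keys of any shape, at the cost of a small false-positive rate.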

(4) Monitor in real time: when the Redis hit rate drops sharply, examine which clients are making the requests and what data they ask for, and put the offending clients on a blacklist.
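One way this monitoring could look, as a hypothetical Python policy: keep a sliding window of recent lookups, and when the window's hit rate falls below a threshold, blacklist clients with many misses in that window. The window size and thresholds are assumed values, and `HitRateMonitor` is an illustrative name, not an existing tool.

```python
from collections import Counter, deque


class HitRateMonitor:
    """Hypothetical monitor: tracks recent cache lookups and blacklists heavy missers."""

    def __init__(self, window=1000, min_hit_rate=0.5, miss_threshold=100):
        self.events = deque(maxlen=window)   # (client_id, was_hit) for the last `window` lookups
        self.min_hit_rate = min_hit_rate
        self.miss_threshold = miss_threshold
        self.blacklist = set()

    def record(self, client_id, was_hit):
        self.events.append((client_id, was_hit))
        # Only judge once the window is full, so a few early misses do not trigger it.
        if len(self.events) == self.events.maxlen and self.hit_rate() < self.min_hit_rate:
            self._blacklist_offenders()

    def hit_rate(self):
        if not self.events:
            return 1.0
        return sum(1 for _, hit in self.events if hit) / len(self.events)

    def _blacklist_offenders(self):
        misses = Counter(client for client, hit in self.events if not hit)
        for client, count in misses.items():
            if count >= self.miss_threshold:
                self.blacklist.add(client)

    def is_blocked(self, client_id):
        return client_id in self.blacklist
```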

Cache breakdown occurs when a user requests a key whose data does exist, but the key in Redis has just expired. If a large number of concurrent requests find the cache entry expired at the same moment, they all go to the data source to load the data and write it back to Redis, and this burst of concurrency may overwhelm the database.

A key that receives a very large number of requests is hot data, and such keys need protection against this "breakdown" problem. Common countermeasures:

(1) Preload popular data: before Redis reaches peak traffic, store popular data in Redis in advance and give these keys longer expiration times.

(2) Adjust in real time: monitor which data is currently popular and adjust the expiration times of those keys on the fly.
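Both ideas can be sketched together in Python, assuming an in-memory `ExpiringStore` that mimics Redis `SET ... EX` and `EXPIRE`. `warm_up` preloads a known list of popular keys; `HotKeyAdjuster` counts accesses per interval and stretches the TTL of keys that cross a threshold. All names, thresholds, and TTLs are hypothetical.

```python
import time
from collections import Counter


class ExpiringStore:
    """In-memory stand-in for Redis keys with SET ... EX and EXPIRE."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.data = {}                          # key -> (value, expires_at)

    def _alive(self, key):
        entry = self.data.get(key)
        if entry is not None and self.clock() >= entry[1]:
            del self.data[key]
            entry = None
        return entry

    def set(self, key, value, ttl):
        self.data[key] = (value, self.clock() + ttl)

    def get(self, key):
        entry = self._alive(key)
        return None if entry is None else entry[0]

    def expire(self, key, ttl):
        entry = self._alive(key)
        if entry is None:
            return False                        # like EXPIRE on a missing key
        self.data[key] = (entry[0], self.clock() + ttl)
        return True


def warm_up(store, popular_keys, load_db, ttl):
    """(1) Before the peak: preload known-popular keys with a long TTL."""
    for key in popular_keys:
        store.set(key, load_db(key), ttl)


class HotKeyAdjuster:
    """(2) Count accesses per interval and stretch the TTL of keys that turn hot."""

    def __init__(self, store, hot_threshold=100, hot_ttl=3600):
        self.store, self.hot_threshold, self.hot_ttl = store, hot_threshold, hot_ttl
        self.counts = Counter()

    def on_access(self, key):
        self.counts[key] += 1

    def adjust(self):
        """Call periodically (e.g. once a minute); returns the keys it extended."""
        hot = [k for k, n in self.counts.items() if n >= self.hot_threshold]
        extended = [k for k in hot if self.store.expire(k, self.hot_ttl)]
        self.counts.clear()                     # start a fresh counting interval
        return extended
```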

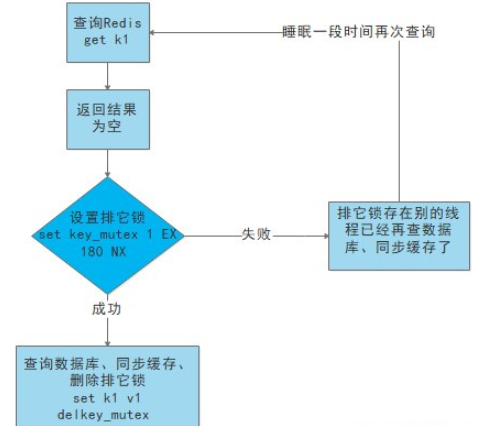

(3) Use a lock:

When the cache has expired (the value read back is empty), do not load from the database immediately.

Instead, first use a cache operation that reports whether it succeeded (such as Redis's SETNX) to set a mutex key.

If the operation succeeds, load the data from the database, write it back to the cache, and finally delete the mutex key.

If the operation fails, another thread is already loading from the database, so the current thread sleeps for a short while and then retries the whole get-from-cache method.
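A Python sketch of this mutex flow. To stay runnable without a server, it uses a thread-safe `FakeRedis` class (hypothetical) standing in for Redis. Plain `SETNX` sets no expiry, so if the lock holder crashed the lock would stay forever; the sketch therefore models `SET key value NX EX seconds` (in redis-py, `r.set(name, value, nx=True, ex=...)`). Releasing only a lock you still own is done here with a compare-and-delete, which in real Redis is usually a small Lua script. It also assumes `load_db` never returns `None`; missing rows are the penetration case above.

```python
import threading
import time
import uuid


class FakeRedis:
    """Thread-safe in-memory stand-in for the few Redis commands used here."""

    def __init__(self):
        self._data = {}                   # key -> (value, expires_at or None)
        self._lock = threading.Lock()     # makes each "command" atomic, as in Redis

    def _alive(self, key, now):
        entry = self._data.get(key)
        if entry is not None and entry[1] is not None and now >= entry[1]:
            del self._data[key]
            return None
        return entry

    def get(self, key):
        with self._lock:
            entry = self._alive(key, time.monotonic())
            return None if entry is None else entry[0]

    def set(self, key, value, ex=None, nx=False):
        """SET key value [EX seconds] [NX]; returns False if NX and the key exists."""
        with self._lock:
            now = time.monotonic()
            if nx and self._alive(key, now) is not None:
                return False
            self._data[key] = (value, None if ex is None else now + ex)
            return True

    def delete_if_equals(self, key, value):
        """Atomic compare-and-delete, so a thread only releases its own lock."""
        with self._lock:
            entry = self._alive(key, time.monotonic())
            if entry is not None and entry[0] == value:
                del self._data[key]
                return True
            return False


def get_with_mutex(r, key, load_db, ttl=300, lock_ttl=10, retry_delay=0.05):
    while True:
        value = r.get(key)
        if value is not None:
            return value                                   # cache hit
        token = str(uuid.uuid4())                          # identifies this lock holder
        if r.set(f"mutex:{key}", token, ex=lock_ttl, nx=True):
            try:
                value = r.get(key)                         # re-check: another holder may have refilled it
                if value is None:
                    value = load_db(key)                   # only the lock holder hits the database
                    r.set(key, value, ex=ttl)
                return value
            finally:
                r.delete_if_equals(f"mutex:{key}", token)  # release only our own lock
        time.sleep(retry_delay)                            # someone else is loading: back off, retry
```

The re-check after acquiring the lock matters: a thread can miss the cache just before the previous holder writes it back, then win the lock afterwards; without the re-check it would query the database a second time.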

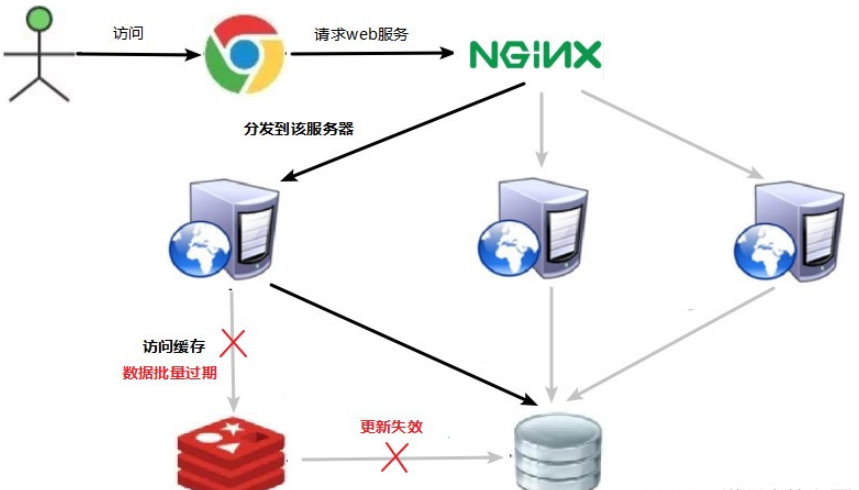

Cache avalanche: the corresponding data exists, but the keys have expired (Redis automatically deletes a key once it expires). If a large number of concurrent requests then access many different keys that are all in their expired state at the same time, every one of those requests goes to the database, and the flood can overwhelm the database server. This situation is called a cache avalanche. The difference from cache breakdown is that breakdown concerns a single key, while an avalanche involves many keys expiring together.

When the cache fails this way, the avalanche effect can have a severe impact on the underlying systems. Ways to prevent it:

(1) Build a multi-level cache architecture:

nginx cache + Redis cache + other caches (Ehcache, etc.)
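A simplified Python sketch of a tiered lookup, assuming each tier is an in-memory `Level` with its own TTL (standing in for a local cache such as Ehcache and a shared Redis); the nginx layer is not modeled. Lookups go from the closest tier outward, faster tiers are back-filled on a hit, and only a miss in every tier reaches the database. `Level` and `MultiLevelCache` are hypothetical names.

```python
import time


class Level:
    """One cache tier with a fixed TTL (an in-process dict standing in for Ehcache/Redis)."""

    def __init__(self, name, ttl, clock=time.monotonic):
        self.name, self.ttl, self.clock = name, ttl, clock
        self.data = {}

    def get(self, key):
        entry = self.data.get(key)
        if entry is None or self.clock() >= entry[1]:
            self.data.pop(key, None)        # drop the expired entry, if any
            return None
        return entry[0]

    def set(self, key, value):
        self.data[key] = (value, self.clock() + self.ttl)


class MultiLevelCache:
    def __init__(self, levels, load_db):
        self.levels = levels                # ordered closest/fastest first
        self.load_db = load_db

    def get(self, key):
        for i, level in enumerate(self.levels):
            value = level.get(key)
            if value is not None:
                for upper in self.levels[:i]:
                    upper.set(key, value)   # back-fill the faster tiers that missed
                return value
        value = self.load_db(key)           # every tier missed: fall through to the database
        if value is not None:
            for level in self.levels:
                level.set(key, value)
        return value
```

Because the tiers use different TTLs, one tier expiring does not send traffic straight to the database; the next tier absorbs it.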

(2) Use locks or queues:

Use locks or queues so that a large number of threads cannot read from and write to the database all at once, which keeps a flood of concurrent requests from landing on the underlying storage system when the cache fails. Because it serializes access and limits throughput, this approach is not suitable for high-concurrency scenarios.
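A minimal Python sketch of the lock variant, assuming a single process: a bounded semaphore caps how many threads may query the database at once. A queue drained by a fixed pool of worker threads would have the same effect. `BoundedLoader` and the limit of 2 are illustrative.

```python
import threading


class BoundedLoader:
    """Lets at most `max_concurrent` threads query the database at the same time."""

    def __init__(self, load_db, max_concurrent=2):
        self.load_db = load_db
        self.gate = threading.BoundedSemaphore(max_concurrent)

    def load(self, key):
        # Threads beyond the limit block here, so after a mass expiry the
        # database sees a steady trickle of queries instead of one huge burst.
        with self.gate:
            return self.load_db(key)
```

The blocked threads are exactly the throughput cost the text warns about: under very high concurrency, requests pile up waiting at the gate.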

(3) Use an expiration flag to refresh the cache:

Record whether the cached data has expired, with the flag set to trip some time before the real expiry (the advance amount). When the flag shows the data has expired, notify another thread to refresh the cache of the actual key in the background.
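A Python sketch of this "logical expiry" pattern, assuming an in-process cache (`SoftExpiryCache`, a hypothetical name). Each entry stores a soft deadline placed `advance` seconds before its real TTL; the first reader past that deadline starts one background refresh, while every reader keeps getting the current value without waiting. Hard eviction at the real TTL is omitted for brevity.

```python
import threading
import time


class SoftExpiryCache:
    """Serves the cached value immediately and refreshes it in the background."""

    def __init__(self, load_db, ttl=300, advance=60, clock=time.monotonic):
        self.load_db, self.ttl, self.advance, self.clock = load_db, ttl, advance, clock
        self.data = {}            # key -> (value, soft_expires_at)
        self.refreshing = set()   # keys whose background refresh is in flight
        self.lock = threading.Lock()

    def _soft_deadline(self):
        # The flag trips `advance` seconds before the real TTL would end.
        return self.clock() + self.ttl - self.advance

    def _refresh(self, key):
        try:
            value = self.load_db(key)
        except Exception:
            with self.lock:
                self.refreshing.discard(key)   # keep serving the old value; a later read retries
            return
        with self.lock:
            self.data[key] = (value, self._soft_deadline())
            self.refreshing.discard(key)       # update and unflag atomically

    def get(self, key):
        start_refresh = False
        with self.lock:
            entry = self.data.get(key)
            if entry is not None and self.clock() >= entry[1] and key not in self.refreshing:
                self.refreshing.add(key)       # only the first reader triggers a refresh
                start_refresh = True
        if entry is None:
            value = self.load_db(key)          # cold start: nothing to serve yet, load synchronously
            with self.lock:
                self.data[key] = (value, self._soft_deadline())
            return value
        if start_refresh:
            threading.Thread(target=self._refresh, args=(key,), daemon=True).start()
        return entry[0]                        # readers never wait for the database
```

Checking the flag and the in-flight set under one lock, and updating both in one step when the refresh lands, ensures that only one refresh runs per expiry.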

(4) Spread the cache expiration time:

For example, add a random value, such as 1 to 5 minutes, to the original expiration time. This lowers the chance that many cache entries share the same expiration time, making a collective expiration much less likely.
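A small Python helper for this, assuming a base TTL of 30 minutes (an illustrative value) and the 1 to 5 minute range from the text. With redis-py the result would be passed as the `ex` argument, e.g. `r.set(key, value, ex=jittered_ttl())`, where `r` is an assumed client instance.

```python
import random

BASE_TTL = 30 * 60   # assumed base expiration: 30 minutes, in seconds


def jittered_ttl(base=BASE_TTL, min_extra=60, max_extra=300, rng=random):
    """Base TTL plus a random 1-5 minutes, so keys written together expire apart."""
    return base + rng.randint(min_extra, max_extra)
```

Keys loaded in the same batch now expire spread over a four-minute window instead of at a single instant. Passing a seeded `random.Random` as `rng` makes the behavior reproducible in tests.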

The above is the detailed content of How to solve common problems of caching data based on Redis. For more information, please follow other related articles on the PHP Chinese website!
