Are you worried that artificial intelligence is developing too fast and may have negative consequences? Do you wish there were a national law regulating it? Today, there are no new laws restricting the use of AI, and self-regulation is often the best option for companies adopting AI, at least for now.

Although "artificial intelligence" replaced "big data" as the hottest buzzword in the technology world several years ago, the launch of ChatGPT in late November 2022 started an AI gold rush that surprised many AI observers, including us. In just a few months, a slew of powerful generative AI models have captured the world’s attention, thanks to their remarkable ability to mimic human language and understanding.

The extraordinary rise of generative models in mainstream culture, fueled by the emergence of ChatGPT, raises many questions about where this is all headed. Astonishment at AI's power to produce compelling poetry and whimsical art is giving way to concerns about its negative consequences, ranging from consumer harm and job losses to false imprisonment and even the destruction of humanity.

This has some people very worried. Last month, a coalition of AI researchers sought a six-month moratorium on the development of new generative models larger than GPT-4, the massive large language model (LLM) that OpenAI launched last month (further reading: "Open letter urges moratorium on AI research").

An open letter signed by Turing Award winner Yoshua Bengio, OpenAI co-founder Elon Musk, and others stated: "Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources. Unfortunately, this level of planning and management is not happening." Calls for regulation are growing. Polls show that Americans don’t think artificial intelligence can be trusted and want it regulated, especially for high-impact uses such as self-driving cars and access to government benefits.

Yet despite several new local laws targeting AI (such as one in New York City that focuses on the use of AI in hiring, whose enforcement was delayed until this month), Congress has no new federal regulation specifically targeting AI that is approaching the finish line, although AI already falls under existing law in highly regulated industries like financial services and health care.

With all the excitement around artificial intelligence, what should a company do? It’s no surprise that companies want to share in the benefits of AI. After all, the urge to become “data-driven” is seen as a necessity for survival in the digital age. However, companies also want to avoid the negative consequences, real or perceived, that can result from inappropriate use of AI.

It is the Wild West for artificial intelligence. Andrew Burt, founder of artificial intelligence law firm BNH.AI, once said, "No one knows how to manage risk. Everyone does it differently." That said, companies can use several frameworks to help manage AI risk.

Burt recommends the Artificial Intelligence Risk Management Framework (RMF), which comes from the National Institute of Standards and Technology (NIST) and was finalized earlier this year. The RMF helps companies think through how their artificial intelligence works and the negative consequences it may have. It uses a “map, measure, manage and govern” approach to understand and ultimately mitigate the risks of using artificial intelligence across a variety of service offerings.

Another AI risk management framework comes from Cathy O'Neil, CEO of O'Neil Risk Advisory & Algorithmic Auditing (ORCAA). ORCAA proposed a framework called "Explainable Fairness". Explainable fairness gives organizations a way to not only test their algorithms for bias, but also study what happens when differences in outcomes are detected. For example, if a bank is determining eligibility for a student loan, what factors can legally be used to approve or deny the loan or charge higher or lower interest?

Clearly, banks must use data to answer these questions. But what data can they use, that is, which factors about a loan applicant? Which factors should be legally allowed and which should not? Answering these questions is neither easy nor simple, O'Neil said.

"That's what this framework is all about, is that these legitimate factors have to be legitimized," O'Neil said during a discussion at the Nvidia GPU Technology Conference (GTC) last month.
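To make the idea of detecting and then studying differences in outcomes more concrete, here is a minimal Python sketch. It is not ORCAA's method; it is one simple way to compare approval rates across groups and then recompute them within each level of a candidate factor. The field names (`group`, `credit_band`, `approved`) and the data are hypothetical and made up purely for illustration.

```python
from collections import defaultdict


def approval_rates(applications, group_key):
    """Return the share of approved applications for each value of group_key."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for app in applications:
        group = app[group_key]
        total[group] += 1
        if app["approved"]:
            approved[group] += 1
    return {group: approved[group] / total[group] for group in total}


def rates_within_strata(applications, group_key, factor_key):
    """Compute per-group approval rates separately for each value of factor_key."""
    strata = defaultdict(list)
    for app in applications:
        strata[app[factor_key]].append(app)
    return {value: approval_rates(apps, group_key) for value, apps in strata.items()}


# Hypothetical loan decisions; every value here is invented for illustration.
applications = [
    {"group": "A", "credit_band": "high", "approved": True},
    {"group": "A", "credit_band": "high", "approved": True},
    {"group": "A", "credit_band": "high", "approved": True},
    {"group": "A", "credit_band": "low", "approved": False},
    {"group": "B", "credit_band": "high", "approved": True},
    {"group": "B", "credit_band": "low", "approved": False},
    {"group": "B", "credit_band": "low", "approved": False},
    {"group": "B", "credit_band": "low", "approved": False},
]

# Step 1: is there a difference in outcomes between groups?
overall = approval_rates(applications, "group")

# Step 2: does the difference persist once a candidate factor is held fixed?
by_band = rates_within_strata(applications, "group", "credit_band")

print(overall)
print(by_band)
```

In this invented data set, group A is approved far more often than group B overall, but the gap disappears once applicants are compared within the same credit band. A check like this only shows that a factor accounts for the difference; whether that factor may legitimately be used is the legal and ethical question the framework asks organizations to answer.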

Even without new AI laws, companies should start asking themselves how to implement AI fairly and ethically so that it complies with existing laws, said Triveni Gandhi, responsible AI lead at Dataiku, a provider of data analytics and AI software.

“People have to start thinking, okay, how do we take existing laws and apply them to the AI use cases that exist today?” she said. “There are some regulations, but there are also a lot of people thinking about the ethical and value-oriented ways in which we want to build artificial intelligence. These are actually the questions companies are starting to ask themselves, even if there are no overarching regulations.”

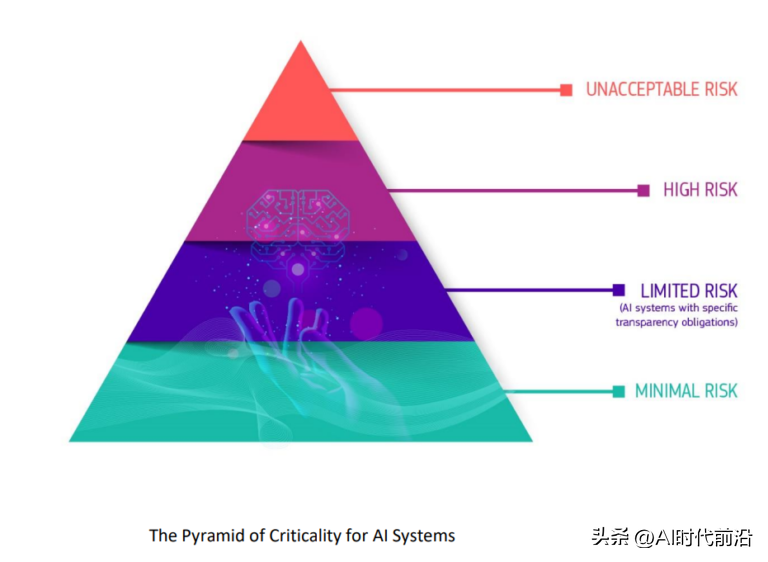

The EU classifies the potential harms of artificial intelligence in a "criticality pyramid"

The EU is already moving forward with its own regulation, the Artificial Intelligence Act, which could take effect later this year.

The Artificial Intelligence Act will create a common regulatory and legal framework for the use of artificial intelligence that affects EU residents, including how it is developed, the purposes for which companies can use it, and the legal consequences of failing to comply with the requirements. The law could require companies to get approval before adopting AI in certain use cases and ban certain other uses of AI deemed too risky.
